本文通过在hdfs中三种不同数据格式文件存储相同数量的数据,通过hive和impala两种客户端查询进行比较。

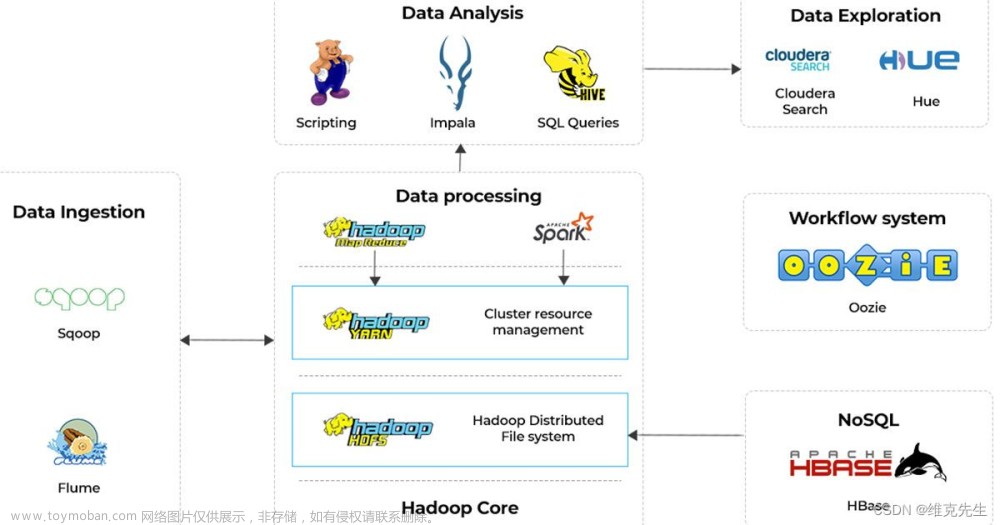

本文前提:熟悉hadoop、hive和impala、kafka、flink等,并且其环境都可正常使用。(在后续的专栏中都会将对应的内容补全,目前已经完成了zookeeper和hadoop的部分。)

本文分为五个部分,即结论、三种文件介绍、需求、实现步骤、实现和网上别人的结论与验证。

由于本文太长,导致阅读可能比较麻烦,故一篇文章分为两篇,第一篇是准备数据,第二篇是查询比较。本文是第二篇。

本文接1、通过亿级数据量在hive和impala中查询比较text、orc和parquet性能表现(一),阅读本文前需要先阅读第一篇。

9、分别在hive和impala中查询验证结果(比較HDFS存儲三種格式文件的查詢性能textfile、orc、parquet)

-----------------1、sql----------------------------

select count(*) from t_kafkauser_orc;

select count(*) from t_kafkauser_parquet;

-- 2、隨便找一條信息,按照name查詢

-----------------1、sql----------------------------

select * from t_kafkauser_orc where name = '';

select * from t_kafkauser_parquet where name = '';

-- 3、按照時間區間查詢

-----------------1、sql----------------------------

select * from t_kafkauser_orc where create_time between '' and '';

select * from t_kafkauser_parquet where create_time between '' and '';

1)、查詢總條數

具體查詢結果如下:

sql:

select count(*) from t_kafkauser;

select count(name) from t_kafkauser;

select count(id) from t_kafkauser;

select count(*) from t_kafkauser_orc;

select count(name) from t_kafkauser_orc;

select count(id) from t_kafkauser_orc;

select count(*) from t_kafkauser_parquet;

select count(name) from t_kafkauser_parquet;

select count(id) from t_kafkauser_parquet;

0、前提驗證MR運行正常與否

驗證MR能否正常的運行,命令如下:

cd /opt/cloudera/parcels/CDH-6.2.1-1.cdh6.2.1.p0.1425774/lib/hadoop-mapreduce

hadoop jar hadoop-mapreduce-examples-3.0.0-cdh6.2.1.jar pi 1 1

1、hive查詢

0: jdbc:hive2://server8:10000> select count(*) from t_kafkauser;

INFO : Total jobs = 1

INFO : number of splits:31

INFO : Stage-Stage-1: Map: 31 Reduce: 1 Cumulative CPU: 152.83 sec HDFS Read: 8376828994 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 2 minutes 32 seconds 830 msec

INFO : Completed executing command(queryId=hive_20230202093505_2d22ca60-dc1a-4492-b2f7-493e80a136c4); Time taken: 42.478 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (42.725 seconds)

0: jdbc:hive2://server8:10000> select count(name) from t_kafkauser;

INFO : Total jobs = 1

INFO : number of splits:31

INFO : Stage-Stage-1: Map: 31 Reduce: 1 Cumulative CPU: 195.78 sec HDFS Read: 8376832781 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 3 minutes 15 seconds 780 msec

INFO : Completed executing command(queryId=hive_20230202093616_76a6e3dc-8ec4-4409-afe7-130977713eca); Time taken: 41.496 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (41.698 seconds)

0: jdbc:hive2://server8:10000> select count(id) from t_kafkauser;

INFO : Total jobs = 1

INFO : number of splits:31

INFO : Stage-Stage-1: Map: 31 Reduce: 1 Cumulative CPU: 203.88 sec HDFS Read: 8376832818 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 3 minutes 23 seconds 880 msec

INFO : Completed executing command(queryId=hive_20230202093719_ba62f1d1-b866-4507-b5fa-2283e59053c6); Time taken: 40.668 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (40.823 seconds)

0: jdbc:hive2://server8:10000> select count(*) from t_kafkauser_orc;

INFO : Total jobs = 1

INFO : number of splits:2

INFO : Stage-Stage-1: Map: 2 Reduce: 1 Cumulative CPU: 15.92 sec HDFS Read: 83789 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 15 seconds 920 msec

INFO : Completed executing command(queryId=hive_20230201184450_28482688-b11e-4a18-9fa9-947cfba0589c); Time taken: 26.617 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (27.605 seconds)

0: jdbc:hive2://server8:10000> select count(name) from t_kafkauser_orc;

INFO : Total jobs = 1

INFO : number of splits:2

INFO : Stage-Stage-1: Map: 2 Reduce: 1 Cumulative CPU: 17.95 sec HDFS Read: 293956548 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 17 seconds 950 msec

INFO : Completed executing command(queryId=hive_20230202085041_e16bf186-b3b5-4d38-8c10-d60452e75dae); Time taken: 25.029 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (25.202 seconds)

0: jdbc:hive2://server8:10000> select count(id) from t_kafkauser_orc;

INFO : Total jobs = 1

INFO : number of splits:2

INFO : Stage-Stage-1: Map: 2 Reduce: 1 Cumulative CPU: 15.19 sec HDFS Read: 299434 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 15 seconds 190 msec

INFO : Completed executing command(queryId=hive_20230202085132_dbc210c6-18b7-45d3-9264-807346fb3fa0); Time taken: 25.213 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (25.395 seconds)

0: jdbc:hive2://server8:10000> select count(*) from t_kafkauser_parquet;

INFO : Total jobs = 1

INFO : number of splits:21

INFO : Stage-Stage-1: Map: 21 Reduce: 1 Cumulative CPU: 233.89 sec HDFS Read: 5794677889 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 3 minutes 53 seconds 890 msec

INFO : Completed executing command(queryId=hive_20230202085230_920b24bf-4525-4412-a297-03caf7cb8a79); Time taken: 41.679 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (41.874 seconds)

0: jdbc:hive2://server8:10000> select count(name) from t_kafkauser_parquet;

INFO : Total jobs = 1

INFO : number of splits:21

INFO : Stage-Stage-1: Map: 21 Reduce: 1 Cumulative CPU: 169.43 sec HDFS Read: 1789619337 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 2 minutes 49 seconds 430 msec

INFO : Completed executing command(queryId=hive_20230202085352_7b1b02b6-8507-4197-b74d-e0495e87cf22); Time taken: 36.554 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (36.731 seconds)

0: jdbc:hive2://server8:10000> select count(id) from t_kafkauser_parquet;

INFO : Total jobs = 1

INFO : number of splits:21

INFO : Stage-Stage-1: Map: 21 Reduce: 1 Cumulative CPU: 145.24 sec HDFS Read: 400635630 HDFS Write: 109 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 2 minutes 25 seconds 240 msec

INFO : Completed executing command(queryId=hive_20230202085452_29b54852-cc94-4098-b87b-99623c9367f3); Time taken: 34.608 seconds

+------------+

| _c0 |

+------------+

| 100000000 |

+------------+

1 row selected (34.796 seconds)

2、impala查詢

[server7:21000] test_million_data> select count(*) from t_kafkauser;

+-----------+

| count(*) |

+-----------+

| 100000000 |

+-----------+

Fetched 1 row(s) in 1.52s

[server7:21000] test_million_data> select count(id) from t_kafkauser;

+-----------+

| count(id) |

+-----------+

| 100000000 |

+-----------+

Fetched 1 row(s) in 1.43s

[server7:21000] test_million_data> select count(name) from t_kafkauser;

+-------------+

| count(name) |

+-------------+

| 100000000 |

+-------------+

Fetched 1 row(s) in 1.32s

[server7:21000] test_million_data> select count(*) from t_kafkauser_orc;

+-----------+

| count(*) |

+-----------+

| 100000000 |

+-----------+

Fetched 1 row(s) in 0.21s

[server7:21000] test_million_data> select count(id) from t_kafkauser_orc;

+-----------+

| count(id) |

+-----------+

| 100000000 |

+-----------+

Fetched 1 row(s) in 1.51s

[server7:21000] test_million_data> select count(name) from t_kafkauser_orc;

+-------------+

| count(name) |

+-------------+

| 100000000 |

+-------------+

Fetched 1 row(s) in 2.72s

[server7:21000] test_million_data> select count(*) from t_kafkauser_parquet;

+-----------+

| count(*) |

+-----------+

| 100000000 |

+-----------+

Fetched 1 row(s) in 0.11s

[server7:21000] test_million_data> select count(id) from t_kafkauser_parquet;

+-----------+

| count(id) |

+-----------+

| 100000000 |

+-----------+

Fetched 1 row(s) in 0.41s

[server7:21000] test_million_data> select count(name) from t_kafkauser_parquet;

+-------------+

| count(name) |

+-------------+

| 100000000 |

+-------------+

Fetched 1 row(s) in 0.51s

2)、隨便找一條信息,按照name查詢

1、SQL

select * from t_kafkauser where name = 'alan6522345_t';

select * from t_kafkauser_orc where name = 'alan6522345_t';

select * from t_kafkauser_parquet where name = 'alan6522345_t';

2、Hive查詢

0: jdbc:hive2://server8:10000> select * from t_kafkauser where name = 'alan6522345_t';

INFO : Total jobs = 1

INFO : number of splits:31

INFO : Stage-Stage-1: Map: 31 Cumulative CPU: 193.94 sec HDFS Read: 8376850688 HDFS Write: 2790 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 3 minutes 13 seconds 940 msec

INFO : Completed executing command(queryId=hive_20230202104525_12cbc21b-fcc2-4f4b-b4c5-dbebf916f8c4); Time taken: 39.02 seconds

+-----------------+---------------------+-------------------+------------------------------+--------------------------+

| t_kafkauser.id | t_kafkauser.userid | t_kafkauser.name | t_kafkauser.url | t_kafkauser.create_time |

+-----------------+---------------------+-------------------+------------------------------+--------------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+-----------------+---------------------+-------------------+------------------------------+--------------------------+

1 row selected (39.252 seconds)

0: jdbc:hive2://server8:10000> select * from t_kafkauser_orc where name = 'alan6522345_t';

INFO : Total jobs = 1

INFO : number of splits:2

INFO : Stage-Stage-1: Map: 2 Cumulative CPU: 8.53 sec HDFS Read: 12458143 HDFS Write: 267 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 8 seconds 530 msec

INFO : Completed executing command(queryId=hive_20230202105023_3ae232fb-d3c0-4082-b154-0a7dd9793ee4); Time taken: 16.772 seconds

+---------------------+-------------------------+-----------------------+------------------------------+------------------------------+

| t_kafkauser_orc.id | t_kafkauser_orc.userid | t_kafkauser_orc.name | t_kafkauser_orc.url | t_kafkauser_orc.create_time |

+---------------------+-------------------------+-----------------------+------------------------------+------------------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+---------------------+-------------------------+-----------------------+------------------------------+------------------------------+

1 row selected (16.978 seconds)

0: jdbc:hive2://server8:10000> select * from t_kafkauser_parquet where name = 'alan6522345_t';

INFO : Total jobs = 1

INFO : number of splits:21

INFO : Stage-Stage-1: Map: 21 Cumulative CPU: 110.97 sec HDFS Read: 536025572 HDFS Write: 1920 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 1 minutes 50 seconds 970 msec

INFO : Completed executing command(queryId=hive_20230202105140_e843cd7b-79bd-4c5b-bf7f-60a525aa1faa); Time taken: 27.3 seconds

+-------------------------+-----------------------------+---------------------------+------------------------------+----------------------------------+

| t_kafkauser_parquet.id | t_kafkauser_parquet.userid | t_kafkauser_parquet.name | t_kafkauser_parquet.url | t_kafkauser_parquet.create_time |

+-------------------------+-----------------------------+---------------------------+------------------------------+----------------------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+-------------------------+-----------------------------+---------------------------+------------------------------+----------------------------------+

1 row selected (27.54 seconds)

3、impala查詢

[server7:21000] test_million_data> select * from t_kafkauser where name = 'alan6522345_t';

+----------+---------+---------------+-----------------------------+-----------------------+

| id | userid | name | url | create_time |

+----------+---------+---------------+-----------------------------+-----------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+----------+---------+---------------+-----------------------------+-----------------------+

Fetched 1 row(s) in 1.84s

[server7:21000] test_million_data> select * from t_kafkauser_orc where name = 'alan6522345_t';

Query: select * from t_kafkauser_orc where name = 'alan6522345_t'

Query submitted at: 2023-02-02 10:54:09 (Coordinator: http://server7:25000)

Query progress can be monitored at: http://server7:25000/query_plan?query_id=9247e834afb1281b:742462f600000000

+----------+---------+---------------+-----------------------------+-----------------------+

| id | userid | name | url | create_time |

+----------+---------+---------------+-----------------------------+-----------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+----------+---------+---------------+-----------------------------+-----------------------+

Fetched 1 row(s) in 10.67s

[server7:21000] test_million_data> select * from t_kafkauser_parquet where name = 'alan6522345_t';

Query: select * from t_kafkauser_parquet where name = 'alan6522345_t'

Query submitted at: 2023-02-02 10:55:21 (Coordinator: http://server7:25000)

Query progress can be monitored at: http://server7:25000/query_plan?query_id=9740d6450f2529e5:f013a3cb00000000

+----------+---------+---------------+-----------------------------+-----------------------+

| id | userid | name | url | create_time |

+----------+---------+---------------+-----------------------------+-----------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+----------+---------+---------------+-----------------------------+-----------------------+

Fetched 1 row(s) in 0.61s

3)、按照多条件查询

1、sql

select

* from t_kafkauser

where name = 'alan6522345_t'

and id = '14565794'

and userid = '6522345'

or url = 'https://www.win.com/6522345';

select

* from t_kafkauser_orc

where name = 'alan6522345_t'

and id = '14565794'

and userid = '6522345'

or url = 'https://www.win.com/6522345';

select

* from t_kafkauser_parquet

where name = 'alan6522345_t'

and id = '14565794'

and userid = '6522345'

or url = 'https://www.win.com/6522345';

2、hive查詢

0: jdbc:hive2://server8:10000> select * from t_kafkauser where name = 'alan6522345_t' and id = '14565794' and userid = '6522345' or url = 'https://www.win.com/6522345';

INFO : Total jobs = 1

INFO : number of splits:31

INFO : Stage-Stage-1: Map: 31 Cumulative CPU: 216.8 sec HDFS Read: 8376852455 HDFS Write: 2790 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 3 minutes 36 seconds 800 msec

INFO : Completed executing command(queryId=hive_20230202110048_d977e61a-f378-4d42-a4b3-81722742916c); Time taken: 39.688 seconds

+-----------------+---------------------+-------------------+------------------------------+--------------------------+

| t_kafkauser.id | t_kafkauser.userid | t_kafkauser.name | t_kafkauser.url | t_kafkauser.create_time |

+-----------------+---------------------+-------------------+------------------------------+--------------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+-----------------+---------------------+-------------------+------------------------------+--------------------------+

1 row selected (39.935 seconds)

0: jdbc:hive2://server8:10000> select * from t_kafkauser_orc where name = 'alan6522345_t' and id = '14565794' and userid = '6522345' or url = 'https://www.win.com/6522345';

INFO : Total jobs = 1

INFO : number of splits:2

INFO : Stage-Stage-1: Map: 2 Cumulative CPU: 10.16 sec HDFS Read: 12458871 HDFS Write: 267 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 10 seconds 160 msec

INFO : Completed executing command(queryId=hive_20230202110142_76710f60-5317-4584-9381-cc26e732843e); Time taken: 16.993 seconds

+---------------------+-------------------------+-----------------------+------------------------------+------------------------------+

| t_kafkauser_orc.id | t_kafkauser_orc.userid | t_kafkauser_orc.name | t_kafkauser_orc.url | t_kafkauser_orc.create_time |

+---------------------+-------------------------+-----------------------+------------------------------+------------------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+---------------------+-------------------------+-----------------------+------------------------------+------------------------------+

1 row selected (17.197 seconds)

0: jdbc:hive2://server8:10000> select * from t_kafkauser_parquet where name = 'alan6522345_t' and id = '14565794' and userid = '6522345' or url = 'https://www.win.com/6522345';

INFO : Total jobs = 1

INFO : number of splits:21

INFO : Stage-Stage-1: Map: 21 Cumulative CPU: 107.92 sec HDFS Read: 536028491 HDFS Write: 1920 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 1 minutes 47 seconds 920 msec

INFO : Completed executing command(queryId=hive_20230202110301_3b351bdc-7262-4641-9ca0-6250e8287a93); Time taken: 27.76 seconds

+-------------------------+-----------------------------+---------------------------+------------------------------+----------------------------------+

| t_kafkauser_parquet.id | t_kafkauser_parquet.userid | t_kafkauser_parquet.name | t_kafkauser_parquet.url | t_kafkauser_parquet.create_time |

+-------------------------+-----------------------------+---------------------------+------------------------------+----------------------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+-------------------------+-----------------------------+---------------------------+------------------------------+----------------------------------+

1 row selected (27.95 seconds)

3、impala查詢

[server7:21000] test_million_data> select * from t_kafkauser where name = 'alan6522345_t' and id = 14565794 and userid = 6522345 or url = 'https://www.win.com/6522345';

+----------+---------+---------------+-----------------------------+-----------------------+

| id | userid | name | url | create_time |

+----------+---------+---------------+-----------------------------+-----------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+----------+---------+---------------+-----------------------------+-----------------------+

Fetched 1 row(s) in 1.85s

[server7:21000] test_million_data> select * from t_kafkauser_orc where name = 'alan6522345_t' and id = 14565794 and userid = 6522345 or url = 'https://www.win.com/6522345';

+----------+---------+---------------+-----------------------------+-----------------------+

| id | userid | name | url | create_time |

+----------+---------+---------------+-----------------------------+-----------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+----------+---------+---------------+-----------------------------+-----------------------+

Fetched 1 row(s) in 11.31s

[server7:21000] test_million_data> select * from t_kafkauser_parquet where name = 'alan6522345_t' and id = 14565794 and userid = 6522345 or url = 'https://www.win.com/6522345';

+----------+---------+---------------+-----------------------------+-----------------------+

| id | userid | name | url | create_time |

+----------+---------+---------------+-----------------------------+-----------------------+

| 14565794 | 6522345 | alan6522345_t | https://www.win.com/6522345 | 2023-01-18 07:44:05.0 |

+----------+---------+---------------+-----------------------------+-----------------------+

Fetched 1 row(s) in 4.28s

4)、按照時間區間查詢

1、sql

select count(url) from t_kafkauser where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

select count(url) from t_kafkauser_orc where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

select count(url) from t_kafka_user_parquet where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

2、hive查詢

0: jdbc:hive2://server8:10000> select count(url) from t_kafkauser where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

INFO : Total jobs = 1

INFO : number of splits:31

INFO : Stage-Stage-1: Map: 31 Reduce: 1 Cumulative CPU: 215.09 sec HDFS Read: 8376853562 HDFS Write: 105 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 3 minutes 35 seconds 90 msec

INFO : Completed executing command(queryId=hive_20230202132555_547f89e1-aa9c-4234-aeca-69f33f5eceb8); Time taken: 41.917 seconds

+--------+

| _c0 |

+--------+

| 95500 |

+--------+

1 row selected (42.106 seconds)

0: jdbc:hive2://server8:10000> select count(url) from t_kafkauser_orc where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

INFO : Total jobs = 1

INFO : number of splits:2

INFO : Stage-Stage-1: Map: 2 Reduce: 1 Cumulative CPU: 12.87 sec HDFS Read: 828205 HDFS Write: 105 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 12 seconds 870 msec

INFO : Completed executing command(queryId=hive_20230202132656_c1fbba6c-05c0-4a9e-a760-b65b6eb26f58); Time taken: 22.105 seconds

+--------+

| _c0 |

+--------+

| 95500 |

+--------+

1 row selected (22.274 seconds)

0: jdbc:hive2://server8:10000> select count(url) from t_kafkauser_parquet where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

INFO : Total jobs = 1

INFO : number of splits:21

INFO : Stage-Stage-1: Map: 21 Reduce: 1 Cumulative CPU: 130.16 sec HDFS Read: 64663344 HDFS Write: 105 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 2 minutes 10 seconds 160 msec

INFO : Completed executing command(queryId=hive_20230202132729_01d23207-6ddb-45f3-8d72-d505da333fe5); Time taken: 30.511 seconds

+--------+

| _c0 |

+--------+

| 95500 |

+--------+

1 row selected (30.685 seconds)

3、impala查詢

[server7:21000] test_million_data> select count(url) from t_kafkauser where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

+------------+

| count(url) |

+------------+

| 95500 |

+------------+

Fetched 1 row(s) in 4.33s

[server7:21000] test_million_data> select count(url) from t_kafkauser_orc where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

+------------+

| count(url) |

+------------+

| 95500 |

+------------+

Fetched 1 row(s) in 5.93s

[server7:21000] test_million_data> select count(url) from t_kafkauser_parquet where create_time BETWEEN '2023-01-18 08:01:27' and '2023-01-18 08:01:28' ;

+------------+

| count(url) |

+------------+

| 95500 |

+------------+

Fetched 1 row(s) in 0.31s

5)、兩張表join

1、sql

select a.* from t_kafkauser a join t_kafkauser_orc b on a.id = b.id and a.id = 108045695;

select a.* from t_kafkauser_parquet a join t_kafkauser_orc b on a.id = b.id and a.id = 108045695;

select a.* from t_kafkauser_parquet a join t_kafkauser b on a.id = b.id and a.id = 108045695;

2、hive查詢

0: jdbc:hive2://server8:10000> select a.* from t_kafkauser a join t_kafkauser_orc b on a.id = b.id and a.id = 108045695;

INFO : Total jobs = 1

INFO : number of splits:33

INFO : Stage-Stage-1: Map: 33 Reduce: 138 Cumulative CPU: 638.43 sec HDFS Read: 8377892321 HDFS Write: 12103 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 10 minutes 38 seconds 430 msec

INFO : Completed executing command(queryId=hive_20230202134136_f7336a78-9201-4be8-b721-31e4c6e19674); Time taken: 166.162 seconds

+------------+-----------+-----------------+-------------------------------+------------------------+

| a.id | a.userid | a.name | a.url | a.create_time |

+------------+-----------+-----------------+-------------------------------+------------------------+

| 108045695 | 99999999 | alan99999999_t | https://www.win.com/99999999 | 2023-01-18 08:01:28.0 |

+------------+-----------+-----------------+-------------------------------+------------------------+

1 row selected (166.772 seconds)

0: jdbc:hive2://server8:10000> select a.* from t_kafkauser_parquet a join t_kafkauser_orc b on a.id = b.id and a.id = 108045695;

INFO : Total jobs = 1

INFO : number of splits:23

INFO : Stage-Stage-1: Map: 23 Reduce: 100 Cumulative CPU: 440.63 sec HDFS Read: 117456695 HDFS Write: 8797 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 7 minutes 20 seconds 630 msec

INFO : Completed executing command(queryId=hive_20230202135628_4ed7755b-b567-47b5-bb93-c523a0043af6); Time taken: 121.57 seconds

+------------+-----------+-----------------+-------------------------------+------------------------+

| a.id | a.userid | a.name | a.url | a.create_time |

+------------+-----------+-----------------+-------------------------------+------------------------+

| 108045695 | 99999999 | alan99999999_t | https://www.win.com/99999999 | 2023-01-18 08:01:28.0 |

+------------+-----------+-----------------+-------------------------------+------------------------+

1 row selected (121.973 seconds)

0: jdbc:hive2://server8:10000> select a.* from t_kafkauser_parquet a join t_kafkauser b on a.id = b.id and a.id = 108045695;

INFO : Total jobs = 1

INFO : number of splits:52

INFO : Stage-Stage-1: Map: 52 Reduce: 212 Cumulative CPU: 1022.64 sec HDFS Read: 8494722690 HDFS Write: 18541 HDFS EC Read: 0 SUCCESS

INFO : Total MapReduce CPU Time Spent: 17 minutes 2 seconds 640 msec

INFO : Completed executing command(queryId=hive_20230202140053_84155ad0-e645-4c5f-bbeb-feaf41dcbac6); Time taken: 242.307 seconds

+------------+-----------+-----------------+-------------------------------+------------------------+

| a.id | a.userid | a.name | a.url | a.create_time |

+------------+-----------+-----------------+-------------------------------+------------------------+

| 108045695 | 99999999 | alan99999999_t | https://www.win.com/99999999 | 2023-01-18 08:01:28.0 |

+------------+-----------+-----------------+-------------------------------+------------------------+

1 row selected (242.882 seconds)

3、impala查詢

[server7:21000] test_million_data> select a.* from t_kafkauser a join t_kafkauser_orc b on a.id = b.id and a.id = 108045695;

+-----------+----------+----------------+------------------------------+-----------------------+

| id | userid | name | url | create_time |

+-----------+----------+----------------+------------------------------+-----------------------+

| 108045695 | 99999999 | alan99999999_t | https://www.win.com/99999999 | 2023-01-18 08:01:28.0 |

+-----------+----------+----------------+------------------------------+-----------------------+

Fetched 1 row(s) in 2.98s

[server7:21000] test_million_data> select a.* from t_kafkauser_parquet a join t_kafkauser_orc b on a.id = b.id and a.id = 108045695;

+-----------+----------+----------------+------------------------------+-----------------------+

| id | userid | name | url | create_time |

+-----------+----------+----------------+------------------------------+-----------------------+

| 108045695 | 99999999 | alan99999999_t | https://www.win.com/99999999 | 2023-01-18 08:01:28.0 |

+-----------+----------+----------------+------------------------------+-----------------------+

Fetched 1 row(s) in 1.62s

[server7:21000] test_million_data> select a.* from t_kafkauser_parquet a join t_kafkauser b on a.id = b.id and a.id = 108045695;

+-----------+----------+----------------+------------------------------+-----------------------+

| id | userid | name | url | create_time |

+-----------+----------+----------------+------------------------------+-----------------------+

| 108045695 | 99999999 | alan99999999_t | https://www.win.com/99999999 | 2023-01-18 08:01:28.0 |

+-----------+----------+----------------+------------------------------+-----------------------+

Fetched 1 row(s) in 2.03s

6)、總結

1、文件存儲

- text文件存儲文件最大,orc最小,parquet居中

- text:1.92G 4個文件,合計大小近8G

- orc:208.12M 4個,合計大小近832M

- parquet:1.34G 4個,合計大小近5.36G

- parquet-snappy:442M左右,4個 ,合計大小近1.76G

2、hive查詢與impala查詢速度

- 總體上看,hive的查詢速度與文件類型關係較大,text最慢,orc最快,parquet居中;impala的查詢速度與文件類型關係較大,text居中,orc最慢,parquet最快。不管是hive還是impala,與查詢類別關係不大。impala的查詢速度比hive中的查詢速度快上2個數量級。

3、不同查詢類型的查詢速度

- 查詢速度與查詢類型關係不大,只是稍微會慢一些。

4、結論

- 僅從查詢速度上考慮,如果是hive中使用首選orc文件格式,如果是impala中使用首選parquet文件格式。

- 如果綜合查詢速度與存儲大小上考慮,如果是hive中使用首選是orc文件格式,如果是impala中使用首選orc文件格式(文件大小相差近7倍,但查詢速度僅相差4倍)。

- 一般而言,綜合系統應用而言,選擇parquet文件格式是常見的選擇。但還需要考慮文件的相關特性,比如parquet不支持索引頁,主要與impala配合使用。

五、下文是網上找的別人驗證結果及結論

文章来源:https://www.toymoban.com/news/detail-522819.html

文章来源:https://www.toymoban.com/news/detail-522819.html

[server7:21000] test_million_data> select FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm') dt,count(*)

> from t_kafkauser

> group by FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm')

> order by FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm');

+------------------+----------+

| dt | count(*) |

+------------------+----------+

| 2023-01-18 07:42 | 929901 |

| 2023-01-18 07:43 | 5188500 |

| 2023-01-18 07:44 | 5053100 |

| 2023-01-18 07:45 | 5495900 |

| 2023-01-18 07:46 | 5280500 |

| 2023-01-18 07:47 | 5318800 |

| 2023-01-18 07:48 | 5322200 |

| 2023-01-18 07:49 | 5310600 |

| 2023-01-18 07:50 | 5523100 |

| 2023-01-18 07:51 | 5296400 |

| 2023-01-18 07:52 | 5810100 |

| 2023-01-18 07:53 | 5374300 |

| 2023-01-18 07:54 | 5350600 |

| 2023-01-18 07:55 | 5445300 |

| 2023-01-18 07:56 | 5309300 |

| 2023-01-18 07:57 | 5350400 |

| 2023-01-18 07:58 | 5351923 |

| 2023-01-18 07:59 | 5303077 |

| 2023-01-18 08:00 | 5474800 |

| 2023-01-18 08:01 | 2511199 |

+------------------+----------+

Fetched 20 row(s) in 35.12s

[server7:21000] test_million_data> select FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm') dt,count(*)

> from t_kafkauser_orc

> group by FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm')

> order by FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm');

+------------------+----------+

| dt | count(*) |

+------------------+----------+

| 2023-01-18 07:42 | 929901 |

| 2023-01-18 07:43 | 5188500 |

| 2023-01-18 07:44 | 5053100 |

| 2023-01-18 07:45 | 5495900 |

| 2023-01-18 07:46 | 5280500 |

| 2023-01-18 07:47 | 5318800 |

| 2023-01-18 07:48 | 5322200 |

| 2023-01-18 07:49 | 5310600 |

| 2023-01-18 07:50 | 5523100 |

| 2023-01-18 07:51 | 5296400 |

| 2023-01-18 07:52 | 5810100 |

| 2023-01-18 07:53 | 5374300 |

| 2023-01-18 07:54 | 5350600 |

| 2023-01-18 07:55 | 5445300 |

| 2023-01-18 07:56 | 5309300 |

| 2023-01-18 07:57 | 5350400 |

| 2023-01-18 07:58 | 5351923 |

| 2023-01-18 07:59 | 5303077 |

| 2023-01-18 08:00 | 5474800 |

| 2023-01-18 08:01 | 2511199 |

+------------------+----------+

Fetched 20 row(s) in 46.10s

[server7:21000] test_million_data> select FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm') dt,count(*)

> from t_kafkauser_parquet

> group by FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm')

> order by FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm');

+------------------+----------+

| dt | count(*) |

+------------------+----------+

| 2023-01-18 07:42 | 929901 |

| 2023-01-18 07:43 | 5188500 |

| 2023-01-18 07:44 | 5053100 |

| 2023-01-18 07:45 | 5495900 |

| 2023-01-18 07:46 | 5280500 |

| 2023-01-18 07:47 | 5318800 |

| 2023-01-18 07:48 | 5322200 |

| 2023-01-18 07:49 | 5310600 |

| 2023-01-18 07:50 | 5523100 |

| 2023-01-18 07:51 | 5296400 |

| 2023-01-18 07:52 | 5810100 |

| 2023-01-18 07:53 | 5374300 |

| 2023-01-18 07:54 | 5350600 |

| 2023-01-18 07:55 | 5445300 |

| 2023-01-18 07:56 | 5309300 |

| 2023-01-18 07:57 | 5350400 |

| 2023-01-18 07:58 | 5351923 |

| 2023-01-18 07:59 | 5303077 |

| 2023-01-18 08:00 | 5474800 |

| 2023-01-18 08:01 | 2511199 |

+------------------+----------+

Fetched 20 row(s) in 36.08s

[server7:21000] test_million_data> select FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm') dt,count(*)

> from t_kafkauser_parquet_snappy

> group by FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm')

> order by FROM_UNIXTIME(UNIX_TIMESTAMP(create_time),'yyyy-MM-dd HH:mm');

+------------------+----------+

| dt | count(*) |

+------------------+----------+

| 2023-01-18 07:42 | 929901 |

| 2023-01-18 07:43 | 5188500 |

| 2023-01-18 07:44 | 5053100 |

| 2023-01-18 07:45 | 5495900 |

| 2023-01-18 07:46 | 5280500 |

| 2023-01-18 07:47 | 5318800 |

| 2023-01-18 07:48 | 5322200 |

| 2023-01-18 07:49 | 5310600 |

| 2023-01-18 07:50 | 5523100 |

| 2023-01-18 07:51 | 5296400 |

| 2023-01-18 07:52 | 5810100 |

| 2023-01-18 07:53 | 5374300 |

| 2023-01-18 07:54 | 5350600 |

| 2023-01-18 07:55 | 5445300 |

| 2023-01-18 07:56 | 5309300 |

| 2023-01-18 07:57 | 5350400 |

| 2023-01-18 07:58 | 5351923 |

| 2023-01-18 07:59 | 5303077 |

| 2023-01-18 08:00 | 5474800 |

| 2023-01-18 08:01 | 2511199 |

+------------------+----------+

Fetched 20 row(s) in 34.58s

至此,完成了整个比较。文章来源地址https://www.toymoban.com/news/detail-522819.html

到了这里,关于1、通过亿级数据量在hive和impala中查询比较text、orc和parquet性能表现(二)的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!