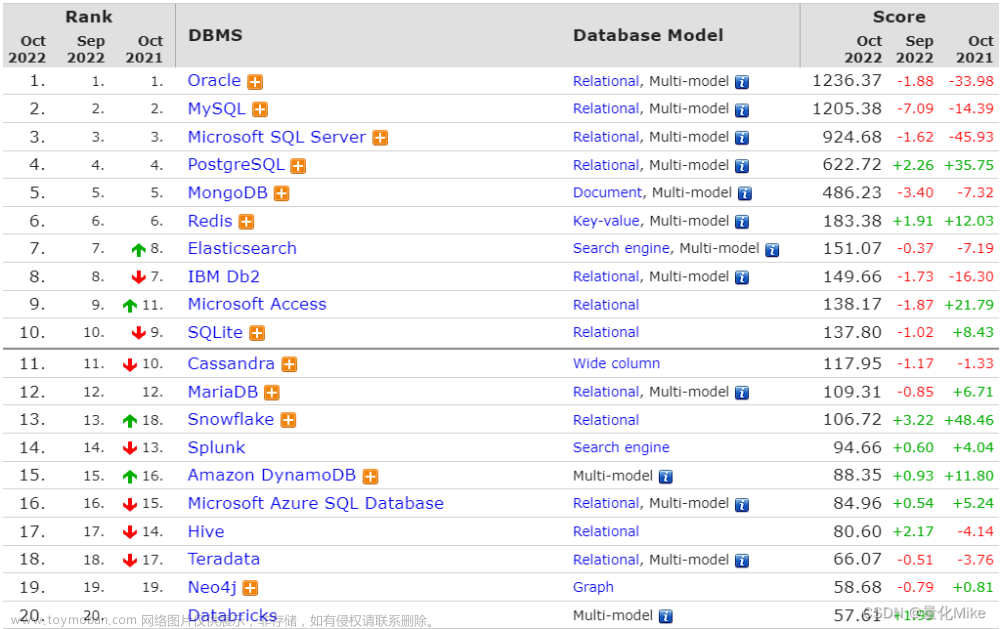

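Overview

MongoDB is an open-source NoSQL database system written in C++ and built on distributed file storage. Under heavy load, more nodes (instances) can be added to maintain service performance. In many scenarios it replaces a traditional relational database or a key/value store, and it aims to provide a scalable, high-performance data storage solution for web applications.

MongoDB stores data as documents, which is simple to work with and can hold fairly complex data types. Its most notable feature is a powerful query language whose syntax resembles an object-oriented query language. It covers most of what a single-table query in a relational database can do, and it supports indexes on the data. MongoDB is a collection-oriented, schema-free document database. It sits between relational and non-relational databases: among non-relational databases it is the most feature-rich and the closest to a relational one.

Before version 4.0 MongoDB supported only single-document transactions. Version 4.0, which this article uses, adds multi-document transactions on replica sets, but workloads that depend heavily on complex transactions may still be a poor fit. Its main strengths are:

- Flexible document model: JSON-style storage is close to the application's object model, developer friendly, and suited to rapid iteration.

- Highly available replica sets: they meet the need for reliable data and an available service, are simple to operate, and fail over automatically.

- Scalable sharded clusters: they store very large data sets and scale service capacity horizontally.

- Multiple storage engines: MMAPv1, WiredTiger, MongoRocks (RocksDB), and in-memory engines cover different scenarios.

- Rich index support: geospatial indexes for building O2O applications, text indexes for search, and TTL indexes for automatically expiring historical data.

- GridFS for file storage.

- Aggregation and MapReduce for data analysis: users write their own queries or scripts and MongoDB executes them.

Judging from the workloads on Alibaba Cloud's MongoDB service, MongoDB is now used in many fields, such as gaming, logistics, e-commerce, content management, social networking, IoT, and live video streaming. A few real-world use cases follow.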

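- Gaming: MongoDB stores player information; a player's equipment, points, and so on are stored directly as embedded documents, which makes them easy to query and update.

- Logistics: MongoDB stores order information. The order status keeps changing during delivery and is stored as an embedded array, so a single query returns every change to the order.

- Social networking: MongoDB stores user information and the posts users publish; geospatial indexes implement features such as nearby people and places.

- IoT: MongoDB stores information about all connected smart devices together with the logs they report, and supports multi-dimensional analysis of that data.

- Live video streaming: MongoDB stores user information, gift information, and similar data.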

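Getting started

Deployment environment:

A single host

Flush the firewall rules and switch SELinux to permissive mode (suitable for a test machine only)

[root@localhost ~]# iptables -F

[root@localhost ~]# setenforce 0

[root@localhost ~]# systemctl stop firewalld

Raise the maximum number of open files per process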

[root@localhost ~]# ulimit -n

1024

[root@localhost ~]# ulimit -n 65535

[root@localhost ~]# ulimit -n

65535

Raise the maximum number of processes per user

[root@localhost ~]# ulimit -u

7183

[root@localhost ~]# ulimit -u 65535

[root@localhost ~]# ulimit -u

65535
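The `ulimit -n` and `ulimit -u` commands above change the limits only for the current shell session and the processes started from it, so the values are lost after logout or reboot. One common way to keep them for new login sessions is a drop-in file under /etc/security/limits.d/. The sketch below only prints the entries; the helper name `print_limits` and the suggested file name are assumptions made for illustration.

```shell
#!/bin/bash
# Print limits.conf-style entries that raise the open-file (nofile) and
# process (nproc) limits for one user. print_limits is a hypothetical helper.
print_limits() {
    local user=$1 value=$2
    local item kind
    for item in nofile nproc; do
        for kind in soft hard; do
            printf '%s %s %s %s\n' "$user" "$kind" "$item" "$value"
        done
    done
}

# Review the output first, then redirect it into a file such as
# /etc/security/limits.d/mongodb.conf (file name chosen for illustration).
print_limits root 65535
```

Printing instead of writing keeps the sketch harmless to run; the entries take effect only for sessions opened after the file is in place.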

Download the installation package

[root@localhost ~]# wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-rhel70-4.0.6.tgz

--2023-07-04 18:18:19-- https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-rhel70-4.0.6.tgz

Resolving fastdl.mongodb.org (fastdl.mongodb.org)... 99.84.238.160, 99.84.238.180, 99.84.238.183, ...

Connecting to fastdl.mongodb.org (fastdl.mongodb.org)|99.84.238.160|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 88105215 (84M) [application/x-gzip]

Saving to: 'mongodb-linux-x86_64-rhel70-4.0.6.tgz'

Extract the archive

[root@localhost ~]# tar xf mongodb-linux-x86_64-rhel70-4.0.6.tgz

Move it into place and link the binaries into /bin so that the commands are found on the PATH

[root@localhost ~]# mv mongodb-linux-x86_64-rhel70-4.0.6 /usr/local/mongodb

[root@localhost ~]# ln -s /usr/local/mongodb/bin/* /bin

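Create the data and log directories

[root@localhost ~]# mkdir -p /data/mongodb1    # data directory

[root@localhost ~]# mkdir -p /data/logs/mongodb    # log directory

[root@localhost ~]# touch /data/logs/mongodb/mongodb1.log    # log file

[root@localhost ~]# cd /usr/local/mongodb/

[root@localhost mongodb]# mkdir conf    # configuration directory

[root@localhost mongodb]# vim conf/mongodb1.conf    # configuration file

# listening port
port=27017

# data directory
dbpath=/data/mongodb1

# log file path
logpath=/data/logs/mongodb/mongodb1.log

# append to the log file instead of overwriting it on restart
logappend=true

# fork into the background and run as a daemon
fork=true

# maximum number of connections
maxConns=5000

# storage engine (MMAPv1 is deprecated in 4.0 and removed in 4.2; WiredTiger is the default)
storageEngine=mmapv1

Start the database; -f specifies the configuration file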

[root@localhost mongodb]# /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb1.conf

about to fork child process, waiting until server is ready for connections.

forked process: 69478

child process started successfully, parent exiting

[root@localhost mongodb]# netstat -lnpt|grep mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 69478/mongod

[root@localhost mongodb]# ps aux | grep mongod | grep -v grep

root 69478 0.5 5.2 1501400 98532 ? Sl 18:35 0:00 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb1.conf

Start at boot

[root@localhost mongodb]# vim /etc/rc.local

rm -f /data/mongodb1/mongod.lock

mongod -f /usr/local/mongodb/conf/mongodb1.conf

The first line removes a stale /data/mongodb1/mongod.lock file, and the second starts MongoDB with the configuration file /usr/local/mongodb/conf/mongodb1.conf. Placing both lines in /etc/rc.local runs them at boot so that the MongoDB service comes up automatically. On CentOS 7 this only works if /etc/rc.d/rc.local is executable (chmod +x /etc/rc.d/rc.local).

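Connect to the database

[root@localhost mongodb]# mongo

> show dbs

admin 0.078GB

config 0.078GB

local 0.078GB

Some database names are reserved; these databases have special roles and can be accessed directly.

- admin: in terms of permissions this is the "root" database. A user added to this database automatically inherits permissions on all databases. Some server-wide commands, such as listing all databases or shutting down the server, can only be run from this database.

- local: data in this database is never replicated, so it can hold any collections that should stay on a single server.

- config: when MongoDB runs as a sharded cluster, the config database is used internally to store information about the shards.

MongoDB multi-instance configuration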

[root@localhost conf]# cp mongodb{1,2}.conf

[root@localhost conf]# ll

total 8

-rw-r--r--. 1 root root 133 Jul 4 18:35 mongodb1.conf

-rw-r--r--. 1 root root 133 Jul 4 18:46 mongodb2.conf

[root@localhost conf]# vim mongodb2.conf

port=27027

dbpath=/data/mongodb2

logpath=/data/logs/mongodb/mongodb2.log

logappend=true

fork=true

maxConns=5000

storageEngine=mmapv1

[root@localhost conf]# mkdir /data/mongodb2

[root@localhost conf]# touch /data/logs/mongodb/mongodb2.log

[root@localhost conf]# /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb2.conf

about to fork child process, waiting until server is ready for connections.

forked process: 69667

child process started successfully, parent exiting

[root@localhost conf]# netstat -lnpt|grep mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 69478/mongod

tcp 0 0 127.0.0.1:27027 0.0.0.0:* LISTEN 69667/mongod
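Because the instances differ only in port, data directory, and log file, the per-instance files can also be generated instead of copied and edited by hand. The following is a minimal sketch that assumes the port scheme used in this article (27017, 27027, and so on); `write_conf` is a hypothetical helper, and the demo writes into a temporary directory rather than /usr/local/mongodb/conf.

```shell
#!/bin/bash
# write_conf N DIR: write DIR/mongodbN.conf using the article's settings.
# Port scheme assumed from the article: instance 1 -> 27017, 2 -> 27027, ...
write_conf() {
    local n=$1 dir=$2
    local port=$((27017 + (n - 1) * 10))
    cat > "$dir/mongodb$n.conf" <<EOF
port=$port
dbpath=/data/mongodb$n
logpath=/data/logs/mongodb/mongodb$n.log
logappend=true
fork=true
maxConns=5000
storageEngine=mmapv1
EOF
}

# Demo: generate two files in a scratch directory and show the second one.
demo_dir=$(mktemp -d)
write_conf 1 "$demo_dir"
write_conf 2 "$demo_dir"
cat "$demo_dir/mongodb2.conf"
```

The data directory and log file for each instance still have to be created separately, as shown above.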

Write a start/stop script

[root@localhost ~]# vim /etc/init.d/mongodb

#!/bin/bash

# Usage: /etc/init.d/mongodb <instance> <start|stop|restart>

INSTANCE=$1

ACTION=$2

MONGOD=/usr/local/mongodb/bin/mongod

CONF=/usr/local/mongodb/conf/"$INSTANCE".conf

case "$ACTION" in

'start')

"$MONGOD" -f "$CONF";;

'stop')

"$MONGOD" -f "$CONF" --shutdown;;

'restart')

"$MONGOD" -f "$CONF" --shutdown

"$MONGOD" -f "$CONF";;

*)

echo "Usage: $0 <instance> {start|stop|restart}" >&2

exit 1;;

esac

[root@localhost ~]# chmod +x /etc/init.d/mongodb

[root@localhost ~]# netstat -lnpt |grep mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 69478/mongod

tcp 0 0 127.0.0.1:27027 0.0.0.0:* LISTEN 69667/mongod

[root@localhost ~]# /etc/init.d/mongodb mongodb1 stop

killing process with pid: 69478

[root@localhost ~]# /etc/init.d/mongodb mongodb2 stop

killing process with pid: 69667

[root@localhost ~]# netstat -lnpt |grep mongod

[root@localhost ~]#

[root@localhost ~]# /etc/init.d/mongodb mongodb2 start

about to fork child process, waiting until server is ready for connections.

forked process: 69705

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb1 start

about to fork child process, waiting until server is ready for connections.

forked process: 69727

child process started successfully, parent exiting

[root@localhost ~]# netstat -lnpt |grep mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 69727/mongod

tcp 0 0 127.0.0.1:27027 0.0.0.0:* LISTEN 69705/mongod

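Basic operations

List the databases:

> show dbs

admin 0.078GB

config 0.078GB

local 0.078GB

show databases does the same:

> show databases;

admin 0.078GB

config 0.078GB

local 0.078GB

Show the current database:

> db

test

Switch to another database:

> use admin

switched to db admin

> db

admin

Switch to a new database; it is only created once data is written to it:

> use cloud

switched to db cloud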

> show dbs

admin 0.078GB

config 0.078GB

local 0.078GB

Insert a document:

> db.user.insert({"id":1,"name":"Crushlinux"});

WriteResult({ "nInserted" : 1 })

The new database now appears in the list:

> show dbs

admin 0.078GB

cloud 0.078GB

config 0.078GB

local 0.078GB

show collections lists the collections in the current database (a collection is similar to a table):

> show collections

user

db.user.find() queries the documents in the user collection:

> db.user.find()

{ "_id" : ObjectId("64a3fe2fa69b718c40e10633"), "id" : 1, "name" : "Crushlinux" }

Press Ctrl+D to leave the shell:

> (Ctrl+D)

bye

View help

> help

db.help() help on db methods

db.mycoll.help() help on collection methods

sh.help() sharding helpers

rs.help() replica set helpers

help admin administrative help

help connect connecting to a db help

help keys key shortcuts

help misc misc things to know

help mr mapreduce

show dbs show database names

show collections show collections in current database

show users show users in current database

show profile show most recent system.profile entries with time >= 1ms

show logs show the accessible logger names

show log [name] prints out the last segment of log in memory, 'global' is default

use <db_name> set current database

db.foo.find() list objects in collection foo

db.foo.find( { a : 1 } ) list objects in foo where a == 1

it result of the last line evaluated; use to further iterate

DBQuery.shellBatchSize = x set default number of items to display on shell

exit quit the mongo shell

Statistics

> db.stats();

{

"db" : "test",

"collections" : 0,

"views" : 0,

"objects" : 0,

"avgObjSize" : 0,

"dataSize" : 0,

"storageSize" : 0,

"numExtents" : 0,

"indexes" : 0,

"indexSize" : 0,

"fileSize" : 0,

"fsUsedSize" : 0,

"fsTotalSize" : 0,

"ok" : 1

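}

db.stats() returns statistics for the current database. Here the database is "test" and it contains no collections, views, objects, or indexes, so every counter is 0 and nothing is stored. The final "ok" : 1 means the command succeeded.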

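Build a replica set cluster

[root@localhost ~]# /etc/init.d/mongodb mongodb1 stop

killing process with pid: 69727

[root@localhost ~]# /etc/init.d/mongodb mongodb2 stop

killing process with pid: 69705

Remove the old standalone data directories (the log files under /data/logs are kept, as the listing further down shows)

[root@localhost ~]# rm -rf /data/mongodb1 /data/mongodb2

Edit the configuration files of all four instances

[root@localhost ~]# vim /usr/local/mongodb/conf/mongodb2.conf

[root@localhost ~]# vim /usr/local/mongodb/conf/mongodb1.conf

[root@localhost ~]# vim /usr/local/mongodb/conf/mongodb3.conf

[root@localhost ~]# vim /usr/local/mongodb/conf/mongodb4.conf

Two of the files are shown below; mongodb2.conf and mongodb4.conf follow the same pattern with their own port (27027 and 27047), dbpath, and logpath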

[root@localhost ~]# vim /usr/local/mongodb/conf/mongodb3.conf

port=27037

dbpath=/data/mongodb3

logpath=/data/logs/mongodb/mongodb3.log

logappend=true

fork=true

maxConns=5000

storageEngine=mmapv1

slowms=1

profile=1

replSet=crushlinux

[root@localhost ~]# vim /usr/local/mongodb/conf/mongodb1.conf

port=27017

dbpath=/data/mongodb1

logpath=/data/logs/mongodb/mongodb1.log

logappend=true

fork=true

maxConns=5000

storageEngine=mmapv1

slowms=1

profile=1

replSet=crushlinux

[root@localhost ~]# mkdir /data/mongodb{1..4} -p

[root@localhost ~]# cd /data/mongodb    # press Tab twice to list the new directories

mongodb1/ mongodb2/ mongodb3/ mongodb4/

[root@localhost ~]# touch /data/logs/mongodb/mongodb{1..4}.log    # create the log files

[root@localhost ~]# chmod 777 /data/logs/mongodb/mongodb*    # set permissions

[root@localhost ~]# ll /data/logs/mongodb/mongodb*    # check

-rwxrwxrwx. 1 root root 19243 Jul 4 19:22 /data/logs/mongodb/mongodb1.log

-rwxrwxrwx. 1 root root 16703 Jul 4 19:22 /data/logs/mongodb/mongodb2.log

-rwxrwxrwx. 1 root root 0 Jul 4 19:40 /data/logs/mongodb/mongodb3.log

-rwxrwxrwx. 1 root root 0 Jul 4 19:40 /data/logs/mongodb/mongodb4.log

Start all instances

[root@localhost ~]# /etc/init.d/mongodb mongodb1 start

about to fork child process, waiting until server is ready for connections.

forked process: 70115

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb2 start

about to fork child process, waiting until server is ready for connections.

forked process: 70141

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb3 start

about to fork child process, waiting until server is ready for connections.

forked process: 70167

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb4 start

about to fork child process, waiting until server is ready for connections.

forked process: 70193

child process started successfully, parent exiting

[root@localhost ~]# netstat -lnpt | grep mongod

tcp 0 0 127.0.0.1:27047 0.0.0.0:* LISTEN 70193/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 70115/mongod

tcp 0 0 127.0.0.1:27027 0.0.0.0:* LISTEN 70141/mongod

tcp 0 0 127.0.0.1:27037 0.0.0.0:* LISTEN 70167/mongod

Open the shell

[root@localhost ~]# mongo

Define the replica set and its members

> cfg={"_id":"crushlinux","members":[{"_id":0,"host":"127.0.0.1:27017"},{"_id":1,"host":"127.0.0.1:27027"},{"_id":2,"host":"127.0.0.1:27037"}]}

{

"_id" : "crushlinux",

"members" : [

{

"_id" : 0,

"host" : "127.0.0.1:27017"

},

{

"_id" : 1,

"host" : "127.0.0.1:27027"

},

{

"_id" : 2,

"host" : "127.0.0.1:27037"

}

]

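}

This defines a replica set named "crushlinux". A replica set is MongoDB's high-availability solution: it consists of several MongoDB instances, one of which is the primary while the others are secondaries. The configuration here lists three members, which are MongoDB instances running on three different ports of the local host.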
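The member list can also be built from a list of ports, which avoids typing mistakes as the set grows. The sketch below assumes that all members run on 127.0.0.1, as in this article; `rs_config` is a hypothetical helper that only prints the configuration document.

```shell
#!/bin/bash
# rs_config NAME PORT...: print a replica set configuration document whose
# members are 127.0.0.1:PORT, numbered from _id 0 in the order given.
rs_config() {
    local name=$1; shift
    local id=0 members="" port
    for port in "$@"; do
        [ -n "$members" ] && members+=","
        members+="{\"_id\":$id,\"host\":\"127.0.0.1:$port\"}"
        id=$((id + 1))
    done
    printf '{"_id":"%s","members":[%s]}\n' "$name" "$members"
}

rs_config crushlinux 27017 27027 27037
```

The printed document could then be handed to the shell non-interactively, for example with `mongo --port 27017 --eval "rs.initiate($(rs_config crushlinux 27017 27027 27037))"`.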

Initialize the replica set

> rs.initiate(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1688471616, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688471616, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

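Check the replica set status

The prompt now shows the replica set name and the member's state. It still reads SECONDARY because it was drawn before the election finished; "myState" : 1 in the output shows that this node is already the primary.

crushlinux:SECONDARY> rs.status()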

{

"set" : "crushlinux",

"date" : ISODate("2023-07-04T12:02:33.031Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "127.0.0.1:27017",

"health" : 1,    // 1 = healthy, 0 = down

"state" : 1,    // 1 = primary, 2 = secondary

"stateStr" : "PRIMARY",

"uptime" : 779,

"optime" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2023-07-04T12:02:29Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1688471627, 1),

"electionDate" : ISODate("2023-07-04T11:53:47Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "127.0.0.1:27027",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 536,

"optime" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2023-07-04T12:02:29Z"),

"optimeDurableDate" : ISODate("2023-07-04T12:02:29Z"),

"lastHeartbeat" : ISODate("2023-07-04T12:02:31.892Z"),

"lastHeartbeatRecv" : ISODate("2023-07-04T12:02:32.916Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "127.0.0.1:27037",

"syncSourceHost" : "127.0.0.1:27037",

"syncSourceId" : 2,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "127.0.0.1:27037",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 536,

"optime" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1688472149, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2023-07-04T12:02:29Z"),

"optimeDurableDate" : ISODate("2023-07-04T12:02:29Z"),

"lastHeartbeat" : ISODate("2023-07-04T12:02:31.892Z"),

"lastHeartbeatRecv" : ISODate("2023-07-04T12:02:32.428Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "127.0.0.1:27017",

"syncSourceHost" : "127.0.0.1:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1688472149, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688472149, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

The set currently has three members; a fourth can now be added

crushlinux:PRIMARY> rs.add("127.0.0.1:27047")

{

"ok" : 1,

"operationTime" : Timestamp(1688518763, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688518763, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Checking rs.status() again shows that the member has been added

"_id" : 3,

"name" : "127.0.0.1:27047",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 2,

"optime" : {

"ts" : Timestamp(1688518763, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1688518763, 1),

"t" : NumberLong(1)

Remove the member again; "ok" : 1 confirms that it was removed

crushlinux:PRIMARY> rs.remove("127.0.0.1:27047")

{

"ok" : 1,

"operationTime" : Timestamp(1688518882, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688518882, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Simulate a failure by killing the primary

[root@localhost ~]# ps aux | grep mongod

root 70115 0.2 3.7 4173628 69200 ? Sl 19:49 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb1.conf

root 70141 0.2 3.1 4123824 58100 ? Sl 19:49 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb2.conf

root 70167 0.2 3.0 4151488 56608 ? Sl 19:49 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb3.conf

root 70193 0.2 3.0 1207572 57508 ? Sl 19:49 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb4.conf

root 70466 0.0 0.0 112824 980 pts/1 R+ 20:14 0:00 grep --color=auto mongod

[root@localhost ~]# kill -9 70115

[root@localhost ~]# ps aux | grep mongod    # check again: the killed process is gone

root 70141 0.2 3.0 4123824 57500 ? Sl 19:49 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb2.conf

root 70167 0.2 3.0 4151488 57172 ? Sl 19:49 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb3.conf

root 70193 0.2 3.0 1207572 57516 ? Sl 19:49 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb4.conf

root 70469 0.0 0.0 112824 980 pts/1 R+ 20:16 0:00 grep --color=auto mongod

Log in again

[root@localhost ~]# mongo    # the default port 27017 can no longer be reached

MongoDB shell version v4.0.6

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

2023-07-04T20:18:48.202+0800 E QUERY [js] Error: couldn't connect to server 127.0.0.1:27017, connection attempt failed: SocketException: Error connecting to 127.0.0.1:27017 :: caused by :: Connection refused :

connect@src/mongo/shell/mongo.js:343:13

@(connect):1:6

exception: connect failed

[root@localhost ~]# mongo --port 27027    # log in to mongodb2

Check the status

crushlinux:SECONDARY> rs.status()
{
"set" : "crushlinux",
"date" : ISODate("2023-07-05T01:04:24.846Z"),
"myState" : 2,
"term" : NumberLong(2),
"syncingTo" : "127.0.0.1:27037",
"syncSourceHost" : "127.0.0.1:27037",
"syncSourceId" : 2,
"heartbeatIntervalMillis" : NumberLong(2000),
"optimes" : {
"lastCommittedOpTime" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) },
"readConcernMajorityOpTime" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) },
"appliedOpTime" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) },
"durableOpTime" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) }
},
"members" : [
{
"_id" : 0,
"name" : "127.0.0.1:27017",
"health" : 0,    // the former primary is down
"state" : 8,
"stateStr" : "(not reachable/healthy)",
"uptime" : 0,
"optime" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
"optimeDurable" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
"optimeDate" : ISODate("1970-01-01T00:00:00Z"),
"optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"),
"lastHeartbeat" : ISODate("2023-07-05T01:04:24.281Z"),
"lastHeartbeatRecv" : ISODate("2023-07-05T01:03:14.004Z"),
"pingMs" : NumberLong(0),
"lastHeartbeatMessage" : "Error connecting to 127.0.0.1:27017 :: caused by :: Connection refused",
"syncingTo" : "",
"syncSourceHost" : "",
"syncSourceId" : -1,
"infoMessage" : "",
"configVersion" : -1
},
{
"_id" : 1,
"name" : "127.0.0.1:27027",
"health" : 1,
"state" : 2,
"stateStr" : "SECONDARY",
"uptime" : 665,
"optime" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) },
"optimeDate" : ISODate("2023-07-05T01:04:17Z"),
"syncingTo" : "127.0.0.1:27037",
"syncSourceHost" : "127.0.0.1:27037",
"syncSourceId" : 2,
"infoMessage" : "",
"configVersion" : 8,
"self" : true,
"lastHeartbeatMessage" : ""
},
{
"_id" : 2,
"name" : "127.0.0.1:27037",
"health" : 1,    // 27037 has been elected primary
"state" : 1,
"stateStr" : "PRIMARY",
"uptime" : 515,
"optime" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) },
"optimeDurable" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) },
"optimeDate" : ISODate("2023-07-05T01:04:17Z"),
"optimeDurableDate" : ISODate("2023-07-05T01:04:17Z"),
"lastHeartbeat" : ISODate("2023-07-05T01:04:24.276Z"),
"lastHeartbeatRecv" : ISODate("2023-07-05T01:04:23.755Z"),
"pingMs" : NumberLong(0),
"lastHeartbeatMessage" : "",
"syncingTo" : "",
"syncSourceHost" : "",
"syncSourceId" : -1,
"infoMessage" : "",
"electionTime" : Timestamp(1688519005, 1),
"electionDate" : ISODate("2023-07-05T01:03:25Z"),
"configVersion" : 8
},
{
"_id" : 3,
"name" : "127.0.0.1:27047",
"health" : 1,
"state" : 2,
"stateStr" : "SECONDARY",
"uptime" : 148,
"optime" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) },
"optimeDurable" : { "ts" : Timestamp(1688519057, 1), "t" : NumberLong(2) },
"optimeDate" : ISODate("2023-07-05T01:04:17Z"),
"optimeDurableDate" : ISODate("2023-07-05T01:04:17Z"),
"lastHeartbeat" : ISODate("2023-07-05T01:04:24.277Z"),
"lastHeartbeatRecv" : ISODate("2023-07-05T01:04:24.132Z"),
"pingMs" : NumberLong(0),
"lastHeartbeatMessage" : "",
"syncingTo" : "127.0.0.1:27037",
"syncSourceHost" : "127.0.0.1:27037",
"syncSourceId" : 2,
"infoMessage" : "",
"configVersion" : 8
}
],
"ok" : 1,
"operationTime" : Timestamp(1688519057, 1),
"$clusterTime" : {
"clusterTime" : Timestamp(1688519057, 1),
"signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
}
}

Restart the failed former primary

[root@localhost ~]# /etc/init.d/mongodb mongodb1 start

about to fork child process, waiting until server is ready for connections.

forked process: 1939

child process started successfully, parent exiting

It does not become primary again; it rejoins the set as a secondary

"_id" : 0,

"name" : "127.0.0.1:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 85,

"optime" : {

"ts" : Timestamp(1688519397, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1688519397, 1),

"t" : NumberLong(2)

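Make the current member ineligible for election for 30 seconds: if the primary goes down within that window, this member will not stand for election as the new primary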

crushlinux:SECONDARY> rs.freeze(30)

{

"ok" : 1,

"operationTime" : Timestamp(1688519879, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688519879, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

crushlinux:SECONDARY> exit

bye

[root@localhost ~]# ps aux | grep mongod

root 1510 0.3 6.4 3961300 64296 ? Sl 08:53 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb2.conf

root 1562 0.2 6.9 3965260 69428 ? Sl 08:53 0:04 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb4.conf

root 2055 0.3 8.5 4005364 85432 ? Sl 09:16 0:00 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb3.conf

root 2144 0.0 0.0 112824 980 pts/0 R+ 09:18 0:00 grep --color=auto mongod

[root@localhost ~]# kill -9 2055    # kill the current primary

Now 27027 has been elected primary

"_id" : 1,

"name" : "127.0.0.1:27027",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 198,

"optime" : {

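How elections work

(1) Member types: standard members, passive members, and arbiters

- Only standard members can be elected as the active (primary) node; they also vote.

- Passive members hold a full copy of the data and vote, but can never become the active node.

- Arbiters replicate no data and can never become the active node; they only vote.

(2) Standard versus passive members

The priority value decides: a member with a higher priority is a standard member, and a member with the lowest priority, 0, is a passive member.

(3) Election rules

priority is a value from 0 to 1000. As a simplified model, the candidate with the most votes wins and a higher priority acts like a head start of up to 1000 extra votes. Strictly speaking, each member casts at most one vote, and priority makes the set prefer a higher-priority eligible member rather than literally adding votes.

Election result: the candidate with the most votes wins; if the votes are tied, the member with the newest data wins.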
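Independently of priority, a candidate can only become primary if it receives votes from a majority of the voting members, which is why an arbiter is useful when the number of data-bearing members is even. The arithmetic is sketched below; `majority` and `can_elect` are hypothetical helper names introduced for illustration.

```shell
#!/bin/bash
# majority N: votes needed to win an election among N voting members.
majority() {
    echo $(( $1 / 2 + 1 ))
}

# can_elect TOTAL UP: succeed if UP reachable voters form a majority of TOTAL.
can_elect() {
    [ "$2" -ge "$(majority "$1")" ]
}

for n in 3 4 5; do
    echo "$n voting members -> $(majority "$n") votes needed"
done

# Four voters with two of them down: no majority, so no primary.
if can_elect 4 2; then echo "primary possible"; else echo "no primary"; fi
```

A four-member set therefore tolerates the loss of only one voting member, the same as a three-member set.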

Redefine the cluster and make 27047 the arbiter

Configure the members

[root@localhost ~]# /etc/init.d/mongodb mongodb4 stop

killing process with pid: 2887

[root@localhost ~]# /etc/init.d/mongodb mongodb3 stop

killing process with pid: 2814

[root@localhost ~]# /etc/init.d/mongodb mongodb2 stop

killing process with pid: 2354

[root@localhost ~]# /etc/init.d/mongodb mongodb1 stop

killing process with pid: 2180

[root@localhost ~]# /etc/init.d/mongodb mongodb1 start

about to fork child process, waiting until server is ready for connections.

forked process: 3045

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb2 start

about to fork child process, waiting until server is ready for connections.

forked process: 3101

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb3 start

about to fork child process, waiting until server is ready for connections.

forked process: 3159

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb4 start

about to fork child process, waiting until server is ready for connections.

forked process: 3225

child process started successfully, parent exiting

[root@localhost ~]# mongo

Define the new configuration; "arbiterOnly":true marks the arbiter:

crushlinux:PRIMARY> cfg={"_id":"crushlinux","protocolVersion":1,"members":[{"_id":0,"host":"127.0.0.1:27017","priority":100}, {"_id":1,"host":"127.0.0.1:27027","priority":100}, {"_id":2,"host":"127.0.0.1:27037","priority":0}, {"_id":3,"host":"127.0.0.1:27047","arbiterOnly":true}]}

First remove 27047 as a regular member:

crushlinux:PRIMARY> rs.remove("127.0.0.1:27047")

{

"ok" : 1,

"operationTime" : Timestamp(1688522558, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688522558, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Then add it back as an arbiter:

crushlinux:PRIMARY> rs.addArb("127.0.0.1:27047")

{

"ok" : 1,

"operationTime" : Timestamp(1688522567, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688522567, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Apply the new configuration:

crushlinux:PRIMARY> rs.reconfig(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1688522571, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688522571, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

crushlinux:PRIMARY> rs.isMaster()

{

"hosts" : [    // standard members

"127.0.0.1:27017",

"127.0.0.1:27027"

],

"passives" : [    // passive members

"127.0.0.1:27037"

],

"arbiters" : [    // arbiter

"127.0.0.1:27047"

],
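The three lists in this output follow from each member's settings: a member with arbiterOnly set is listed under arbiters, a member with priority 0 under passives, and every other member under hosts. The sketch below applies that mapping to the settings from the cfg used above; `member_role` is a hypothetical helper, and the `-` stands for the arbiter's priority, which is not consulted.

```shell
#!/bin/bash
# member_role PRIORITY ARBITER_ONLY: print the isMaster list in which a
# member with these settings appears (hosts, passives or arbiters).
member_role() {
    local priority=$1 arbiter_only=$2
    if [ "$arbiter_only" = "true" ]; then
        echo arbiters
    elif [ "$priority" -eq 0 ]; then
        echo passives
    else
        echo hosts
    fi
}

# Columns: port, priority, arbiterOnly (values taken from the article's cfg).
while read -r port priority arbiter_only; do
    echo "127.0.0.1:$port -> $(member_role "$priority" "$arbiter_only")"
done <<'EOF'
27017 100 false
27027 100 false
27037 0 false
27047 - true
EOF
```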

[root@localhost ~]# /etc/init.d/mongodb mongodb4 start    # mongodb4 has to be started once more

Simulate a failure

[root@localhost ~]# /etc/init.d/mongodb mongodb1 stop

Check the status

[root@localhost ~]# mongo --port 27027

crushlinux:PRIMARY> rs.status()

"_id" : 1,

"name" : "127.0.0.1:27027",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1283,

Now take mongodb2 down as well

[root@localhost ~]# /etc/init.d/mongodb mongodb2 stop

killing process with pid: 3101

[root@localhost ~]# mongo --port 27027    # 27027 is down, so the connection fails

MongoDB shell version v4.0.6

connecting to: mongodb://127.0.0.1:27027/?gssapiServiceName=mongodb

2023-07-05T10:16:26.614+0800 E QUERY [js] Error: couldn't connect to server 127.0.0.1:27027, connection attempt failed: SocketException: Error connecting to 127.0.0.1:27027 :: caused by :: Connection refused :

connect@src/mongo/shell/mongo.js:343:13

@(connect):1:6

exception: connect failed

[root@localhost ~]# mongo --port 27037    # log in through the passive member

"_id" : 0,

"name" : "127.0.0.1:27017",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDurable" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("2023-07-05T02:16:32.738Z"),

"lastHeartbeatRecv" : ISODate("2023-07-05T02:16:13.259Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "Error connecting to 127.0.0.1:27017 :: caused by :: Connection refused",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : -1

},

{

"_id" : 1,

"name" : "127.0.0.1:27027",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDurable" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("2023-07-05T02:16:32.738Z"),

"lastHeartbeatRecv" : ISODate("2023-07-05T02:16:20.763Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "Error connecting to 127.0.0.1:27027 :: caused by :: Connection refused",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : -1

},

{

"_id" : 2,

"name" : "127.0.0.1:27037",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",    // a passive member cannot become the active node

"uptime" : 1578,

"optime" : {

"ts" : Timestamp(1688523374, 1),

"t" : NumberLong(9)

},

"optimeDate" : ISODate("2023-07-05T02:16:14Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"configVersion" : 11,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 3,

"name" : "127.0.0.1:27047",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 303,

"lastHeartbeat" : ISODate("2023-07-05T02:16:32.718Z"),

"lastHeartbeatRecv" : ISODate("2023-07-05T02:16:31.594Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 11

Restart the stopped instances, then allow reads on a secondary

crushlinux:SECONDARY> show dbs

2023-07-05T10:27:00.727+0800 E QUERY [js] Error: listDatabases failed:{

"operationTime" : Timestamp(1688524017, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk",

"$clusterTime" : {

"clusterTime" : Timestamp(1688524017, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

Mongo.prototype.getDBs@src/mongo/shell/mongo.js:139:1

shellHelper.show@src/mongo/shell/utils.js:882:13

shellHelper@src/mongo/shell/utils.js:766:15

@(shellhelp2):1:1

rs.slaveOk() allows this connection to read from the secondary:

crushlinux:SECONDARY> rs.slaveOk()

crushlinux:SECONDARY> show dbs

admin 0.078GB

config 0.078GB

local 1.078GB

rs.printSlaveReplicationInfo() shows, for each secondary, the time it has synced to and how far it lags behind the primary.

crushlinux:SECONDARY> rs.printSlaveReplicationInfo()

source: 127.0.0.1:27027

syncedTo: Wed Jul 05 2023 10:28:17 GMT+0800 (CST)

0 secs (0 hrs) behind the primary

source: 127.0.0.1:27037

syncedTo: Wed Jul 05 2023 10:28:17 GMT+0800 (CST)

0 secs (0 hrs) behind the primary

rs.printReplicationInfo() shows the configured oplog size and the time range that the oplog currently covers.

crushlinux:SECONDARY> rs.printReplicationInfo()

configured oplog size: 990MB

log length start to end: 5561secs (1.54hrs)

oplog first event time: Wed Jul 05 2023 08:55:46 GMT+0800 (CST)

oplog last event time: Wed Jul 05 2023 10:28:27 GMT+0800 (CST)

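now: Wed Jul 05 2023 10:28:29 GMT+0800 (CST)

Replica set management

In production, the default oplog size often cannot keep up with update-heavy workloads. The oplog size should therefore be estimated when the replica set is first configured; in older versions, changing it requires restarting the primary.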
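The figures that rs.printReplicationInfo() reports are simple arithmetic on the oplog: the window is the time between the first and last entries, and the configured size is the capped collection's maximum size expressed in MB. The sketch below reproduces values that appear in this article; the helper names are hypothetical and awk is assumed to be available.

```shell
#!/bin/bash
# secs_to_hours SECS: oplog window in hours, rounded to two decimals.
secs_to_hours() {
    awk -v s="$1" 'BEGIN { printf "%.2f\n", s / 3600 }'
}

# bytes_to_mb BYTES: size in MB (1 MB = 1024 * 1024 bytes).
bytes_to_mb() {
    awk -v b="$1" 'BEGIN { printf "%.5f\n", b / (1024 * 1024) }'
}

secs_to_hours 5561        # the 5561secs window reported as 1.54hrs
bytes_to_mb 1038090240    # maxSize of the default oplog in this article (990MB)
bytes_to_mb 102400000     # the size passed to convertToCapped in this article
```

Dividing the oplog size by the window gives a rough write rate, which helps when estimating how large the oplog must be to cover a planned maintenance window.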

Switch to the local database:

crushlinux:PRIMARY> use local

switched to db local

List the collections in this database:

crushlinux:PRIMARY> show collections

oplog.rs

replset.election

replset.minvalid

replset.oplogTruncateAfterPoint

startup_log

Get the statistics of the oplog.rs collection:

crushlinux:PRIMARY> db.oplog.rs.stats()

{

"ns" : "local.oplog.rs",

"size" : 57052,

"count" : 490,

"avgObjSize" : 116,

"numExtents" : 1,

"storageSize" : 1038090240,

"lastExtentSize" : 1038090240,

"paddingFactor" : 1,

"paddingFactorNote" : "paddingFactor is unused and unmaintained in 3.0. It remains hard coded to 1.0 for compatibility only.",

"userFlags" : 1,

"capped" : true,

"max" : NumberLong("9223372036854775807"),

"maxSize" : 1038090240,

"nindexes" : 0,

"totalIndexSize" : 0,

"indexSizes" : {

},

"ok" : 1,

"operationTime" : Timestamp(1688524217, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688524217, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Convert oplog.rs into a capped collection of 102400000 bytes (about 97.7 MB):

crushlinux:PRIMARY> db.runCommand({"convertToCapped":"oplog.rs","size":102400000})

{

"ok" : 1,

"operationTime" : Timestamp(1688524237, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688524237, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Print the replication info again; the configured oplog size has changed:

crushlinux:SECONDARY> rs.printReplicationInfo()

configured oplog size: 97.65625MB

log length start to end: 6102secs (1.7hrs)

oplog first event time: Wed Jul 05 2023 08:55:46 GMT+0800 (CST)

oplog last event time: Wed Jul 05 2023 10:37:28 GMT+0800 (CST)

now: Wed Jul 05 2023 10:37:31 GMT+0800 (CST)

Get the oplog statistics again, including the namespace, size, number of records, and average object size:

crushlinux:SECONDARY> db.oplog.rs.stats()

{

"ns" : "local.oplog.rs",

"size" : 61964,

"count" : 533,

"avgObjSize" : 116,

"numExtents" : 1,

"storageSize" : 102400000,

"lastExtentSize" : 102400000,

"paddingFactor" : 1,

"paddingFactorNote" : "paddingFactor is unused and unmaintained in 3.0. It remains hard coded to 1.0 for compatibility only.",

"userFlags" : 1,

"capped" : true,

"max" : NumberLong("9223372036854775807"),

"maxSize" : 102400000,

"nindexes" : 0,

"totalIndexSize" : 0,

"indexSizes" : {

},

"ok" : 1,

"operationTime" : Timestamp(1688524658, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1688524658, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Deploy replication with authentication

crushlinux:PRIMARY> use admin

switched to db admin

crushlinux:PRIMARY> db.createUser({"user":"root","pwd":"123456","roles":["root"]})

Successfully added user: { "user" : "root", "roles" : [ "root" ] }

This creates a user named "root" with the password "123456" and grants it the "root" role; the output confirms that the user was created.

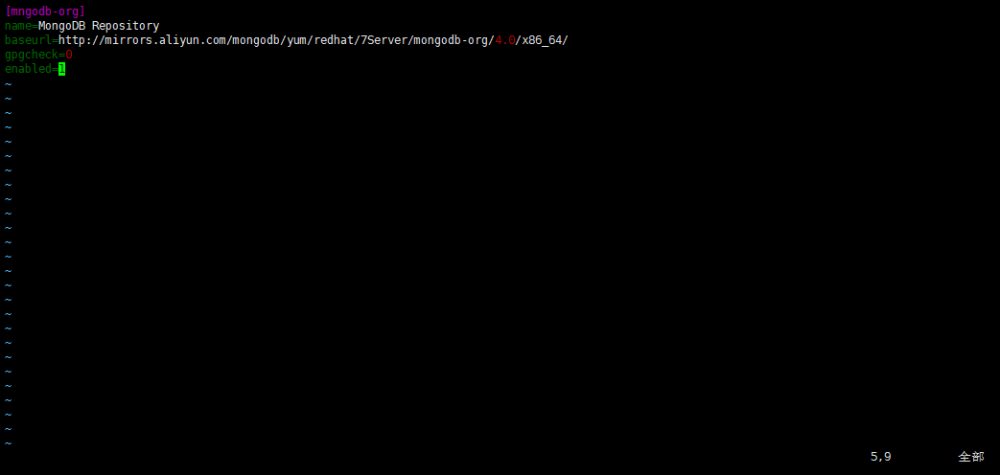

[root@localhost ~]# cat << END >> /usr/local/mongodb/conf/mongodb1.conf

> clusterAuthMode=keyFile

> keyFile=/usr/local/mongodb/conf/crushlinuxkey

> END

[root@localhost ~]# cat << END >> /usr/local/mongodb/conf/mongodb2.conf

> clusterAuthMode=keyFile

> keyFile=/usr/local/mongodb/conf/crushlinuxkey

> END

[root@localhost ~]# cat << END >> /usr/local/mongodb/conf/mongodb3.conf

> clusterAuthMode=keyFile

> keyFile=/usr/local/mongodb/conf/crushlinuxkey

> END

[root@localhost ~]# cat << END >> /usr/local/mongodb/conf/mongodb4.conf

> clusterAuthMode=keyFile

> keyFile=/usr/local/mongodb/conf/crushlinuxkey

> END

Each of the commands above appends the following two lines to the corresponding configuration file (mongodb1.conf to mongodb4.conf): clusterAuthMode=keyFile and keyFile=/usr/local/mongodb/conf/crushlinuxkey. They set the authentication mode between cluster members to keyFile and give the path of the key file.

[root@localhost ~]# echo "123456 key" > /usr/local/mongodb/conf/crushlinuxkey    # create the key file crushlinuxkey with the content "123456 key"

[root@localhost ~]# chmod 600 /usr/local/mongodb/conf/crushlinuxkey    # readable and writable only by the owner, root
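A short, guessable string like the one above is fine for a lab, but the key file acts as the shared password between the members. The sketch below generates a random one instead; `make_keyfile` is a hypothetical helper, the demo path is a temporary file, and 756 random bytes are used so that the base64 text (1008 characters) stays within MongoDB's limit of 1024 characters for a key.

```shell
#!/bin/bash
# make_keyfile PATH: write 756 random bytes, base64-encoded, to PATH and
# restrict it to its owner (mongod rejects key files that group or others
# can access).
make_keyfile() {
    local path=$1
    ( umask 077 && head -c 756 /dev/urandom | base64 > "$path" )
    chmod 400 "$path"
}

# Demo on a scratch file; a real deployment would use the keyFile path from
# the configuration, and every member must use the same key.
demo_key=$(mktemp)
make_keyfile "$demo_key"
ls -l "$demo_key"
```

In this article all four instances run on one host and point at the same path, so a single file is enough.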

Restart all four MongoDB instances

[root@localhost ~]# /etc/init.d/mongodb mongodb1 restart

killing process with pid: 3933

about to fork child process, waiting until server is ready for connections.

forked process: 4168

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb2 restart

killing process with pid: 4054

about to fork child process, waiting until server is ready for connections.

forked process: 4312

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb3 restart

killing process with pid: 3159

about to fork child process, waiting until server is ready for connections.

forked process: 4419

child process started successfully, parent exiting

[root@localhost ~]# /etc/init.d/mongodb mongodb4 restart

killing process with pid: 3535

about to fork child process, waiting until server is ready for connections.

forked process: 4508

child process started successfully, parent exiting

[root@localhost ~]# mongo --port 27017

crushlinux:PRIMARY> use admin

switched to db admin

crushlinux:PRIMARY> db.auth

function () {

var ex;

try {

this._authOrThrow.apply(this, arguments);

} catch (ex) {

print(ex);

return 0;

}

return 1;

}

Authenticate with the user name and password; a return value of 1 means success:

crushlinux:PRIMARY> db.auth("root","123456")

1

crushlinux:PRIMARY> rs.status()

{

"set" : "crushlinux",

"date" : ISODate("2023-07-05T03:00:17.176Z"),

"myState" : 1,

"term" : NumberLong(15),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1688526009, 1),

"t" : NumberLong(15)