Paper: https://arxiv.org/pdf/2202.03046

I haven't read the paper closely yet; I'll come back and fill in the theory when I have time.

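The paper builds on FaceShifter as its baseline and proposes:

- a new eye-based loss;

- a new face mask smoothing method;

- a new pipeline for face swapping in video;

- a new stabilization technique that reduces face jitter between adjacent frames and in the super-resolution (SR) stage.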

Code: https://github.com/ai-forever/ghost

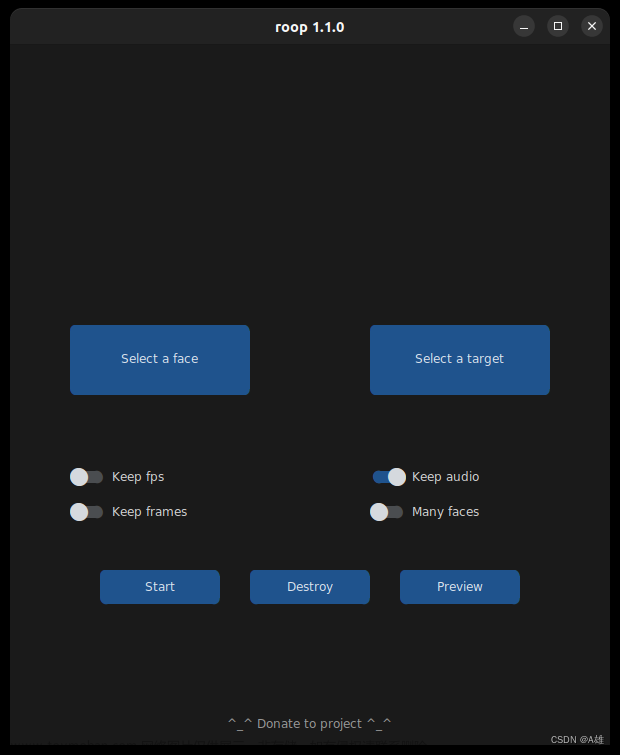

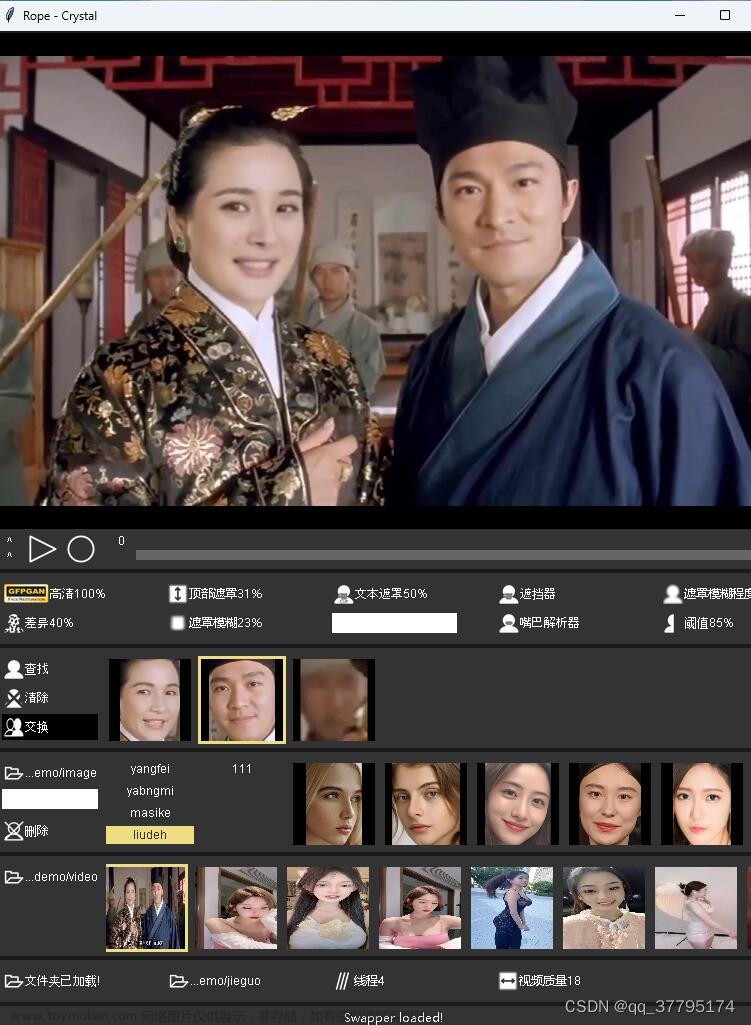

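The repo supports four modes: swapping a single face in a video, swapping multiple faces in a video, swapping a face in an image, and training a face-swap model.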

My Dockerfile is as follows:

```dockerfile
FROM pytorch/pytorch:1.6.0-cuda10.1-cudnn7-devel

# Disable the CUDA apt source and point the Ubuntu sources at the Aliyun mirror
RUN echo "" > /etc/apt/sources.list.d/cuda.list
RUN sed -i "s@/archive.ubuntu.com/@/mirrors.aliyun.com/@g" /etc/apt/sources.list
RUN sed -i "s@/security.ubuntu.com/@/mirrors.aliyun.com/@g" /etc/apt/sources.list

# System packages
RUN apt-get update --fix-missing && apt-get install -y fontconfig --fix-missing
RUN apt-get install -y vim
RUN apt-get install -y python3.7 python3-pip

# Set the container timezone to Asia/Shanghai
RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone

# Python dependencies (Tsinghua PyPI mirror).
# "scikit-learn" replaces the deprecated "sklearn" alias package on PyPI.
RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple scipy matplotlib seaborn h5py scikit-learn numpy==1.20.3 pandas==1.3.5
RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple opencv-python
# libGL is required by opencv-python
RUN apt-get install libgl1-mesa-glx -y
RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple onnx==1.9.0 onnxruntime-gpu==1.4.0
RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple mxnet-cu101mkl
RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple scikit-image insightface==0.2.1 requests==2.25.1 kornia==0.5.4 dill wandb
RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple protobuf==3.19.0
RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple ffmpeg
# The ffmpeg binary itself comes from apt
RUN apt-get install ffmpeg -y

WORKDIR /home

# docker build -t wgs-torch/faceswap:ghost -f ./dk/Dockerfile .
```
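After building, it can be worth checking that the main Python packages import inside the image and that the GPU is visible. The following is only a sketch under a few assumptions: `RUN_DOCKER` and `CHECK` are names introduced here for illustration, `--gpus all` is used instead of a specific device, and the import list is a guess at what inference needs rather than something taken from the GHOST repo. By default it only prints the command it would run.

```shell
#!/bin/bash
# Image tag taken from the build command above.
IMAGE="wgs-torch/faceswap:ghost"

# Hypothetical smoke test: import a few of the packages the Dockerfile installs
# and report the torch version and CUDA availability.
CHECK='import torch, cv2, onnxruntime, insightface, kornia; print(torch.__version__, torch.cuda.is_available())'

if [ "${RUN_DOCKER:-0}" = "1" ]; then
    # Build from the repository root, then run the check in a throwaway container.
    docker build -t "$IMAGE" -f ./dk/Dockerfile . &&
        docker run --rm --gpus all "$IMAGE" python3 -c "$CHECK"
else
    # Dry run: only show what would be executed.
    echo "docker run --rm --gpus all $IMAGE python3 -c \"$CHECK\""
fi
```

Run it with `RUN_DOCKER=1` to actually build and test; if an import fails, the traceback points at the package to reinstall.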

Shell script:

```shell
#!/bin/bash

today=$(date -d "now" +%Y-%m-%d)
yesterday=$(date -d "yesterday" +%Y-%m-%d)

cd /data/wgs/face_swap/ghost

## Single face in the video
#SOURCE_PATHA="\
# --source_paths ./examples/images/p1.jpg \
# "
#
#VIDEO_PATH="\
# --target_video ./examples/videos/inVideo1.mp4 \
# --out_video_name ./examples/results/o1_1_1.mp4 \
# "

# Multiple faces in the video
SOURCE_PATHA="\
--source_paths ./examples/images/p1.jpg ./examples/images/p2.jpg \
--target_faces_paths ./examples/images/tgt1.png ./examples/images/tgt2.png \
"

VIDEO_PATH="\
--target_video ./examples/videos/dirtydancing.mp4 \
--out_video_name ./examples/results/o_multi.mp4 \
"

options=" \
$SOURCE_PATHA \
$VIDEO_PATH \
"

# Notes:
# - The relative paths above are resolved inside the container, against its
#   working directory, not against the host directory entered with cd.
# - /home/log (/data/wgs/face_swap/log on the host) must exist before the run,
#   otherwise the log redirections fail.
docker run -d --gpus '"device=1"' \
--rm -it --name face_swap \
--shm-size 15G \
-v /data/wgs/face_swap:/home \
wgs-torch/faceswap:ghost \
sh -c "python3 /home/ghost/inference.py $options 1>>/home/log/ghost.log 2>>/home/log/ghost.err"

# nohup sh /data/wgs/face_swap/dk/ghost.sh &
```
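Rather than commenting blocks in and out to switch between the single-face and multi-face cases, the flag sets could be chosen by an argument. This is a minimal sketch, not part of GHOST: `build_options` and the `single`/`multi` mode names are made up here, while the flags and example paths are the ones used in the script above.

```shell
#!/bin/bash
# Hypothetical helper: print the inference.py flags for one of the two video modes.
build_options() {
    mode="$1"
    case "$mode" in
        single)
            # One source face, applied to the face found in the target video.
            echo "--source_paths ./examples/images/p1.jpg" \
                 "--target_video ./examples/videos/inVideo1.mp4" \
                 "--out_video_name ./examples/results/o1_1_1.mp4"
            ;;
        multi)
            # Several source faces, each paired with a target face crop.
            echo "--source_paths ./examples/images/p1.jpg ./examples/images/p2.jpg" \
                 "--target_faces_paths ./examples/images/tgt1.png ./examples/images/tgt2.png" \
                 "--target_video ./examples/videos/dirtydancing.mp4" \
                 "--out_video_name ./examples/results/o_multi.mp4"
            ;;
        *)
            echo "unknown mode: $mode" >&2
            return 1
            ;;
    esac
}

# Default to the multi-face case when no argument is given.
options=$(build_options "${1:-multi}") || exit 1
echo "$options"
```

The resulting `$options` string can then be passed to the `docker run ... sh -c "python3 /home/ghost/inference.py $options ..."` command in the same way as before.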
