- 尚硅谷大数据技术-教程-学习路线-笔记汇总表【课程资料下载】

- 视频地址:尚硅谷Docker实战教程(docker教程天花板)_哔哩哔哩_bilibili

- 尚硅谷Docker实战教程-笔记01【基础篇,Docker理念简介、官网介绍、平台入门图解、平台架构图解】

- 尚硅谷Docker实战教程-笔记02【基础篇,Docker安装、镜像加速器配置】

- 尚硅谷Docker实战教程-笔记03【基础篇,Docker常用命令】

- 尚硅谷Docker实战教程-笔记04【基础篇,Docker镜像】

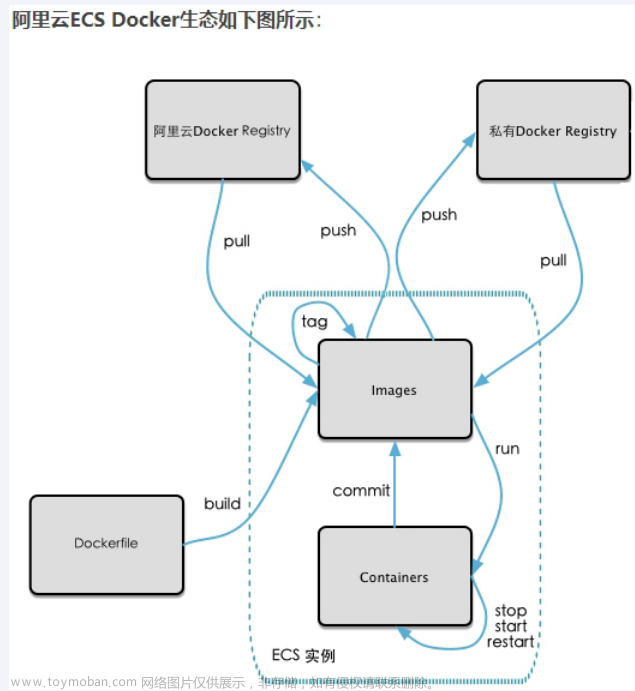

尚硅谷Docker实战教程-笔记05【基础篇,Docker本地镜像发布到阿里云与私有库】

尚硅谷Docker实战教程-笔记06【基础篇,Docker容器数据卷】

尚硅谷Docker实战教程-笔记07【基础篇,Docker常规安装简介】

尚硅谷Docker实战教程-笔记08【高级篇,Docker复杂安装详说】

尚硅谷Docker实战教程-笔记09【高级篇,DockerFile解析】

尚硅谷Docker实战教程-笔记10【高级篇,Docker微服务实战】

尚硅谷Docker实战教程-笔记11【高级篇,Docker网络】

尚硅谷Docker实战教程-笔记12【高级篇,Docker-compose容器编排】

尚硅谷Docker实战教程-笔记13【高级篇,Docker轻量级可视化工具Portainer】

尚硅谷Docker实战教程-笔记14【高级篇,Docker容器监控之CAdvisor+InfluxDB+Granfana】

尚硅谷Docker实战教程-笔记15【高级篇,Docker终章总结】

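Contents

2. Advanced Part (Big-Tech Level)

1. Complex Installations on Docker in Detail

P040 [40_Introduction to the advanced part] 03:15

P041 [41_MySQL master-slave replication (Docker version)] 20:48

P042 [42_Distributed storage: the hash-modulo algorithm] 09:39

P043 [43_Distributed storage: the consistent hashing algorithm] 13:55

P044 [44_Distributed storage: the hash slot algorithm] 10:19

P045 [45_3-master/3-replica Redis cluster configuration, part 1] 08:40

P046 [46_3-master/3-replica Redis cluster configuration, part 2] 07:05

P047 [47_3-master/3-replica Redis cluster configuration, part 3] 02:56

P048 [48_Redis cluster read/write errors explained] 05:03

P049 [49_Redis cluster reads/writes with routing enabled: the correct way] 03:13

P050 [50_Viewing cluster information with cluster check] 02:26

P051 [51_Master-replica failover and migration] 09:38

P052 [52_Master-replica scale-out: requirements analysis] 03:36

P053 [53_Master-replica scale-out: demo] 16:55

P054 [54_Master-replica scale-in: requirements analysis] 02:56

P055 [55_Master-replica scale-in: demo] 08:50

P056 [56_Distributed storage cases: summary] 05:34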

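2. Advanced Part (Big-Tech Level)

1. Complex Installations on Docker in Detail

P040 [40_Introduction to the advanced part] 03:15

P041 [41_MySQL master-slave replication (Docker version)] 20:48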

docker run -p 3307:3306 --name mysql-master \
-v /opt/docker/mysql/mysql-master/log:/var/log/mysql \
-v /opt/docker/mysql/mysql-master/data:/var/lib/mysql \
-v /opt/docker/mysql/mysql-master/conf:/etc/mysql \
-e MYSQL_ROOT_PASSWORD=root \
-d mysql:5.7

Master configuration file /opt/docker/mysql/mysql-master/conf/my.cnf:

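[mysqld]
## Set server_id; it must be unique within the same LAN
server_id=101
## Database that is not replicated
binlog-ignore-db=mysql
## Enable binary logging
log-bin=mall-mysql-bin
## Memory used to cache binary log data for each transaction
binlog_cache_size=1M
## Binary log format (mixed, statement, row)
binlog_format=mixed
## Days after which binary logs are purged; the default 0 disables automatic purging
expire_logs_days=7
## Skip all replication errors, or only the listed error codes, so that replication on the slave does not stop.
## E.g. error 1062 is a duplicate primary key; error 1032 occurs when master and slave data are inconsistent.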
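slave_skip_errors=1062

Restart the master (docker restart mysql-master) so that my.cnf takes effect, then start the slave container. The slave needs its own my.cnf under /opt/docker/mysql/mysql-slave/conf with a different server_id.

docker run -p 3308:3306 --name mysql-slave \
-v /opt/docker/mysql/mysql-slave/log:/var/log/mysql \
-v /opt/docker/mysql/mysql-slave/data:/var/lib/mysql \
-v /opt/docker/mysql/mysql-slave/conf:/etc/mysql \
-e MYSQL_ROOT_PASSWORD=root \
-d mysql:5.7

On the slave, point replication at the master. The account used here (slave / 123456) must already exist on the master with the REPLICATION SLAVE privilege. master_log_file and master_log_pos must be the File and Position values reported by show master status; on the master. Replace HOST_IP with the Docker host's IP address:

change master to master_host='HOST_IP', master_user='slave', master_password='123456', master_port=3307, master_log_file='mall-mysql-bin.000001', master_log_pos=617, master_connect_retry=30;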

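In this walkthrough the host is node001. The host name only works if node001 resolves inside the slave container:

change master to master_host='node001', master_user='slave', master_password='123456', master_port=3307, master_log_file='mall-mysql-bin.000001', master_log_pos=617, master_connect_retry=30;

Then start replication and check its status. Slave_IO_Running and Slave_SQL_Running should both be Yes:

start slave;

show slave status\G

P042 [42_Distributed storage: the hash-modulo algorithm] 09:39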

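200 million records means 200 million key-value pairs. A single machine cannot handle that, so the data must be distributed across several machines. Suppose 3 machines form a cluster. For every read or write, the target is computed as hash(key) % N, where N is the number of machines, and the result decides which node the data maps to.

Advantages: simple, blunt and effective. If you estimate the data volume and plan the nodes in advance (for example 3, 8 or 10 machines), the cluster can support the data for a period of time. The hash routes a fixed share of the requests to the same server. Each server therefore always handles (and keeps the state for) the same part of the requests, which gives load balancing plus divide-and-conquer.

Disadvantages: scaling the planned nodes out or in is troublesome. Whenever the number of nodes changes, in either direction, the mapping has to be recomputed. This is fine while the server count stays fixed. With elastic scaling or a machine failure, however, the modulus changes: hash(key) % 3 becomes hash(key) % ?. The remainders then change drastically, and the server selected by the formula becomes unpredictable. If one Redis machine goes down, the change in node count forces most of the data to be reshuffled under hash modulo.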
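One way to see the drawback is to count how many keys change node when N changes. The Python sketch below is illustrative only. It uses MD5 as the hash, which is an assumption because the notes do not name a hash function, and it uses hypothetical sample keys k0 to k9999.

```python
from __future__ import annotations

import hashlib


def node_for(key: str, n: int) -> int:
    """hash(key) % N: choose one of n nodes (MD5 is an illustrative hash choice)."""
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % n


def moved_fraction(keys: list[str], old_n: int, new_n: int) -> float:
    """Share of keys whose target node changes when the node count goes old_n -> new_n."""
    moved = sum(1 for k in keys if node_for(k, old_n) != node_for(k, new_n))
    return moved / len(keys)


if __name__ == "__main__":
    sample_keys = [f"k{i}" for i in range(10_000)]  # hypothetical keys
    print(f"3 -> 2 nodes (one node down): {moved_fraction(sample_keys, 3, 2):.1%} of keys move")
    print(f"3 -> 4 nodes (scale-out):     {moved_fraction(sample_keys, 3, 4):.1%} of keys move")
```

With a uniform hash, roughly two thirds of the keys move when one of three nodes fails (3 to 2), and roughly three quarters move when a fourth node is added (3 to 4). A key keeps its node only when hash % 3 and hash % N happen to agree.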

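P043 [43_Distributed storage: the consistent hashing algorithm] 13:55

Background of consistent hashing

Consistent hashing was proposed at MIT in 1997. It was designed to solve the data-movement and mapping problem of distributed caches: when a machine goes down, the divisor changes and modulo-based placement no longer works.

The goal is to migrate as little data as possible when the number of nodes changes.

All storage nodes are placed on a hash ring whose start and end are joined. After hashing, each key walks clockwise from its position and is stored on the first node it meets.

When a node joins or leaves, only its clockwise successor on the ring is affected.

Advantages: adding or removing a node only affects the adjacent node in the clockwise direction. All other nodes are unaffected.

Disadvantages: the data distribution depends on node positions. The nodes are not evenly spread around the ring, so the stored data is not evenly distributed either.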
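The ring lookup can be sketched in a few lines of Python. This is a minimal illustration, not a production design. It assumes MD5 for placing both nodes and keys on a 2^32-position ring and uses hypothetical node names NodeA to NodeC. Each node gets a single point on the ring, so the sketch also exhibits the uneven distribution described above.

```python
from __future__ import annotations

import bisect
import hashlib

RING_SIZE = 2**32  # assumed ring of positions 0 .. 2^32 - 1


def ring_pos(name: str) -> int:
    """Place a node name or a key on the ring (MD5 is an illustrative choice)."""
    return int(hashlib.md5(name.encode("utf-8")).hexdigest(), 16) % RING_SIZE


class ConsistentHashRing:
    """Minimal consistent-hash ring: one point per node, no virtual nodes."""

    def __init__(self, nodes: list[str]) -> None:
        # (position, node) pairs kept sorted in clockwise order.
        self._ring = sorted((ring_pos(n), n) for n in nodes)

    def add(self, node: str) -> None:
        bisect.insort(self._ring, (ring_pos(node), node))

    def remove(self, node: str) -> None:
        self._ring.remove((ring_pos(node), node))

    def node_for(self, key: str) -> str:
        # First node at or clockwise after the key's position; "" sorts before any
        # node name, so a node sitting exactly at that position is included.
        i = bisect.bisect_left(self._ring, (ring_pos(key), ""))
        # Past the last node, wrap around to the first one.
        return self._ring[i % len(self._ring)][1]


if __name__ == "__main__":
    keys = [f"k{i}" for i in range(1000)]
    ring = ConsistentHashRing(["NodeA", "NodeB", "NodeC"])
    before = {k: ring.node_for(k) for k in keys}
    ring.remove("NodeB")  # simulate NodeB going down
    after = {k: ring.node_for(k) for k in keys}
    moved = [k for k in keys if before[k] != after[k]]
    print(f"{len(moved)} keys moved; all were on NodeB: {all(before[k] == 'NodeB' for k in moved)}")
    print("they all moved to:", {after[k] for k in moved})
```

Removing NodeB only moves the keys that were on NodeB, and they all go to its clockwise successor. Keys on the other nodes keep their placement.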

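P044 [44_Distributed storage: the hash slot algorithm] 10:19

1 Why it exists

A hash slot space is essentially an array: indices [0, 2^14 - 1] form the hash slot space.

2 What it does

It solves the uneven-distribution problem by adding another layer between data and nodes, called the hash slot, which manages the relationship between them. Nodes now hold slots, and slots hold data.

Slots address granularity: they make the unit of data coarser, which makes moving data easier.

Hashing addresses mapping: the hash of the key determines its slot, which makes data placement easy.

3 How many hash slots

A cluster has exactly 16384 slots, numbered 0-16383 (0 to 2^14 - 1). The slots are assigned to the master nodes of the cluster. No particular assignment policy is required, and you can choose which slot numbers go to which master. The cluster records the mapping between nodes and slots. With that in place, each key is hashed and the hash is taken modulo 16384. The remainder is the slot the key falls into: slot = CRC16(key) % 16384. Data is moved one slot at a time. Because the number of slots is fixed, this is easy to manage, which solves the data-movement problem.

Redis Cluster has 16384 built-in hash slots, which Redis maps roughly evenly onto the nodes. To store a key-value pair, Redis computes CRC16 of the key and takes the result modulo 16384. Every key therefore maps to a slot numbered 0-16383, and so to one node. In the course's example, keys A and B land on Node2 and key C lands on Node3.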
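The notes give the mapping as slot = CRC16(key) % 16384. The Redis Cluster specification identifies the CRC16 variant as XMODEM (polynomial 0x1021, initial value 0). The sketch below implements that variant in plain Python. It then maps each slot to a master using the three slot ranges from the cluster-creation output later in these notes. It deliberately ignores Redis hash tags ({...}), which the real implementation also honors, and the helper names are hypothetical.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM: polynomial 0x1021, initial value 0, no bit reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """slot = CRC16(key) % 16384 (hash tags such as {user1} are not handled here)."""
    return crc16_xmodem(key.encode("utf-8")) % 16384


# Slot ranges of the three masters, as printed when the cluster in these notes was created.
MASTERS = [
    (0, 5460, "192.168.10.101:6381"),
    (5461, 10922, "192.168.10.101:6382"),
    (10923, 16383, "192.168.10.101:6383"),
]


def master_for(key: str) -> str:
    """Return the address of the master whose slot range contains the key's slot."""
    slot = key_slot(key)
    for start, end, addr in MASTERS:
        if start <= slot <= end:
            return addr
    raise ValueError(f"slot {slot} is not covered by any master")


if __name__ == "__main__":
    for key in ["k1", "k2", "k3", "k4", "k5", "k6"]:
        print(key, key_slot(key), master_for(key))
```

For k1 this gives slot 12706, the same slot that appears in the MOVED error in the P048 transcript.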

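P045 [45_3-master/3-replica Redis cluster configuration, part 1] 08:40

Start six Redis 6.0.8 containers, one per port from 6381 to 6386. --net host uses the host's network stack, --privileged=true runs the container with extended privileges, -v mounts a host directory as /data, --cluster-enabled yes turns on cluster mode and --appendonly yes enables AOF persistence.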

docker run -d --name redis-node-1 --net host --privileged=true -v /opt/docker/redis/share/redis-node-1:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6381

docker run -d --name redis-node-2 --net host --privileged=true -v /opt/docker/redis/share/redis-node-2:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6382

docker run -d --name redis-node-3 --net host --privileged=true -v /opt/docker/redis/share/redis-node-3:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6383

docker run -d --name redis-node-4 --net host --privileged=true -v /opt/docker/redis/share/redis-node-4:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6384

docker run -d --name redis-node-5 --net host --privileged=true -v /opt/docker/redis/share/redis-node-5:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6385

docker run -d --name redis-node-6 --net host --privileged=true -v /opt/docker/redis/share/redis-node-6:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6386

[root@node001 ~]# docker run -d --name redis-node-1 --net host --privileged=true -v /opt/docker/redis/share/redis-node-1:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6381

9013d5714ac1dc943cc7bf0a074229f48d9904f861d72f436ee5dcf9b54c747c

[root@node001 ~]#

[root@node001 ~]# docker run -d --name redis-node-2 --net host --privileged=true -v /opt/docker/redis/share/redis-node-2:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6382

5d9503edf84c5e62741c382e23abcf678423023aa0060696b0bed1e88b1315f8

[root@node001 ~]#

[root@node001 ~]# docker run -d --name redis-node-3 --net host --privileged=true -v /opt/docker/redis/share/redis-node-3:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6383

5920e496a5608f833d5eb3cde99912f2b5aea77f37c64da42184694d2673fa86

[root@node001 ~]#

[root@node001 ~]# docker run -d --name redis-node-4 --net host --privileged=true -v /opt/docker/redis/share/redis-node-4:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6384

150bec0dc65dac9787c743ad0aa2fbf823a7643691b32874a7bde86840a46d83

[root@node001 ~]#

[root@node001 ~]# docker run -d --name redis-node-5 --net host --privileged=true -v /opt/docker/redis/share/redis-node-5:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6385

06f08c9945cec03bea6a5076490483e81ecba6e6d2d2cb3445404d8fdaeb2298

[root@node001 ~]#

[root@node001 ~]# docker run -d --name redis-node-6 --net host --privileged=true -v /opt/docker/redis/share/redis-node-6:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6386

20eb1323fb7335a3dbfb5bc637e5f0e0e218781b3e19a57868bca2b17f0b46bb

[root@node001 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

20eb1323fb73 redis:6.0.8 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes redis-node-6

06f08c9945ce redis:6.0.8 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes redis-node-5

150bec0dc65d redis:6.0.8 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes redis-node-4

5920e496a560 redis:6.0.8 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes redis-node-3

5d9503edf84c redis:6.0.8 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes redis-node-2

9013d5714ac1 redis:6.0.8 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes redis-node-1

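Create the cluster with --cluster-replicas 1 (one replica per master), substituting your own host address. The first command below uses 192.168.111.147, and the other two use this machine's host name node001 and its IP 192.168.10.101. The IP form is the one run in the next part.

[root@node001 ~]# redis-cli --cluster create 192.168.111.147:6381 192.168.111.147:6382 192.168.111.147:6383 192.168.111.147:6384 192.168.111.147:6385 192.168.111.147:6386 --cluster-replicas 1

redis-cli --cluster create node001:6381 node001:6382 node001:6383 node001:6384 node001:6385 node001:6386 --cluster-replicas 1

redis-cli --cluster create 192.168.10.101:6381 192.168.10.101:6382 192.168.10.101:6383 192.168.10.101:6384 192.168.10.101:6385 192.168.10.101:6386 --cluster-replicas 1

P046 [46_3-master/3-replica Redis cluster configuration, part 2] 07:05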

[root@node001 ~]# docker exec -it redis-node-1 /bin/bash

root@node001:/data# redis-cli --cluster create 192.168.10.101:6381 192.168.10.101:6382 192.168.10.101:6383 192.168.10.101:6384 192.168.10.101:6385 192.168.10.101:6386 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.10.101:6385 to 192.168.10.101:6381

Adding replica 192.168.10.101:6386 to 192.168.10.101:6382

Adding replica 192.168.10.101:6384 to 192.168.10.101:6383

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: cec1d6fd56f5c03a6512d95488404f561b26eb7c 192.168.10.101:6381

slots:[0-5460] (5461 slots) master

M: 4478fc7f9a44dda4b4a6ea936614579f98896a13 192.168.10.101:6382

slots:[5461-10922] (5462 slots) master

M: cb54a1df71ce30c579275fb20bacb732d631f02c 192.168.10.101:6383

slots:[10923-16383] (5461 slots) master

S: bb9b7116c665a7e7e6502431a627731040968bc1 192.168.10.101:6384

replicates cb54a1df71ce30c579275fb20bacb732d631f02c

S: d206b3fb74c9747a7a4c42cb30142e28961c4496 192.168.10.101:6385

replicates cec1d6fd56f5c03a6512d95488404f561b26eb7c

S: 6f6915bcae8f1e27ded6fd18bece12930b205e4d 192.168.10.101:6386

replicates 4478fc7f9a44dda4b4a6ea936614579f98896a13

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 192.168.10.101:6381)

M: cec1d6fd56f5c03a6512d95488404f561b26eb7c 192.168.10.101:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: cb54a1df71ce30c579275fb20bacb732d631f02c 192.168.10.101:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 4478fc7f9a44dda4b4a6ea936614579f98896a13 192.168.10.101:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 6f6915bcae8f1e27ded6fd18bece12930b205e4d 192.168.10.101:6386

slots: (0 slots) slave

replicates 4478fc7f9a44dda4b4a6ea936614579f98896a13

S: d206b3fb74c9747a7a4c42cb30142e28961c4496 192.168.10.101:6385

slots: (0 slots) slave

replicates cec1d6fd56f5c03a6512d95488404f561b26eb7c

S: bb9b7116c665a7e7e6502431a627731040968bc1 192.168.10.101:6384

slots: (0 slots) slave

replicates cb54a1df71ce30c579275fb20bacb732d631f02c

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
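The three ranges printed by redis-cli --cluster create (0-5460, 5461-10922, 10923-16383) come from dividing the 16384 slots as evenly as possible among the masters. The Python sketch below reproduces those numbers. Its step-by-step rounding is a reconstruction chosen because it matches the printed output; treat it as an assumption rather than redis-cli's actual source. split_slots is a hypothetical helper name.

```python
from __future__ import annotations

import math

CLUSTER_SLOTS = 16384


def split_slots(masters: int) -> list[tuple[int, int]]:
    """Split slots 0..16383 into contiguous (first, last) ranges, one per master."""
    per_node = CLUSTER_SLOTS / masters  # e.g. 5461.33 for 3 masters
    ranges = []
    first, cursor = 0, 0.0
    for i in range(masters):
        # Round half away from zero (like C's lround) for these positive values.
        last = math.floor(cursor + per_node - 1 + 0.5)
        if i == masters - 1 or last > CLUSTER_SLOTS - 1:
            last = CLUSTER_SLOTS - 1  # the last master takes whatever is left
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


if __name__ == "__main__":
    for i, (start, end) in enumerate(split_slots(3)):
        print(f"Master[{i}] -> Slots {start} - {end} ({end - start + 1} slots)")
```

The range sizes come out as 5461, 5462 and 5461, which matches the slot counts reported in the cluster check above.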

root@node001:/data# redis-cli -p 6381

127.0.0.1:6381> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:215

cluster_stats_messages_pong_sent:205

cluster_stats_messages_sent:420

cluster_stats_messages_ping_received:200

cluster_stats_messages_pong_received:215

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:420

127.0.0.1:6381> cluster nodes

cb54a1df71ce30c579275fb20bacb732d631f02c 192.168.10.101:6383@16383 master - 0 1688558340209 3 connected 10923-16383

4478fc7f9a44dda4b4a6ea936614579f98896a13 192.168.10.101:6382@16382 master - 0 1688558339000 2 connected 5461-10922

cec1d6fd56f5c03a6512d95488404f561b26eb7c 192.168.10.101:6381@16381 myself,master - 0 1688558338000 1 connected 0-5460

6f6915bcae8f1e27ded6fd18bece12930b205e4d 192.168.10.101:6386@16386 slave 4478fc7f9a44dda4b4a6ea936614579f98896a13 0 1688558340000 2 connected

d206b3fb74c9747a7a4c42cb30142e28961c4496 192.168.10.101:6385@16385 slave cec1d6fd56f5c03a6512d95488404f561b26eb7c 0 1688558341225 1 connected

bb9b7116c665a7e7e6502431a627731040968bc1 192.168.10.101:6384@16384 slave cb54a1df71ce30c579275fb20bacb732d631f02c 0 1688558339000 3 connected
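Each line of the cluster nodes reply contains, in order: node id, ip:port@cluster-bus-port, flags, master id (- for a master), ping-sent, pong-received, config-epoch and link-state. These are followed by the slot ranges the node serves, if it is a master. The small Python sketch below parses this reply and rebuilds the master-to-replica pairing. The class and function names are hypothetical helpers. Slots under migration, which Redis prints as bracketed entries, are simply skipped.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ClusterNode:
    node_id: str
    addr: str                 # "ip:port" with the "@bus-port" suffix stripped
    flags: set[str]
    master_id: str            # "-" for a master
    slots: list[tuple[int, int]] = field(default_factory=list)


def parse_cluster_nodes(text: str) -> dict[str, ClusterNode]:
    """Parse CLUSTER NODES output: id addr flags master ping pong epoch link [slots...]."""
    nodes = {}
    for line in text.strip().splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # not a node line
        slots = []
        for spec in fields[8:]:
            if spec.startswith("["):
                continue  # slot being migrated/imported, ignored in this sketch
            start, _, end = spec.partition("-")
            slots.append((int(start), int(end or start)))
        nodes[fields[0]] = ClusterNode(
            node_id=fields[0],
            addr=fields[1].split("@")[0],
            flags=set(fields[2].split(",")),
            master_id=fields[3],
            slots=slots,
        )
    return nodes


def replicas_by_master(nodes: dict[str, ClusterNode]) -> dict[str, list[str]]:
    """Map each master's address to the addresses of the replicas that follow it."""
    result = {n.addr: [] for n in nodes.values() if "master" in n.flags}
    for n in nodes.values():
        if "slave" in n.flags and n.master_id in nodes:
            result.setdefault(nodes[n.master_id].addr, []).append(n.addr)
    return result


# The "cluster nodes" reply shown above, as seen from node 6381.
SAMPLE = """\
cb54a1df71ce30c579275fb20bacb732d631f02c 192.168.10.101:6383@16383 master - 0 1688558340209 3 connected 10923-16383
4478fc7f9a44dda4b4a6ea936614579f98896a13 192.168.10.101:6382@16382 master - 0 1688558339000 2 connected 5461-10922
cec1d6fd56f5c03a6512d95488404f561b26eb7c 192.168.10.101:6381@16381 myself,master - 0 1688558338000 1 connected 0-5460
6f6915bcae8f1e27ded6fd18bece12930b205e4d 192.168.10.101:6386@16386 slave 4478fc7f9a44dda4b4a6ea936614579f98896a13 0 1688558340000 2 connected
d206b3fb74c9747a7a4c42cb30142e28961c4496 192.168.10.101:6385@16385 slave cec1d6fd56f5c03a6512d95488404f561b26eb7c 0 1688558341225 1 connected
bb9b7116c665a7e7e6502431a627731040968bc1 192.168.10.101:6384@16384 slave cb54a1df71ce30c579275fb20bacb732d631f02c 0 1688558339000 3 connected
"""

if __name__ == "__main__":
    for master, replicas in replicas_by_master(parse_cluster_nodes(SAMPLE)).items():
        print(master, "->", replicas)
```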

P047 [47_3-master/3-replica Redis cluster configuration, part 3] 02:56

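P048 [48_Redis cluster read/write errors explained] 05:03

Connected without -c, redis-cli talks only to node 6381. Keys whose slot belongs to 6381 are written. For any other key, the node answers with a MOVED error carrying the key's slot and the address of the master that owns it: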

127.0.0.1:6381> keys *

(empty array)

127.0.0.1:6381> set k1 v1

(error) MOVED 12706 192.168.10.101:6383

127.0.0.1:6381> set k2 v2

OK

127.0.0.1:6381> set k3 v3

OK

127.0.0.1:6381> set k4 v4

(error) MOVED 8455 192.168.10.101:6382

127.0.0.1:6381> set k5 v5

(error) MOVED 12582 192.168.10.101:6383

127.0.0.1:6381> set k6 v6

OK
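Without -c, redis-cli just prints the MOVED error. A cluster-aware client instead reads the slot and owner address from the error and resends the command to that node. The Python sketch below shows that loop under stated assumptions. send(addr, command) is a hypothetical transport function, not a real Redis client API, and the toy owner table is taken from the transcript above. Real clients also handle ASK redirections and cache the slot map, which this sketch omits.

```python
from __future__ import annotations

from typing import Callable, Optional


def parse_moved(reply: str) -> Optional[tuple[int, str]]:
    """Parse an error such as 'MOVED 12706 192.168.10.101:6383' into (slot, 'host:port')."""
    parts = reply.split()
    if len(parts) == 3 and parts[0] == "MOVED":
        return int(parts[1]), parts[2]
    return None


def send_following_moved(
    send: Callable[[str, str], str], addr: str, command: str, max_hops: int = 5
) -> tuple[str, str]:
    """Send command to addr; on a MOVED error, retry at the node the error names.

    Returns (address that finally answered, reply text).
    """
    for _ in range(max_hops):
        reply = send(addr, command)
        moved = parse_moved(reply)
        if moved is None:
            return addr, reply
        addr = moved[1]  # redirect to the master that owns the slot
    raise RuntimeError(f"gave up after {max_hops} redirections")


if __name__ == "__main__":
    # Toy stand-in for the cluster: owner and slot per key, from the transcript above.
    owner = {"k1": ("192.168.10.101:6383", 12706), "k4": ("192.168.10.101:6382", 8455)}

    def fake_send(addr: str, command: str) -> str:
        key = command.split()[1]
        node, slot = owner.get(key, (addr, 0))
        return "OK" if node == addr else f"MOVED {slot} {node}"

    print(send_following_moved(fake_send, "192.168.10.101:6381", "set k1 v1"))
```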

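P049 [49_Redis cluster reads/writes with routing enabled: the correct way] 03:13

With -c, redis-cli runs in cluster mode and follows MOVED redirections automatically, switching to the owning node (note the prompt change):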

root@node001:/data# redis-cli -p 6381 -c

127.0.0.1:6381> flushall

OK

127.0.0.1:6381> set k1 v1

-> Redirected to slot [12706] located at 192.168.10.101:6383

OK

192.168.10.101:6383> set k1 v2

OK

192.168.10.101:6383> set k2 v2

-> Redirected to slot [449] located at 192.168.10.101:6381

OK

192.168.10.101:6381> set k3 v3

OK

192.168.10.101:6381> set k4 v4

-> Redirected to slot [8455] located at 192.168.10.101:6382

OK

P050 [50_Viewing cluster information with cluster check] 02:26

root@node001:/data# redis-cli --cluster check 192.168.10.101:6381

192.168.10.101:6381 (cec1d6fd...) -> 2 keys | 5461 slots | 1 slaves.

192.168.10.101:6383 (cb54a1df...) -> 1 keys | 5461 slots | 1 slaves.

192.168.10.101:6382 (4478fc7f...) -> 1 keys | 5462 slots | 1 slaves.

[OK] 4 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.10.101:6381)

M: cec1d6fd56f5c03a6512d95488404f561b26eb7c 192.168.10.101:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: cb54a1df71ce30c579275fb20bacb732d631f02c 192.168.10.101:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 4478fc7f9a44dda4b4a6ea936614579f98896a13 192.168.10.101:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 6f6915bcae8f1e27ded6fd18bece12930b205e4d 192.168.10.101:6386

slots: (0 slots) slave

replicates 4478fc7f9a44dda4b4a6ea936614579f98896a13

S: d206b3fb74c9747a7a4c42cb30142e28961c4496 192.168.10.101:6385

slots: (0 slots) slave

replicates cec1d6fd56f5c03a6512d95488404f561b26eb7c

S: bb9b7116c665a7e7e6502431a627731040968bc1 192.168.10.101:6384

slots: (0 slots) slave

replicates cb54a1df71ce30c579275fb20bacb732d631f02c

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

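P051 [51_Master-replica failover and migration] 09:38

P052 [52_Master-replica scale-out: requirements analysis] 03:36

P053 [53_Master-replica scale-out: demo] 16:55

P054 [54_Master-replica scale-in: requirements analysis] 02:56

P055 [55_Master-replica scale-in: demo] 08:50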

P056 [56_Distributed storage cases: summary] 05:34

Article source: https://www.toymoban.com/news/detail-528484.html

This concludes Atguigu Docker Hands-on Tutorial, Notes 08 [Advanced: complex installations on Docker in detail].