Overview

ORB-SLAM3 is the first SLAM system able to perform visual, visual-inertial, and multi-map SLAM with monocular, stereo, and RGB-D cameras, using both pinhole and fisheye camera models.

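Main contributions:

1. Maximum-a-Posteriori (MAP) estimation is used even during the IMU initialization phase. The initialization runs quickly and in real time, is considerably more robust (in small and large scenes, indoors and outdoors), and is 2 to 5 times more accurate than other current approaches.

2. A multi-map system built on a place recognition method with much higher recall (place recognition is what performs loop detection and associates the current data with historical data). ORB-SLAM3 is more robust over the long term when visual information is poor: when tracking is lost it starts a new sub-map, and at loop closure it merges that sub-map with the previous ones. ORB-SLAM3 is the first system able to reuse all previous information in all stages of the algorithm.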

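Main conclusions:

Across all sensor configurations, ORB-SLAM3 is as robust as the best published systems and significantly more accurate. With stereo-inertial SLAM in particular, the average error is about 3.6 cm on the EuRoC dataset and about 9 mm on the TUM-VI dataset, which is representative of AR/VR scenarios.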

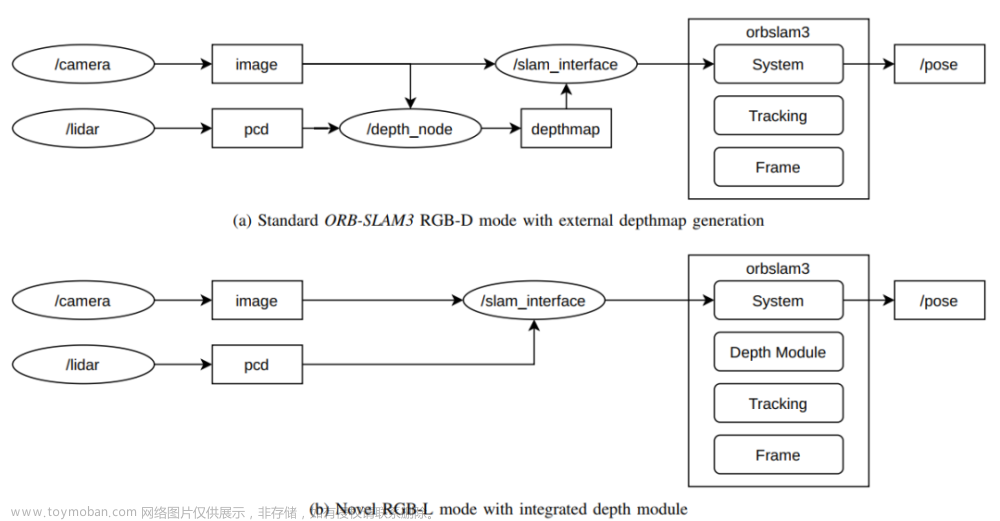

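ORB_SLAM3 system block diagram

The ORB_SLAM3 entry points are in the Examples folder and cover monocular, stereo, and RGB-D cameras, plus their inertial combinations. This post uses stereo + inertial as the example and walks through the startup flow of stereo_inertial_realsense_D435i.

Launch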

./Examples/Stereo-Inertial/stereo_inertial_realsense_D435i ./Vocabulary/ORBvoc.txt ./Examples/Stereo-Inertial/Realsense_D435i.yaml

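From the launch command, argc = 3. argv[0], ./Examples/Stereo-Inertial/stereo_inertial_realsense_D435i, is the executable whose main() is the entry point; argv[1], ./Vocabulary/ORBvoc.txt, is the pretrained ORB vocabulary; argv[2], ./Examples/Stereo-Inertial/Realsense_D435i.yaml, is the D435i settings file. Adjust the camera intrinsics and extrinsics and the IMU parameters in that file for your own D435i; see the companion post in this series on Realsense D435i driver installation, configuration, and calibration.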
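The argument handling can be sketched in isolation as follows. This is only an illustration: `LaunchArgs` and `parse_launch_args` are hypothetical names, and the optional fourth argument mirrors the `argc == 4` check in the example's main().

```cpp
#include <string>

// Hypothetical container for the example's command-line arguments.
struct LaunchArgs {
    std::string vocabulary_path;  // argv[1], e.g. ./Vocabulary/ORBvoc.txt
    std::string settings_path;    // argv[2], e.g. the D435i YAML file
    std::string file_name;        // argv[3], optional; empty when absent
};

// Fills `out` and returns true when argc is 3 or 4; returns false otherwise.
bool parse_launch_args(int argc, const char* const argv[], LaunchArgs& out) {
    if (argc < 3 || argc > 4) {
        return false;
    }
    out.vocabulary_path = argv[1];
    out.settings_path = argv[2];
    out.file_name = (argc == 4) ? argv[3] : "";
    return true;
}
```

With the launch command above, `file_name` stays empty because only two arguments follow the executable name.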

After entering main():

Part 1. Use the librealsense2 rs2 API to enumerate the D435i; the sensor handles of the selected device are stored in sensors.

...

string file_name;

if (argc == 4) {

file_name = string(argv[argc - 1]);

}

struct sigaction sigIntHandler;

sigIntHandler.sa_handler = exit_loop_handler;

sigemptyset(&sigIntHandler.sa_mask);

sigIntHandler.sa_flags = 0;

sigaction(SIGINT, &sigIntHandler, NULL);

b_continue_session = true;

double offset = 0; // ms

rs2::context ctx;

rs2::device_list devices = ctx.query_devices();

rs2::device selected_device;

if (devices.size() == 0)

{

std::cerr << "No device connected, please connect a RealSense device" << std::endl;

return 0;

}

else

selected_device = devices[0];

std::vector<rs2::sensor> sensors = selected_device.query_sensors();

int index = 0;

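Part 2. Configure each sensor according to its type and name, for example enabling auto exposure, setting the auto exposure limit, and switching off the structured-light emitter.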

for (rs2::sensor sensor : sensors)

if (sensor.supports(RS2_CAMERA_INFO_NAME)) {

++index;

if (index == 1) {

sensor.set_option(RS2_OPTION_ENABLE_AUTO_EXPOSURE, 1);

sensor.set_option(RS2_OPTION_AUTO_EXPOSURE_LIMIT,5000);

sensor.set_option(RS2_OPTION_EMITTER_ENABLED, 0); // switch off emitter

}

get_sensor_option(sensor);

if (index == 2){

// RGB camera (not used here...)

sensor.set_option(RS2_OPTION_EXPOSURE,100.f);

}

if (index == 3){

sensor.set_option(RS2_OPTION_ENABLE_MOTION_CORRECTION,0);

}

}

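Part 3. Configure the streams: the left and right infrared images are 640x480 at 30 Hz, and the accel/gyro samples use a 32-bit float format (RS2_FORMAT_MOTION_XYZ32F).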

// Declare RealSense pipeline, encapsulating the actual device and sensors

rs2::pipeline pipe;

// Create a configuration for configuring the pipeline with a non default profile

rs2::config cfg;

cfg.enable_stream(RS2_STREAM_INFRARED, 1, 640, 480, RS2_FORMAT_Y8, 30);

cfg.enable_stream(RS2_STREAM_INFRARED, 2, 640, 480, RS2_FORMAT_Y8, 30);

cfg.enable_stream(RS2_STREAM_ACCEL, RS2_FORMAT_MOTION_XYZ32F);

cfg.enable_stream(RS2_STREAM_GYRO, RS2_FORMAT_MOTION_XYZ32F);

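Part 4. Read the D435i sensor data. The callback receives the image and IMU frames; afterwards the code obtains the left and right camera streams cam_left/cam_right, the IMU stream imu_stream, the extrinsics Rbc/tbc between the left camera and the IMU, the extrinsics Rlr/tlr between the right and left cameras, and the intrinsics intrinsics_left/intrinsics_right.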

auto imu_callback = [&](const rs2::frame& frame)

{

std::unique_lock<std::mutex> lock(imu_mutex);

if(rs2::frameset fs = frame.as<rs2::frameset>())

{

count_im_buffer++;

double new_timestamp_image = fs.get_timestamp()*1e-3;

if(abs(timestamp_image-new_timestamp_image)<0.001){

// cout << "Two frames with the same timeStamp!!!\n";

count_im_buffer--;

return;

}

rs2::video_frame ir_frameL = fs.get_infrared_frame(1);

rs2::video_frame ir_frameR = fs.get_infrared_frame(2);

imCV = cv::Mat(cv::Size(width_img, height_img), CV_8U, (void*)(ir_frameL.get_data()), cv::Mat::AUTO_STEP);

imRightCV = cv::Mat(cv::Size(width_img, height_img), CV_8U, (void*)(ir_frameR.get_data()), cv::Mat::AUTO_STEP);

timestamp_image = fs.get_timestamp()*1e-3;

image_ready = true;

while(v_gyro_timestamp.size() > v_accel_timestamp_sync.size())

{

int index = v_accel_timestamp_sync.size();

double target_time = v_gyro_timestamp[index];

v_accel_data_sync.push_back(current_accel_data);

v_accel_timestamp_sync.push_back(target_time);

}

lock.unlock();

cond_image_rec.notify_all();

}

else if (rs2::motion_frame m_frame = frame.as<rs2::motion_frame>())

{

if (m_frame.get_profile().stream_name() == "Gyro")

{

// It runs at 200Hz

v_gyro_data.push_back(m_frame.get_motion_data());

v_gyro_timestamp.push_back((m_frame.get_timestamp()+offset)*1e-3);

//rs2_vector gyro_sample = m_frame.get_motion_data();

//std::cout << "Gyro:" << gyro_sample.x << ", " << gyro_sample.y << ", " << gyro_sample.z << std::endl;

}

else if (m_frame.get_profile().stream_name() == "Accel")

{

// It runs at 60Hz

prev_accel_timestamp = current_accel_timestamp;

prev_accel_data = current_accel_data;

current_accel_data = m_frame.get_motion_data();

current_accel_timestamp = (m_frame.get_timestamp()+offset)*1e-3;

while(v_gyro_timestamp.size() > v_accel_timestamp_sync.size())

{

int index = v_accel_timestamp_sync.size();

double target_time = v_gyro_timestamp[index];

rs2_vector interp_data = interpolateMeasure(target_time, current_accel_data, current_accel_timestamp,

prev_accel_data, prev_accel_timestamp);

v_accel_data_sync.push_back(interp_data);

v_accel_timestamp_sync.push_back(target_time);

}

// std::cout << "Accel:" << current_accel_data.x << ", " << current_accel_data.y << ", " << current_accel_data.z << std::endl;

}

}

};

rs2::pipeline_profile pipe_profile = pipe.start(cfg, imu_callback);

vector<ORB_SLAM3::IMU::Point> vImuMeas;

rs2::stream_profile cam_left = pipe_profile.get_stream(RS2_STREAM_INFRARED, 1);

rs2::stream_profile cam_right = pipe_profile.get_stream(RS2_STREAM_INFRARED, 2);

rs2::stream_profile imu_stream = pipe_profile.get_stream(RS2_STREAM_GYRO);

// Keep the returned struct alive; a pointer into the temporary would dangle.

rs2_extrinsics extrinsics_bc = cam_left.get_extrinsics_to(imu_stream);

float* Rbc = extrinsics_bc.rotation;

float* tbc = extrinsics_bc.translation;

std::cout << "Tbc (left) = " << std::endl;

for(int i = 0; i<3; i++){

for(int j = 0; j<3; j++)

std::cout << Rbc[i*3 + j] << ", ";

std::cout << tbc[i] << "\n";

}

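// Keep the returned struct alive; a pointer into the temporary would dangle.

rs2_extrinsics extrinsics_lr = cam_right.get_extrinsics_to(cam_left);

float* Rlr = extrinsics_lr.rotation;

float* tlr = extrinsics_lr.translation;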

std::cout << "Tlr = " << std::endl;

for(int i = 0; i<3; i++){

for(int j = 0; j<3; j++)

std::cout << Rlr[i*3 + j] << ", ";

std::cout << tlr[i] << "\n";

}

rs2_intrinsics intrinsics_left = cam_left.as<rs2::video_stream_profile>().get_intrinsics();

width_img = intrinsics_left.width;

height_img = intrinsics_left.height;

cout << "Left camera: \n";

std::cout << " fx = " << intrinsics_left.fx << std::endl;

std::cout << " fy = " << intrinsics_left.fy << std::endl;

std::cout << " cx = " << intrinsics_left.ppx << std::endl;

std::cout << " cy = " << intrinsics_left.ppy << std::endl;

std::cout << " height = " << intrinsics_left.height << std::endl;

std::cout << " width = " << intrinsics_left.width << std::endl;

std::cout << " Coeff = " << intrinsics_left.coeffs[0] << ", " << intrinsics_left.coeffs[1] << ", " <<

intrinsics_left.coeffs[2] << ", " << intrinsics_left.coeffs[3] << ", " << intrinsics_left.coeffs[4] << ", " << std::endl;

std::cout << " Model = " << intrinsics_left.model << std::endl;

rs2_intrinsics intrinsics_right = cam_right.as<rs2::video_stream_profile>().get_intrinsics();

width_img = intrinsics_right.width;

height_img = intrinsics_right.height;

cout << "Right camera: \n";

std::cout << " fx = " << intrinsics_right.fx << std::endl;

std::cout << " fy = " << intrinsics_right.fy << std::endl;

std::cout << " cx = " << intrinsics_right.ppx << std::endl;

std::cout << " cy = " << intrinsics_right.ppy << std::endl;

std::cout << " height = " << intrinsics_right.height << std::endl;

std::cout << " width = " << intrinsics_right.width << std::endl;

std::cout << " Coeff = " << intrinsics_right.coeffs[0] << ", " << intrinsics_right.coeffs[1] << ", " <<

intrinsics_right.coeffs[2] << ", " << intrinsics_right.coeffs[3] << ", " << intrinsics_right.coeffs[4] << ", " << std::endl;

std::cout << " Model = " << intrinsics_right.model << std::endl;

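Parts 1 through 4 are all interaction with the D435i: detecting it, configuring it, and reading its data. They can be regarded as the D435i driver portion.

Part 5. Enter the SLAM system; the constructor initializes the system threads and does the preparation work.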

ORB_SLAM3::System SLAM(argv[1],argv[2],ORB_SLAM3::System::IMU_STEREO, true, 0, file_name);

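/**

* @brief System constructor; it launches the other threads

* @param strVocFile path to the bag-of-words vocabulary file

* @param strSettingsFile path to the settings file

* @param sensor sensor type

* @param bUseViewer whether to use the visualization window

* @param initFr id of the initial frame, set to 0 at start

* @param strSequence sequence name, used by the tracking and local mapping threads

*/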

System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor,

const bool bUseViewer, const int initFr, const string &strSequence):

1. Print the welcome message

// Output welcome message

cout << endl <<

"ORB-SLAM3 Copyright (C) 2017-2020 Carlos Campos, Richard Elvira, Juan J. Gómez, José M.M. Montiel and Juan D. Tardós, University of Zaragoza." << endl <<

"ORB-SLAM2 Copyright (C) 2014-2016 Raúl Mur-Artal, José M.M. Montiel and Juan D. Tardós, University of Zaragoza." << endl <<

"This program comes with ABSOLUTELY NO WARRANTY;" << endl <<

"This is free software, and you are welcome to redistribute it" << endl <<

"under certain conditions. See LICENSE.txt." << endl << endl;

cout << "Input sensor was set to: ";

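2. Read the settings file

The settings file is the ./Examples/Stereo-Inertial/Realsense_D435i.yaml passed at launch. mStrLoadAtlasFromFile and mStrSaveAtlasToFile are obtained differently depending on File.version: for version "1.0" they come from a Settings object, otherwise they are read directly from the System.LoadAtlasFromFile and System.SaveAtlasToFile nodes.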

//Check settings file

cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ);

// If opening fails, print an error message

if(!fsSettings.isOpened())

{

cerr << "Failed to open settings file at: " << strSettingsFile << endl;

exit(-1);

}

// Check the settings file version; different versions are handled differently

cv::FileNode node = fsSettings["File.version"];

if(!node.empty() && node.isString() && node.string() == "1.0")

{

settings_ = new Settings(strSettingsFile,mSensor);

// File names used to load and save the map

mStrLoadAtlasFromFile = settings_->atlasLoadFile();

mStrSaveAtlasToFile = settings_->atlasSaveFile();

cout << (*settings_) << endl;

}

else

{

settings_ = nullptr;

cv::FileNode node = fsSettings["System.LoadAtlasFromFile"];

if(!node.empty() && node.isString())

{

mStrLoadAtlasFromFile = (string)node;

}

node = fsSettings["System.SaveAtlasToFile"];

if(!node.empty() && node.isString())

{

mStrSaveAtlasToFile = (string)node;

}

}

3. Decide whether loop closing is active

node = fsSettings["loopClosing"];

bool activeLC = true;

if(!node.empty())

{

activeLC = static_cast<int>(fsSettings["loopClosing"]) != 0;

}
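The defaulting rule in this step (enabled unless the key is present and zero) can be sketched without OpenCV. The `std::map` below is a hypothetical stand-in for `cv::FileStorage`, and `loop_closing_enabled` is an illustrative name, not part of ORB-SLAM3.

```cpp
#include <map>
#include <string>

// Hypothetical key/value store standing in for the parsed settings file.
typedef std::map<std::string, int> SettingsMap;

// Loop closing is on by default; it is turned off only when the
// "loopClosing" key exists and its value is 0.
bool loop_closing_enabled(const SettingsMap& settings) {
    SettingsMap::const_iterator it = settings.find("loopClosing");
    if (it == settings.end()) {
        return true;  // key missing: keep the default
    }
    return it->second != 0;
}
```

A settings file that omits the key therefore behaves the same as one that sets it to a nonzero value.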

4. Store the vocabulary file path in mStrVocabularyFilePath; it corresponds to the launch argument ./Vocabulary/ORBvoc.txt

mStrVocabularyFilePath = strVocFile;

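5. Multi-map management

The handling depends on whether mStrLoadAtlasFromFile names a saved Atlas to load.

(1) If mStrLoadAtlasFromFile is empty (no saved Atlas):

create a new ORB vocabulary object, load the pretrained ORB vocabulary and check the success/failure flag, create the keyframe database, and create the Atlas (multi-map) from scratch.

(2) If mStrLoadAtlasFromFile is set:

create a new ORB vocabulary object, load the pretrained ORB vocabulary and check the success/failure flag, create the keyframe database, load the Atlas from the file, and then create a new map in it.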

bool loadedAtlas = false;

if(mStrLoadAtlasFromFile.empty())

{

//Load ORB Vocabulary

cout << endl << "Loading ORB Vocabulary. This could take a while..." << endl;

// Create a new ORB vocabulary object

mpVocabulary = new ORBVocabulary();

// Load the pretrained ORB vocabulary; returns a success/failure flag

bool bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);

// If loading fails, print an error message

if(!bVocLoad)

{

cerr << "Wrong path to vocabulary. " << endl;

cerr << "Falied to open at: " << strVocFile << endl;

exit(-1);

}

cout << "Vocabulary loaded!" << endl << endl;

//Create KeyFrame Database

// Step 4: create the keyframe database

mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary);

//Create the Atlas

// Step 5: create the Atlas (multi-map); the argument 0 sets the initial keyframe id to 0

cout << "Initialization of Atlas from scratch " << endl;

mpAtlas = new Atlas(0);

}

else

{

//Load ORB Vocabulary

cout << endl << "Loading ORB Vocabulary. This could take a while..." << endl;

mpVocabulary = new ORBVocabulary();

bool bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);

if(!bVocLoad)

{

cerr << "Wrong path to vocabulary. " << endl;

cerr << "Falied to open at: " << strVocFile << endl;

exit(-1);

}

cout << "Vocabulary loaded!" << endl << endl;

//Create KeyFrame Database

mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary);

cout << "Load File" << endl;

// Load the file with an earlier session

//clock_t start = clock();

cout << "Initialization of Atlas from file: " << mStrLoadAtlasFromFile << endl;

bool isRead = LoadAtlas(FileType::BINARY_FILE);

if(!isRead)

{

cout << "Error to load the file, please try with other session file or vocabulary file" << endl;

exit(-1);

}

loadedAtlas = true;

mpAtlas->CreateNewMap();

}

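6. Mark the Atlas as inertial when the sensor type includes an IMU

// For sensor types with an IMU, set mbIsInertial = true; later tracking and preintegration depend on this flag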

if (mSensor==IMU_STEREO || mSensor==IMU_MONOCULAR || mSensor==IMU_RGBD)

mpAtlas->SetInertialSensor();

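7. Create the tracking, local mapping, loop closing, and viewer threads in turn

(1) Create the drawers for frames and for the map; they are used by the Viewer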

//Create Drawers. These are used by the Viewer

mpFrameDrawer = new FrameDrawer(mpAtlas);

mpMapDrawer = new MapDrawer(mpAtlas, strSettingsFile, settings_);

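(2) Create the tracking object. It lives in the main thread and does not start immediately: after the images and IMU data are preprocessed, main() starts tracking through SLAM.TrackStereo() -> mpTracker->GrabImageStereo() -> Track().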

//Initialize the Tracking thread

//(it will live in the main thread of execution, the one that called this constructor)

cout << "Seq. Name: " << strSequence << endl;

mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer, mpAtlas, mpKeyFrameDatabase, strSettingsFile, mSensor, settings_, strSequence);

(3) Create and start the local mapping thread; its entry point is LocalMapping::Run

//Initialize the Local Mapping thread and launch

mpLocalMapper = new LocalMapping(this, mpAtlas, mSensor==MONOCULAR || mSensor==IMU_MONOCULAR,

mSensor==IMU_MONOCULAR || mSensor==IMU_STEREO || mSensor==IMU_RGBD, strSequence);

mptLocalMapping = new thread(&ORB_SLAM3::LocalMapping::Run,mpLocalMapper);

mpLocalMapper->mInitFr = initFr;

(4) Set the depth threshold for far 3D map points; a point beyond the threshold may not have been triangulated reliably and is discarded

if(settings_)

mpLocalMapper->mThFarPoints = settings_->thFarPoints();

else

mpLocalMapper->mThFarPoints = fsSettings["thFarPoints"];

if(mpLocalMapper->mThFarPoints!=0)

{

cout << "Discard points further than " << mpLocalMapper->mThFarPoints << " m from current camera" << endl;

mpLocalMapper->mbFarPoints = true;

}

else

mpLocalMapper->mbFarPoints = false;
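The threshold-plus-flag pair set above can be illustrated with a small standalone helper. `FarPointFilter` is a hypothetical type, and how ORB-SLAM3 actually applies mThFarPoints inside local mapping is not shown in this post, so the `discard` rule below is an assumption based on the description in (4).

```cpp
// Hypothetical helper mirroring the mThFarPoints / mbFarPoints pair.
struct FarPointFilter {
    float threshold_m;  // depth threshold in metres; 0 disables the filter
    bool enabled;       // plays the role of mbFarPoints

    explicit FarPointFilter(float th)
        : threshold_m(th), enabled(th != 0.0f) {}

    // True when a point at the given depth should be discarded
    // (assumed rule: strictly farther than the threshold).
    bool discard(float depth_m) const {
        return enabled && depth_m > threshold_m;
    }
};
```

Using 0 as the "disabled" value means a settings file that omits the threshold keeps every point.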

(5) Create and start the loop closing thread; its entry point is LoopClosing::Run

//Initialize the Loop Closing thread and launch

// mSensor!=MONOCULAR && mSensor!=IMU_MONOCULAR

mpLoopCloser = new LoopClosing(mpAtlas, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR, activeLC); // mSensor!=MONOCULAR);

mptLoopClosing = new thread(&ORB_SLAM3::LoopClosing::Run, mpLoopCloser);

(6) Set the pointers between threads

//Set pointers between threads

mpTracker->SetLocalMapper(mpLocalMapper);

mpTracker->SetLoopClosing(mpLoopCloser);

mpLocalMapper->SetTracker(mpTracker);

mpLocalMapper->SetLoopCloser(mpLoopCloser);

mpLoopCloser->SetTracker(mpTracker);

mpLoopCloser->SetLocalMapper(mpLocalMapper);
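The pattern used in (3), (5), and (6), launching a `std::thread` on a member function and cross-wiring the modules with raw pointers, can be sketched with two hypothetical workers. `MapWorker`, `LoopWorker`, and `run_workers` are illustrative names only; the loops are placeholders for the real Run() bodies.

```cpp
#include <atomic>
#include <thread>

struct LoopWorker;  // forward declaration so the two types can reference each other

// Hypothetical stand-in for the local mapping module.
struct MapWorker {
    LoopWorker* loop_closer = nullptr;  // peer pointer, set before Run() starts
    std::atomic<int> iterations{0};
    void Run() { for (int i = 0; i < 3; ++i) ++iterations; }
};

// Hypothetical stand-in for the loop closing module.
struct LoopWorker {
    MapWorker* local_mapper = nullptr;
    std::atomic<int> iterations{0};
    void Run() { for (int i = 0; i < 3; ++i) ++iterations; }
};

// Wires the peers, runs each worker's Run() on its own thread, and joins both.
// Returns the total number of iterations executed.
int run_workers(MapWorker& mapper, LoopWorker& closer) {
    mapper.loop_closer = &closer;
    closer.local_mapper = &mapper;
    std::thread t_map(&MapWorker::Run, &mapper);
    std::thread t_loop(&LoopWorker::Run, &closer);
    t_map.join();
    t_loop.join();
    return mapper.iterations + closer.iterations;
}
```

One design difference is deliberate: this sketch sets the peer pointers before starting the threads, so neither Run() can observe a null peer, whereas the constructor shown above starts the threads first and sets the pointers afterwards.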

(7) Create and start the viewer thread; its entry point is Viewer::Run

//Initialize the Viewer thread and launch

if(bUseViewer)

//if(false) // TODO

{

mpViewer = new Viewer(this, mpFrameDrawer,mpMapDrawer,mpTracker,strSettingsFile,settings_);

mptViewer = new thread(&Viewer::Run, mpViewer);

mpTracker->SetViewer(mpViewer);

mpLoopCloser->mpViewer = mpViewer;

mpViewer->both = mpFrameDrawer->both;

}

// Fix verbosity

// Verbosity of intermediate output; set to quiet mode

Verbose::SetTh(Verbose::VERBOSITY_QUIET);

This completes the System constructor.

Part 6. Clear the IMU data vectors

// Clear IMU vectors

v_gyro_data.clear();

v_gyro_timestamp.clear();

v_accel_data_sync.clear();

v_accel_timestamp_sync.clear();

Part 7. Enter the main loop, while(!isShutDown())

Part 8. Interpolate the accelerometer data to the gyroscope timestamps

while(v_gyro_timestamp.size() > v_accel_timestamp_sync.size())

{

int index = v_accel_timestamp_sync.size();

double target_time = v_gyro_timestamp[index];

rs2_vector interp_data = interpolateMeasure(target_time, current_accel_data, current_accel_timestamp, prev_accel_data, prev_accel_timestamp);

v_accel_data_sync.push_back(interp_data);

// v_accel_data_sync.push_back(current_accel_data); // 0 interpolation

v_accel_timestamp_sync.push_back(target_time);

}
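The body of interpolateMeasure is not shown in this post. As an illustration of the idea, here is a minimal linear interpolation between the previous and current accelerometer samples, assuming a hypothetical `Axis3` struct in place of `rs2_vector`; it clamps to the nearest sample instead of extrapolating. This is a sketch, not the repository's implementation.

```cpp
// Hypothetical 3-axis sample standing in for rs2_vector.
struct Axis3 {
    float x, y, z;
};

// Linearly interpolates between (prev_time, prev) and (cur_time, cur)
// at target_time. Outside that interval, or when the two timestamps are
// not strictly increasing, the nearest sample is returned unchanged.
Axis3 interpolate_linear(double target_time,
                         const Axis3& cur, double cur_time,
                         const Axis3& prev, double prev_time) {
    if (cur_time <= prev_time || target_time >= cur_time) {
        return cur;
    }
    if (target_time <= prev_time) {
        return prev;
    }
    const double f = (target_time - prev_time) / (cur_time - prev_time);
    Axis3 out;
    out.x = static_cast<float>(prev.x + (cur.x - prev.x) * f);
    out.y = static_cast<float>(prev.y + (cur.y - prev.y) * f);
    out.z = static_cast<float>(prev.z + (cur.z - prev.z) * f);
    return out;
}
```

For example, with a previous sample (0, 0, 0) at t = 1.0 s and a current sample (2, 4, 6) at t = 2.0 s, a gyro timestamp of 1.5 s yields (1, 2, 3).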

Part 9. Push the synchronized IMU samples into vImuMeas

for(int i=0; i<vGyro.size(); ++i)

{

ORB_SLAM3::IMU::Point lastPoint(vAccel[i].x, vAccel[i].y, vAccel[i].z,

vGyro[i].x, vGyro[i].y, vGyro[i].z,

vGyro_times[i]);

vImuMeas.push_back(lastPoint);

}
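The packing step can be reproduced without ORB-SLAM3 headers. In the sketch below `ImuSample` is a hypothetical stand-in for `ORB_SLAM3::IMU::Point`, and `pack_imu` is an illustrative helper; unlike the loop above, it bounds the index by the shortest input so mismatched vector lengths cannot read out of range.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical 3-axis reading standing in for rs2_vector.
struct Reading3 {
    float x, y, z;
};

// Hypothetical stand-in for ORB_SLAM3::IMU::Point.
struct ImuSample {
    Reading3 accel;
    Reading3 gyro;
    double t;  // timestamp in seconds
};

// Combines per-index accel, gyro, and timestamp entries into one sample list.
std::vector<ImuSample> pack_imu(const std::vector<Reading3>& accel,
                                const std::vector<Reading3>& gyro,
                                const std::vector<double>& times) {
    const std::size_t n =
        std::min(accel.size(), std::min(gyro.size(), times.size()));
    std::vector<ImuSample> out;
    out.reserve(n);
    for (std::size_t i = 0; i < n; ++i) {
        ImuSample s = {accel[i], gyro[i], times[i]};
        out.push_back(s);
    }
    return out;
}
```

Because the accelerometer data was already resampled to the gyro timestamps in the previous part, entry i of each input refers to the same instant.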

Part 10. Start stereo-inertial tracking on the current frame (it runs in the main thread)

// Stereo images are already rectified.

SLAM.TrackStereo(im, imRight, timestamp, vImuMeas);

This completes the stereo_inertial_realsense_D435i startup flow. The tracking (Track), local mapping (LocalMapping), loop closing (LoopClosing), and viewer (Viewer) threads will be covered in detail in later posts of this series.

References:

1.https://blog.csdn.net/u010196944/article/details/128972333?spm=1001.2014.3001.5501

2.https://blog.csdn.net/u010196944/article/details/127240169?spm=1001.2014.3001.5501
