Reference: https://kubespray.io/#/
Article source: https://www.toymoban.com/news/detail-535095.html

Create the virtual machines

# Install the VM management tool
$ brew install multipass

# Create the virtual nodes
$ multipass launch -n kubespray -m 1G -c 1 -d 30G
$ multipass launch -n cilium-node1 -m 2G -c 2 -d 30G
$ multipass launch -n cilium-node2 -m 2G -c 2 -d 30G
$ multipass launch -n cilium-node3 -m 2G -c 2 -d 30G

# List the nodes
$ multipass list
Name            State      IPv4            Image
kubespray       Running    192.168.64.7    Ubuntu 22.04 LTS
cilium-node1    Running    192.168.64.8    Ubuntu 22.04 LTS
cilium-node2    Running    192.168.64.9    Ubuntu 22.04 LTS
cilium-node3    Running    192.168.64.10   Ubuntu 22.04 LTS
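The cluster node IPs listed above are the ones you will later type into the IPS array. The following is a minimal sketch that extracts them from the multipass list output so you do not have to copy them by hand. It assumes the default table layout shown above (Name, State, IPv4, Image) and the cilium-node name prefix used in this post. The function name node_ips is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the IPv4 address of every Running instance whose
# name starts with the given prefix. Reads `multipass list` table output on
# stdin and assumes the column order Name, State, IPv4, Image.
node_ips() {
  awk -v p="$1" 'NR > 1 && index($1, p) == 1 && $2 == "Running" { print $3 }'
}

# Usage on the machine running Multipass:
#   multipass list | node_ips cilium-node
```

Run this on the Multipass host, not inside the kubespray VM, because Multipass is not installed in the VMs. You can then paste the printed addresses into the declare -a IPS=(...) line.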

Configure the kubespray node

root@kubespray:~# sudo apt update
root@kubespray:~# sudo apt install git python3 python3-pip -y
root@kubespray:~# git clone https://github.com/kubernetes-incubator/kubespray.git
root@kubespray:~# cd kubespray
root@kubespray:~# pip install -r requirements.txt

Verify the Ansible version:

root@kubespray:~# ansible --version
ansible [core 2.14.6]
  config file = /root/kubespray/ansible.cfg
  configured module search path = ['/root/kubespray/library']
  ansible python module location = /usr/local/lib/python3.10/dist-packages/ansible
  ansible collection location = /root/.ansible/collections:/usr/share/ansible/collections
  executable location = /usr/local/bin/ansible
  python version = 3.10.6 (main, May 29 2023, 11:10:38) [GCC 11.3.0] (/usr/bin/python3)
  jinja version = 3.1.2
  libyaml = True
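To script this check, for example so a setup script stops early when the Ansible on PATH is older than your kubespray checkout expects, you can read the version from the first line of that output and compare it. The following is a minimal sketch. The minimum version 2.14.0 in the usage comment is only a placeholder assumption; the real requirement is whatever the requirements.txt of your kubespray checkout pins.

```shell
# Extract the ansible-core version from `ansible --version` output on stdin,
# e.g. "ansible [core 2.14.6]" -> "2.14.6".
ansible_core_version() {
  sed -n '1s/.*\[core \([0-9.]*\)].*/\1/p'
}

# Succeed if dotted version $1 >= $2, comparing numerically component by
# component; missing components count as 0 (so 2.14 equals 2.14.0).
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, "."); nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      xi = (i <= na) ? x[i] + 0 : 0
      yi = (i <= nb) ? y[i] + 0 : 0
      if (xi > yi) exit 0
      if (xi < yi) exit 1
    }
    exit 0
  }'
}

# Usage (MIN_ANSIBLE is a placeholder, not a documented kubespray minimum):
#   MIN_ANSIBLE=2.14.0
#   v=$(ansible --version | ansible_core_version)
#   version_ge "$v" "$MIN_ANSIBLE" || echo "ansible-core $v is older than $MIN_ANSIBLE"
```

The comparison is numeric per component on purpose. A plain string comparison would rank 2.9 above 2.14.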

Create the host inventory. Run the following commands, replacing the IP addresses with those of your own nodes:

root@kubespray:~# cp -rfp inventory/sample inventory/mycluster
root@kubespray:~# declare -a IPS=(192.168.64.8 192.168.64.9 192.168.64.10)
root@kubespray:~# CONFIG_FILE=inventory/mycluster/hosts.yaml python3 contrib/inventory_builder/inventory.py ${IPS[@]}
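Depending on the kubespray version you cloned, contrib/inventory_builder/inventory.py may not be present, because newer releases may have dropped it. As a fallback, the following minimal sketch prints an inventory with the same layout as the hosts.yaml in this post:

- The first two IPs become control-plane nodes.
- The first three IPs become etcd members.
- Every IP becomes a worker.

The function names gen_inventory and _inv_nodes are hypothetical. The role rules were chosen to match this post and are not the builder's exact logic.

```shell
# Print "        nodeN:" for N = 1..min(limit, total).
_inv_nodes() {
  limit=$1; total=$2; j=1
  while [ "$j" -le "$limit" ] && [ "$j" -le "$total" ]; do
    printf '        node%d:\n' "$j"
    j=$((j + 1))
  done
}

# Hypothetical helper: print a kubespray-style hosts.yaml for the given IPs.
# Roles mirror this post: nodes 1-2 control plane, nodes 1-3 etcd, all workers.
gen_inventory() {
  n=$#
  echo "all:"
  echo "  hosts:"
  i=0
  for ip in "$@"; do
    i=$((i + 1))
    printf '    node%d:\n' "$i"
    printf '      ansible_host: %s\n      ip: %s\n      access_ip: %s\n' "$ip" "$ip" "$ip"
  done
  echo "  children:"
  echo "    kube_control_plane:"
  echo "      hosts:"
  _inv_nodes 2 "$n"
  echo "    kube_node:"
  echo "      hosts:"
  _inv_nodes "$n" "$n"
  echo "    etcd:"
  echo "      hosts:"
  _inv_nodes 3 "$n"
  echo "    k8s_cluster:"
  echo "      children:"
  echo "        kube_control_plane:"
  echo "        kube_node:"
  echo "    calico_rr:"
  echo "      hosts: {}"
}

# Usage on the kubespray node (bash, reusing the IPS array from above):
#   gen_inventory "${IPS[@]}" > inventory/mycluster/hosts.yaml
```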

Plan the node names, roles and groups of the cluster

root@kubespray# vim inventory/mycluster/hosts.yaml
all:
  hosts:
    node1:
      ansible_host: 192.168.64.8
      ip: 192.168.64.8
      access_ip: 192.168.64.8
    node2:
      ansible_host: 192.168.64.9
      ip: 192.168.64.9
      access_ip: 192.168.64.9
    node3:
      ansible_host: 192.168.64.10
      ip: 192.168.64.10
      access_ip: 192.168.64.10
  children:
    kube_control_plane:
      hosts:
        node1:
        node2:
    kube_node:
      hosts:
        node1:
        node2:
        node3:
    etcd:
      hosts:
        node1:
        node2:
        node3:
    k8s_cluster:
      children:
        kube_control_plane:
        kube_node:
    calico_rr:
      hosts: {}
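In this plan etcd runs on all three nodes. etcd accepts writes only while a majority (quorum) of its members is available, which is why an odd member count is the usual choice. With n members the quorum is floor(n/2) + 1. Three members therefore keep working with one member down, and a fourth member would not raise that tolerance. The arithmetic is sketched below; the function names are illustrative.

```shell
# Majority needed for an etcd cluster of n members: floor(n/2) + 1.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }

# Number of members that can fail while a quorum remains: n - quorum.
etcd_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_tolerance "$n")"
done
# members=1 quorum=1 tolerated_failures=0
# members=2 quorum=2 tolerated_failures=0
# members=3 quorum=2 tolerated_failures=1
# members=4 quorum=3 tolerated_failures=1
# members=5 quorum=3 tolerated_failures=2
```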

The key to installing from within mainland China: mirrors

Copy offline.yml to mirror.yml, uncomment the download URLs that are built on files_repo, and point the image and file repositories at the DaoCloud public mirrors:

root@kubespray# cp inventory/mycluster/group_vars/all/offline.yml inventory/mycluster/group_vars/all/mirror.yml
root@kubespray# sed -i -E '/# .*\{\{ files_repo/s/^# //g' inventory/mycluster/group_vars/all/mirror.yml
root@kubespray# tee -a inventory/mycluster/group_vars/all/mirror.yml <<EOF
gcr_image_repo: "gcr.m.daocloud.io"
kube_image_repo: "k8s.m.daocloud.io"
docker_image_repo: "docker.m.daocloud.io"
quay_image_repo: "quay.m.daocloud.io"
github_image_repo: "ghcr.m.daocloud.io"
files_repo: "https://files.m.daocloud.io"
EOF
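The sed expression above only strips the leading "# " from lines that mention {{ files_repo, which are the download URLs built on top of files_repo. The files_repo variable itself is then set by the tee block. The following minimal sketch wraps the same expression in a function so you can try it on a scratch file first. The name uncomment_files_repo is hypothetical, and the sample lines only imitate the style of offline.yml; they are not copied from it.

```shell
# Remove the leading "# " from every line that references {{ files_repo,
# using the same sed expression as above (GNU or BusyBox sed for -i).
uncomment_files_repo() {
  sed -i -E '/# .*\{\{ files_repo/s/^# //g' "$1"
}

# Try it on a scratch file before touching mirror.yml
demo=$(mktemp)
cat > "$demo" <<'EOF'
# files_repo: "http://myprivatehttpd"
# kubeadm_download_url: "{{ files_repo }}/kubeadm"
# kube_image_repo: "{{ registry_host }}"
EOF
uncomment_files_repo "$demo"
cat "$demo"   # only the kubeadm_download_url line is uncommented
rm -f "$demo"
```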

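Configure the cluster version, network plugin, container runtime and more

root@kubespray# vim inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml
# Network plugin; cilium, calico, weave and flannel are supported
# Here I use cilium
kube_network_plugin: cilium
# If your IPs differ from mine, make sure these two ranges do not conflict with each other or with your node IPs
# Service CIDR
kube_service_addresses: 10.233.0.0/18
# Pod CIDR
kube_pods_subnet: 10.233.64.0/18
# docker, crio and containerd are supported; containerd is recommended
container_manager: containerd
# Whether to enable Kata Containers
kata_containers_enabled: false
# Cluster name: the default (cluster.local) contains a ".", so I recommend replacing it with your own name
cluster_name: k8s-cilium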
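The comment above asks you to make sure the Service and Pod ranges do not conflict. Two CIDR ranges overlap exactly when they agree on the bits of the shorter of their two prefixes, and that rule is easy to check in plain shell arithmetic. The following is a minimal POSIX sh sketch. The function names ip2int and cidrs_overlap are hypothetical. The node network 192.168.64.0/24 is an assumption, based on all of the Multipass addresses listed earlier falling inside it.

```shell
# Convert a dotted IPv4 address to an integer.
ip2int() {
  old_ifs=$IFS; IFS=.
  set -- $1          # intentional word splitting on "."
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Exit 0 if the two CIDR ranges (e.g. 10.233.0.0/18) share any address:
# they overlap iff they agree on the first min(len_a, len_b) bits.
cidrs_overlap() {
  a_ip=${1%/*}; a_len=${1#*/}
  b_ip=${2%/*}; b_len=${2#*/}
  len=$a_len
  if [ "$b_len" -lt "$len" ]; then len=$b_len; fi
  if [ "$len" -eq 0 ]; then return 0; fi
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( $(ip2int "$a_ip") & mask )) -eq $(( $(ip2int "$b_ip") & mask )) ]
}

svc=10.233.0.0/18      # kube_service_addresses
pods=10.233.64.0/18    # kube_pods_subnet
nodes=192.168.64.0/24  # assumed node network
for pair in "$svc $pods" "$svc $nodes" "$pods $nodes"; do
  set -- $pair
  if cidrs_overlap "$1" "$2"; then echo "CONFLICT: $1 vs $2"; else echo "ok: $1 vs $2"; fi
done
```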

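# To configure add-ons such as the Kubernetes dashboard and the ingress controller, edit the following file
root@kubespray# vim inventory/mycluster/group_vars/k8s_cluster/addons.yml
# Check that the changes were applied (the mirror settings live in mirror.yml)
root@kubespray# cat inventory/mycluster/group_vars/all/mirror.yml
root@kubespray# cat inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml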
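Instead of reading the whole files, you can print just the keys changed above. The following is a minimal sketch. show_settings is a hypothetical helper, and it only matches top-level "key:" lines, which is how these variables appear in the group_vars files.

```shell
# Print the top-level "key: value" line(s) for each key, or mark it unset.
show_settings() {
  file=$1; shift
  for key in "$@"; do
    grep -E "^${key}:" "$file" || echo "${key}: <not set>"
  done
}

# Usage from the kubespray directory:
#   show_settings inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml \
#     kube_network_plugin kube_service_addresses kube_pods_subnet container_manager cluster_name
#   show_settings inventory/mycluster/group_vars/all/mirror.yml \
#     files_repo kube_image_repo docker_image_repo
```

If a key is printed twice, the tee -a step was most likely run more than once and the duplicate lines should be removed.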

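Make sure root can log in over SSH on every server. The rest of this guide uses the root user; if you want to use a non-root user, you will need to adapt the steps yourself. Run the first two commands on each server, and generate the key pair on the kubespray node:

sudo sed -i '/PermitRootLogin /c PermitRootLogin yes' /etc/ssh/sshd_config
sudo systemctl restart sshd
ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa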

Set up passwordless login

root@kubespray:~# ssh-copy-id root@192.168.64.8
root@kubespray:~# ssh-copy-id root@192.168.64.9
root@kubespray:~# ssh-copy-id root@192.168.64.10
# If you get a permission error, copy the contents of /root/.ssh/id_rsa.pub on the kubespray node by hand and append it, on a new line, to /root/.ssh/authorized_keys on each of the other three nodes
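Before running any playbook, it is worth confirming that key-based root login actually works. ansible all -i inventory/mycluster/hosts.yaml -m ping does this through Ansible. A plain-ssh alternative is sketched below. check_ssh is a hypothetical helper. The BatchMode=yes option makes ssh fail instead of prompting for a password, so any host that still asks for one is reported as failed.

```shell
# Report, for each IP, whether root can log in with the key (no prompts).
check_ssh() {
  for ip in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$ip" true 2>/dev/null; then
      echo "$ip ok"
    else
      echo "$ip FAILED"
    fi
  done
}

# Usage on the kubespray node:
#   check_ssh 192.168.64.8 192.168.64.9 192.168.64.10
```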

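Disable the firewall and enable IPv4 forwarding

# To disable the firewall on all nodes, run the following ansible command from the Ansible (kubespray) node.
# Note: Ubuntu 22.04 ships ufw rather than firewalld, so if firewalld is not installed this command fails because the unit does not exist; in that case run "sudo ufw disable" instead.
root@kubespray:~# cd kubespray
root@kubespray:~# ansible all -i inventory/mycluster/hosts.yaml -m shell -a "sudo systemctl stop firewalld && sudo systemctl disable firewalld"
# Run the following ansible commands to enable IPv4 forwarding and disable swap on all nodes
# (a line appended to /etc/sysctl.conf only takes effect after "sysctl -p" or a reboot, hence the sysctl -p)
root@kubespray:~# ansible all -i inventory/mycluster/hosts.yaml -m shell -a "echo 'net.ipv4.ip_forward=1' | sudo tee -a /etc/sysctl.conf && sudo sysctl -p"
root@kubespray:~# ansible all -i inventory/mycluster/hosts.yaml -m shell -a "sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab && sudo swapoff -a"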
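Both commands above append or edit unconditionally. Running them a second time adds a duplicate net.ipv4.ip_forward line and a second "#" in front of the swap entry. The following is a minimal sketch of idempotent variants that you could run on each node. The helper names ensure_line and comment_swap are hypothetical. Ansible's own ansible.builtin.lineinfile module is the more idiomatic way to get the same behaviour inside a playbook.

```shell
# Append a line to a file only if that exact line is not already there.
ensure_line() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Comment out swap entries in an fstab-style file, skipping lines that are
# already commented, so repeated runs do not stack up "#" characters.
comment_swap() {
  sed -i '/^[^#].* swap /s/^/#/' "$1"
}

# Usage on a node (as root):
#   ensure_line /etc/sysctl.conf 'net.ipv4.ip_forward=1' && sysctl -p
#   comment_swap /etc/fstab && swapoff -a
```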

Start the Kubernetes deployment

root@kubespray:~# cd kubespray
root@kubespray:~# ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml
# Wait about 30 minutes (depending on your network)

PLAY RECAP *******************************************************************************************************
localhost : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
node1 : ok=747 changed=125 unreachable=0 failed=0 skipped=1245 rescued=0 ignored=5
node2 : ok=534 changed=76 unreachable=0 failed=0 skipped=736 rescued=0 ignored=1

Tuesday 25 July 2023 10:46:02 +0800 (0:00:00.112) 0:15:28.991 **********
===============================================================================
download : download_file | Download item ---------------------------------------------------------------------- 57.79s
download : download_container | Download image if required ---------------------------------------------------- 52.43s
kubernetes/kubeadm : Join to cluster -------------------------------------------------------------------------- 41.03s
download : download_file | Download item ---------------------------------------------------------------------- 40.75s
download : download_container | Download image if required ---------------------------------------------------- 36.81s
download : download_container | Download image if required ---------------------------------------------------- 36.53s
download : download_file | Validate mirrors ------------------------------------------------------------------- 36.14s
download : download_file | Validate mirrors ------------------------------------------------------------------- 36.10s
download : download_container | Download image if required ---------------------------------------------------- 34.11s
download : download_file | Download item ---------------------------------------------------------------------- 19.51s
container-engine/containerd : download_file | Download item --------------------------------------------------- 18.23s
download : download_container | Download image if required ---------------------------------------------------- 15.44s
container-engine/crictl : download_file | Download item ------------------------------------------------------- 12.44s
network_plugin/cilium : Cilium | Wait for pods to run --------------------------------------------------------- 10.75s
download : download_container | Download image if required ---------------------------------------------------- 10.70s
download : download_file | Download item ---------------------------------------------------------------------- 10.44s
container-engine/runc : download_file | Download item --------------------------------------------------------- 9.63s
etcd : reload etcd -------------------------------------------------------------------------------------------- 9.33s
container-engine/nerdctl : download_file | Download item ------------------------------------------------------ 9.23s
download : download_container | Download image if required ---------------------------------------------------- 8.71s
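The PLAY RECAP block is the part to check: every host line should show unreachable=0 and failed=0. The following minimal sketch does that check automatically on saved playbook output. The names recap_failures and deploy.log are hypothetical.

```shell
# Read ansible-playbook output on stdin; print each host whose PLAY RECAP
# line has failed>0 or unreachable>0, and exit 1 if there was any.
recap_failures() {
  awk '
    / : ok=/ {
      bad = 0
      for (i = 2; i <= NF; i++) {
        split($i, kv, "=")
        if ((kv[1] == "failed" || kv[1] == "unreachable") && kv[2] + 0 > 0) bad = 1
      }
      if (bad) { print $1; found = 1 }
    }
    END { exit (found ? 1 : 0) }'
}

# Usage on the kubespray node:
#   ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml | tee deploy.log
#   recap_failures < deploy.log && echo "no failed or unreachable hosts"
```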

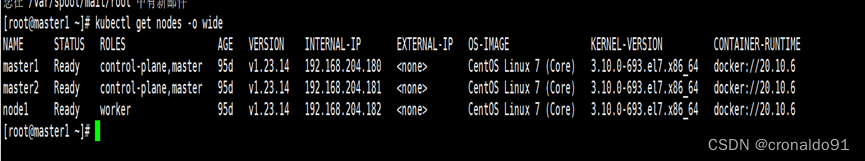

Verify the cluster

root@cilium-node1:~# kubectl get node
NAME    STATUS   ROLES           AGE     VERSION
node1   Ready    control-plane   3h23m   v1.24.6
node2   Ready    control-plane   3h21m   v1.24.6
node3   Ready    <none>          3h17m   v1.24.6

root@cilium-node1:~# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE                         ERROR
etcd-0               Healthy   {"health":"true","reason":""}
etcd-2               Healthy   {"health":"true","reason":""}
etcd-1               Healthy   {"health":"true","reason":""}
controller-manager   Healthy   ok
scheduler            Healthy   ok

root@cilium-node1:~# kubectl get pod -A
NAMESPACE     NAME                              READY   STATUS    RESTARTS      AGE
kube-system   cilium-operator-f6648bc78-5qqn8   1/1     Running   2 (17m ago)   67m
kube-system   cilium-operator-f6648bc78-v44zf   1/1     Running   1 (17m ago)   67m
kube-system   cilium-qwkkf                      1/1     Running   0             67m
kube-system   cilium-s4rdr                      1/1     Running   0             67m
kube-system   cilium-v2dnw                      1/1     Running   1 (58m ago)   67m
kube-system   coredns-665c4cc98d-k47qm          1/1     Running   0             56m
kube-system   coredns-665c4cc98d-l7mwz          1/1     Running   0             54m
kube-system   dns-autoscaler-6567c8b74f-ql5bw   1/1     Running   0             62m
kube-system   kube-apiserver-node1              1/1     Running   1             3h23m
kube-system   kube-apiserver-node2              1/1     Running   1             3h21m
kube-system   kube-controller-manager-node1     1/1     Running   6 (77m ago)   3h23m
kube-system   kube-controller-manager-node2     1/1     Running   7 (45m ago)   3h21m
kube-system   kube-proxy-2dhd6                  1/1     Running   0             69m
kube-system   kube-proxy-92vgt                  1/1     Running   0             69m
kube-system   kube-proxy-n9vjn                  1/1     Running   0             69m
kube-system   kube-scheduler-node1              1/1     Running   5 (66m ago)   3h23m
kube-system   kube-scheduler-node2              1/1     Running   8 (45m ago)   3h21m
kube-system   nginx-proxy-node3                 1/1     Running   0             3h17m
kube-system   nodelocaldns-jwvjq                1/1     Running   0             61m
kube-system   nodelocaldns-l5plk                1/1     Running   0             61m
kube-system   nodelocaldns-sjjwk                1/1     Running   0             61m
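With this many pods, it is easier to list only the ones that need attention. The following minimal sketch reads the kubectl get pod -A output and prints pods whose STATUS is not Running or whose READY count is incomplete. The name unhealthy_pods is hypothetical. Pods of finished Jobs (STATUS Completed) would also be listed, and restart counts are not checked.

```shell
# Read `kubectl get pod -A` output on stdin (header included) and print
# namespace/name, READY and STATUS for pods that are not fully healthy.
unhealthy_pods() {
  awk 'NR > 1 {
    split($3, r, "/")
    if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2 " " $3 " " $4
  }'
}

# Usage on a control-plane node:
#   kubectl get pod -A | unhealthy_pods
```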

Add a node
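The inventory below adds node4 at 192.168.64.11, so that VM must exist and accept root SSH from the kubespray node before scale.yml runs. The following minimal sketch repeats the earlier setup steps for the new VM. The instance name cilium-node4 and its address are assumptions; Multipass assigns the IP, so check it with multipass list. prepare_new_node and the DRY_RUN switch are hypothetical.

```shell
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# On the Multipass host: create the VM and allow root SSH on it, repeating
# the steps used for the first three nodes.
prepare_new_node() {
  name=$1
  run multipass launch -n "$name" -m 2G -c 2 -d 30G
  run multipass exec "$name" -- sudo sed -i '/PermitRootLogin /c PermitRootLogin yes' /etc/ssh/sshd_config
  run multipass exec "$name" -- sudo systemctl restart sshd
}

# Usage:
#   DRY_RUN=1 prepare_new_node cilium-node4   # preview the commands
#   prepare_new_node cilium-node4
# Then, on the kubespray node: ssh-copy-id root@192.168.64.11
```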

root@kubespray:~# vim inventory/mycluster/hosts.yaml
all:
  hosts:
    node1:
      ansible_host: 192.168.64.8
      ip: 192.168.64.8
      access_ip: 192.168.64.8
    node2:
      ansible_host: 192.168.64.9
      ip: 192.168.64.9
      access_ip: 192.168.64.9
    node3:
      ansible_host: 192.168.64.10
      ip: 192.168.64.10
      access_ip: 192.168.64.10
    node4: # newly added node
      ansible_host: 192.168.64.11
      ip: 192.168.64.11
      access_ip: 192.168.64.11
  children:
    kube_control_plane:
      hosts:
        node1:
        node2:
    kube_node:
      hosts:
        node1:
        node2:
        node3:
        node4: # newly added node
    etcd:
      hosts:
        node1:
        node2:
        node3:
    k8s_cluster:
      children:
        kube_control_plane:
        kube_node:
    calico_rr:
      hosts: {}

root@kubespray:~# ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root scale.yml -v -b

Remove a node

root@kubespray:~# ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root remove-node.yml -v -b --extra-vars "node=node3" # removes node3; use the node name that kubespray assigned automatically in the inventory, not the VM name

Tear down the whole cluster in one step

root@kubespray:~# ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root reset.yml
