前言

通过普通AudioTrack的流程追踪数据流。分析一下声音模块的具体流程。这里比较复杂的是binder以及共享内存。这里不做详细介绍。只介绍原理

正文

java层的AudioTrack主要是通过jni调用到cpp层的AudioTrack。我们只介绍cpp层相关。

初始化

初始化只核心是通过set函数实现的。主要包括三步。

1. 客户端准备数据,

status_t AudioTrack::set(

audio_stream_type_t streamType,

uint32_t sampleRate,

audio_format_t format,

audio_channel_mask_t channelMask,

size_t frameCount,

audio_output_flags_t flags,

callback_t cbf,

void* user,

int32_t notificationFrames,

const sp<IMemory>& sharedBuffer,

bool threadCanCallJava,

audio_session_t sessionId,

transfer_type transferType,

const audio_offload_info_t *offloadInfo,

uid_t uid,

pid_t pid,

const audio_attributes_t* pAttributes,

bool doNotReconnect,

float maxRequiredSpeed,

audio_port_handle_t selectedDeviceId)

{

//如果构造audioTrack时时传入AudioTrack.MODE_STREAM。则sharedBuffer为空

mSharedBuffer = sharedBuffer;

if (cbf != NULL) {

mAudioTrackThread = new AudioTrackThread(*this);

mAudioTrackThread->run("AudioTrack", ANDROID_PRIORITY_AUDIO, 0 /*stack*/);

// thread begins in paused state, and will not reference us until start()

}

// create the IAudioTrack

{

AutoMutex lock(mLock);

//这是核心,通过audiofligure 创建服务端数据,以及拿到共享内存。

status = createTrack_l();

}

mVolumeHandler = new media::VolumeHandler();

}

status_t AudioTrack::createTrack_l()

{

const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger()

IAudioFlinger::CreateTrackInput inpu

IAudioFlinger::CreateTrackOutput output;

//关键部分

sp<IAudioTrack> track = audioFlinger->createTrack(input,output,&status);

sp<IMemory> iMem = track->getCblk();

void *iMemPointer = iMem->pointer();

mAudioTrack = track;

mCblkMemory = iMem;

audio_track_cblk_t* cblk = static_cast<audio_track_cblk_t*>(iMemPointer);

mCblk = cblk;

void* buffers;

buffers = cblk + 1;

mAudioTrack->attachAuxEffect(mAuxEffectId);

if (mFrameCount > mReqFrameCount) {

mReqFrameCount = mFrameCount;

}

// update proxy

if (mSharedBuffer == 0) {

mStaticProxy.clear();

mProxy = new AudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);

}

mProxy->setPlaybackRate(playbackRateTemp);

mProxy->setMinimum(mNotificationFramesAct);

}

}

核心是createTrack,之后拿到关键的共享内存消息,然后写入内容

2. Audiofligure创建远端track。

sp<IAudioTrack> AudioFlinger::createTrack(const CreateTrackInput& input,

CreateTrackOutput& output,

status_t *status)

{

sp<PlaybackThread::Track> track;

sp<TrackHandle> trackHandle;

sp<Client> client;

pid_t clientPid = input.clientInfo.clientPid;

const pid_t callingPid = IPCThreadState::self()->getCallingPid();

{

Mutex::Autolock _l(mLock);

PlaybackThread *thread = checkPlaybackThread_l(output.outputId);

client = registerPid(clientPid);

PlaybackThread *effectThread = NULL;

track = thread->createTrack_l(client, streamType, localAttr, &output.sampleRate,

input.config.format, input.config.channel_mask,

&output.frameCount, &output.notificationFrameCount,

input.notificationsPerBuffer, input.speed,

input.sharedBuffer, sessionId, &output.flags,

callingPid, input.clientInfo.clientTid, clientUid,

&lStatus, portId);

trackHandle = new TrackHandle(track);

return trackHandle;

}

核心是createTrack_l,在混音线程中加入新建一个服务端的Track。新建共享内存,返还给客户端的Track,最终可以共享数据。代码如下:

// PlaybackThread::createTrack_l() must be called with AudioFlinger::mLock held

sp<AudioFlinger::PlaybackThread::Track> AudioFlinger::PlaybackThread::createTrack_l(

const sp<AudioFlinger::Client>& client,

const sp<IMemory>& sharedBuffer,)

{

sp<Track> track;

track = new Track(this, client, streamType, attr, sampleRate, format,

channelMask, frameCount,

nullptr /* buffer */, (size_t)0 /* bufferSize */, sharedBuffer,

sessionId, creatorPid, uid, *flags, TrackBase::TYPE_DEFAULT, portId);

//这个不太重要,后面调用start后,track会进入mActiveTracks列表,最终会在Threadloop中读取数据,经过处理写入out中

mTracks.add(track);

return track;

}

Track的构造函数是关键,主要是拿到共享内存。交给binder服务端的TrackHandle对象。大概代码如下:

AudioFlinger::ThreadBase::TrackBase::TrackBase(

ThreadBase *thread,

const sp<Client>& client

void *buffer,)

: RefBase(),

{

//client是audiofligure中维护的一个变量,主要持有MemoryDealer。用于管理共享内存。

if (client != 0) {

mCblkMemory = client->heap()->allocate(size);

}

}

然后把tracxk对象封装成TrackHandle这个binder对象,传给AudioTrack,通过TrackHandle实现数据传输。他主要实现了start和stop。以及获取共享内存的接口如下

class IAudioTrack : public IInterface

{

public:

DECLARE_META_INTERFACE(AudioTrack);

/* Get this track's control block */

virtual sp<IMemory> getCblk() const = 0;

/* After it's created the track is not active. Call start() to

* make it active.

*/

virtual status_t start() = 0;

/* Stop a track. If set, the callback will cease being called and

* obtainBuffer will return an error. Buffers that are already released

* will continue to be processed, unless/until flush() is called.

*/

virtual void stop() = 0;

/* Flush a stopped or paused track. All pending/released buffers are discarded.

* This function has no effect if the track is not stopped or paused.

*/

virtual void flush() = 0;

/* Pause a track. If set, the callback will cease being called and

* obtainBuffer will return an error. Buffers that are already released

* will continue to be processed, unless/until flush() is called.

*/

virtual void pause() = 0;

/* Attach track auxiliary output to specified effect. Use effectId = 0

* to detach track from effect.

*/

virtual status_t attachAuxEffect(int effectId) = 0;

/* Send parameters to the audio hardware */

virtual status_t setParameters(const String8& keyValuePairs) = 0;

/* Selects the presentation (if available) */

virtual status_t selectPresentation(int presentationId, int programId) = 0;

/* Return NO_ERROR if timestamp is valid. timestamp is undefined otherwise. */

virtual status_t getTimestamp(AudioTimestamp& timestamp) = 0;

/* Signal the playback thread for a change in control block */

virtual void signal() = 0;

/* Sets the volume shaper */

virtual media::VolumeShaper::Status applyVolumeShaper(

const sp<media::VolumeShaper::Configuration>& configuration,

const sp<media::VolumeShaper::Operation>& operation) = 0;

/* gets the volume shaper state */

virtual sp<media::VolumeShaper::State> getVolumeShaperState(int id) = 0;

};

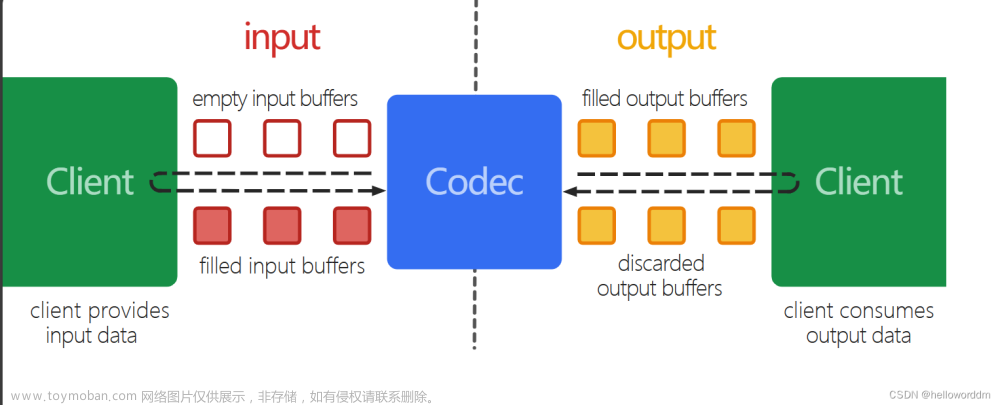

下面大概介绍一下写入数据的过程,因为这个算法比较复杂,主要是通过共享内存,通过共享内存中的结构体,控制共享内存中后半部分的数据写入写出。就不在详细介绍。这里只简要介绍大概流程:

首先是Audiotrack的write方法:

ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)

//申请空间,

status_t err = obtainBuffer(&audioBuffer,

blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking)

//写入内容

memcpy(audioBuffer.i8, buffer, toWrite);

//写入结尾,通知共享内存结构体,已经写入,防止读取错误数据。

releaseBuffer(&audioBuffer);

}

obtainBuffer主要是通过AudioTrackClientProxy这个客户端共享内存控制的,

大概代码如下:

status_t ClientProxy::obtainBuffer(Buffer* buffer, const struct timespec *requested,

struct timespec *elapsed)

{

//核心是这里,方便循环数组的下标的变化。是把长的坐标去掉头部。

rear &= mFrameCountP2 - 1;

//这是取的是AudioTrack,所以需要在结尾添加数据,所以是rear。

buffer->mRaw = part1 > 0 ?

&((char *) mBuffers)[(rear) * mFrameSize] : NULL;

关于releaseBuffer这里就不在详细介绍,因为主要是加锁,然后通知数组下标变化的,具体逻辑这里不详细介绍。

关于循环读取的过程,本篇文章不再详细介绍,主要是通过track类中的getNextBuffer实现的,他主要是audiomixer中被调用,本质上都是通过audiomixerthread这个线程实现的,audiomix通过职责链模式,对声音进行处理,最终写入到hal层。文章来源:https://www.toymoban.com/news/detail-541693.html

后记

这篇文章,这篇文章虽说不够完善,但是基本上解释了声音的大概流向,但是framew层用到比较多的系统组件,还有更底层的锁与同步机制,完全详细介绍清楚,还是分困难了,这里暂时就这样,以后如果有空,进一步补充。文章来源地址https://www.toymoban.com/news/detail-541693.html

到了这里,关于AudioTrack的声音输出流程的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!