This article implements and visualizes the ReLU, Leaky ReLU, Exponential Linear Unit (ELU), sigmoid, and tanh activation functions in MATLAB.

```matlab
clear all;close all;clc   % clear the workspace, close all figures, clear the Command Window
```

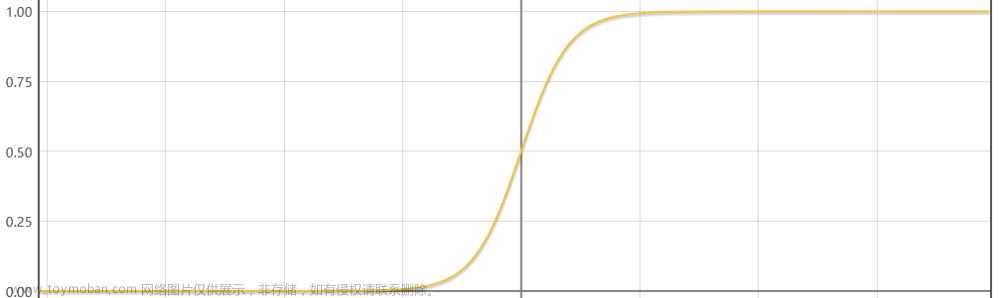

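```matlab
%% sigmoid function
x=linspace(-10.0,10.0);          % 100 evenly spaced points on [-10, 10], reused by every section below
y=1./(1.0+exp(-1.0*x));          % logistic sigmoid, evaluated element-wise
figure(1)
plot(x,y,'k','LineWidth',1)
xlabel('x')
ylabel('y')
legend('sigmoid function','Location','best')
legend boxoff
title('sigmoid')
exportgraphics(gcf,'sigmoid_func.jpg')   % save the current figure to a JPEG file
```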

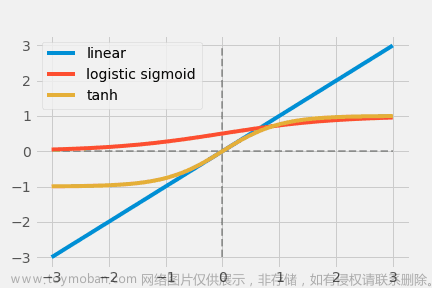
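For reference, the line `y=1./(1.0+exp(-1.0*x))` computes the logistic sigmoid. Written out, it maps every real input into the open interval (0, 1), passes through 1/2 at the origin, and is point-symmetric about (0, 1/2):

```latex
\sigma(x) = \frac{1}{1 + e^{-x}}, \qquad 0 < \sigma(x) < 1, \qquad \sigma(0) = \tfrac{1}{2}, \qquad \sigma(-x) = 1 - \sigma(x)
```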

```matlab
%% tanh function
y = tanh(x);                     % built-in hyperbolic tangent on the same grid
figure(2)
plot(x,y,'k','LineWidth',1)
xlabel('x')
ylabel('y')
legend('tanh function','Location','best')
legend boxoff
title('tanh')
exportgraphics(gcf,'tanh_func.jpg')
```
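tanh is a rescaled and shifted sigmoid, tanh(x) = 2σ(2x) − 1, which is why the sigmoid and tanh curves share the same S shape while tanh ranges over (−1, 1) and is centered at the origin. The short check below is a sketch that rebuilds the same grid and uses a locally defined handle `logistic` (a name introduced here for illustration, not part of the original script) to confirm the identity numerically:

```matlab
% Numerical check of the identity tanh(x) = 2*sigmoid(2x) - 1
x = linspace(-10.0, 10.0);               % same grid as the plots above
logistic = @(z) 1 ./ (1 + exp(-z));      % illustrative sigmoid handle
lhs = tanh(x);
rhs = 2 .* logistic(2 .* x) - 1;
maxErr = max(abs(lhs - rhs));            % should be at floating-point round-off level
fprintf('max |tanh(x) - (2*sigmoid(2x) - 1)| = %.3g\n', maxErr);
```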

```matlab
%% ReLU function
relu1=@(x)(x.*(x>=0)+0.*(x<0));  % keep x where x>=0, output 0 where x<0
y = relu1(x);
figure(3)
plot(x,y,'k','LineWidth',1)
xlabel('x')
ylabel('y')
legend('relu function','Location','best')
legend boxoff
title('relu')
exportgraphics(gcf,'relu_func.jpg')
```
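The mask expression `x.*(x>=0)+0.*(x<0)` is equivalent to an element-wise maximum with zero, ReLU(x) = max(x, 0); the `0.*(x<0)` term always contributes zero. A minimal sketch comparing the two forms on the same grid (`relu2` is a hypothetical name used only here):

```matlab
% ReLU written two ways: the mask form from the script and the built-in max
x = linspace(-10.0, 10.0);
relu1 = @(x) (x.*(x>=0) + 0.*(x<0));     % mask form, as in the script
relu2 = @(x) max(x, 0);                  % element-wise max against a scalar zero
sameOutput = isequal(relu1(x), relu2(x));
disp(sameOutput)                         % displays 1 (true)
```

The `max` form avoids building two logical masks and reads closer to the mathematical definition.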

```matlab
%% Leaky ReLU function
scale=0.1;                       % slope applied to negative inputs
leakyrelu1=@(x,scale)(x.*(x>=0)+scale.*x.*(x<0));  % x for x>=0, scale*x for x<0
y = leakyrelu1(x,scale);
figure(4)
plot(x,y,'k','LineWidth',1)
xlabel('x')
ylabel('y')
legend('leaky relu function','Location','best')
legend boxoff
title('leaky relu')
exportgraphics(gcf,'leakyrelu_func.jpg')
```
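When the negative-side slope satisfies 0 < a < 1 (here `scale=0.1`), Leaky ReLU can also be written as max(x, a·x): for x ≥ 0 the first argument is the larger one, and for x < 0 the second one is. The sketch below assumes that range for `scale`; `leakyrelu2` is a hypothetical name used only for this comparison:

```matlab
% Leaky ReLU as the masked expression and as max(x, scale*x)
x = linspace(-10.0, 10.0);
scale = 0.1;                                           % assumed 0 < scale < 1
leakyrelu1 = @(x,scale) (x.*(x>=0) + scale.*x.*(x<0)); % mask form, as in the script
leakyrelu2 = @(x,scale) max(x, scale.*x);              % only valid when 0 < scale < 1
maxDiff = max(abs(leakyrelu1(x,scale) - leakyrelu2(x,scale)));
disp(maxDiff)                                          % expected: 0
```

If `scale` were greater than 1, `max` would pick `scale*x` for positive inputs as well, so the masked form is the safer general choice.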

```matlab
%% ELU function
alpha=0.1;                       % scale of the negative branch
elu=@(x,alpha)(x.*(x>=0)+alpha.*(exp(x)-1).*(x<0));  % x for x>=0, alpha*(exp(x)-1) for x<0
y = elu(x,alpha);
figure(5)
plot(x,y,'k','LineWidth',1)
xlabel('x')
ylabel('y')
legend('elu function','Location','best')
legend boxoff
title('elu')
exportgraphics(gcf,'elu_func.jpg')
```
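ELU is defined as ELU(x) = x for x ≥ 0 and α(eˣ − 1) for x < 0 (α = 0.1 in this script). One numerical caveat of any masked form such as `x.*(x>=0)+alpha.*(exp(x)-1).*(x<0)`: `exp(x)` is evaluated for every element, including positive ones whose result is then multiplied by zero. For x above roughly 709, `exp(x)` overflows to `Inf`, and `Inf*0` is `NaN`. On the [-10, 10] grid used here this never happens, so the plot is unaffected. The sketch below contrasts that form with a variant that clamps the exponent using `min(x,0)`; the names `eluMasked` and `eluSafe` are introduced only for illustration:

```matlab
% ELU: masked form vs. a variant that clamps the exponent to avoid Inf*0 = NaN
alpha = 0.1;
eluMasked = @(x,alpha) (x.*(x>=0) + alpha.*(exp(x)-1).*(x<0));
eluSafe   = @(x,alpha) (x.*(x>=0) + alpha.*(exp(min(x,0))-1).*(x<0));
xBig = [-5, 0, 5, 800];          % exp(800) overflows to Inf in double precision
yMasked = eluMasked(xBig, alpha);
ySafe   = eluSafe(xBig, alpha);
disp(yMasked)                    % last entry is NaN
disp(ySafe)                      % last entry is 800
```

Clamping only changes what is computed for x ≥ 0, where the exponential branch is masked out anyway, so both forms agree wherever the masked form is finite.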

Source: https://www.toymoban.com/news/detail-545250.html

