yolov7的 tensorrt8 推理, c++ 版本

环境

win10

vs2019

opencv4.5.5

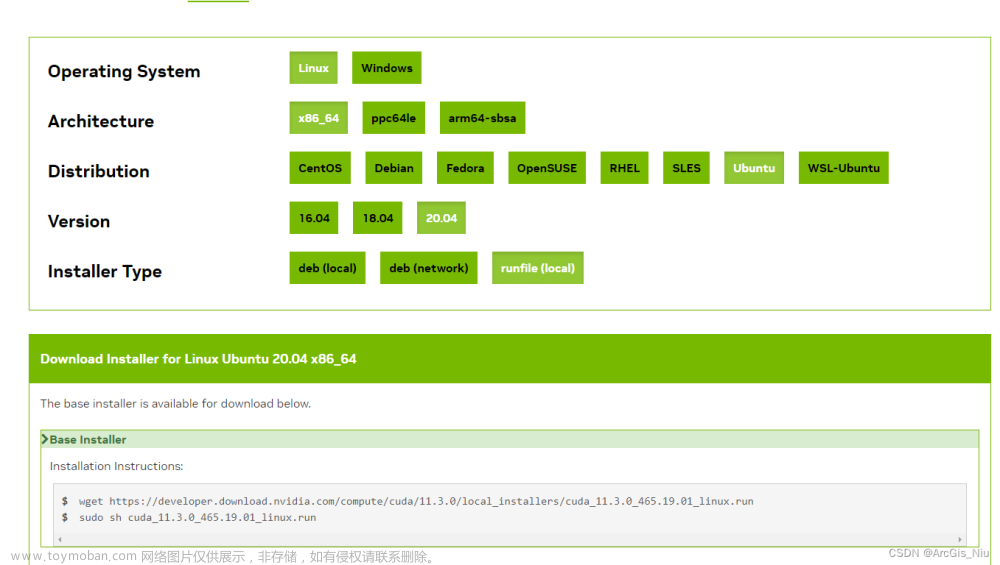

cuda_11.4.3_472.50_win10.exe

cudnn-11.4-windows-x64-v8.2.4.15

TensorRT-8.2.1.8.Windows10.x86_64.cuda-11.4.cudnn8.2.zip

RTX2060推理yolov7, FP32 耗时 28ms ,FP16 耗时 8ms,单帧对应总耗时30ms 和 10ms。

推理yolov7-tiny,FP32 耗时 8ms ,FP16 耗时 2ms。

tensorrtx/yolov7 at master · wang-xinyu/tensorrtx · GitHubhttps://github.com/wang-xinyu/tensorrtx/tree/master/yolov7

GitHub - QIANXUNZDL123/tensorrtx-yolov7: yolov7-tensorrtxhttps://github.com/QIANXUNZDL123/tensorrtx-yolov7

python gen_wts_trtx.py -w yolov7-tiny.pt -o yolov7-tiny.wts根据大神的攻略,通过yolov7-pt文件生成对应的 .wts 文件.

然后根据如下c++代码生成对应的 .engine文件,把自带的engine文件覆盖掉。工程不用cmake了,直接改。 要修改对应自己的文件目录。

由于大神的代码不是win10 cuda11的,以下代码修改好了,只要修改对应目录就可以调通了.

yolov7的win10tensorrt推理c++版本-C++文档类资源-CSDN文库https://download.csdn.net/download/vokxchh/87399894

注意:

需要修改自己对应的目录,显卡对应的算力。。

1.工程设置到release x64。

C:\TensorRT-8.2.1.8\lib 和 C:\opencv4.5.5\build\x64\vc15\bin的dll拷入exe所在文件目录。

2.包含目录:

3.库目录:

4.预处理器定义:

5. 显卡算力:

6.附加依赖项:

opencv_world455.lib

nvinfer.lib

nvinfer_plugin.lib

nvonnxparser.lib

nvparsers.lib

cublas.lib

cublasLt.lib

cuda.lib

cudadevrt.lib

cudart.lib

cudart_static.lib

cudnn.lib

cufft.lib

cufftw.lib

curand.lib

cusolver.lib

cusolverMg.lib

cusparse.lib

nppc.lib

nppial.lib

nppicc.lib

nppidei.lib

nppif.lib

nppig.lib

nppim.lib

nppist.lib

nppisu.lib

nppitc.lib

npps.lib

nvblas.lib

nvjpeg.lib

nvml.lib

nvrtc.lib

OpenCL.lib

---------------------------------------

win10系统环境变量:

7.修改config.h的USE_FP32 ,编译生成自己的FP32的exe。

USE_FP16 ,生成自己的FP16的exe。

8.然后运行FP32的exe,通过yolov7.wts生成FP32的engine文件,运行FP16的exe生成FP16的engine文件。

Project5.exe -s yolov7.wts yolov7-fp32.engine v7

注意,FP32的engine生成时间短,FP16的engine生成时间长。要耐心等待。FP16生成等了50分钟。。

9.运行如下代码预测

Project5.exe -d yolov7-fp32.engine ./samples

//

以下是自己的数据集问题,官方的80分类pt文件它自己处理过了。

1.自己的数据集,需要把config.h的const static int kNumClass =80;改为自己的数量。

2.自己训练的pt,需要重参,不然tensorrt推理出来结果不对。

yolov7/reparameterization.ipynb at main · WongKinYiu/yolov7 · GitHub

# import

from copy import deepcopy

from models.yolo import Model

import torch

from utils.torch_utils import select_device, is_parallel

import yaml

device = select_device('0', batch_size=1)

# model trained by cfg/training/*.yaml

ckpt = torch.load('cfg/training/yolov7_training.pt', map_location=device)

# reparameterized model in cfg/deploy/*.yaml

model = Model('cfg/deploy/yolov7.yaml', ch=3, nc=80).to(device)

with open('cfg/deploy/yolov7.yaml') as f:

yml = yaml.load(f, Loader=yaml.SafeLoader)

anchors = len(yml['anchors'][0]) // 2

# copy intersect weights

state_dict = ckpt['model'].float().state_dict()

exclude = []

intersect_state_dict = {k: v for k, v in state_dict.items() if k in model.state_dict() and not any(x in k for x in exclude) and v.shape == model.state_dict()[k].shape}

model.load_state_dict(intersect_state_dict, strict=False)

model.names = ckpt['model'].names

model.nc = ckpt['model'].nc

# reparametrized YOLOR

for i in range((model.nc+5)*anchors):

model.state_dict()['model.105.m.0.weight'].data[i, :, :, :] *= state_dict['model.105.im.0.implicit'].data[:, i, : :].squeeze()

model.state_dict()['model.105.m.1.weight'].data[i, :, :, :] *= state_dict['model.105.im.1.implicit'].data[:, i, : :].squeeze()

model.state_dict()['model.105.m.2.weight'].data[i, :, :, :] *= state_dict['model.105.im.2.implicit'].data[:, i, : :].squeeze()

model.state_dict()['model.105.m.0.bias'].data += state_dict['model.105.m.0.weight'].mul(state_dict['model.105.ia.0.implicit']).sum(1).squeeze()

model.state_dict()['model.105.m.1.bias'].data += state_dict['model.105.m.1.weight'].mul(state_dict['model.105.ia.1.implicit']).sum(1).squeeze()

model.state_dict()['model.105.m.2.bias'].data += state_dict['model.105.m.2.weight'].mul(state_dict['model.105.ia.2.implicit']).sum(1).squeeze()

model.state_dict()['model.105.m.0.bias'].data *= state_dict['model.105.im.0.implicit'].data.squeeze()

model.state_dict()['model.105.m.1.bias'].data *= state_dict['model.105.im.1.implicit'].data.squeeze()

model.state_dict()['model.105.m.2.bias'].data *= state_dict['model.105.im.2.implicit'].data.squeeze()

# model to be saved

ckpt = {'model': deepcopy(model.module if is_parallel(model) else model).half(),

'optimizer': None,

'training_results': None,

'epoch': -1}

# save reparameterized model

torch.save(ckpt, 'cfg/deploy/yolov7.pt')以上yolov7的重参。

# import

from copy import deepcopy

from models.yolo import Model

import torch

from utils.torch_utils import select_device, is_parallel

import yaml

device = select_device('0', batch_size=1)

# model trained by cfg/training/*.yaml

ckpt = torch.load('best-yolov7-tiny.pt', map_location=device)

# reparameterized model in cfg/deploy/*.yaml

model = Model('cfg/deploy/yolov7-tiny.yaml', ch=3, nc=2).to(device)

with open('cfg/deploy/yolov7-tiny.yaml') as f:

yml = yaml.load(f, Loader=yaml.SafeLoader)

anchors = len(yml['anchors'][0]) // 2

# copy intersect weights

state_dict = ckpt['model'].float().state_dict()

exclude = []

intersect_state_dict = {k: v for k, v in state_dict.items() if k in model.state_dict() and not any(x in k for x in exclude) and v.shape == model.state_dict()[k].shape}

model.load_state_dict(intersect_state_dict, strict=False)

model.names = ckpt['model'].names

model.nc = ckpt['model'].nc

# reparametrized YOLOR

for i in range((model.nc+5)*anchors):

model.state_dict()['model.77.m.0.weight'].data[i, :, :, :] *= state_dict['model.77.im.0.implicit'].data[:, i, : :].squeeze()

model.state_dict()['model.77.m.1.weight'].data[i, :, :, :] *= state_dict['model.77.im.1.implicit'].data[:, i, : :].squeeze()

model.state_dict()['model.77.m.2.weight'].data[i, :, :, :] *= state_dict['model.77.im.2.implicit'].data[:, i, : :].squeeze()

model.state_dict()['model.77.m.0.bias'].data += state_dict['model.77.m.0.weight'].mul(state_dict['model.77.ia.0.implicit']).sum(1).squeeze()

model.state_dict()['model.77.m.1.bias'].data += state_dict['model.77.m.1.weight'].mul(state_dict['model.77.ia.1.implicit']).sum(1).squeeze()

model.state_dict()['model.77.m.2.bias'].data += state_dict['model.77.m.2.weight'].mul(state_dict['model.77.ia.2.implicit']).sum(1).squeeze()

model.state_dict()['model.77.m.0.bias'].data *= state_dict['model.77.im.0.implicit'].data.squeeze()

model.state_dict()['model.77.m.1.bias'].data *= state_dict['model.77.im.1.implicit'].data.squeeze()

model.state_dict()['model.77.m.2.bias'].data *= state_dict['model.77.im.2.implicit'].data.squeeze()

# model to be saved

ckpt = {'model': deepcopy(model.module if is_parallel(model) else model).half(),

'optimizer': None,

'training_results': None,

'epoch': -1}

# save reparameterized model

torch.save(ckpt, 'best-yolov7-tiny-cc.pt')

以上yolov7-tiny的重参。

3.将yolov7_training.pt字段替换为自己训练完成的pt。nc=80字段改为自己的数量。

4.最终生成的pt文件再去转换wts,然后再转为engine。推理就准确了。

总结:

yolov7自己的数据集需要重参,不然tensorrt推理结果不对。文章来源:https://www.toymoban.com/news/detail-550234.html

试了一下推理yolov5-7.0 ,自己数据集不要再重参,tensorrt推理结果也是正确的。文章来源地址https://www.toymoban.com/news/detail-550234.html

到了这里,关于yolov7的 TensorRT c++推理,win10, cuda11.4.3 ,cudnn8.2.4.15,tensorrt8.2.1.8。的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!