First, what the abbreviations stand for.

CoT: Chain of Thought

CoT-SC: Chain of Thought with Self-Consistency

ToT: Tree of Thoughts, from the paper "Tree of Thoughts: Deliberate Problem Solving with Large Language Models"

As I understand it, the idea is not complicated.

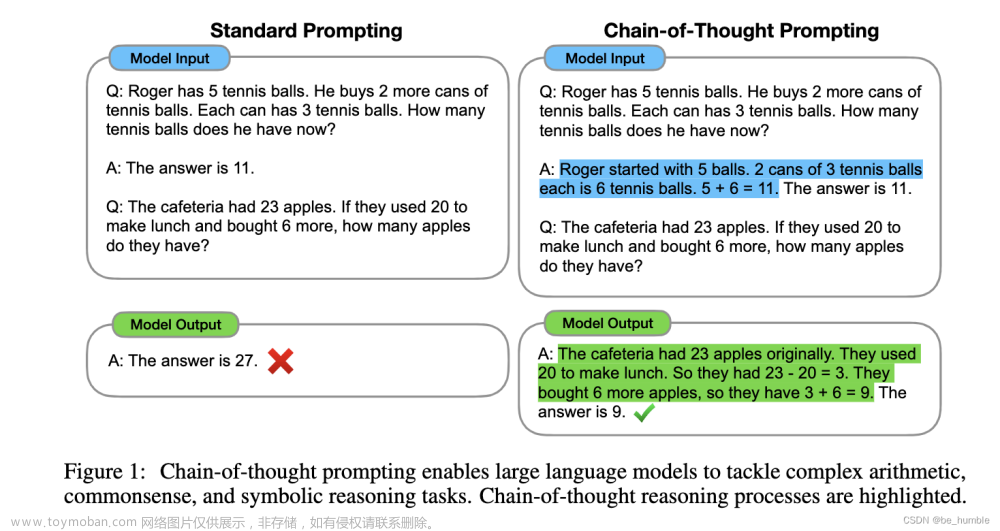

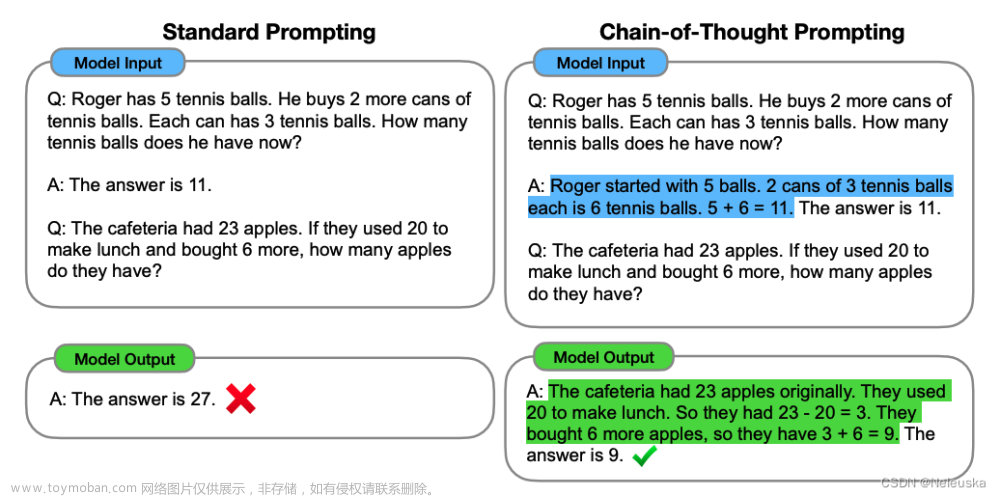

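1. The simplest: ask once, directly, in plain language (IO).

2. One step up: chain of thought, asking the model to reason step by step (CoT).

3. One step further: sample the chain of thought several times, then vote on the final answers, with the majority winning (CoT-SC).

4. Tree of thoughts = sampling the chain of thought several times + a vote at every intermediate step inside the chain (ToT).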
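The four strategies above can be sketched in a few lines of Python. This is only an illustration of the control flow, not the official implementation: `llm` is a hypothetical callable that maps a prompt string to a reply string, `make_fake_llm` is a made-up stand-in so the sketch runs without any API, and `propose` / `score` are assumed helpers that would normally be backed by LLM calls. The ToT function here uses a simple breadth-first search that keeps the best few states at each step, which is one way to realise the "vote at every step" idea.

```python
import itertools
from collections import Counter
from typing import Callable, List

# Hypothetical LLM interface: prompt in, text out.
LLM = Callable[[str], str]


def make_fake_llm(replies: List[str]) -> LLM:
    """Stand-in for a real model: cycles through canned replies."""
    it = itertools.cycle(replies)
    return lambda prompt: next(it)


def extract_answer(text: str) -> str:
    """Assumption: the final answer is the last non-empty line of the reply."""
    lines = text.strip().splitlines()
    return lines[-1].strip() if lines else ""


def io_prompt(llm: LLM, question: str) -> str:
    """1. IO: ask once, directly."""
    return llm(question)


def cot_prompt(llm: LLM, question: str) -> str:
    """2. CoT: ask the model to reason step by step before answering."""
    return llm(f"{question}\nLet's think step by step.")


def cot_sc(llm: LLM, question: str, n_samples: int = 5) -> str:
    """3. CoT-SC: sample several chains, then majority-vote on the final answers."""
    answers = [extract_answer(cot_prompt(llm, question)) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def tree_of_thoughts(
    propose: Callable[[str], List[str]],  # state -> candidate next states
    score: Callable[[str], float],        # state -> how promising it looks
    root: str,
    depth: int,
    beam_width: int,
) -> str:
    """4. ToT (breadth-first variant): at every step, expand all kept states,
    rank the candidates, and keep only the best `beam_width` of them."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=score)
```

For example, with a fake model that answers `42`, `41`, `42` on three samples, `cot_sc` returns the majority answer `42`. In a real setup, `propose` would prompt the model for possible next thoughts and `score` would prompt it to rate or vote on them.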

Chinese-language walkthrough of the ToT paper:

Tree of Thoughts: Deliberate Problem Solving with Large Language Models (Chinese translation), nopSled's blog on CSDN

GitHub link:

princeton-nlp/tree-of-thought-llm: Official Implementation of "Tree of Thoughts: Deliberate Problem Solving with Large Language Models" (github.com)

Original paper:

[2305.10601] Tree of Thoughts: Deliberate Problem Solving with Large Language Models (arxiv.org)

ReAct

Reference

"GPT-4 with the Tree of Thoughts framework improves its ability to solve complex problems by 900%" (video on bilibili)
