前言

YOLO8的集成度比较高,如何在简洁的代码中加入Head Pose的东西,不是一件简单的事情.这里介绍如何插入PnP算法实现头部姿态估计的代码?

一、步骤

修改predict中的模型,改为我们自己训练的YOLOLandmark模型.

修改cfg入口,由于我们修改来config中不少超参数,因此我们干脆修改来为finetune.yaml,替换默认的cfg即可.

funetune.yaml主要修改的点如下:

model: path to model

name: results saved directory.

conf: object confidence threshold

source: source video path

show: True # to show the live demo.

注意这些点需要根据工程去调整.

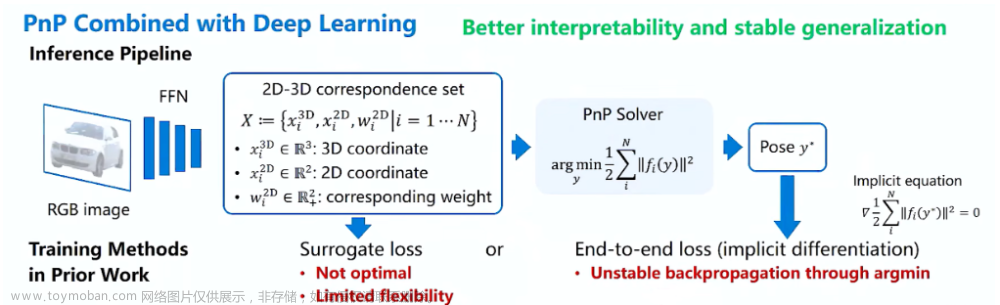

二、PnP估计Head Pose,并显示

1.引入库

参考github VHead的项目,我们将head pose进行模块化,传入图片和keypoints即可通过PnP算法估计出Head Pose

代码如下(示例):

def draw_headpose(im0, kpts):

landmarks = kpts.view(-1).tolist()

POINTS_NUM_LANDMARK = 68

clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8,8)) # CLAHE Object (for Adaptive histogram equalization)

boxPoints3D = np.array(([500., 500., 500.],

[-500., 500., 500.],

[-500., -500., 500.],

[500., -500., 500.],

[500., 500., -500.],

[-500., 500., -500.],

[-500., -500., -500.],

[500., -500., -500.]))

boxPoints2D = np.zeros((1,1,8,2))

# parameters for mean filter

windowlen_1 = 5

queue3D_points = np.zeros((windowlen_1,POINTS_NUM_LANDMARK,2))

windowlen_2 =5

queue1D = np.zeros(windowlen_2)

# pamameters for kalman filter

XX = 0

PP = 0.01

lms_x = landmarks[0::3]

lms_y = landmarks[1::3]

lms = [lms_x, lms_y] # [[x,y] for x,y in zip(lms_x, lms_y)]

lms = [ ([x,y]) for x,y in zip(lms_x, lms_y)]

test_data = [0]

test_time = [0]

# # Socket Connect

# try:

# client = socket(AF_INET, SOCK_STREAM) # 创建Socket的客户端

# # client.connect(('127.0.0.1',1755))

# client.connect(('10.8.220.128',1755))

# except:

# print("\nERROR: No socket connection.\n")

# sys.exit(0)

# initialize kalman object

KalmanX = KalmanObject(POINTS_NUM_LANDMARK, 1,10) # Tune Q, R to change landmarks_x sensitivity

KalmanY = KalmanObject(POINTS_NUM_LANDMARK, 1,10) # Tune Q, R to change landmarks_y sensitivity

uu_ = np.zeros((POINTS_NUM_LANDMARK))

# initialize PARAMETERS

temp_landmarks = np.zeros((POINTS_NUM_LANDMARK,2))

# Apply kalman filter to landmarks FOR POSE ESTIMATION

KalmanX.kalman_update(uu_, lms_x)

KalmanY.kalman_update(uu_, lms_y)

temp_landmarks[:,0] = KalmanX.xx.astype(np.int32)

temp_landmarks[:,1] = KalmanY.xx.astype(np.int32)

temp_landmarks = mean_filter_for_landmarks(temp_landmarks) # Apply smooth filter to landmarks FOR POSE ESTIMATION

leftEyeWid, rightEyewid, mouthWid,mouthLen = get_feature_parameters(lms) #landmarks_orig)

parameters_str = 'leftEyeWid:{}, rightEyewid:{}, mouthWid:{}, mouthLen:{}'.format(leftEyeWid, rightEyewid, mouthWid, mouthLen)

print(parameters_str)

# Five feature points for pose estimation

# image_points = np.vstack((landmarks[30],landmarks[8],landmarks[36],landmarks[45],landmarks[48],landmarks[54]))

image_points = np.vstack((temp_landmarks[30],temp_landmarks[8],temp_landmarks[36],temp_landmarks[45],temp_landmarks[1],temp_landmarks[15]))

ret, rotation_vector, translation_vector, camera_matrix, dist_coeffs = get_pose_estimation(im0.shape, image_points)

if ret != True:

print('ERROR: get_pose_estimation failed')

# continue

# used_time = time.time() - start_time

# print("used_time:{} sec".format(round(used_time, 3)))

# Convert rotation_vector to quaternion

w,x,y,z = get_quaternion(rotation_vector)

quaternion_str = 'w:{}, x:{}, y:{}, z:{}'.format(w, x, y, z)

print(quaternion_str)

# Packing data and transmit to server through Socket

data = str(translation_vector[0,0])+':'+str(translation_vector[1,0])+':'+str(translation_vector[2,0])+':'+str(w)+':'+str(x)+':'+str(y)+':'+str(z)+':'+str(leftEyeWid)+':'+str(rightEyewid)+':'+str(mouthWid)+':'+str(mouthLen)

# Project a 3D point set onto the image plane

# We use this to draw a bounding box

(nose_end_point2D, jacobian) = cv2.projectPoints(np.array([(0.0, 0.0, 1000)]), rotation_vector, translation_vector, camera_matrix, dist_coeffs)

# global boxPoints2D

for i in range(8):

(boxPoints2D[:,:,i,:], jacobian) = cv2.projectPoints(np.array([boxPoints3D[i]]), rotation_vector, translation_vector, camera_matrix, dist_coeffs)

boxPoints2D = boxPoints2D.astype(int)

for p in image_points:

cv2.circle(im0, (int(p[0]), int(p[1])), 3, (0,0,255), -1)

boxset_1 = boxPoints2D[0,0,0:4,:]

boxset_2 = boxPoints2D[0,0,4:8,:]

boxset_3 = np.vstack((boxPoints2D[0,0,0,:],boxPoints2D[0,0,4,:]))

boxset_4 = np.vstack((boxPoints2D[0,0,1,:],boxPoints2D[0,0,5,:]))

boxset_5 = np.vstack((boxPoints2D[0,0,2,:],boxPoints2D[0,0,6,:]))

boxset_6 = np.vstack((boxPoints2D[0,0,3,:],boxPoints2D[0,0,7,:]))

cv2.polylines(im0, [boxset_1], True, (255,0,0), 3)

cv2.polylines(im0, [boxset_2], True, (255,0,0), 3)

cv2.polylines(im0, [boxset_3], True, (255,0,0), 3)

cv2.polylines(im0, [boxset_4], True, (255,0,0), 3)

cv2.polylines(im0, [boxset_5], True, (255,0,0), 3)

cv2.polylines(im0, [boxset_6], True, (255,0,0), 3)

p1 = ( int(image_points[0][0]), int(image_points[0][1]))

p2 = ( int(nose_end_point2D[0][0][0]), int(nose_end_point2D[0][0][1]))

cv2.line(im0, p1, p2, (0,255,0), 2)

##########################################################################

这里需要判断ktps是否为空,再进行画图操作.

2.结果展示

可以看到左上角,head box的角度基本上与真实的头部姿态相吻合.文章来源:https://www.toymoban.com/news/detail-560808.html

总结

这里,我们使用了Kalman 滤波来消除跳跃的情况,让pose变得更加平滑.但是Kalman 滤波的学习暂时没有具体取探索.在评估学习中.文章来源地址https://www.toymoban.com/news/detail-560808.html

到了这里,关于实验:如何在YOLO8中添加PnP算法来实现Head Pose Estimation的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!