Troubleshooting a single-node Elasticsearch (ES) deployment on minikube

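I've recently been learning Kubernetes. While deploying ES on minikube under Windows 10, the container kept restarting, and the only error shown was "Back-off restarting failed container". I'm recording how I tracked it down for future reference.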

Symptom

The ES container restarts over and over, and the only message in the Pod events is "Back-off restarting failed container".

Troubleshooting

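1. Searching for "Back-off restarting failed container" shows that it usually means the container's startup command exits abnormally (e.g. exit 1). Because the container keeps restarting, I couldn't see the log of the failed startup. So the first step is to stop the container from exiting: in deployment.yaml, replace the ES container's startup command with one that succeeds and never exits (see the command/args lines below):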

apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch
spec:
  selector:
    matchLabels:
      name: elasticsearch
  replicas: 1
  template:
    metadata:
      labels:
        name: elasticsearch
    spec:
      initContainers:
      - name: init-sysctl
        image: busybox
        command:
        - sysctl
        - -w
        - vm.max_map_count=262144
        securityContext:
          privileged: true
      containers:
      - name: elasticsearch
        # Debugging only: replace the ES entrypoint with an endless sleep
        # so the container stays up and can be entered with kubectl exec.
        command: [ "/bin/bash", "-c", "--" ]
        args: [ "while true; do sleep 30; done;" ]
        image: elasticsearch:8.6.2
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 1000m
            memory: 2Gi
          requests:
            cpu: 100m
            memory: 1Gi
        env:
        - name: ES_JAVA_OPTS
          value: -Xms512m -Xmx512m
        ports:
        - containerPort: 9200
        - containerPort: 9300
        volumeMounts:
        - name: elasticsearch-data
          mountPath: /usr/share/elasticsearch/data/
        - name: es-config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          subPath: elasticsearch.yml
      volumes:
      - name: elasticsearch-data
        persistentVolumeClaim:
          claimName: es-pvc
      - name: es-config
        configMap:
          name: es

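2. Redeploy. The container no longer exits and the Deployment stays healthy (green), but the ES service is unavailable. That is expected: with the command overridden, Elasticsearch never starts at all.

Open a shell in the container with `kubectl exec -it <pod_name> -c <container_name> -- /bin/bash`, then start ES by hand with `bash /usr/share/elasticsearch/bin/elasticsearch`. This prints the following error: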

{
  "@timestamp": "2023-04-06T10:06:47.648Z",
  "log.level": "ERROR",
  "message": "fatal exception while booting Elasticsearch",
  "ecs.version": "1.2.0",
  "service.name": "ES_ECS",
  "event.dataset": "elasticsearch.server",
  "process.thread.name": "main",
  "log.logger": "org.elasticsearch.bootstrap.Elasticsearch",
  "elasticsearch.node.name": "node-1",
  "elasticsearch.cluster.name": "my-cluster",
  "error.type": "java.lang.IllegalStateException",
  "error.message": "failed to obtain node locks, tried [/usr/share/elasticsearch/data]; maybe these locations are not writable or multiple nodes were started on the same data path?",
  "error.stack_trace": "java.lang.IllegalStateException: failed to obtain node locks, tried [/usr/share/elasticsearch/data]; maybe these locations are not writable or multiple nodes were started on the same data path?\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.env.NodeEnvironment.<init>(NodeEnvironment.java:285)\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.node.Node.<init>(Node.java:478)\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.node.Node.<init>(Node.java:322)\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.bootstrap.Elasticsearch$2.<init>(Elasticsearch.java:214)\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.bootstrap.Elasticsearch.initPhase3(Elasticsearch.java:214)\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:67)\nCaused by: java.io.IOException: failed to obtain lock on /usr/share/elasticsearch/data\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.env.NodeEnvironment$NodeLock.<init>(NodeEnvironment.java:230)\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.env.NodeEnvironment$NodeLock.<init>(NodeEnvironment.java:198)\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.env.NodeEnvironment.<init>(NodeEnvironment.java:277)\n\t... 5 more\nCaused by: java.nio.file.AccessDeniedException: /usr/share/elasticsearch/data/node.lock\n\tat java.base/sun.nio.fs.UnixException.translateToIOException(UnixException.java:90)\n\tat java.base/sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:106)\n\tat java.base/sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:111)\n\tat java.base/sun.nio.fs.UnixFileSystemProvider.newFileChannel(UnixFileSystemProvider.java:181)\n\tat java.base/java.nio.channels.FileChannel.open(FileChannel.java:304)\n\tat java.base/java.nio.channels.FileChannel.open(FileChannel.java:363)\n\tat org.apache.lucene.core@9.4.2/org.apache.lucene.store.NativeFSLockFactory.obtainFSLock(NativeFSLockFactory.java:112)\n\tat org.apache.lucene.core@9.4.2/org.apache.lucene.store.FSLockFactory.obtainLock(FSLockFactory.java:43)\n\tat org.apache.lucene.core@9.4.2/org.apache.lucene.store.BaseDirectory.obtainLock(BaseDirectory.java:44)\n\tat org.elasticsearch.server@8.6.2/org.elasticsearch.env.NodeEnvironment$NodeLock.<init>(NodeEnvironment.java:223)\n\t... 7 more\n"
}
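In a trace this long, the line that matters is the last `Caused by:` entry, here `java.nio.file.AccessDeniedException: /usr/share/elasticsearch/data/node.lock`. Below is a minimal POSIX shell sketch (the function name `root_causes` is hypothetical) that pulls those entries out of an Elasticsearch JSON log line, assuming, as in the output above, that `error.stack_trace` stores line breaks as literal `\n` escapes:

```shell
# root_causes: read Elasticsearch JSON log lines on stdin and print every
# "Caused by:" entry found in the stack trace. In Java traces the last
# entry printed is the innermost (root) cause.
root_causes() {
    # Turn each literal two-character "\n" escape into a real newline,
    # then keep only the lines that begin a "Caused by:" entry.
    awk '{ gsub(/\\n/, "\n"); print }' | grep '^Caused by:'
}
```

Inside the debug container this could be used as, for example, `bash /usr/share/elasticsearch/bin/elasticsearch 2>&1 | root_causes`, assuming the startup output is the same one-line-per-event JSON shown above.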

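The mounted path /usr/share/elasticsearch/data is not writable, which points to a permissions problem. Trying to create a file under the mount inside the container confirms it: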

elasticsearch@elasticsearch-5b75df88cb-xbng8:~/data$ touch demo.txt
touch: cannot touch 'demo.txt': Permission denied
elasticsearch@elasticsearch-5b75df88cb-xbng8:~/data$

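Searching for this error turns up similar cases where containers that run as a non-root user hit permission problems on mounted volumes. That applies here: Elasticsearch refuses to run as root, so the elasticsearch container runs as the elasticsearch user.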

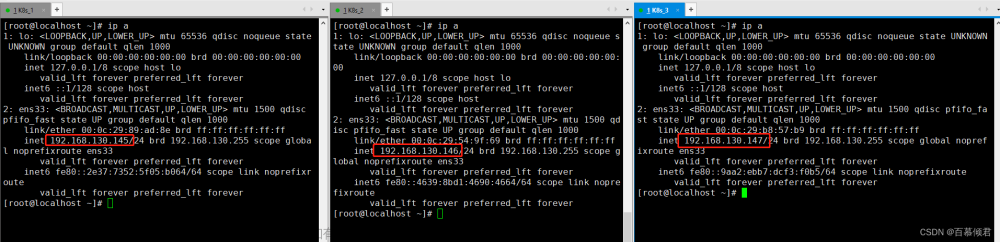
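The manual `touch` test can be turned into a reusable check. Below is a minimal POSIX shell sketch; `check_writable` and the `.write-probe.*` file name are hypothetical. It also prints the UID it ran as, because that number, not the user name, is what the directory's permissions are checked against:

```shell
# check_writable DIR: succeed if the current user can create a file in DIR.
# Same idea as the manual `touch demo.txt` test, but the probe file is
# removed afterwards and the effective UID is reported.
check_writable() {
    dir=$1
    probe="$dir/.write-probe.$$"
    if touch "$probe" 2>/dev/null; then
        rm -f "$probe"
        echo "writable: $dir (uid=$(id -u))"
        return 0
    fi
    echo "NOT writable: $dir (uid=$(id -u))" >&2
    return 1
}
```

For example, `check_writable /usr/share/elasticsearch/data` inside the ES container would be expected to report `NOT writable ... (uid=1000)` before the fix and `writable` after it.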

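3. Fix the permissions on the mounted path. Creating a user with the same name on the node and granting it access won't work: container users map to host users by UID, not by name. So first check the UID of the user inside the container: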

# run inside the ES container
elasticsearch@elasticsearch-5b75df88cb-xbng8:~/data$ id
uid=1000(elasticsearch) gid=1000(elasticsearch) groups=1000(elasticsearch),0(root)
elasticsearch@elasticsearch-5b75df88cb-xbng8:~/data$

# run on the minikube node
root@minikube:/# grep 1000 /etc/passwd
docker:x:1000:999:,,,:/home/docker:/bin/bash
root@minikube:/#

# check the permissions of the hostPath directory on the node
root@minikube:/# ll -dh data/es-data
drwxr-xr-x 4 root root 4.0K Apr 6 09:40 data/es-data/
root@minikube:/#

# give the hostPath directory to the node user found above (UID 1000, "docker")
root@minikube:/# chown docker -R data/es-data

# after the change
root@minikube:/# ll -dh data/es-data
drwxr-xr-x 4 docker root 4.0K Apr 6 09:40 data/es-data/
root@minikube:/#

Check that the mount path inside the container is now writable:

# run inside the ES container
elasticsearch@elasticsearch-5b75df88cb-xbng8:~/data$ touch demo.txt
elasticsearch@elasticsearch-5b75df88cb-xbng8:~/data$ ll demo.txt
-rw-r--r-- 1 elasticsearch elasticsearch 0 Apr 6 10:29 demo.txt
elasticsearch@elasticsearch-5b75df88cb-xbng8:~/data$
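One detail in the node-side lookup: `grep 1000 /etc/passwd` matches any line that contains `1000` anywhere, such as a GID or a path. Below is a minimal sketch of a stricter lookup that only compares the UID field (the third `:`-separated field of `/etc/passwd`); `uid_to_name` is a hypothetical helper name:

```shell
# uid_to_name UID [PASSWD_FILE]: print the user name that owns the numeric
# UID on this machine. Only field 3 (the UID) is compared, so a line whose
# GID, home directory or comment happens to contain the number is ignored.
uid_to_name() {
    awk -F: -v uid="$1" '$3 == uid { print $1 }' "${2:-/etc/passwd}"
}
```

Run in the ES container, `uid_to_name 1000` would be expected to print `elasticsearch`; run on the minikube node, `docker`. It is the same number under two different names, which is why the fix has to target the UID.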

4. Remove the debugging changes from deployment.yaml, move the permission fix from step 3 into an init container, and redeploy. This solves the problem. The complete YAML:

# PV and PVC
apiVersion: v1
kind: PersistentVolume
metadata:
  # PersistentVolumes are cluster-scoped, so no namespace is set here
  name: es-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: es-host
  hostPath:
    path: /data/es-data
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-pvc
  namespace: century-creator
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  storageClassName: es-host
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: es
  namespace: century-creator
data:
  elasticsearch.yml: |
    cluster.name: my-cluster
    discovery.type: single-node
    node.name: node-1
    network.host: 0.0.0.0
    http.port: 9200
    http.cors.enabled: true
    http.cors.allow-origin: /.*/
    ingest.geoip.downloader.enabled: false
    xpack.security.enabled: false
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch
  namespace: century-creator
spec:
  selector:
    matchLabels:
      name: elasticsearch
  replicas: 1
  template:
    metadata:
      labels:
        name: elasticsearch
    spec:
      initContainers:
      - name: init-sysctl
        image: busybox
        command:
        - sysctl
        - -w
        - vm.max_map_count=262144
        securityContext:
          privileged: true
      # Permission fix from step 3: hand the data directory to UID 1000
      # (the elasticsearch user inside the ES image) before ES starts.
      - name: volume-permissions
        image: busybox
        command: [ "sh", "-c", "--" ]
        args: [ "chown 1000 -R /data/es-data" ]
        volumeMounts:
        - name: data
          mountPath: /data/es-data
        securityContext:
          privileged: true
      containers:
      - name: elasticsearch
        image: elasticsearch:8.6.2
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 1000m
            memory: 2Gi
          requests:
            cpu: 100m
            memory: 1Gi
        env:
        - name: ES_JAVA_OPTS
          value: -Xms512m -Xmx512m
        ports:
        - containerPort: 9200
        - containerPort: 9300
        volumeMounts:
        - name: elasticsearch-data
          mountPath: /usr/share/elasticsearch/data/
        - name: es-config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          subPath: elasticsearch.yml
      volumes:
      - name: elasticsearch-data
        persistentVolumeClaim:
          claimName: es-pvc
      - name: es-config
        configMap:
          name: es
      # Same node directory that backs es-pv; the init container and ES
      # only see the same files because minikube has a single node.
      - name: data
        hostPath:
          path: "/data/es-data"
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: century-creator
  labels:
    name: elasticsearch
spec:
  type: NodePort
  ports:
  - name: web-9200
    port: 9200
    targetPort: 9200
    protocol: TCP
    nodePort: 30105
  - name: web-9300
    port: 9300
    targetPort: 9300
    protocol: TCP
    nodePort: 30106
  selector:
    name: elasticsearch
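The `volume-permissions` init container above runs `chown -R` on every Pod start, which walks the whole data directory each time. A possible variant, sketched here under the assumption that the `busybox` image's `stat` applet supports `-c '%u'` (as GNU coreutils does), only re-owns the directory when its top-level owner is not already the target UID; `ensure_owner` is a hypothetical name:

```shell
# ensure_owner DIR UID: recursively give DIR to the numeric UID, but skip
# the (possibly slow) recursive chown when DIR itself is already owned by
# that UID. Returns non-zero if DIR cannot be stat'ed or chown fails.
ensure_owner() {
    dir=$1
    uid=$2
    current=$(stat -c '%u' "$dir") || return 1
    if [ "$current" = "$uid" ]; then
        echo "$dir already owned by uid $uid"
        return 0
    fi
    echo "chown -R $uid $dir (was uid $current)"
    chown -R "$uid" "$dir"
}
```

To use it, the function body plus a call such as `ensure_owner /data/es-data 1000` would go into the init container's `args` string. The trade-off: only the top-level directory's owner is checked, so files further down that were created by another user would be missed, whereas the unconditional `chown 1000 -R` does not have that blind spot.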

Reference: https://www.cnblogs.com/v-fan/p/16034960.html
