I. Lab Environment

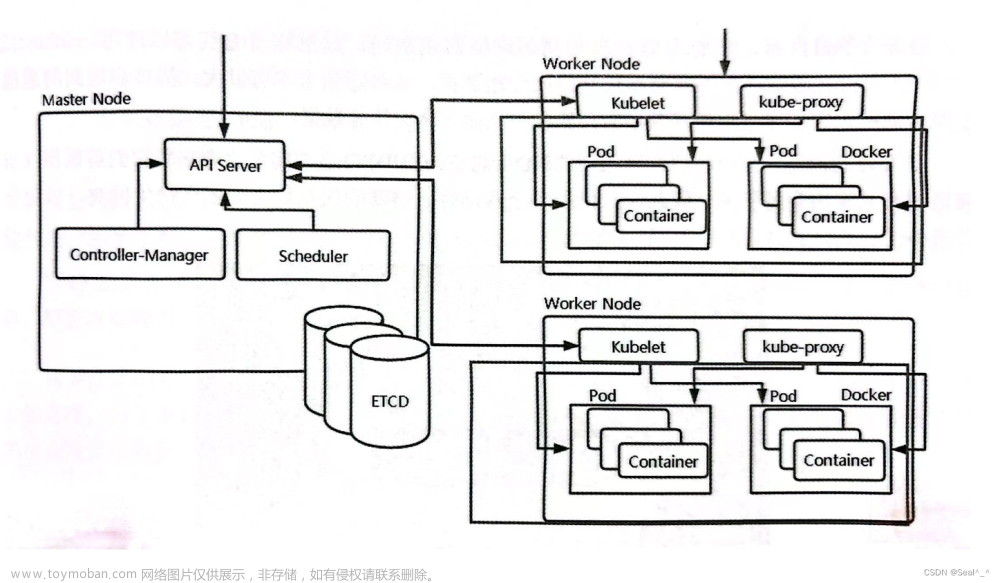

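1. Kubernetes environment

Version: v1.26.5

Installed following: "Binary Installation of a Kubernetes (K8s) Cluster (Based on containerd): A From-Scratch Tutorial (with Certificates)"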

| Hostname | IP | OS version | Installed services |
|---|---|---|---|
| master01 | 10.10.10.21 | rhel7.5 | nginx, etcd, api-server, scheduler, controller-manager, kubelet, proxy |
| master02 | 10.10.10.22 | rhel7.5 | nginx, etcd, api-server, scheduler, controller-manager, kubelet, proxy |
| master03 | 10.10.10.23 | rhel7.5 | nginx, etcd, api-server, scheduler, controller-manager, kubelet, proxy |
| node01 | 10.10.10.24 | rhel7.5 | nginx, kubelet, proxy |
| node02 | 10.10.10.25 | rhel7.5 | nginx, kubelet, proxy |

2. Prometheus + Grafana environment

Deployed following: "Prometheus+Grafana+Alertmanager monitoring system"

| Hostname | IP | OS version |
|---|---|---|
| jenkins | 10.10.10.10 | rhel7.5 |

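3. Prometheus deployment options

- Prometheus inside Kubernetes, monitoring the K8s cluster
  - Prometheus is deployed in the K8s cluster itself, for example in a `monitoring` namespace. Kubernetes creates a `default` ServiceAccount in every namespace and automatically mounts an API token and the cluster CA into each Pod. As a result, the API address, CA, and token do not have to be configured by hand. On v1.24 and later the mounted token is a projected token rather than an auto-generated Secret, and the ServiceAccount still needs RBAC permissions to read cluster resources.
- Prometheus outside Kubernetes, monitoring the K8s cluster
  - Prometheus runs outside the cluster, for example on a VM. In this case the API server address, CA certificate, and token must be specified manually in prometheus.yml. This article uses this setup: Prometheus runs on the `jenkins` host.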
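For comparison, the sketch below shows how the two modes differ in prometheus.yml when Kubernetes service discovery is used to find the API servers. The job names, the API server address `https://10.10.10.21:6443`, and the files `/etc/prometheus/k8s/ca.crt` and `/etc/prometheus/k8s/token` are assumptions. With Prometheus running in Docker, those files would have to be mounted into the container. You would use one job or the other, not both. This article itself does not use service discovery; it scrapes kube-state-metrics through a NodePort instead.

```yaml
scrape_configs:
  # Option A, in-cluster: no api_server is given, so service discovery uses
  # the Pod's own ServiceAccount token and CA. The same mounted files are
  # referenced explicitly for scraping.
  - job_name: "kubernetes-apiservers-incluster"   # hypothetical job name
    kubernetes_sd_configs:
      - role: endpoints
    scheme: https
    tls_config:
      ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    authorization:
      credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    relabel_configs:
      # Keep only the endpoints of the default/kubernetes Service (the API servers).
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

  # Option B, external: API server address, CA and token are set by hand,
  # once for service discovery and once for scraping the discovered targets.
  - job_name: "kubernetes-apiservers-external"    # hypothetical job name
    kubernetes_sd_configs:
      - role: endpoints
        api_server: https://10.10.10.21:6443          # assumption: API server on master01
        tls_config:
          ca_file: /etc/prometheus/k8s/ca.crt          # assumption: copied cluster CA
        authorization:
          credentials_file: /etc/prometheus/k8s/token  # assumption: token with RBAC read access
    scheme: https
    tls_config:
      ca_file: /etc/prometheus/k8s/ca.crt
    authorization:
      credentials_file: /etc/prometheus/k8s/token
    relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
```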

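4. Version compatibility

Check the compatibility matrix in the kube-state-metrics README to pick a release that matches your Kubernetes version: https://github.com/kubernetes/kube-state-metrics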

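5. Collection methods

- Exporter: a tool that converts a third-party component's metrics into a format Prometheus understands and exposes them as a scrape target. In Kubernetes, many components (such as etcd, kube-proxy, and Node Exporter) also produce important monitoring metrics.
- kube-state-metrics: a standalone component that exposes state metrics for Kubernetes resources (Pods, Services, Deployments, and so on). It is convenient to use, and it is the approach taken in this article.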
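Whichever method is used, Prometheus sees each exporter simply as an HTTP endpoint to scrape. The following is a minimal sketch that assumes node_exporter is running on the worker nodes on its default port 9100. This article does not install node_exporter, so the job name and targets are hypothetical.

```yaml
scrape_configs:
  - job_name: "node-exporter"        # hypothetical job name
    static_configs:
      - targets:                     # hypothetical: node_exporter on each worker node
          - "10.10.10.24:9100"
          - "10.10.10.25:9100"
```

The kube-state-metrics jobs configured later in this article follow the same pattern; only the target addresses differ.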

II. Configure kube-state-metrics

https://github.com/kubernetes/kube-state-metrics/tree/v2.9.2/examples/standard

1. Download the files

```shell
[root@master01 kube-state-metrics]# ls
cluster-role-binding.yaml  cluster-role.yaml  deployment.yaml  service-account.yaml  service.yaml
```

`[root@master01 kube-state-metrics]# cat cluster-role-binding.yaml`

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.9.2
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
```

`[root@master01 kube-state-metrics]# cat cluster-role.yaml`

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.9.2
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - serviceaccounts
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
- apiGroups:
  - authentication.k8s.io
  resources:
  - tokenreviews
  verbs:
  - create
- apiGroups:
  - authorization.k8s.io
  resources:
  - subjectaccessreviews
  verbs:
  - create
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - certificates.k8s.io
  resources:
  - certificatesigningrequests
  verbs:
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  - volumeattachments
  verbs:
  - list
  - watch
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - mutatingwebhookconfigurations
  - validatingwebhookconfigurations
  verbs:
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - networkpolicies
  - ingressclasses
  - ingresses
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - clusterrolebindings
  - clusterroles
  - rolebindings
  - roles
  verbs:
  - list
  - watch
```

`[root@master01 kube-state-metrics]# cat deployment.yaml`

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.9.2
  name: kube-state-metrics
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-state-metrics
  template:
    metadata:
      labels:
        app.kubernetes.io/component: exporter
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.9.2
    spec:
      automountServiceAccountToken: true
      containers:
      # The upstream example uses registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.9.2;
      # a Docker Hub image is used here instead.
      - image: bitnami/kube-state-metrics:2.9.2
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
        name: kube-state-metrics
        ports:
        - containerPort: 8080   # Kubernetes object metrics
          name: http-metrics
        - containerPort: 8081   # kube-state-metrics' own telemetry
          name: telemetry
        readinessProbe:
          httpGet:
            path: /
            port: 8081
          initialDelaySeconds: 5
          timeoutSeconds: 5
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - ALL
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 65534
          seccompProfile:
            type: RuntimeDefault
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: kube-state-metrics
```

`[root@master01 kube-state-metrics]# cat service-account.yaml`

```yaml
apiVersion: v1
automountServiceAccountToken: false
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.9.2
  name: kube-state-metrics
  namespace: kube-system
```

`[root@master01 kube-state-metrics]# cat service.yaml`

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.9.2
  name: kube-state-metrics
  namespace: kube-system
spec:
  # The upstream example uses a headless Service (clusterIP: None); NodePort is
  # used here so that Prometheus outside the cluster can reach kube-state-metrics.
  type: NodePort
  ports:
  - name: http-metrics
    port: 8080
    targetPort: 8080
    nodePort: 32080
    protocol: TCP
  - name: telemetry
    port: 8081
    targetPort: 8081
    nodePort: 32081
    protocol: TCP
  selector:
    app.kubernetes.io/name: kube-state-metrics
```

2. Install kube-state-metrics

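The ports are exposed through a NodePort Service: 32080 for metrics and 32081 for telemetry. Both fall within the default NodePort range of 30000-32767.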

```shell
[root@master01 kube-state-metrics]# kubectl apply -f ./
[root@master01 kube-state-metrics]# kubectl get po -n kube-system -o wide | grep kube-state-metrics
kube-state-metrics-57ddc8c4ff-krsh2   1/1   Running   0   9m5s   10.0.3.1   master02   <none>   <none>
[root@master01 kube-state-metrics]# kubectl get svc -n kube-system | grep kube-state-metrics
kube-state-metrics   NodePort   10.97.38.90   <none>   8080:32080/TCP,8081:32081/TCP   9m17s
```

3. Test the result

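The Pod was scheduled on master02, i.e. 10.10.10.22. Because the Service is of type NodePort, ports 32080 and 32081 are in fact open on every node, not only on master02.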

```shell
[root@master01 kube-state-metrics]# curl http://10.97.38.90:8080/healthz -w '\n'
OK
```

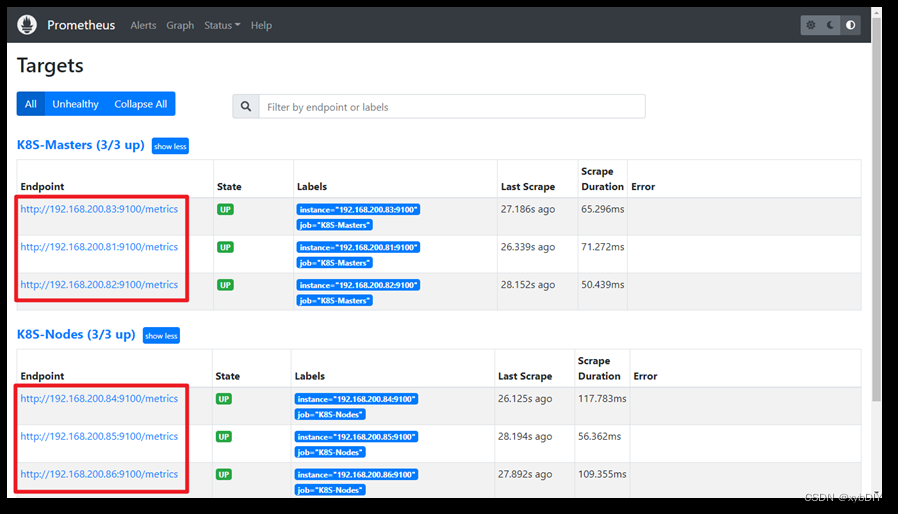

III. Configure Prometheus

1. Edit prometheus.yml

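`[root@jenkins ~]# cat Prometheus/prometheus.yml`

```yaml
# Excerpt (other settings omitted): these jobs go under scrape_configs.
scrape_configs:
  # NodePort 32080 -> port 8080: metrics about Kubernetes objects
  - job_name: "kube-state-metrics"
    static_configs:
      - targets: ["10.10.10.22:32080"]

  # NodePort 32081 -> port 8081: kube-state-metrics' own telemetry
  - job_name: "kube-state-telemetry"
    static_configs:
      - targets: ["10.10.10.22:32081"]
```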

2. Restart Prometheus

```shell
[root@jenkins ~]# docker ps -a
CONTAINER ID   IMAGE                        COMMAND                  CREATED       STATUS         PORTS                    NAMES
a0497377cd82   grafana/grafana-enterprise   "/run.sh"                13 days ago   Up 3 minutes   0.0.0.0:3000->3000/tcp   grafana
3e0e4270bd92   prom/prometheus              "/bin/prometheus --c…"   13 days ago   Up 3 minutes   0.0.0.0:9090->9090/tcp   prometheus
[root@jenkins ~]# docker restart prometheus
```

3. Open the Prometheus web UI (port 9090 on 10.10.10.10) and check the result

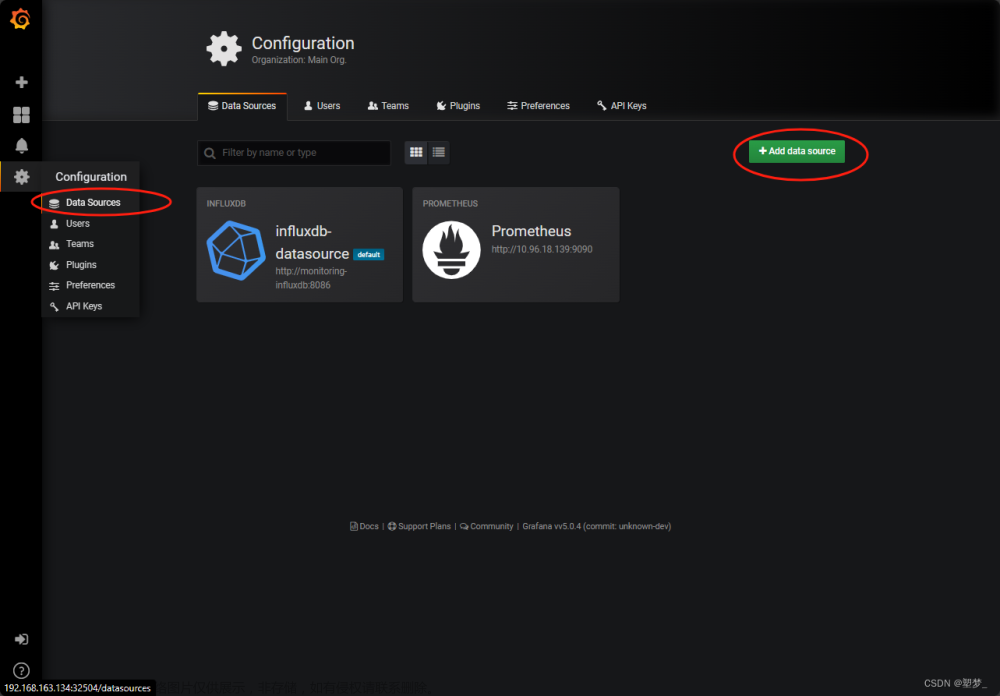

IV. Configure Grafana

Recommended dashboard templates (grafana.com dashboard IDs): 13332, 13824, 14518
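Each of these dashboards needs a Prometheus data source. You can create one in the UI, or define it declaratively with a Grafana provisioning file. The following is a minimal sketch. It assumes the file is mounted into the Grafana container under `/etc/grafana/provisioning/datasources/`, the default provisioning directory in the official image, and that the container can reach Prometheus at the host address `10.10.10.10:9090`. The data source name is hypothetical.

```yaml
# Assumed location: /etc/grafana/provisioning/datasources/prometheus.yml
apiVersion: 1
datasources:
  - name: Prometheus                 # hypothetical data source name
    type: prometheus
    access: proxy                    # Grafana's backend queries Prometheus
    url: http://10.10.10.10:9090     # assumption: Prometheus on the jenkins host
    isDefault: true
```

Grafana reads provisioning files at startup, so it would need a `docker restart grafana` after the file is added.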

1. Import the dashboard

Source: https://www.toymoban.com/news/detail-571762.html


2. View the result


This concludes the article on monitoring a Kubernetes (K8s) cluster (based on containerd) with an external Prometheus + Grafana.