I. Prerequisites

For a self-hosted MySQL instance, first enable binlog writing and set binlog-format to ROW mode. Configure my.cnf as follows:

[mysqld]
log-bin=mysql-bin # enable binlog
binlog-format=ROW # use ROW mode
server_id=1 # required for MySQL replication; must not duplicate the server ID used by the CDC client (canal's slaveId in a canal setup)

Grant the account that connects to MySQL the privileges of a MySQL slave (replica). If the account already exists, you can run the GRANT directly:

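-- '%' lets the account connect from any host; restrict it as needed
CREATE USER 'user'@'%' IDENTIFIED BY 'password';
-- MySQL 8 no longer accepts IDENTIFIED BY inside GRANT, so the password is set only in CREATE USER
GRANT SELECT, SHOW DATABASES, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'user'@'%';
FLUSH PRIVILEGES;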
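
Before starting the CDC job it can help to confirm that the server really runs with the settings above. The sketch below is a minimal, hypothetical helper (BinlogSettingsCheck and its method name are not part of the original project). It takes the values you would read with SHOW VARIABLES for log_bin and binlog_format as a plain map and reports what does not match. Reading the variables over JDBC is left out on purpose so that the example stays self-contained.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, not part of the original project.
final class BinlogSettingsCheck {

    // Returns a list of human-readable problems; an empty list means the settings look fine.
    static List<String> findProblems(Map<String, String> variables) {
        List<String> problems = new ArrayList<>();
        // SHOW VARIABLES LIKE 'log_bin' reports ON when the binlog is enabled.
        if (!"ON".equalsIgnoreCase(variables.get("log_bin"))) {
            problems.add("log_bin is not ON");
        }
        // Row-level change capture needs binlog_format=ROW.
        if (!"ROW".equalsIgnoreCase(variables.get("binlog_format"))) {
            problems.add("binlog_format is not ROW");
        }
        return problems;
    }

    public static void main(String[] args) {
        Map<String, String> variables = new LinkedHashMap<>();
        variables.put("log_bin", "ON");
        variables.put("binlog_format", "MIXED");
        // prints [binlog_format is not ROW]
        System.out.println(findProblems(variables));
    }
}
```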

II. Creating the project

Based on JDK 1.8 + Spring Boot 2.7.x + Elasticsearch 7.x.

1. Main pom dependencies

<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>8.0.29</version>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
    <version>2.7.2</version>
</dependency>
<!-- Flink CDC connector for MySQL -->
<dependency>
    <groupId>com.ververica</groupId>
    <artifactId>flink-connector-mysql-cdc</artifactId>
    <version>2.3.0</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-table-api-scala-bridge_2.12</artifactId>
    <version>1.14.4</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-table-planner_2.12</artifactId>
    <version>1.14.4</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-clients_2.12</artifactId>
    <version>1.14.4</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-java</artifactId>
    <version>1.14.4</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-streaming-java_2.12</artifactId>
    <version>1.14.4</version>
</dependency>

2. MySQL connection settings in yml

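mysql-cdc-es:
  infos:
    ip: 192.168.xxx.xxx
    port: 3306
    # name of the MySQL schema (database), e.g. mysql-es
    dbs: mysql-es
    user: username
    pwd: password
    # format: schema.table; replace table_name with the actual table name
    tables: mysql-es.table_name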

3. Configuration class for the MySQL connection settings

import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.cloud.context.config.annotation.RefreshScope;
import org.springframework.stereotype.Component;

@Data
@Component
@RefreshScope
// The properties sit under mysql-cdc-es.infos in the yml, so the prefix has to include "infos"
@ConfigurationProperties(prefix = "mysql-cdc-es.infos")
public class MysqlCdcInfo {

    private String ip;
    private int port;
    private String dbs;
    private String user;
    private String pwd;
    private String tables;
}
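
The tables property is a single string, while the tableList(...) method of the MySqlSource builder accepts one or more table names (String...). If several tables are configured as one comma-separated value, one option is to split the value before passing it on. The sketch below shows that step in isolation. TableListParser is a hypothetical name, and the comma-separated convention is an assumption for this example, not something the original project defines. Because table names may also be regular expressions, a pattern that itself contains a comma would not survive this simple split.

```java
import java.util.Arrays;

// Hypothetical helper, not part of the original project.
final class TableListParser {

    // Splits "db.t1, db.t2" into {"db.t1", "db.t2"}, trimming whitespace and dropping empty entries.
    static String[] parse(String tables) {
        if (tables == null || tables.trim().isEmpty()) {
            return new String[0];
        }
        return Arrays.stream(tables.split(","))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .toArray(String[]::new);
    }

    public static void main(String[] args) {
        // prints [mysql-es.user, mysql-es.order]
        System.out.println(Arrays.toString(parse("mysql-es.user, mysql-es.order")));
    }
}
```

With such a helper, the builder call could read .tableList(TableListParser.parse(mysqlCdcInfo.getTables())).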

4. Create the data change object

import lombok.Data;

@Data
public class DataChangeInfo {

    /**
     * Change type: 0 initial snapshot read, 1 insert, 2 update, 3 delete,
     * 4 operation that truncates an existing table in the source
     */
    private Integer operatorType;

    /**
     * Data before the change
     */
    private String beforeData;

    /**
     * Data after the change
     */
    private String afterData;

    /**
     * The affected data
     */
    private String data;

    /**
     * Binlog file name
     */
    private String fileName;

    /**
     * Current read position in the binlog
     */
    private Integer filePos;

    /**
     * Database name
     */
    private String database;

    /**
     * Table name
     */
    private String tableName;

    /**
     * Change time
     */
    private Long operatorTime;
}

5. Custom deserialization of MySQL change events

import com.alibaba.fastjson.JSONObject;
import com.google.common.collect.ImmutableMap;
import com.ververica.cdc.debezium.DebeziumDeserializationSchema;
import io.debezium.data.Envelope;
import lombok.extern.slf4j.Slf4j;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.util.Collector;
import org.apache.kafka.connect.data.Field;
import org.apache.kafka.connect.data.Schema;
import org.apache.kafka.connect.data.Struct;
import org.apache.kafka.connect.source.SourceRecord;

import java.util.List;
import java.util.Map;
import java.util.Optional;

@Slf4j
public class MysqlDeserialization implements DebeziumDeserializationSchema<DataChangeInfo> {

    public static final String TS_MS = "ts_ms";
    public static final String BIN_FILE = "file";
    public static final String POS = "pos";
    public static final String BEFORE = "before";
    public static final String AFTER = "after";
    public static final String SOURCE = "source";

    /**
     * Operation types: READ CREATE UPDATE DELETE TRUNCATE.
     * Change type: 0 initial snapshot read, 1 insert, 2 update, 3 delete,
     * 4 operation that truncates an existing table in the source
     */
    private static final Map<String, Integer> OPERATION_MAP = ImmutableMap.of(
            "READ", 0,
            "CREATE", 1,
            "UPDATE", 2,
            "DELETE", 3,
            "TRUNCATE", 4);

    /**
     * Deserializes the record and converts it into a change object
     *
     * @param sourceRecord sourceRecord
     * @param collector collector
     */
    @Override
    public void deserialize(SourceRecord sourceRecord, Collector<DataChangeInfo> collector) {
        String topic = sourceRecord.topic();
        // split() takes a regular expression, so the dot must be escaped
        String[] fields = topic.split("\\.");
        String database = fields[1];
        String tableName = fields[2];
        Struct struct = (Struct) sourceRecord.value();
        final Struct source = struct.getStruct(SOURCE);
        DataChangeInfo dataChangeInfo = new DataChangeInfo();
        // Operation type: READ CREATE UPDATE DELETE TRUNCATE
        Envelope.Operation operation = Envelope.operationFor(sourceRecord);
        String type = operation.toString().toUpperCase();
        int eventType = OPERATION_MAP.get(type);
        // fixme: normally the before/after images are not needed and the latest data is enough;
        // both are emitted here for demonstration purposes
        dataChangeInfo.setBeforeData(getJsonObject(struct, BEFORE).toJSONString());
        dataChangeInfo.setAfterData(getJsonObject(struct, AFTER).toJSONString());
        if (eventType == 3) {
            // A delete has no "after" image, so use the "before" image
            dataChangeInfo.setData(getJsonObject(struct, BEFORE).toJSONString());
        } else {
            dataChangeInfo.setData(getJsonObject(struct, AFTER).toJSONString());
        }
        dataChangeInfo.setOperatorType(eventType);
        dataChangeInfo.setFileName(Optional.ofNullable(source.get(BIN_FILE)).map(Object::toString).orElse(""));
        dataChangeInfo.setFilePos(
                Optional.ofNullable(source.get(POS))
                        .map(x -> Integer.parseInt(x.toString()))
                        .orElse(0)
        );
        dataChangeInfo.setDatabase(database);
        dataChangeInfo.setTableName(tableName);
        dataChangeInfo.setOperatorTime(Optional.ofNullable(struct.get(TS_MS))
                .map(x -> Long.parseLong(x.toString())).orElseGet(System::currentTimeMillis));
        // Emit the record
        collector.collect(dataChangeInfo);
    }

    /**
     * Extracts the data before or after the change from the record value
     *
     * @param value value
     * @param fieldElement fieldElement
     * @return JSONObject
     */
    private JSONObject getJsonObject(Struct value, String fieldElement) {
        Struct element = value.getStruct(fieldElement);
        JSONObject jsonObject = new JSONObject();
        if (element != null) {
            Schema afterSchema = element.schema();
            List<Field> fieldList = afterSchema.fields();
            for (Field field : fieldList) {
                Object afterValue = element.get(field);
                jsonObject.put(field.name(), afterValue);
            }
        }
        return jsonObject;
    }

    @Override
    public TypeInformation<DataChangeInfo> getProducedType() {
        return TypeInformation.of(DataChangeInfo.class);
    }
}
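
The deserializer derives the database and table names from the record topic, which it expects to consist of three dot-separated parts with the database second and the table third. The standalone sketch below isolates that step so it can be tried without Flink. TopicParser is a hypothetical name, and the topic value mysql_binlog_source.mysql-es.user is only an assumed example of such a topic. Because String.split takes a regular expression, the dot has to be escaped as "\\." in Java source.

```java
// Hypothetical helper, not part of the original project.
final class TopicParser {

    // Returns {database, table} for a topic of the form "<prefix>.<database>.<table>".
    static String[] databaseAndTable(String topic) {
        // An unescaped "." would match every character and produce an empty array.
        String[] fields = topic.split("\\.");
        if (fields.length < 3) {
            throw new IllegalArgumentException("Unexpected topic: " + topic);
        }
        return new String[] {fields[1], fields[2]};
    }

    public static void main(String[] args) {
        String[] parts = databaseAndTable("mysql_binlog_source.mysql-es.user");
        // prints mysql-es / user
        System.out.println(parts[0] + " / " + parts[1]);
    }
}
```

The length check is an addition in this sketch; the deserializer above indexes the array directly and would throw ArrayIndexOutOfBoundsException on a shorter topic.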

6. Custom user-defined sink function

import cn.hutool.core.bean.BeanUtil;
import cn.hutool.extra.spring.SpringUtil;
import com.alibaba.fastjson.JSONObject;
import lombok.extern.slf4j.Slf4j;
import org.apache.flink.streaming.api.functions.sink.SinkFunction;
import org.springframework.stereotype.Component;

@Slf4j
@Component
public class DataChangeSink implements SinkFunction<DataChangeInfo> {

    @Override
    public void invoke(DataChangeInfo dataChangeInfo, Context context) {
        // Change type: 0 initial snapshot read, 1 insert, 2 update, 3 delete, 4 truncate of an existing source table
        Integer operatorType = dataChangeInfo.getOperatorType();
        // TODO: process the data here. Required beans cannot be injected outside this method;
        // doing so raises an error because the sink has to be instantiated.
        // Fetch them with SpringUtil instead. For example, an interface that extends
        // ElasticsearchRepository<T, ID> can be obtained as shown below and then used
        // for insert, update and delete operations.
        // XXXXXSearchRepository repository = SpringUtil.getBean(XXXXXSearchRepository.class);
    }
}
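
Inside invoke, the operation type decides what happens to the target index: types 0, 1 and 2 carry the current row and can be written as an upsert, while type 3 removes the document. The sketch below illustrates that dispatch with a HashMap standing in for Elasticsearch, so that it runs without Spring or Flink. ChangeDispatcher, the "id" key and the use of an already parsed row map instead of the JSON string in DataChangeInfo are all assumptions made for this example.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: an in-memory map plays the role of the Elasticsearch index.
final class ChangeDispatcher {

    private final Map<String, Map<String, Object>> index = new HashMap<>();

    // operatorType follows the article's codes: 0 read, 1 insert, 2 update, 3 delete, 4 truncate.
    void apply(int operatorType, Map<String, Object> row) {
        // Assumption: every row has a primary key column called "id".
        String id = String.valueOf(row.get("id"));
        switch (operatorType) {
            case 0:
            case 1:
            case 2:
                // Snapshot reads, inserts and updates all become an upsert.
                index.put(id, row);
                break;
            case 3:
                index.remove(id);
                break;
            default:
                // Truncate (4) and unknown codes are ignored in this sketch.
                break;
        }
    }

    Map<String, Object> get(String id) {
        return index.get(id);
    }

    int size() {
        return index.size();
    }

    public static void main(String[] args) {
        ChangeDispatcher dispatcher = new ChangeDispatcher();
        Map<String, Object> row = new HashMap<>();
        row.put("id", 1);
        row.put("name", "alice");
        dispatcher.apply(1, row);
        System.out.println(dispatcher.size()); // prints 1
        dispatcher.apply(3, row);
        System.out.println(dispatcher.size()); // prints 0
    }
}
```

In the real sink, the put and remove calls would be replaced by the save and delete methods of the repository obtained through SpringUtil.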

7. Implement the MySQL change listener

import com.ververica.cdc.connectors.mysql.source.MySqlSource;
import com.ververica.cdc.connectors.mysql.table.StartupOptions;
import lombok.RequiredArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.springframework.boot.ApplicationArguments;
import org.springframework.boot.ApplicationRunner;
import org.springframework.stereotype.Component;

import java.util.concurrent.CompletableFuture;

@Slf4j
@Component
@RequiredArgsConstructor
public class MysqlEventListener implements ApplicationRunner {

    private final DataChangeSink dataChangeSink;
    private final MysqlCdcInfo mysqlCdcInfo;

    @Override
    public void run(ApplicationArguments args) {
        CompletableFuture.runAsync(() -> {
            try {
                StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
                // Default parallelism for operators that do not set their own
                env.setParallelism(2);
                MySqlSource<DataChangeInfo> mySqlSource = buildDataChangeSource(mysqlCdcInfo);
                DataStream<DataChangeInfo> streamSource = env
                        .fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "mysql-source")
                        // Read with a single source task
                        .setParallelism(1);
                // Use parallelism 1 for the sink to keep the messages in order
                streamSource.addSink(dataChangeSink).setParallelism(1);
                env.executeAsync("mysql-cdc-es");
            } catch (Exception e) {
                log.error("mysql --> es, Exception=", e);
            }
        }).exceptionally(ex -> {
            log.error("mysql --> es, async task failed", ex);
            return null;
        });
    }

    /**
     * Builds the change data source
     *
     * @return MySqlSource<DataChangeInfo>
     */
    private MySqlSource<DataChangeInfo> buildDataChangeSource(MysqlCdcInfo mysqlCdcInfo) {
        return MySqlSource.<DataChangeInfo>builder()
                .hostname(mysqlCdcInfo.getIp())
                .port(mysqlCdcInfo.getPort())
                .databaseList(mysqlCdcInfo.getDbs())
                // Regular expressions are supported
                .tableList(mysqlCdcInfo.getTables())
                .username(mysqlCdcInfo.getUser())
                .password(mysqlCdcInfo.getPwd())
                // initial: take a snapshot first (full import), then continue with incremental changes
                .startupOptions(StartupOptions.initial())
                .deserializer(new MysqlDeserialization())
                .serverTimeZone("GMT+8")
                .build();
    }
}

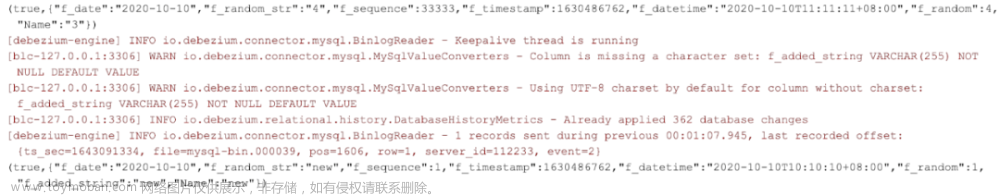

That's it, all done! Code: https://gitee.com/qianxkun/lakudouzi-components/tree/master/flink-cdc-mysql2es

Reference article: https://blog.51cto.com/caidingnu/6100996 (many thanks!)
