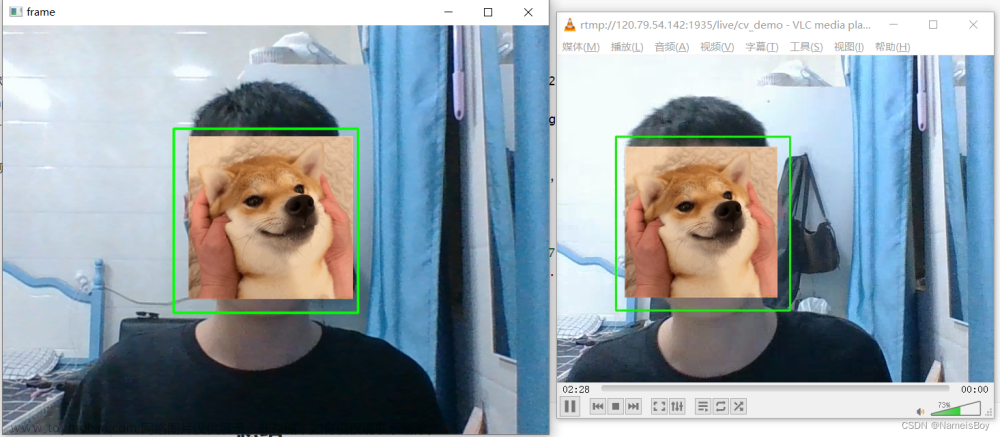

FFmpeg/OpenCV + C++: pulling and pushing live streams (with per-frame video processing)

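This article uses the FFmpeg libraries from C++ to pull a live video stream (any kind except WebRTC) and decode it, apply our own processing to each frame with OpenCV, encode the processed frames again, and finally push the result out as a new live stream. The details are in the code below.

Article source: https://www.toymoban.com/news/detail-594163.html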

```cpp
// FFmpeg is a C library, so its headers need C linkage
extern "C"
{
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libavutil/error.h>
#include <libavutil/mem.h>
#include <libavutil/time.h>
#include <libswscale/swscale.h>
}

// Core OpenCV modules (core, imgproc, highgui); no opencv_contrib modules are needed
#include <opencv2/opencv.hpp>

#include <cstring>
#include <iostream>
#include <queue>
#include <stdexcept>
#include <string>
#include <vector>
```

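```cpp
/// Convert a BGR cv::Mat to a YUV420P AVFrame.
/// The caller owns the returned frame and must release it with av_frame_free().
/// Width and height must be even (a requirement of the I420 conversion).
AVFrame *CVMatToAVFrame(const cv::Mat &inMat)
{
    const int width = inMat.cols;
    const int height = inMat.rows;

    // Create the AVFrame and fill in its parameters
    AVFrame *frame = av_frame_alloc();
    if (!frame)
        return nullptr;
    frame->width = width;
    frame->height = height;
    frame->format = AV_PIX_FMT_YUV420P;

    // Allocate the plane buffers with 64-byte aligned rows; a fresh buffer is already writable
    if (av_frame_get_buffer(frame, 64) < 0)
    {
        av_frame_free(&frame);
        return nullptr;
    }

    // Convert to planar I420 in a separate Mat so the caller's image is left untouched.
    // The result is one continuous buffer: Y (width*height), then U and V (width*height/4 each).
    cv::Mat yuv;
    cv::cvtColor(inMat, yuv, cv::COLOR_BGR2YUV_I420);
    const uint8_t *src = yuv.data;

    // Copy plane by plane and row by row: frame->linesize[p] may exceed the row width
    const int planeW[3] = {width, width / 2, width / 2};
    const int planeH[3] = {height, height / 2, height / 2};
    for (int p = 0; p < 3; ++p)
    {
        for (int y = 0; y < planeH[p]; ++y)
        {
            std::memcpy(frame->data[p] + y * frame->linesize[p], src, planeW[p]);
            src += planeW[p];
        }
    }
    return frame;
}
```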
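CVMatToAVFrame depends on the buffer layout that `cv::COLOR_BGR2YUV_I420` produces: a full-resolution Y plane of width × height bytes at offset 0, then a U plane and a V plane of (width/2) × (height/2) bytes each, starting at width × height and width × height × 5/4. A frame allocated by `av_frame_get_buffer()`, on the other hand, may pad its rows, so `frame->linesize[i]` can be larger than the plane width and the copy has to go row by row. The standalone sketch below uses neither FFmpeg nor OpenCV; `I420Layout`, `i420Layout`, `Plane` and `splitI420` are hypothetical names, and rounding rows up to a multiple of `align` only imitates the padding FFmpeg may add.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Offsets, sizes and dimensions of the Y, U and V planes inside one tightly
// packed I420 buffer. Hypothetical helper type for illustration.
struct I420Layout {
    std::size_t offset[3];
    std::size_t size[3];
    int width[3];
    int height[3];
};

I420Layout i420Layout(int width, int height)
{
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    const std::size_t frameSize = static_cast<std::size_t>(width) * height;
    I420Layout l{};
    l.width[0] = width;     l.height[0] = height;
    l.width[1] = width / 2; l.height[1] = height / 2;
    l.width[2] = width / 2; l.height[2] = height / 2;
    l.size[0] = frameSize;
    l.size[1] = l.size[2] = frameSize / 4;
    l.offset[0] = 0;
    l.offset[1] = frameSize;         // U follows Y
    l.offset[2] = frameSize * 5 / 4; // V follows U
    return l;
}

// A destination plane whose rows are linesize bytes apart, like
// AVFrame::data[i] together with AVFrame::linesize[i]. Hypothetical type.
struct Plane {
    std::vector<uint8_t> bytes;
    int linesize;
};

// Copy each plane of a packed I420 buffer into a plane whose row length is
// rounded up to a multiple of align, so rows must be copied one at a time.
std::vector<Plane> splitI420(const uint8_t *src, int width, int height, int align)
{
    assert(align > 0);
    const I420Layout l = i420Layout(width, height);
    std::vector<Plane> planes(3);
    for (int p = 0; p < 3; ++p) {
        const int linesize = (l.width[p] + align - 1) / align * align;
        planes[p].linesize = linesize;
        planes[p].bytes.assign(static_cast<std::size_t>(linesize) * l.height[p], 0);
        const uint8_t *row = src + l.offset[p];
        for (int y = 0; y < l.height[p]; ++y) {
            std::memcpy(planes[p].bytes.data() + static_cast<std::size_t>(y) * linesize,
                        row, l.width[p]);
            row += l.width[p]; // source rows are packed with no padding
        }
    }
    return planes;
}
```

For a 1920×1080 frame the U plane starts at byte 2,073,600 and the V plane at 2,592,000, and the whole buffer is 3,110,400 bytes, i.e. 1.5 bytes per pixel.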

```cpp
/// Pull inputUrl, decode it, process every video frame with OpenCV,
/// re-encode it as H.264 and push it to outputUrl as FLV over RTMP.
void rtmpPush2(const std::string &inputUrl, const std::string &outputUrl)
{
    const char *inUrl = inputUrl.c_str();
    const char *outUrl = outputUrl.c_str();

    // av_register_all() is no longer needed (deprecated in FFmpeg 4.0, removed in 5.0);
    // only the network layer has to be initialised
    avformat_network_init();

    int videoindex = -1;                // index of the video stream in the input
    AVFormatContext *input_ctx = NULL;  // demuxer for the pulled stream
    AVCodecContext *pCodecCtx = NULL;   // decoder
    SwsContext *vsc = NULL;             // pixel conversion: decoded format -> BGR24
    AVCodecContext *outputVc = NULL;    // H.264 encoder
    AVFormatContext *output = NULL;     // FLV muxer writing to RTMP
    AVPacket *pkt = av_packet_alloc();  // packet read from the input
    AVPacket *pack = av_packet_alloc(); // packet produced by the encoder
    AVFrame *pFrame = av_frame_alloc(); // decoded frame
    AVFrame *yuv = NULL;                // frame passed to the encoder
    bool headerWritten = false;

    // Throw with FFmpeg's error text when a call returns a negative error code
    auto check = [](int ret, const char *what) {
        if (ret < 0)
        {
            char buf[AV_ERROR_MAX_STRING_SIZE] = {0};
            av_strerror(ret, buf, sizeof(buf));
            throw std::runtime_error(std::string(what) + ": " + buf);
        }
    };

    try
    {
        if (!pkt || !pack || !pFrame)
            throw std::runtime_error("out of memory");

        /// 1. Open the input stream, read its header and probe the stream info
        check(avformat_open_input(&input_ctx, inUrl, NULL, NULL), "avformat_open_input");
        check(avformat_find_stream_info(input_ctx, NULL), "avformat_find_stream_info");
        av_dump_format(input_ctx, 0, inUrl, 0); // print the stream layout to the terminal

        /// 2. Locate the video stream and open a decoder for it
        for (unsigned int i = 0; i < input_ctx->nb_streams; i++)
        {
            if (input_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)
            {
                videoindex = (int)i;
                break;
            }
        }
        if (videoindex < 0)
            throw std::runtime_error("no video stream in input");
        AVStream *inStream = input_ctx->streams[videoindex];

        const AVCodec *pCodec = avcodec_find_decoder(inStream->codecpar->codec_id);
        if (!pCodec)
            throw std::runtime_error("decoder not found");
        pCodecCtx = avcodec_alloc_context3(pCodec);
        if (!pCodecCtx)
            throw std::runtime_error("avcodec_alloc_context3 (decoder) failed");
        check(avcodec_parameters_to_context(pCodecCtx, inStream->codecpar), "avcodec_parameters_to_context");
        check(avcodec_open2(pCodecCtx, pCodec, NULL), "avcodec_open2 (decoder)");

        // Output frame rate: guessed from the input, 25 fps if unknown
        AVRational fps = av_guess_frame_rate(input_ctx, inStream, NULL);
        if (fps.num <= 0 || fps.den <= 0)
            fps = AVRational{25, 1};

        /// 3. Configure and open the H.264 encoder
        const AVCodec *codec = avcodec_find_encoder(AV_CODEC_ID_H264);
        if (!codec)
            throw std::runtime_error("Can't find h264 encoder!");
        outputVc = avcodec_alloc_context3(codec);
        if (!outputVc)
            throw std::runtime_error("avcodec_alloc_context3 (encoder) failed");
        outputVc->flags |= AV_CODEC_FLAG_GLOBAL_HEADER; // FLV keeps SPS/PPS in the stream header
        outputVc->thread_count = 8;
        outputVc->bit_rate = 50 * 1024 * 8; // 409,600 bit/s = 50 KiB of compressed video per second
        outputVc->width = pCodecCtx->width;
        outputVc->height = pCodecCtx->height;
        outputVc->time_base = av_inv_q(fps); // one tick per frame
        outputVc->framerate = fps;
        /// The following parameters control the picture quality of the live stream
        outputVc->gop_size = 30; // group of pictures: one keyframe every 30 frames
        outputVc->max_b_frames = 1;
        outputVc->qmax = 51;
        outputVc->qmin = 10;
        outputVc->pix_fmt = AV_PIX_FMT_YUV420P;
        check(avcodec_open2(outputVc, codec, NULL), "avcodec_open2 (encoder)");

        /// 4. Create the FLV muxer, add the video stream and write the header
        check(avformat_alloc_output_context2(&output, NULL, "flv", outUrl), "avformat_alloc_output_context2");
        AVStream *vs = avformat_new_stream(output, NULL);
        if (!vs)
            throw std::runtime_error("avformat_new_stream failed");
        check(avcodec_parameters_from_context(vs->codecpar, outputVc), "avcodec_parameters_from_context");
        vs->codecpar->codec_tag = 0;
        vs->time_base = outputVc->time_base; // only a hint; the FLV muxer switches to 1/1000
        av_dump_format(output, 0, outUrl, 1);
        if (!(output->oformat->flags & AVFMT_NOFILE))
            check(avio_open(&output->pb, outUrl, AVIO_FLAG_WRITE), "avio_open"); // connect to the server
        check(avformat_write_header(output, NULL), "avformat_write_header");
        headerWritten = true;

        /// Alternative: let OpenCV pull and decode instead of steps 1-2, e.g.
        ///   cv::VideoCapture capture(inUrl);
        ///   cv::Mat frame;
        ///   while (capture.read(frame)) { /* process, then encode as below */ }
        /// For a live source, reopen the capture when read() fails.

        /// 5. Pull -> decode -> process -> encode -> push
        int64_t vpts = 0;
        int ret = 0;
        while (av_read_frame(input_ctx, pkt) >= 0) // stops at end of stream or on a read error
        {
            if (pkt->stream_index != videoindex)
            {
                av_packet_unref(pkt); // skip audio and other streams
                continue;
            }
            ret = avcodec_send_packet(pCodecCtx, pkt);
            av_packet_unref(pkt);
            if (ret < 0)
            {
                std::cerr << "decode error: " << ret << std::endl;
                continue;
            }
            // One packet can produce zero or more decoded frames
            while (avcodec_receive_frame(pCodecCtx, pFrame) == 0)
            {
                // Decoded frame -> BGR24 cv::Mat; the conversion context is reused between frames
                vsc = sws_getCachedContext(vsc,
                    pFrame->width, pFrame->height, static_cast<AVPixelFormat>(pFrame->format), // source
                    pFrame->width, pFrame->height, AV_PIX_FMT_BGR24,                           // destination
                    SWS_BICUBIC, NULL, NULL, NULL);
                if (!vsc)
                    throw std::runtime_error("sws_getCachedContext failed!");
                cv::Mat img(pFrame->height, pFrame->width, CV_8UC3);
                uint8_t *dst[1] = {img.data};
                int dstStride[1] = {(int)img.step[0]};
                sws_scale(vsc, (const uint8_t *const *)pFrame->data, pFrame->linesize,
                          0, pFrame->height, dst, dstStride);

                /// TODO: image processing module.
                /// Process img here as needed, e.g. overlay elements on the video or run detection.

                // BGR -> YUV420P
                yuv = CVMatToAVFrame(img);
                if (!yuv)
                    throw std::runtime_error("CVMatToAVFrame failed");
                yuv->pts = vpts++; // in encoder time_base, i.e. the frame number

                // H.264 encoding: one frame can produce zero or more packets
                ret = avcodec_send_frame(outputVc, yuv);
                av_frame_free(&yuv);
                if (ret < 0)
                {
                    std::cerr << "avcodec_send_frame failed: " << ret << std::endl;
                    continue;
                }
                while (avcodec_receive_packet(outputVc, pack) == 0)
                {
                    // Push: rescale pts/dts/duration from the encoder to the stream time_base
                    pack->stream_index = vs->index;
                    av_packet_rescale_ts(pack, outputVc->time_base, vs->time_base);
                    // Takes ownership of the packet and leaves pack blank, even on error
                    if (av_interleaved_write_frame(output, pack) < 0)
                        std::cerr << "failed to send packet" << std::endl;
                }
            }
        }
    }
    catch (const std::exception &e)
    {
        std::cerr << e.what() << std::endl;
    }

    // Release everything, on the normal and on the error path
    if (headerWritten)
        av_write_trailer(output);
    if (output && !(output->oformat->flags & AVFMT_NOFILE))
        avio_closep(&output->pb);
    avformat_free_context(output);
    avcodec_free_context(&outputVc);
    sws_freeContext(vsc);
    avcodec_free_context(&pCodecCtx);
    avformat_close_input(&input_ctx);
    av_frame_free(&pFrame);
    av_frame_free(&yuv);
    av_packet_free(&pack);
    av_packet_free(&pkt);
    avformat_network_deinit();
}
```
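In the push step, each encoded packet's pts, dts and duration are converted from the encoder time base (`outputVc->time_base`, where pts advances by one per frame) to the output stream's time base (`vs->time_base`), which the FLV muxer sets to 1/1000, i.e. milliseconds, when the header is written. FFmpeg's `av_rescale_q` computes a × bq / cq rounded to the nearest integer, with halves rounded away from zero. The sketch below reproduces that arithmetic in plain C++ so the numbers can be checked by hand; `Rational` and `rescaleQ` are hypothetical stand-ins for `AVRational` and `av_rescale_q`, and unlike FFmpeg they make no attempt to avoid 64-bit overflow.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for FFmpeg's AVRational.
struct Rational {
    int64_t num;
    int64_t den;
};

// Convert timestamp a from time base bq to time base cq, i.e. a * bq / cq,
// rounding to the nearest integer with halves away from zero.
// No overflow protection: intended for small illustrative values only.
int64_t rescaleQ(int64_t a, Rational bq, Rational cq)
{
    assert(bq.den > 0 && cq.num > 0);
    const int64_t num = a * bq.num * cq.den;
    const int64_t den = bq.den * cq.num;
    // Integer division truncates toward zero, so add half the divisor to the
    // magnitude first and restore the sign afterwards.
    return num >= 0 ? (num + den / 2) / den : -((-num + den / 2) / den);
}
```

At 30 fps, `rescaleQ(n, {1, 30}, {1, 1000})` yields 0, 33, 67, 100, ... for n = 0, 1, 2, 3. Each millisecond value is rounded, but the error never accumulates, because every timestamp is computed from the frame number rather than by repeatedly adding a rounded 33 ms step.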

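```cpp
int main()
{
    // AV_LOG_TRACE prints every FFmpeg log message; AV_LOG_INFO is usually enough
    av_log_set_level(AV_LOG_TRACE);
    // Pull from the first URL, push the processed stream to the second
    rtmpPush2("rtmp://39.170.104.236:28081/live/456",
              "rtmp://39.170.104.237:28081/live/dj/1ZNBJ7C00C009X");
    return 0;
}
```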
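The encoder settings in rtmpPush2 (`bit_rate = 50 * 1024 * 8`, `gop_size = 30`) are easier to judge in per-frame and per-second terms. The two helpers below are hypothetical and only do that arithmetic; the 25 fps used in the example is an assumption, since the real rate comes from the input stream.

```cpp
#include <cassert>
#include <cstdint>

// Average number of compressed bytes available per frame at a target bitrate.
double bytesPerFrame(int64_t bitRate, double fps)
{
    assert(bitRate > 0 && fps > 0);
    return static_cast<double>(bitRate) / 8.0 / fps;
}

// Time between two keyframes, in milliseconds, for a GOP of gopSize frames.
double keyframeIntervalMs(int gopSize, double fps)
{
    assert(gopSize > 0 && fps > 0);
    return gopSize * 1000.0 / fps;
}
```

At 25 fps the 50 KiB/s target leaves 2,048 bytes per frame on average (keyframes take more, the frames between them less), and a new keyframe, where a viewer joining mid-stream can start decoding, arrives every 1.2 s.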

