Lab environment

An initialized cluster:

(vms21) 192.168.26.21: master1

(vms22) 192.168.26.22: worker1

(vms23) 192.168.26.23: worker2

A host with the k8s client tools installed:

(vms41) 192.168.26.41: client

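1. Authentication

Overview

When we log in to the master, it seems that management operations need no authentication at all. That is because we are logged in to the master as root; if we run kubectl commands as another user or on another node, the connection is refused

As shown in the figure below:

Take the desktop WeChat client as an example: the account used to log in to the PC is not the account used to log in to WeChat. We first log in to the PC, then log in to WeChat with a WeChat account

k8s works the same way: after logging in to the OS, we still have to log in with a k8s account. A credential for root is already set up in k8s by default, so logging in to the OS as root takes us straight into k8s and the authentication step goes unnoticed, while an account that has not been set up in k8s fails

So how does authentication work in a k8s environment?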

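token authentication

There are two ways for a user to authenticate by logging in:

(1) basic-auth-file: enter a username and password

(2) token-auth-file: enter a token

The username and password, or the token, are configured in /etc/kubernetes/manifests/kube-apiserver.yaml on the master

basic-auth-file (username and password) was available only before k8s v1.19 (v1.19 itself excluded) and has since been removed, so only the token method needs to be covered here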

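Step 1: generate a token on the master

openssl rand -hex 10

# Output:

4a557575eaa52fe0555f

Step 2: on the master, create an authentication file /etc/kubernetes/pki/xxx.csv and write the generated token into it

For example, create an authentication file bb.csv for the user mary

vim /etc/kubernetes/pki/bb.csv

# Line format in the file:

# token,user,UID

4a557575eaa52fe0555f,mary,3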
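
The file format above can be tried out without touching a real cluster. The following is a minimal sketch, assuming a scratch file from mktemp stands in for /etc/kubernetes/pki/bb.csv and /dev/urandom stands in for openssl rand; the user name mary and UID 3 are taken from the example above.

```shell
#!/bin/sh
# Sketch only: writes to a scratch file, not to /etc/kubernetes/pki/bb.csv.
token_file="$(mktemp)"

# 10 random bytes printed as 20 hex characters (same shape as `openssl rand -hex 10`).
token="$(od -An -N10 -tx1 /dev/urandom | tr -d ' \n')"

# One line per user: token,user,UID
printf '%s,%s,%s\n' "$token" "mary" "3" > "$token_file"

# The token is a credential, so keep the file readable by its owner only.
chmod 600 "$token_file"

# Sanity check: the line must have exactly three comma-separated fields.
field_count="$(awk -F, '{ print NF }' "$token_file")"
echo "token length: ${#token}, fields per line: $field_count"
```

On the master, the same line would go into the file that --token-auth-file points to.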

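Step 3: on the master, edit /etc/kubernetes/manifests/kube-apiserver.yaml and add the path of the authentication file under spec.containers.command

vim /etc/kubernetes/manifests/kube-apiserver.yaml

# Insert under spec.containers.command:

- --token-auth-file=/etc/kubernetes/pki/bb.csv

Step 4: restart so the change takes effect

systemctl restart kubelet

Test:

Start another virtual machine as the client (vms41)

Step 1: install the k8s client tools

# Add the China-mirror yum repository file k8s.repo under /etc/yum.repos.d/ (note: the command below first deletes every existing repo file in that directory)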

rm -rf /etc/yum.repos.d/* ; wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/

yum install -y kubectl-1.24.2-0 --disableexcludes=kubernetes

Step 2: look up the k8s server address on the master

kubectl cluster-info

# Output:

Kubernetes control plane is running at https://192.168.26.21:6443

CoreDNS is running at https://192.168.26.21:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

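Step 3: log in to k8s and run the command: get pods -n kube-system

# -s: the k8s server to connect to

# --token: authenticate with a token

kubectl -s https://192.168.26.21:6443 --token=4a557575eaa52fe0555f get pods -n kube-system

The connection fails at this point:

Unable to connect to the server: x509: certificate signed by unknown authority

Communication with the apiserver, like communication between all k8s components (kube-apiserver, kube-controller-manager, ...), is protected by TLS certificate verification. The client has not been given the cluster's CA certificate, so it cannot verify the certificate the apiserver presents

We can connect by skipping that verification

# --insecure-skip-tls-verify=true: skip TLS certificate verification

kubectl --insecure-skip-tls-verify=true -s https://192.168.26.21:6443 --token=4a557575eaa52fe0555f get pods -n kube-system

If the following appears:

Error from server (Forbidden): pods is forbidden: User "mary" cannot list resource "pods" in API group "" in the namespace "kube-system"

it means the user mary lacks permission; grant mary access on the master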

kubectl create clusterrolebinding cbind1 --clusterrole cluster-admin --user=mary

After the grant, the connection works

kubectl --insecure-skip-tls-verify=true -s https://192.168.26.21:6443 --token=4a557575eaa52fe0555f get pods -n kube-system

# Output:

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-56cdb7c587-xk97l 1/1 Running 18 (27m ago) 27d

calico-node-4vfw4 1/1 Running 23 (27m ago) 27d

calico-node-69h65 1/1 Running 17 (27m ago) 27d

calico-node-k8btq 1/1 Running 17 (27m ago) 27d

coredns-74586cf9b6-rlkhp 1/1 Running 17 (27m ago) 27d

coredns-74586cf9b6-x5vld 1/1 Running 17 (27m ago) 27d

etcd-vms21.rhce.cc 1/1 Running 22 (27m ago) 27d

kube-apiserver-vms21.rhce.cc 1/1 Running 1 (27m ago) 22h

kube-controller-manager-vms21.rhce.cc 1/1 Running 23 (27m ago) 27d

kube-proxy-d9lsg 1/1 Running 18 (27m ago) 27d

kube-proxy-f6dbr 1/1 Running 18 (27m ago) 27d

kube-proxy-lgjsd 1/1 Running 17 (27m ago) 27d

kube-scheduler-vms21.rhce.cc 1/1 Running 23 (27m ago) 27d

metrics-server-58556b7dd4-cpth2 1/1 Running 16 (27m ago) 27d

Remove mary's permission

kubectl delete clusterrolebinding cbind1

kubeconfig 认证方式

k8s中各组件若想要相互通信,则需要TLS认证—mTLS(双向认证)

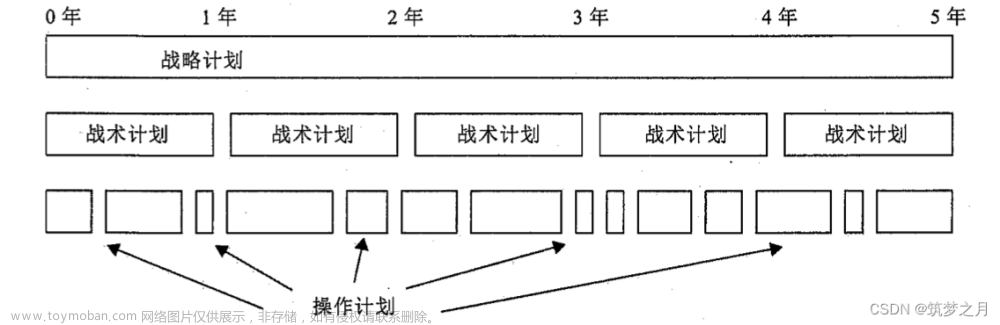

什么是mTLS双向认证?如下图:

apiserver组件要与kubelet组件相互通信,首先由一个权威的机构(CA)来给各组件颁发证书,包含用户证书和CA证书,kubelet连接到apiserver,需要出示自己的用户证书,apiserver通过自己的CA证书来验证对方用户证书的真伪性,同样,apiserver也需要向kubelet出示用户证书,kubelet使用自己CA证书来验证对方用户证书,这种相互认证对方证书即双向认证

同样的,若有某远端客户端连接到apiserver,也需要进行双向认证

使用kubeconfig的认证方式,我们需要编写kubeconfig文件

因为k8s组件间需要TLS认证,因此kubeconfig文件中需要包含三部分信息:

1.cluster信息:

(1)集群的地址

(2)CA证书(CA颁发的证书,用于验证对方出示的用户证书)

2.上下文信息:

(1)关联了集群信息与用户信息

(2)其他一些默认的用户信息,如所处的命名空间等

3.用户信息:

(1)用户私钥

(2)用户证书(CA颁发的证书,出示给对方以供验证)

查看kubeconfig文件结构

kubectl config view

#输出:

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://192.168.26.21:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

namespace: net

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

When we log in to k8s as root, kubeconfig authentication is what is used by default, and the kubeconfig file in use is "/etc/kubernetes/admin.conf"

View /etc/kubernetes/admin.conf

vim /etc/kubernetes/admin.conf

# Content:

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeU1EY3dOakE1TkRjME4xb1hEVE15TURjd016QTVORGMwTjFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTW1DCjNOZFdJcXpVV1B6Yk1hYS9jZTR3NW14ZjBRUUdHTkhqb1lhOHR6S0c3VDNObVBMTDRpTHlhQ05ESUUxeTBLRlEKK1RtZGFCSll4M1U0ZE5ZTHNTZEVWcUI1ajNSem5xNUo2L1BPbTBjVW5yY0VxUFFYN0w5MXNNZU90QlZOdWYyRApRc01ZV3lpK3NXUVMxYVhqTHZyaUdWYXRGd0dDSmhTbHlLTENvODdNOHdHbjZzeWlyaXhNdG1sZURLdlJMYkZRCnRhMzRWSVRlQVg3R3VsckJKQmZEMmRxZXY5K0EzZytWMU5KeXo5TXJ0Tkh5THM5ZWNUcnZpbE1JTW55MmUyMDcKZHRGc2V6MmhyenZhRkpIdzVzcDE3enozUHZXcS83ang1YU9lWEdtaU1NemxQSWhJV1JlbU11UWhmVjRsekVrRApzYUtrV05NNEpvSDlsdDZpb21NQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZMUmlpc0hQMmVERTE4THBGZVFHcVg2WDR4SXFNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBSTBMWXU0Q3ArL1ZZME9oaEU5WgpZT09Ob2JmTTg5SG9wVnFCVkZtd1Q1cGliS1pXYmZidGQ5bjg5T3p0WlJrSlBLOGNpOCtwRmpleDJFVGNzRWVTCkxveisyRFN3T1VrSnJ2OHl1VjJPZDI2Q3hIMDFEZ1NjcTJMSlRhNG1EVi93b0ZFYlNGTVVyaUVtRXZRdHZsengKMTlMTWJTOUdVdWtCaURhVWp2U0xqOVViS3lWZ3JnUVdWUlJaY0hHamY5c3dYRmU4NVdUQVJiR1Q2R09ZVnZyQQoxa3JSQlhZSGhKSGxKeEptVXJTSDc5c2pHeGdCQy9qR1ppZURPa2RNVWhDbTFFQ3BTcnBFakhKTml2KzY3Nnh1CkdUbmR5ejk2TFZUM1A3U0phV0tEcThvNTFNcmRNTExiZGVWSE04TkVrWTR1b3UxTkUrVEJtU2lCQlQvL2ZHRnUKdXUwPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.26.21:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJVENDQWdtZ0F3SUJBZ0lJUnVuazhVcGVwWFF3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TWpBM01EWXdPVFEzTkRkYUZ3MHlNekEzTURZd09UUTNORGxhTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXEwdnBWZHVRdFJlMHVWY1QKdHBjWURFU05SM1J4Q1pBZ0FkOEw4Visyc0VlelRKKzhld1d2NEs4ZGY0alVjTXREQUdreUFNZWRJd3hHc2JDVgp1UU1HY2V4YzVyWUNiUm81ekNGMUhFM09OMmZvelZtSWVLL0NyUGRJUnJQdFVaS1NDVUlpNDhIL1dvN1ltdG9rCnhUaFZhUW84T0NZSFFtZThPbjZRUW56MTdpTEhSV241NUE0QjlYWlVCRTNUaXFEUGlWbWlJemJLZ1BhSFJDV0IKRWxwUS9XUXNrV1B3WUJkMXR3cFhLRzBsQ2xwcUI3TTJocEo1RmM4WVBKL2pEeE9DTHFZeXdmQWFhUHoyVjNxdQpUcWppaXM4aSt4bEJrbGxXUERldFhwWHB3Y0JTVnIwS3FJRE9BTFdhMGRwWDRoekFnMVB2Y0c3eG9aZkpWeTRzCnNwdFJKUUlEQVFBQm8xWXdWREFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RBWURWUjBUQVFIL0JBSXdBREFmQmdOVkhTTUVHREFXZ0JTMFlvckJ6OW5neE5mQzZSWGtCcWwrbCtNUwpLakFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBY003Vy82YlcwemUyUVMxMThKYlBMeFJlWGV4L2ZTbXZzMFNBCi9qQWlEZXM4RDU4dkVuZmVwTGp1TUR0MTNQRXdyUW5QQXQxY1J4UElqODlwR0FhS2dkNDV3MVE5RzBrbGNsR2UKelcrNkl2TzM5KzVKT1ozSCtzK3UzQlJkTzZJc0RiREN2dE5tVm0vRmt4QTZsSnAwbG9jWE44SW1neWE4SXYxMgo4Y0t1MUx6YWZnK0ZkOEpYcUoyMEt6MVJCZjN3bm5NR3lXZXRkYUN1MmZGb242QVEyaFBaSTNETWdRU01Zbzc0CnErOTdCYm9maklGdkxFclhCRm56dlRLRkQrSzRWcVM3MDQ5N0lDZ3RndUkwbjJ5Zk5LaG1zZmloUGtZQ0k5bG4KdXF1VURYdXpBYWFaZklSbW5WVm5EcldMZktDd1ZPaUFVUmg2Q1Y1YUhrTVcyRS8zUWc9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBcTB2cFZkdVF0UmUwdVZjVHRwY1lERVNOUjNSeENaQWdBZDhMOFYrMnNFZXpUSis4CmV3V3Y0SzhkZjRqVWNNdERBR2t5QU1lZEl3eEdzYkNWdVFNR2NleGM1cllDYlJvNXpDRjFIRTNPTjJmb3pWbUkKZUsvQ3JQZElSclB0VVpLU0NVSWk0OEgvV283WW10b2t4VGhWYVFvOE9DWUhRbWU4T242UVFuejE3aUxIUlduNQo1QTRCOVhaVUJFM1RpcURQaVZtaUl6YktnUGFIUkNXQkVscFEvV1Fza1dQd1lCZDF0d3BYS0cwbENscHFCN00yCmhwSjVGYzhZUEovakR4T0NMcVl5d2ZBYWFQejJWM3F1VHFqaWlzOGkreGxCa2xsV1BEZXRYcFhwd2NCU1ZyMEsKcUlET0FMV2EwZHBYNGh6QWcxUHZjRzd4b1pmSlZ5NHNzcHRSSlFJREFRQUJBb0lCQUY2OWVvMXhCY1VUR25LVAoxYVJjUndHcC9KV3pzajAreUdVZ2p3TnVFNlhGMGtZajV1UUh6akd2eU5uYnZOdXhvQm9mRkhmWDczSU4vUitUCjhndEV3QkRNVU1tTml5UDZxRkxkZ2w4b0xWRDVtSW5TNWljUjF0TkJaV2t0WkttRUxsOE9oQ3VDQlpCNWh4V0cKWjJYbzlWeEdPKzQySWplNUZpTW9Fdk9qRjRZZ01BSmlhTkUwR0xKQldQTDFSYUxqWWdlcVVKdTRuWmVUZUR4LwpEaVdBK0tyTmRBRUpRcXkyTmxmR0JMV25kVHo0N3lLRERzcmhMVU9OOUdFdVQzN0g0SitmR3pJT1JTeDdwSEJ2CjJqZ1lQNnlHbWFOV0Q1bjVKeWhDN0VTdW5NaXFwNEI2WTBUYTNKeVoxcVI4c0p1QUJxUkVqQ3FpTHpXMCs4T3IKS2lnWWhOVUNnWUVBeERKVUJZYlV5ODlqVWYrN2ltalBJMzlJemZ3NFY5VldpaEJtUEpWa3BiNW13cDE5U1d0RApadUZ4c0JPMklRMUcrVlltQmNPZzVIVTBnc0NNdllHelRES3pZQnNVUVRKRVBGNTdBa0tZNGNqcit5OTlXL2F0CmRsdHJYMGhCcGFSMWR4YkVxaWxidm5ydTdhRHN5QzBMbUdLNjQxRXNXZmFkRGltTmxva2YvSDhDZ1lFQTM0S1MKNTlTblQ5cXppSkxJa0tXOE1mQ1lhbVZaT05VK3B5VFpsZU5vMzZ4NWE5c3YyREM0YjM1eW1rekJnUjhqVFZzZgozWklwZ2pzbHRGeVQyZ21ubXJqck5lcU8zdnFISTk2WXNhWHU2TFFjKzdtUEY3N2haUGE5dlBQV0JKSGJ3SzNqCkY0WUxSMVhmdlh0OGJsejRWbXZEcXRxZG44VC9NdENpUXZ1N2NGc0NnWUFDRktLTmVIT1RRYnpFNXRoZlRHTTkKOWlDWWhwODJWejNXc3Z2U2txY0JsdlpTQkFlTEdzY1pOVFRXY0M4VFJLZkhCeUhhRjh3Q0FEZ3hWc2RuVHhQTwpzQTcwNnZTWkNHWnUyWFdtZlh4UGtLam4vZ1h2VHJ0aU1PLy9qNjJhaXhidnoxOEFpdlc5SEdLaVJIMmVWZFAwCnByOWluNzYvcVh6YTVKZnF5OE42RXdLQmdRQ3FseHBRM3ptajlTUTZCTzRYbUtkKzVrY3VUWlEva0dKMVorYTYKUkF0elRFeVFTWWJHMXNpdU1EQ0FIRDFFcytOWjAwY0s4ZGZFa2loQTlMZlVIckpSb1BuRStQVjZzblFhcUhhYQpnQzlNWk13S1JLSTJXWFhtZlh4cmp4KzE5UzFvYms5NVVOR2k3S1FNRndmdG8vL1cxZ0ZOa2ZYa1Q1TUgwYjFHCnFxTnhLUUtCZ1FDNGVDbmZadW1LbGxFeC9
CbVB6U2VYSDVYZFh3d2ZVK3UyRU5BL3JYRXJ4dmtmRUVGNEN5OVkKdzB3MXJFRlJnajNpYWRKNDJXQzBGQWsyUFFEMW5pSEtXVG5UVG1ZQURWRTNyYkhMY1RCRFJFaURyalhtazY2bAozSEVUQzBiTWF3cW5PeS92QkM0N1ZMUkQ2WjUzRE50ZDljQTVrS1hTcGdCUTNpdUZNcG9GZmc9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

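clusters.cluster.certificate-authority-data: the cluster's CA certificate

clusters.cluster.server: the cluster address

users.user.client-certificate-data: the user's certificate

users.user.client-key-data: the user's private key

context: context information that links a user to a cluster

(see the chapter on switching between multiple clusters)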
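
The three *-data fields are base64-encoded PEM text. A minimal sketch of decoding one, assuming only the first 36 characters of the certificate-authority-data value shown above are used so the example stays short; the variable names are illustrative.

```shell
#!/bin/sh
# First 36 characters of the certificate-authority-data value shown above.
data_prefix='LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0t'

# Decoding the base64 text yields the start of a PEM block.
decoded="$(printf '%s' "$data_prefix" | base64 -d)"
printf '%s\n' "$decoded"
```

This prints -----BEGIN CERTIFICATE-----. Decoding a complete value the same way and piping it to openssl x509 -noout -text would show the subject and validity period of the certificate.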

Experiment:

Step 1: on the master, copy /etc/kubernetes/admin.conf to vms41

scp /etc/kubernetes/admin.conf 192.168.26.41:~

Step 2: on vms41, authenticate with kubeconfig, using admin.conf as the kubeconfig file to connect to k8s, and run get pods

# --kubeconfig: the kubeconfig file to use

kubectl --kubeconfig=./admin.conf get pods

# Output:

NAME READY STATUS RESTARTS AGE

default-testpod 1/1 Running 4 (133m ago) 2d5h

pod1 1/1 Running 7 (133m ago) 13d

pod2 1/1 Running 6 (133m ago) 9d

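Step 3: passing --kubeconfig on every connection is tedious; either of the following two approaches avoids it:

Option 1: set an environment variable so that a given file is used as the kubeconfig file by default

On vms41, set the environment variable KUBECONFIG=./admin.conf (a relative path is resolved against the current directory, so an absolute path is more robust)

export KUBECONFIG=./admin.conf

After that, kubectl [command] can be run directly

Option 2: place the file at ".kube/config" in the home directory; kubectl reads that file as the kubeconfig by default

# Unset the environment variable

unset KUBECONFIG

# Create the .kube directory

mkdir .kube

# Copy admin.conf to .kube/config

cp admin.conf .kube/config

kubectl commands now work

If the cluster is later re-initialized (kubeadm reset followed by a new init), /etc/kubernetes/admin.conf is regenerated, so the copy in .kube/config goes stale and has to be copied again

If the variable is set and ".kube/config" also exists, the variable takes precedence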
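
The lookup order described above (the --kubeconfig flag, then the KUBECONFIG variable, then .kube/config in the home directory) can be sketched as a small function. This is a simplified illustration, not kubectl's own code: resolve_kubeconfig is a hypothetical helper, and it ignores the fact that kubectl accepts a colon-separated list of files in KUBECONFIG and merges them.

```shell
#!/bin/sh
# $1: value passed to --kubeconfig, or an empty string if the flag is absent.
resolve_kubeconfig() {
  if [ -n "$1" ]; then
    # An explicit flag wins over everything else.
    printf '%s\n' "$1"
  elif [ -n "${KUBECONFIG:-}" ]; then
    # Otherwise the environment variable is used.
    printf '%s\n' "$KUBECONFIG"
  else
    # Fall back to the default location.
    printf '%s\n' "$HOME/.kube/config"
  fi
}

KUBECONFIG=/root/admin.conf
resolve_kubeconfig ""            # variable is set, no flag
resolve_kubeconfig ./kc1         # flag overrides the variable
```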

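In real work, however, we do not hand the master's /etc/kubernetes/admin.conf to other clients, because it is the administrator's kubeconfig file and carries very broad permissions, which is not safe

So we need to create a dedicated kubeconfig file for the client

Experiment: (not covered by the exam)

The client needs the CA certificate, a private key, and a user certificate signed by the CA

The CA certificate already exists in the k8s cluster, namely ca.crt under /etc/kubernetes/pki

Step 1: on the master, list the certificates under /etc/kubernetes/pki

ls /etc/kubernetes/pki

# Output: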

apiserver.crt apiserver.key bb.csv etcd front-proxy-client.crt sa.pub

apiserver-etcd-client.crt apiserver-kubelet-client.crt ca.crt front-proxy-ca.crt front-proxy-client.key

apiserver-etcd-client.key apiserver-kubelet-client.key ca.key front-proxy-ca.key sa.key

ca.crt: the CA certificate

ca.key: the CA private key

The kubelet certificates are under /var/lib/kubelet/pki

ls /var/lib/kubelet/pki

# Output:

kubelet-client-2022-07-06-17-47-50.pem kubelet-client-current.pem kubelet.crt kubelet.key

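Step 2: copy the CA certificate to the home directory for later use

cp /etc/kubernetes/pki/ca.crt .

Step 3: generate a private key on the master

openssl genrsa -out [filename].key 2048

# Here we generate a private key named john.key

openssl genrsa -out john.key 2048

Step 4: on the master, use the private key to request a certificate from the CA; first generate a CSR file xxx.csr (the CN in -subj becomes the k8s user name and O becomes the group)

openssl req -new -key john.key -out [filename].csr -subj "/CN=john/O=cka2020"

# Here we generate a CSR file named john.csr

openssl req -new -key john.key -out john.csr -subj "/CN=john/O=cka2020"

Step 5: base64-encode the CSR file

cat john.csr | base64 | tr -d "\n"

# Output: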

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1pqQ0NBVTRDQVFBd0lURU5NQXNHQTFVRUF3d0VhbTlvYmpFUU1BNEdBMVVFQ2d3SFkydGhNakF5TURDQwpBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQUxPV2VMZ2V5L2pkZ25pZWd2dGxQcGpUCmtvbXB6b2ZSN0xFRkw1cGRBQmRHd0N3QkVIWVVCNjVabnM4TGMxWUNnSW1PdjlFUm9DUmU2RHIzQzRRKzVQSXIKVnpaYXZuYW1CdzF5MnRxdm5tTmhxVkRJWUZ4Qzd6VkU3UlVFdTcxaFpwNnFkNWJNbzk3NUFCNXNiSHhJamwwdgp6cFA3eUIybEQvWm1LdmxWdjlLMU90UzhGTDlNTnpoRFVTc2dWWVdrV01LUnpqZ1UwT2RwSFVpYkpUOTRvVW1GCmRKZEh5ZXNyQkdwYTVvN09yZUh6OEliLzJaRGhsMU1xL21GblZobU9SWXpSMlhXQXo0ejU1a3VEakRwbHJSWXUKUGJpZ2FzTmFpVmRKNEpMT0F2MTBaS21FL0tlM1daZHozM2s3V3VZbTRxWWRubm42cWQ3M2FoTXp4STdPWUZrQwpBd0VBQWFBQU1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQUR1cVNYa0pEenVkOUcxVnZLVGZUeVRLYmFGNXRqCkh1dktIQTNwL29nTzdodSs3MU0vNDkrQ0RVQ2NlRlVudVlWUmUrM00yQWdYT1UrVERkZUgwQzY5dzV6TXc2RjUKYmpLbWZncW5PamtTSWVic1o0elBQSWg4WGUydUtGd0NFMnFPN1dRRy9aZGZ4RnJoN1NZVmRHcytRdnRObkZFRAp2VkVWc1hyVG1UR3l4MGMyN0NlNjFMTEI5c0l3a24zUUJ2Y2gwajloRXBWL3hIRnR2VUhHSnM0L3VYQ1RMV0R4CjV5azBQTW9EWjlJQXpRdWlBaStlOENFeTVSMjhZNTIwR0xMUCt3aExrTFA2VzNDOVVyQjh2OVhDeVgxdXRnanYKbjlwWTJlb1BRQ0k5b3dBVTR5czV3TkdLVTVKVE5xdnVCQ3BvTGNRWTM1STRFc0xkNzk3V1FKUzUKLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==

Step 6: write the YAML file for the certificate signing request

vim csr.yaml

# Insert:

apiVersion: certificates.k8s.io/v1

kind: CertificateSigningRequest

metadata:

name: john

spec:

groups:

- system:authenticated

signerName: kubernetes.io/kube-apiserver-client

request: [...contents of the CSR file, i.e. the output of step 5...]

usages:

- client auth
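
Pasting the base64 text by hand is error-prone, so the manifest can also be generated. The following is a minimal sketch, assuming a hypothetical helper render_csr_manifest that takes the request name and the path of the CSR file; the indentation follows the usual layout of a CertificateSigningRequest. The demo feeds it a dummy file, not a real CSR.

```shell
#!/bin/sh
# $1: name of the CertificateSigningRequest object
# $2: path to the CSR file produced by `openssl req -new ...`
render_csr_manifest() {
  # Single-line base64 of the file, as in step 5.
  request="$(base64 < "$2" | tr -d '\n')"
  cat <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: $1
spec:
  groups:
  - system:authenticated
  signerName: kubernetes.io/kube-apiserver-client
  request: $request
  usages:
  - client auth
EOF
}

# Demo with a placeholder file; a real run would pass john.csr instead.
demo_csr="$(mktemp)"
printf 'dummy\n' > "$demo_csr"
manifest="$(render_csr_manifest john "$demo_csr")"
printf '%s\n' "$manifest"
```

With a real CSR, the output can be redirected to csr.yaml and applied as in the next step.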

Step 7: create the certificate signing request

kubectl apply -f csr.yaml

View it

kubectl get csr

# Output:

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

john 46s kubernetes.io/kube-apiserver-client kubernetes-admin <none> Pending

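Step 8: approve the request so that the certificate is issued

kubectl certificate approve john

Step 9: after approval, check the csr again; CONDITION has changed to Approved,Issued

Viewed as YAML, status.certificate now holds the certificate issued by the CA

kubectl get csr

# Output: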

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

john 2m3s kubernetes.io/kube-apiserver-client kubernetes-admin <none> Approved,Issued

kubectl get csr john -o yaml

# Output:

...

status:

certificate: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCekNDQWUrZ0F3SUJBZ0lSQU5seCtMR2dpQk5GeWZOQURJMm9JU0F3RFFZSktvWklodmNOQVFFTEJRQXcKRlRFVE1CRUdBMVVFQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TWpBNE1EWXdOekkzTkRoYUZ3MHlNekE0TURZdwpOekkzTkRoYU1DRXhFREFPQmdOVkJBb1RCMk5yWVRJd01qQXhEVEFMQmdOVkJBTVRCR3B2YUc0d2dnRWlNQTBHCkNTcUdTSWIzRFFFQkFRVUFBNElCRHdBd2dnRUtBb0lCQVFDemxuaTRIc3Y0M1lKNG5vTDdaVDZZMDVLSnFjNkgKMGV5eEJTK2FYUUFYUnNBc0FSQjJGQWV1V1o3UEMzTldBb0NKanIvUkVhQWtYdWc2OXd1RVB1VHlLMWMyV3I1MgpwZ2NOY3RyYXI1NWpZYWxReUdCY1F1ODFSTzBWQkx1OVlXYWVxbmVXektQZStRQWViR3g4U0k1ZEw4NlQrOGdkCnBRLzJaaXI1VmIvU3RUclV2QlMvVERjNFExRXJJRldGcEZqQ2tjNDRGTkRuYVIxSW15VS9lS0ZKaFhTWFI4bnIKS3dScVd1YU96cTNoOC9DRy85bVE0WmRUS3Y1aFoxWVpqa1dNMGRsMWdNK00rZVpMZzR3NlphMFdMajI0b0dyRApXb2xYU2VDU3pnTDlkR1NwaFB5bnQxbVhjOTk1TzFybUp1S21IWjU1K3FuZTkyb1RNOFNPem1CWkFnTUJBQUdqClJqQkVNQk1HQTFVZEpRUU1NQW9HQ0NzR0FRVUZCd01DTUF3R0ExVWRFd0VCL3dRQ01BQXdId1lEVlIwakJCZ3cKRm9BVXRHS0t3Yy9aNE1UWHd1a1Y1QWFwZnBmakVpb3dEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBSVE0QnkzYwpFNWNtRjNVaEFIM3lVdmZEd2Ryb1lxcmFIdHlsNEtRTVhQazhqbXNZWkhaWU9RdG4wVkFhaUF2R1d5Q1Y5YVVRCmd3aUM2Q1Rra285Y0tlOUttUzlyRDMwenhJS2U4MUNnWGVTcXVYcWh4WmduUUxTM2NJejFvT1lIZXFGUFA5WS8KM2hPZUI5a3BsN0xPZzMyU1RFbXRFQXZpbTRRVFBPc2xJaXBKR29ROUc0VUpEYTJIT1VwQXcwNmJkL3I3NzVHOAorc2d1RzdiYjJJWHZuMkI5V1BiWDhVRjZ3RmtqbGNBaFdidU9NTGZQcVllSjQwSzdvMEZiS2FnK05pelVBREZWCk13VHBUeE9PK1ZNeU5aOERUQzA4UjhoZWNEcmE3SThMQy9kV0x4MkFvYzR4aUlJellPSTk1WEtCaXU5dXJwb1QKalRkWFFsdUhRUElZZk5NPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

conditions:

- lastTransitionTime: "2022-08-06T07:32:48Z"

...

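Step 10: export the certificate as the user certificate

Base64-decode the certificate and save it as the user certificate file "john.crt"

kubectl get csr john -o jsonpath='{.status.certificate}' | base64 -d > john.crt

We now have the CA certificate ca.crt, the user certificate john.crt, and the user private key john.key

Step 11: create a kubeconfig file from the command line

Set the cluster information:

The file is named kc1, the cluster cluster1, the cluster address https://192.168.26.21:6443, and the cluster certificate ca.crt

--embed-certs=true embeds the contents of ca.crt into the kubeconfig file

kubectl config --kubeconfig=kc1 set-cluster cluster1 --server=https://192.168.26.21:6443 --certificate-authority=ca.crt --embed-certs=true

Set the user information:

Embed the user certificate and user private key into the kubeconfig file kc1

kubectl config --kubeconfig=kc1 set-credentials john --client-certificate=john.crt --client-key=john.key --embed-certs=true

Set the context information:

Link the cluster information to the user information

kubectl config --kubeconfig=kc1 set-context context1 --cluster=cluster1 --namespace=default --user=john

Edit the kubeconfig file kc1 and set the default context current-context to "context1" (running kubectl config --kubeconfig=kc1 use-context context1 has the same effect)

...

current-context: "context1"

...

The final kubeconfig file kc1 looks like this: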

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeU1EY3dOakE1TkRjME4xb1hEVE15TURjd016QTVORGMwTjFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTW1DCjNOZFdJcXpVV1B6Yk1hYS9jZTR3NW14ZjBRUUdHTkhqb1lhOHR6S0c3VDNObVBMTDRpTHlhQ05ESUUxeTBLRlEKK1RtZGFCSll4M1U0ZE5ZTHNTZEVWcUI1ajNSem5xNUo2L1BPbTBjVW5yY0VxUFFYN0w5MXNNZU90QlZOdWYyRApRc01ZV3lpK3NXUVMxYVhqTHZyaUdWYXRGd0dDSmhTbHlLTENvODdNOHdHbjZzeWlyaXhNdG1sZURLdlJMYkZRCnRhMzRWSVRlQVg3R3VsckJKQmZEMmRxZXY5K0EzZytWMU5KeXo5TXJ0Tkh5THM5ZWNUcnZpbE1JTW55MmUyMDcKZHRGc2V6MmhyenZhRkpIdzVzcDE3enozUHZXcS83ang1YU9lWEdtaU1NemxQSWhJV1JlbU11UWhmVjRsekVrRApzYUtrV05NNEpvSDlsdDZpb21NQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZMUmlpc0hQMmVERTE4THBGZVFHcVg2WDR4SXFNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBSTBMWXU0Q3ArL1ZZME9oaEU5WgpZT09Ob2JmTTg5SG9wVnFCVkZtd1Q1cGliS1pXYmZidGQ5bjg5T3p0WlJrSlBLOGNpOCtwRmpleDJFVGNzRWVTCkxveisyRFN3T1VrSnJ2OHl1VjJPZDI2Q3hIMDFEZ1NjcTJMSlRhNG1EVi93b0ZFYlNGTVVyaUVtRXZRdHZsengKMTlMTWJTOUdVdWtCaURhVWp2U0xqOVViS3lWZ3JnUVdWUlJaY0hHamY5c3dYRmU4NVdUQVJiR1Q2R09ZVnZyQQoxa3JSQlhZSGhKSGxKeEptVXJTSDc5c2pHeGdCQy9qR1ppZURPa2RNVWhDbTFFQ3BTcnBFakhKTml2KzY3Nnh1CkdUbmR5ejk2TFZUM1A3U0phV0tEcThvNTFNcmRNTExiZGVWSE04TkVrWTR1b3UxTkUrVEJtU2lCQlQvL2ZHRnUKdXUwPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.26.21:6443

name: cluster1

contexts:

- context:

cluster: cluster1

namespace: default

user: john

name: context1

current-context: "context1"

kind: Config

preferences: {}

users:

- name: john

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCekNDQWUrZ0F3SUJBZ0lSQU5seCtMR2dpQk5GeWZOQURJMm9JU0F3RFFZSktvWklodmNOQVFFTEJRQXcKRlRFVE1CRUdBMVVFQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TWpBNE1EWXdOekkzTkRoYUZ3MHlNekE0TURZdwpOekkzTkRoYU1DRXhFREFPQmdOVkJBb1RCMk5yWVRJd01qQXhEVEFMQmdOVkJBTVRCR3B2YUc0d2dnRWlNQTBHCkNTcUdTSWIzRFFFQkFRVUFBNElCRHdBd2dnRUtBb0lCQVFDemxuaTRIc3Y0M1lKNG5vTDdaVDZZMDVLSnFjNkgKMGV5eEJTK2FYUUFYUnNBc0FSQjJGQWV1V1o3UEMzTldBb0NKanIvUkVhQWtYdWc2OXd1RVB1VHlLMWMyV3I1MgpwZ2NOY3RyYXI1NWpZYWxReUdCY1F1ODFSTzBWQkx1OVlXYWVxbmVXektQZStRQWViR3g4U0k1ZEw4NlQrOGdkCnBRLzJaaXI1VmIvU3RUclV2QlMvVERjNFExRXJJRldGcEZqQ2tjNDRGTkRuYVIxSW15VS9lS0ZKaFhTWFI4bnIKS3dScVd1YU96cTNoOC9DRy85bVE0WmRUS3Y1aFoxWVpqa1dNMGRsMWdNK00rZVpMZzR3NlphMFdMajI0b0dyRApXb2xYU2VDU3pnTDlkR1NwaFB5bnQxbVhjOTk1TzFybUp1S21IWjU1K3FuZTkyb1RNOFNPem1CWkFnTUJBQUdqClJqQkVNQk1HQTFVZEpRUU1NQW9HQ0NzR0FRVUZCd01DTUF3R0ExVWRFd0VCL3dRQ01BQXdId1lEVlIwakJCZ3cKRm9BVXRHS0t3Yy9aNE1UWHd1a1Y1QWFwZnBmakVpb3dEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBSVE0QnkzYwpFNWNtRjNVaEFIM3lVdmZEd2Ryb1lxcmFIdHlsNEtRTVhQazhqbXNZWkhaWU9RdG4wVkFhaUF2R1d5Q1Y5YVVRCmd3aUM2Q1Rra285Y0tlOUttUzlyRDMwenhJS2U4MUNnWGVTcXVYcWh4WmduUUxTM2NJejFvT1lIZXFGUFA5WS8KM2hPZUI5a3BsN0xPZzMyU1RFbXRFQXZpbTRRVFBPc2xJaXBKR29ROUc0VUpEYTJIT1VwQXcwNmJkL3I3NzVHOAorc2d1RzdiYjJJWHZuMkI5V1BiWDhVRjZ3RmtqbGNBaFdidU9NTGZQcVllSjQwSzdvMEZiS2FnK05pelVBREZWCk13VHBUeE9PK1ZNeU5aOERUQzA4UjhoZWNEcmE3SThMQy9kV0x4MkFvYzR4aUlJellPSTk1WEtCaXU5dXJwb1QKalRkWFFsdUhRUElZZk5NPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBczVaNHVCN0wrTjJDZUo2QysyVSttTk9TaWFuT2g5SHNzUVV2bWwwQUYwYkFMQUVRCmRoUUhybG1lend0elZnS0FpWTYvMFJHZ0pGN29PdmNMaEQ3azhpdFhObHErZHFZSERYTGEycStlWTJHcFVNaGcKWEVMdk5VVHRGUVM3dldGbW5xcDNsc3lqM3ZrQUhteHNmRWlPWFMvT2svdklIYVVQOW1ZcStWVy8wclU2MUx3VQp2MHczT0VOUkt5QlZoYVJZd3BIT09CVFE1MmtkU0pzbFAzaWhTWVYwbDBmSjZ5c0VhbHJtanM2dDRmUHdodi9aCmtPR1hVeXIrWVdkV0dZNUZqTkhaZFlEUGpQbm1TNE9NT21XdEZpNDl1S0JxdzFxSlYwbmdrczRDL1hSa3FZVDgKcDdkWmwzUGZlVHRhNWliaXBoMmVlZnFwM3ZkcUV6UEVqczVnV1FJREFRQUJBb0lCQUJjVmVGN3lWbldldzI2TgpzWGh2QXlUUnpUdzB2MzlsMUc3TW85bkxxbjlUY05BM01zNTZ0S0lNa3dVNkM1RTZzUnI5WmxxUlVXeGJacTV0Cm91VlUwRWp3bVQwN1hOQVRkMFNiUnRabFRJNnB0d0hUUDgrY0t2TkY4Tk9LMERiL2xoay9BREE2RGJaVGUxaHQKeDJRU1QzcGE4c3JDeW01RkVWQXl3Y05hNmxWRER6U0l2TExxR3lOclBZa1pKVUhnTnVkN0RaOUd2TmdCZk8zQwpBY3BZd3h3MVN5bWpBZ2RGcVNHSWU3Q0hkYUpYM0JodHBsREFwUlNBRzNHdnNVakYyRy94NXJVQlE2WVF5OEY4Clp1alo1QTJDU1N1ZDhEbjY0VkZqVXBGN3dLQ0dMRjE2dkR5SlNEOUp3cFZtZmY5bnNxV3loWDc0WncyT1llZGUKVmlacUxxRUNnWUVBMlFOb1pWS2xaSUZ2MDE3bXEyV0p0cFBMdGhXemxzUkRsUFovQWNvbDl0MEVTZWFOR01VZgphSHhZNzdoWjFac1pPbHhWdDdkZTlEaWZkVmEzZllzb0JpaHdyMFIwdkdrNUExdTUyK0xHSGpJWE5ONit4Uy9NClh3Zi9XSy9mY1RESmt0RFRhY1NPaHl2b055QzBjTkFqZ2Z3MUR5SFRrQ0FOdElVT05xc2xPUk1DZ1lFQTA5blcKY1kvd2k4ekJlTTI1V1pBRGc0ODdIdDduaTJBNEdqWnRXa1ViekkxUUU2Qi9CSGU5ek5JQUdvdDdPTDBWbDhTLwpGQXBvY2xNSWY0RjVpb0NSZkR6c2dyZjZFSHhhOTBuNml2eHVUTWd1cUNlMGpjRHNaTVZOMEc2SDZURGQ5K2VOCkRlejFuMWxBUEM5b2Eva25EWmo1OTVobUlKMXc1Y0ttOTMvNU9tTUNnWUVBb2VCdTRSWFRGYk9Qck1YZm52NXcKeVdaWjBJdERtVFR4akk2S2t0VXRtSVdwQzA4VTlPTWwySlRZNm9oRFNwa1ZLbmx4MzBiRFo3MU5CUVFZZjJkcwpCWnZvNG5SWDk2c3R3aG1ML0QrZXRTdDhNQTN2azd0aDRZbGZxZElYQktIMTJyUTIzM0NsT0tOQjVzMVlpOFpXCmxrR0JlcllxMEJsNDM2MVl1dUxUTTAwQ2dZQjQwY043QUp3dkhwYzRUaHRtK0xzSVRLZHE4N1VaeDhZM0xOTXcKcURreWIyVTd6RXlrUDZYL0tjVGxYcWJudE82Ym41bFoxSlc4blo0N0dadzNZUnVYdnljalpjazNuYlJEVSsyUApWbWVSWXBrNVRXdXJiRnRsSFNGRHZjWEZPQmxmU0s4cFFmclM1aE84UDAxT1Jzbis5eitYOVZKSlI0RWJoK2V4ClAwcmtBUUtCZ1FDdUZIQXdGSVpLb3dVNSt
RNG5zTy81ck5jK2xETzdhOHQ0bm0wemEvYTJPUzI5aW8rTGt6Z2IKK3RNYi9oRm8rbER4SW9zdlZzTUJFbFNSK3BleDVrRWhKbWlKaTVhbTBZems0Rk9yblVkUkFlcERPUGVid1VsQQp6eU0zQS9YOVFRc21wZStURlRtZHpibnN5SHNOUFRXOU1SeHh6eHJvL3h0YzZtakJsMStmbnc9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

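Note: certificates issued by the CA are valid for only 1 year; after that they must be renewed

Step 12: copy the kubeconfig file kc1 to the home directory on vms41 (client)

scp kc1 192.168.26.41:~

Step 13: on vms41, copy kc1 to .kube/config so that it becomes the default kubeconfig file

cp kc1 .kube/config

Check the context information to confirm that the settings have taken effect

kubectl config get-contexts

# Output: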

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

context1 cluster1 john default

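Step 14: finally, grant permissions to the user john on the master

kubectl create clusterrolebinding cbind1 --clusterrole cluster-admin --user=john

Test:

# Test on vms41

kubectl get pods

# On the master, remove john's permissions

kubectl delete clusterrolebinding cbind1

oauth2 authentication

Integrates third-party authentication, such as HTTP-based authentication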

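2. Authorization

Section 1 covered how a client user authenticates to log in to k8s. Once logged in, the user still needs the appropriate permissions to perform an operation

On the master, the authorization-mode option under spec.containers.command in /etc/kubernetes/manifests/kube-apiserver.yaml configures the authorization mode

Authorization modes:

- --authorization-mode=Node,RBAC (the default)

- --authorization-mode=AlwaysAllow (allow all requests; with this setting a client user is always allowed, whether or not it has been granted anything): not recommended

- --authorization-mode=AlwaysDeny (deny all requests; with this setting a client user is rejected even if it has been granted permissions): not recommended

- --authorization-mode=ABAC (Attribute-Based Access Control; not flexible enough, so not used here)

The recommended mode is RBAC (Role-Based Access Control)

- --authorization-mode=RBAC

This is the mode in which we grant permissions to users

- --authorization-mode=Node

The Node authorizer is mainly used when the kubelet on each node accesses the apiserver; everything else is generally authorized by the RBAC authorizer

So the system default is - --authorization-mode=Node,RBAC, which is also the recommended setting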
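
To check which modes a manifest actually configures, the flag can be extracted with sed. A minimal sketch, assuming a hypothetical helper get_authz_modes; the demo runs against a scratch file that mimics the relevant lines, not against the real /etc/kubernetes/manifests/kube-apiserver.yaml.

```shell
#!/bin/sh
# Print each configured authorization mode on its own line.
# $1: path to a kube-apiserver manifest (any text file containing the flag works).
get_authz_modes() {
  sed -n 's/^[[:space:]]*-[[:space:]]*--authorization-mode=\(.*\)$/\1/p' "$1" | tr ',' '\n'
}

# Demo on a scratch file that mimics the relevant part of the manifest.
demo_manifest="$(mktemp)"
cat > "$demo_manifest" <<'EOF'
spec:
  containers:
  - command:
    - kube-apiserver
    - --authorization-mode=Node,RBAC
EOF

modes="$(get_authz_modes "$demo_manifest")"
printf '%s\n' "$modes"
```

On the master, the same function could be pointed at the real manifest path.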

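3. Permissions, roles, and users in k8s

User: a user does not belong to any namespace; it is a standalone identity

Permission: k8s has various permissions (verbs), such as create, update, patch, get, watch, list, delete

Role: a role is namespaced. We do not grant permissions to a user directly; instead we create a role, grant the permissions to the role, and then bind the role to the user. That binding step is called rolebinding

Note: roles are namespaced. If role1 lives in namespace ns1 and is bound to the user john, john has role1's permissions only inside ns1

We can also create a clusterrole. A clusterrole is global and not restricted to a namespace, but if it is bound to a user through a rolebinding, the user still has the permissions only in the namespace where that rolebinding lives

To give a user global permissions (in every namespace), create a clusterrolebinding and use it to bind the clusterrole to the user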
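
The combinations described above can be summarized as a lookup. This is only an illustrative sketch: binding_scope is a hypothetical helper that restates the rules in shell, and the last branch reflects that a clusterrolebinding can only reference a clusterrole.

```shell
#!/bin/sh
# $1: kind of the role (Role or ClusterRole)
# $2: kind of the binding (RoleBinding or ClusterRoleBinding)
binding_scope() {
  case "$1/$2" in
    Role/RoleBinding)               echo "namespace of the rolebinding" ;;
    ClusterRole/RoleBinding)        echo "namespace of the rolebinding" ;;
    ClusterRole/ClusterRoleBinding) echo "all namespaces" ;;
    *)                              echo "invalid combination" ;;
  esac
}

binding_scope ClusterRole RoleBinding
binding_scope ClusterRole ClusterRoleBinding
```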

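View roles

# List the roles in the current namespace

kubectl get role

# List the roles in a given namespace

kubectl get role -n [namespace]

View cluster roles

kubectl get clusterrole

View the permissions of a role

# The Verbs column lists the permissions

kubectl describe role [role name]

View the permissions of the cluster role "admin"

kubectl describe clusterrole admin

Create a role

Option 1: from the command line

# Resource types may be abbreviated on the command line (e.g. po for pod), but not in a YAML file

kubectl create role [role name] --verb=[verbs granted, e.g. get,create...] --resource=[resources they apply to, e.g. pod,svc...]

Option 2: from a YAML file

Generate a YAML template from the command line

kubectl create role [role name] --verb=[verbs granted, e.g. get,create...] --resource=[resources they apply to, e.g. pod,svc...] --dry-run=client -o yaml > [filename].yaml

Example:

kubectl create role role1 --verb=get,list --resource=po --dry-run=client -o yaml > role1.yaml

The generated YAML template looks like this: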

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role1
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
  - list
```

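rules.apiGroups: the API group of the resource type, i.e. the part of its apiVersion before the slash (see the experiment below)

rules.resources: the resource types within those API groups (note: resource types in the YAML file must be plural)

rules.verbs: the permissions granted; - "*" means all permissions

rules holds the permission rules, and each list entry that starts with apiGroups is one rule. Resources listed in the same rule share its verbs; to give different resources different permissions, configure several rules, as follows:

get and list on service, all permissions on pod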

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: null

name: role1

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- "*"

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

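Creating a cluster role works the same way as creating a role

# From the command line

kubectl create clusterrole [name] --verb=[verbs] --resource=[resources]

# As a YAML file

kubectl create clusterrole [name] --verb=[verbs] --resource=[resources] --dry-run=client -o yaml > [filename].yaml

A role can also be turned into a cluster role directly

Edit the YAML file and change kind to "ClusterRole"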

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

...

View rolebindings

kubectl get rolebinding

kubectl get rolebinding -n [namespace]

View clusterrolebindings

kubectl get clusterrolebinding

Create a rolebinding or clusterrolebinding

kubectl create rolebinding [name] --role=[role to bind] --user=[user to bind]

kubectl create rolebinding [name] --clusterrole=[cluster role to bind] --user=[user to bind]

kubectl create clusterrolebinding [name] --clusterrole=[cluster role to bind] --user=[user to bind]

List the rolebindings a user has across all namespaces

kubectl get rolebinding -A -o wide | grep [user]

Delete a role

kubectl delete role [name]

Delete a rolebinding or clusterrolebinding

kubectl delete rolebinding [name]

kubectl delete clusterrolebinding [name]

Experiment: create a role

On the master, create a role and bind it to the user john. The role is created from a YAML file; first generate the YAML template from the command line

(1) The role is named role1 and has get and list on pod; write it to role1.yaml

kubectl create role role1 --verb=get,list --resource=po --dry-run=client -o yaml > role1.yaml

(2) The generated YAML template looks like this:

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: null

name: role1

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- list

(3) Create the role

kubectl apply -f role1.yaml

(4) View the role and its permissions

kubectl get role

# Output:

NAME CREATED AT

role1 2022-08-07T08:23:22Z

kubectl describe role role1

# Output:

Name: role1

Labels: <none>

Annotations: <none>

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

pods [] [] [get list]

(5) Create a rolebinding named rbind1 that binds role1 to john

kubectl create rolebinding rbind1 --role=role1 --user=john

(6) View the rolebinding

kubectl get rolebinding

# Output:

NAME ROLE AGE

rbind1 Role/role1 8s

List the rolebindings and roles john has across all namespaces

kubectl get rolebinding -A -o wide | grep john

# Output:

default rbind1 Role/role1 3m54s john

(7) Add the create and delete permissions to role1

Edit the YAML file and add create and delete under rules.verbs

...

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- list

- create

- delete

...

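Re-apply for the change to take effect

kubectl apply -f role1.yaml

(8) john now has get, list, create and delete on pod only; we also want it to have permissions on service

Edit the YAML and add services under rules.resources (the short name svc cannot be used in a YAML file)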

...

rules:

- apiGroups:

- ""

resources:

- pods

- services

verbs:

- get

- list

- create

- delete

...

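Re-apply for the change to take effect

kubectl apply -f role1.yaml

(9) Check role1's permissions: it now has get, list, create and delete on pod and svc

(10) To give it all permissions on pod and svc, edit the YAML file as follows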

...

rules:

- apiGroups:

- ""

resources:

- pods

- services

verbs:

- "*"

#- get

#- list

#- create

#- delete

...

Re-apply for the change to take effect

kubectl apply -f role1.yaml

(11) Change the rules so that svc has only get and list, while pod has all permissions

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: null

name: role1

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- "*"

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

Re-apply for the change to take effect

kubectl apply -f role1.yaml

(12) Now we want to add get and list on deployment

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: null

name: role1

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- "*"

- apiGroups:

- ""

resources:

- services

- deployments

verbs:

- get

- list

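Re-apply for the change to take effect

kubectl apply -f role1.yaml

Testing on vms41 then shows that the permission is still missing

This is because different resource types have different apiVersions

kubectl api-resources shows that pods and services have apiVersion v1, while deployments has apiVersion apps/v1

Overall, the apiVersion of a resource type takes one of two forms

A single-part form xx, e.g. v1

A two-part form yy/xx, e.g. apps/v1

For the single-part form the API group is empty (""); for the two-part form the API group is yy, e.g. apps

In a permission rule, apiGroups lists the API groups of the resource types. It limits the rule's scope: the rule applies only to resource types whose API group is listed

For example: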

...

rules:

- apiGroups:

- ""

...

means the rule applies only to resource types whose API group is empty (i.e. whose apiVersion has a single part)
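
The mapping from apiVersion to the value expected under apiGroups can be written as a one-line rule. A minimal sketch, assuming a hypothetical helper api_group_of that takes an apiVersion string as printed by kubectl api-resources:

```shell
#!/bin/sh
# Print the API group for an apiVersion string.
# "apps/v1" -> "apps"; "v1" -> "" (the core group, written as "" in a rule).
api_group_of() {
  case "$1" in
    */*) printf '%s\n' "${1%%/*}" ;;   # keep the part before the slash
    *)   printf '%s\n' "" ;;           # single-part version: empty group
  esac
}

echo "deployments: '$(api_group_of apps/v1)'"
echo "pods: '$(api_group_of v1)'"
```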

So for the rule to cover deployment as well, add - "apps" under apiGroups

Edit the YAML as follows,

also adding the create permission on deployment

...

- apiGroups:

- ""

- "apps"

resources:

- services

- deployments

verbs:

- get

- list

- create

...

Re-apply for the change to take effect

kubectl apply -f role1.yaml
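
A rule grants a request only when its API group, resource and verb all appear in the same rule. The following sketch models that matching for the two rules of role1 at this point. It is a simplified illustration, not the apiserver's implementation: rbac_allows is a hypothetical helper, "core" stands in for the empty group "", and only the "*" wildcard is handled.

```shell
#!/bin/sh
# One rule per line: apiGroups|resources|verbs, each a comma-separated list.
rules='core|pods|*
core,apps|services,deployments|get,list,create'

# $1: API group ("core" for ""), $2: resource, $3: verb
# Exit status 0 if some rule lists all three, 1 otherwise.
rbac_allows() {
  printf '%s\n' "$rules" | awk -F'|' -v g="$1" -v r="$2" -v v="$3" '
    function has(list, item,   n, parts, i) {
      n = split(list, parts, ",")
      for (i = 1; i <= n; i++)
        if (parts[i] == item || parts[i] == "*") return 1
      return 0
    }
    has($1, g) && has($2, r) && has($3, v) { found = 1 }
    END { exit !found }'
}

rbac_allows apps deployments create && echo "create deployments: allowed"
rbac_allows apps deployments patch  || echo "patch deployments: denied"
```

On a live cluster, kubectl auth can-i answers the same kind of question against the real rules.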

(13) On vms41, test creating a deploy and setting 2 replicas

The following error appears:

Error from server (Forbidden): deployments.apps "web1" is forbidden: User "john" cannot patch resource "deployments/scale" in API group "apps" in the namespace "default"

It means the user john lacks the patch permission on the resource deployments/scale in the apps API group

So add the patch permission on deployments/scale under the "apps" API group

Edit the YAML file as follows:

- apiGroups:

- ""

- "apps"

resources:

- services

- deployments

- deployments/scale

verbs:

- get

- list

- create

- patch

...

Re-apply for the change to take effect

kubectl apply -f role1.yaml

Experiment: create a clusterrole

(1) On the master, delete role1 and rbind1

kubectl delete role role1

kubectl delete rolebinding rbind1

(2) Edit role1.yaml: change kind to ClusterRole and the name to "crole"

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

creationTimestamp: null

name: crole

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- "*"

- apiGroups:

- ""

resources:

- services

- deployments

verbs:

- get

- list

(3) Create the clusterrole

kubectl apply -f role1.yaml

(4) Check that it was created

kubectl get clusterrole | grep crole

# Output:

crole 2022-08-08T05:57:18Z

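(5) Bind crole to the user john through a rolebinding

kubectl create rolebinding rbind1 --clusterrole=crole --user=john

Although a clusterrole is not restricted to a namespace, here it is granted through a rolebinding, so the user is still limited to the namespace of that rolebinding

Testing on vms41 shows that the permissions exist only in the current namespace (the namespace of rbind1)

(6) Delete rbind1 and bind crole to the user john through a clusterrolebinding

kubectl delete rolebinding rbind1

kubectl create clusterrolebinding cbind --clusterrole=crole --user=john

The user john now has the permissions of the cluster role crole in all namespaces

On vms41, test by listing the pods in all namespaces

kubectl get pods -A

(7) The experiment is done; delete the clusterrolebinding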

kubectl delete clusterrolebinding cbind

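4. Understanding serviceaccount

Overview

Every "user" mentioned so far is a "user account"; the system has another kind of account, the serviceaccount

k8s has two kinds of accounts:

1. user account: used to log in to the system (through token or kubeconfig authentication)

2. service account (sa): the identity a pod runs as

Every namespace has a default sa, which cannot be removed (if deleted, it is recreated automatically)

# List the sa in the current namespace

kubectl get sa

# Output:

NAME SECRETS AGE

default 0 32d

Every pod runs under the identity of some sa

If no sa is specified when the pod is created, it runs as the sa named default in its namespace

For example, we create the following pod: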

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

After it is created, view the pod in yaml form

kubectl get pod pod1 -o yaml

# Output:

...
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-m8z25
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: vms22.rhce.cc
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 0
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: kube-api-access-m8z25
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace
status:
...

You can see that the serviceAccount field is set to default

Projected volumes and sa information

When a pod uses an sa, a volume of type projected is generated for the pod automatically. In the yaml above, it is the volume named kube-api-access-m8z25 under spec.volumes

The main purpose of this projected volume is to mount the sa information into the container

Run df inside the pod to see where the projected volume is mounted in the container

kubectl exec -it pod1 -- df -hT

# Output:

Filesystem     Type     Size  Used Avail Use% Mounted on
overlay        overlay  150G  6.9G  144G   5% /
tmpfs          tmpfs     64M     0   64M   0% /dev
tmpfs          tmpfs    2.0G     0  2.0G   0% /sys/fs/cgroup
/dev/sda1      xfs      150G  6.9G  144G   5% /etc/hosts
shm            tmpfs     64M     0   64M   0% /dev/shm
tmpfs          tmpfs    3.8G   12K  3.8G   1% /run/secrets/kubernetes.io/serviceaccount
tmpfs          tmpfs    2.0G     0  2.0G   0% /proc/acpi
tmpfs          tmpfs    2.0G     0  2.0G   0% /proc/scsi
tmpfs          tmpfs    2.0G     0  2.0G   0% /sys/firmware

As shown, the projected volume is mounted at "/run/secrets/kubernetes.io/serviceaccount" in the container

A token is generated under /run/secrets/kubernetes.io/serviceaccount

# View the token

kubectl exec -it pod1 -- cat /run/secrets/kubernetes.io/serviceaccount/token

# Output:

eyJhbGciOiJSUzI1NiIsImtpZCI6IjNJUlNCbDgteVViUHdZeFJsTDMzRkgyZlNYMDFLVndrNXMzU2NxOWV2WUEifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjkxNDc1ODYxLCJpYXQiOjE2NTk5Mzk4NjEsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJkZWZhdWx0IiwicG9kIjp7Im5hbWUiOiJwb2QxIiwidWlkIjoiYmI4OTY5ZGItMzE5OC00ZGYyLTg0ZmQtODljODliZGQ3MTI1In0sInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJkZWZhdWx0IiwidWlkIjoiYThiNzU2YzItNGY2Ny00Nzg3LWE3OGEtNzNmZGE2ZmJjODRhIn0sIndhcm5hZnRlciI6MTY1OTk0MzQ2OH0sIm5iZiI6MTY1OTkzOTg2MSwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6ZGVmYXVsdCJ9.Tk8oiZpkTWm7qIPqnlOGQy6biue0wPuM6ZFOgr-ixppMfpgipqJh2YVylEZF7RB1DIlqm0aO2cZDy0GDD2F4yBkkOiGo2xpmI1liqDKu-ujsK_SqzZA3_OIKS9VVnTHMAciKg69AHqZVeOIu-n0iY_9pESFtGhn4hEKPyIXTh2QBEwrFdgqW4_mMSlOAJs19akkWoLTILsEBwGX20j-eSAek9zlaSI6iLDFqh5ltsxnAYHxdVRgsQJTdpAtUnXxfYrzALnfvaQeVVsOOZWCug80SN0pdZDDiFqD2XrFyGGcODT3pfedrTKWboRuVsWghcq0NNHJ8XWr3bzTIeXar5Q

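This token carries the identity of the sa

You can decode the token at https://jwt.io/

The sub claim is system:serviceaccount:default:default, that is, the sa named default in the default namespace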
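The payload can also be decoded locally instead of pasting the token into a website. The following is a minimal sketch, assuming GNU coreutils `base64`; the helper name `jwt_payload` is hypothetical. It only decodes the middle segment of the token and does not verify the signature.

```shell
# jwt_payload TOKEN
# Prints the decoded JSON payload (second dot-separated segment) of a JWT.
jwt_payload() {
  local token="$1" payload pad
  # Take the middle segment and convert base64url to standard base64.
  payload=$(printf '%s' "$token" | cut -d. -f2 | tr '_-' '/+')
  # JWTs drop the "=" padding; restore it so base64 -d accepts the input.
  pad=$(( (4 - ${#payload} % 4) % 4 ))
  while [ "$pad" -gt 0 ]; do
    payload="${payload}="
    pad=$((pad - 1))
  done
  printf '%s' "$payload" | base64 -d
}

# Example:
#   jwt_payload "$(kubectl exec pod1 -- cat /run/secrets/kubernetes.io/serviceaccount/token)"
```

The output is a single JSON object containing claims such as sub, iat, and exp.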

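The token itself only identifies the sa; it does not contain any permissions. On every request, the apiserver authenticates the token and then checks which roles have been bound to that sa

The process in the container reads the token under /run/secrets/kubernetes.io/serviceaccount and presents it to the apiserver, so it acts with the permissions of the sa

Therefore, the more permissions the sa has, the more the process in the container can do

Note: the projected volume requests a token lifetime of about 1 hour (expirationSeconds: 3607 in the yaml above); kubelet requests a new token and refreshes the file automatically before it expires

We can also create an sa manually and specify it when creating a pod

Up to and including k8s 1.20: creating an sa also creates a secret, the token in the pod is the token from that secret, and that token never expires

k8s 1.21 to 1.23: creating an sa still generates a secret, but the pod no longer uses the token from the secret

From k8s 1.24 on (including 1.24): creating an sa no longer generates a secret at all, but we can create one manually

Lab: specify an sa when creating a pod

(1) Delete pod1 from above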

kubectl delete pod pod1

(2) Create a service account named sa1

kubectl create sa sa1

(3) Check that it was created

kubectl get sa

# Output:

NAME      SECRETS   AGE
default   0         32d
sa1       0         3s

Note: before k8s 1.24 (not including 1.24), creating an sa also created a secret. From 1.24 on it does not, but we can create the secret manually

(4) Create a pod and specify the sa with the serviceAccountName field under spec (serviceAccount is a deprecated alias of the same field)

Specify sa1 as the sa of the pod

Create pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  terminationGracePeriodSeconds: 0
  serviceAccountName: sa1
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

Create the pod

kubectl apply -f pod1.yaml

(5) Verify that pod1 is using sa1

View the token under /run/secrets/kubernetes.io/serviceaccount in pod1

kubectl exec -it pod1 -- cat /run/secrets/kubernetes.io/serviceaccount/token

# Output:

eyJhbGciOiJSUzI1NiIsImtpZCI6IjNJUlNCbDgteVViUHdZeFJsTDMzRkgyZlNYMDFLVndrNXMzU2NxOWV2WUEifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjkxNDc4MzYyLCJpYXQiOjE2NTk5NDIzNjIsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJkZWZhdWx0IiwicG9kIjp7Im5hbWUiOiJwb2QxIiwidWlkIjoiNTFkOWExZDYtNjAxNC00YTYwLTg4MjgtMGYwNWM3ZGI3MzU5In0sInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJzYTEiLCJ1aWQiOiJjOTY4ZThkMy0zMDkwLTQ5ZTktYTczNC02ZDA2ZTBlNjViZjIifSwid2FybmFmdGVyIjoxNjU5OTQ1OTY5fSwibmJmIjoxNjU5OTQyMzYyLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpzYTEifQ.T0hmPRD5-AnYI-BE-HXURAE1mqV3NZEPtoaIwgXq8a6r1y4DJf6b80IjMRnENbew51qABkex8GnVLCALuNym9X_ziIK3LjgTg5Vq1Sk2jM30M3ziclFugI_ZzAdf3Qg1LSWkEIzo7rNOQ-dcnPYFOTHlrsJOXUioXgHj-0qEiSoHKJCc7wIn-yhNsY1mz8DaYvS9OZPJPxw0XXmY5Ov-azCpcta7LPhUONGCg8sYKThAr8fBbSKyGx8cg1WNlzm2GD1IRrHRJtHk0ik-BlKRFYPEK12z1RbT-7n874moLKaay7F51wNz7BWE0-AI2Y7EFtWajvUF9V1GlXSp-Q1Eyg

Copy the token into https://jwt.io/ to decode it

The value of the sub claim is: "sub": "system:serviceaccount:default:sa1"
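A quicker check that does not involve decoding the token is to read the field straight from the pod spec. This is a small sketch; the helper name `pod_sa` is hypothetical.

```shell
# pod_sa POD [NAMESPACE]
# Prints the service account name recorded in the pod spec.
pod_sa() {
  local pod="$1" ns="${2:-default}"
  kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.serviceAccountName}'
}

# Example:
#   pod_sa pod1
```

For the pod created in this lab, the command would be expected to print sa1.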

(6) You can create a secret for sa1. Generate the yaml as follows:

cat > sa1-secret.yaml <<EOF
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: sa1
  annotations:
    kubernetes.io/service-account.name: "sa1"
EOF

Create the secret

kubectl apply -f sa1-secret.yaml

List the secrets

kubectl get secrets

# Output:

NAME   TYPE                                  DATA   AGE
sa1    kubernetes.io/service-account-token   3      57s

View the secret of sa1

kubectl describe secrets sa1

# Output:

Name:         sa1
Namespace:    default
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: sa1
              kubernetes.io/service-account.uid: c968e8d3-3090-49e9-a734-6d06e0e65bf2

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1099 bytes
namespace:  7 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjNJUlNCbDgteVViUHdZeFJsTDMzRkgyZlNYMDFLVndrNXMzU2NxOWV2WUEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InNhMSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJzYTEiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJjOTY4ZThkMy0zMDkwLTQ5ZTktYTczNC02ZDA2ZTBlNjViZjIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpzYTEifQ.c1AfEofgEh6Uy6HK7L8hUlndX01rdgsUczbezOHACfqsbHa3_aefc3_ZNUJvhO2Pts6Vkycl_c3yMHqk0NiJ5Q2DQLUR-w3Q5G_USrhRimlSRzQ5uag_bchAEi0DX1HAsVSByvV_8tgfgUSFDHFNPXqvZpkhY4aNfahmYH3o9MKRHTj1P0Lcn2Q-LhS4wMs_MN55q0Wu7LGPIPrxytxC7K4uBO5ZuTh0QNP_bZSML6tGECvWwo_yQEQ1fqSvcgd-q7NzbQT7GnjhuhwZ1yID5E-G3qz6uzB-CnVydQS0qswat8EGvNPRVqFuJ9ibKaJhSZvmahT0n76FqDWxtTRnBw

However, the pod does not use this token (this applies to k8s 1.21 and later)
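`kubectl describe` prints the token already decoded, whereas the value stored in the secret's data field is base64-encoded. To fetch the token in a script, a sketch like the following could be used, assuming GNU coreutils `base64`; the helper name `secret_token` is hypothetical.

```shell
# secret_token SECRET [NAMESPACE]
# Prints the decoded token stored in a service-account-token secret.
secret_token() {
  local name="$1" ns="${2:-default}"
  kubectl get secret "$name" -n "$ns" -o jsonpath='{.data.token}' | base64 -d
}

# Example:
#   secret_token sa1
```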

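Granting permissions to an sa

To sum up, every pod we create and run is associated with an sa (if none is specified, the sa named default in the pod's namespace is used)

Where sa is used:

Suppose a company develops a program for its k8s environment. The program runs in a container and gives users a graphical interface, so that they can carry out k8s operations with a few clicks while the program translates those clicks into the corresponding commands underneath. The sa specified for this container must therefore have the corresponding permissions, otherwise the process in the container cannot do this

Syntax for granting a role or cluster role to an sa

kubectl create rolebinding [name] --role=[role name] --serviceaccount=[namespace]:[sa name]

kubectl create clusterrolebinding [name] --clusterrole=[cluster role name] --serviceaccount=[namespace]:[sa name]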
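For comparison, the same kind of binding can be written declaratively. The sketch below prints a RoleBinding manifest whose subject is a ServiceAccount; the helper name `sa_rolebinding` and the example binding name rbind-sa1 are hypothetical, and the referenced role is assumed to already exist in the target namespace.

```shell
# sa_rolebinding NAME ROLE NAMESPACE SA
# Prints a RoleBinding that grants ROLE to the service account SA,
# both located in NAMESPACE.
sa_rolebinding() {
  local name="$1" role="$2" ns="$3" sa="$4"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: $name
  namespace: $ns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: $role
subjects:
- kind: ServiceAccount
  name: $sa
  namespace: $ns
EOF
}

# Example:
#   sa_rolebinding rbind-sa1 role1 default sa1 | kubectl apply -f -
```

The example corresponds to running kubectl create rolebinding rbind-sa1 --role=role1 --serviceaccount=default:sa1 in the default namespace.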

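V. Installing k8s visualization tools: dashboard and kuboard

Installing dashboard

Dashboard is a web-based Kubernetes user interface. You can use Dashboard to deploy containerized applications to a Kubernetes cluster, troubleshoot those applications, and manage cluster resources. You can use Dashboard to get an overview of the applications running in the cluster, and to create or modify Kubernetes resources (such as Deployment, Job, DaemonSet, and so on). For example, you can scale a Deployment, start a rolling update, restart a Pod, or create a new application with a wizard

Installation:

(1) Search for dashboard in the official k8s documentation: https://kubernetes.io/zh-cn/docs/home/

Choose "Deploy and Access the Kubernetes Dashboard"

Download the required yaml file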

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.0/aio/deploy/recommended.yaml

(2) Check which images are required

grep image recommended.yaml

# Output:

image: kubernetesui/dashboard:v2.5.0

imagePullPolicy: Always

image: kubernetesui/metrics-scraper:v1.0.7

(3) Pull the images on all nodes

nerdctl pull kubernetesui/dashboard:v2.5.0

nerdctl pull kubernetesui/metrics-scraper:v1.0.7

(4) Install on the master

kubectl apply -f recommended.yaml

(5) After installation, list all namespaces. A kubernetes-dashboard namespace has been created automatically

kubectl get ns

# Output:

NAME                   STATUS   AGE
default                Active   5d2h
kube-node-lease        Active   5d2h
kube-public            Active   5d2h
kube-system            Active   5d2h
kubernetes-dashboard   Active   7s

(6) View the pods and svc in the kubernetes-dashboard namespace

kubectl get pods -n kubernetes-dashboard

# Output:

NAME                                         READY   STATUS              RESTARTS   AGE
dashboard-metrics-scraper-7bfdf779ff-fq7f6   0/1     ContainerCreating   0          107m
kubernetes-dashboard-6cdd697d84-25zw2        0/1     ContainerCreating   0          107m

kubectl get svc -n kubernetes-dashboard

# Output:

NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
dashboard-metrics-scraper   ClusterIP   10.104.139.159   <none>        8000/TCP   107m
kubernetes-dashboard        ClusterIP   10.96.48.40      <none>        443/TCP    107m

(7) Edit the kubernetes-dashboard svc and change type from ClusterIP to NodePort

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

# yaml:

...
  ports:
  - port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort
status:
...
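The same change can be made without opening an editor. This is a sketch of a non-interactive alternative using `kubectl patch`, followed by a jsonpath query for the allocated port; the helper name `dashboard_nodeport` is hypothetical.

```shell
# dashboard_nodeport
# Switches the kubernetes-dashboard Service to type NodePort and prints
# the node port that was allocated for it.
dashboard_nodeport() {
  local ns=kubernetes-dashboard svc=kubernetes-dashboard
  kubectl -n "$ns" patch svc "$svc" -p '{"spec":{"type":"NodePort"}}' >/dev/null &&
    kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Example:
#   echo "https://192.168.26.21:$(dashboard_nodeport)"
```

The port is chosen by the cluster unless nodePort is set explicitly, so the value will differ between environments.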

(8) Check the IP and port of the svc after the change

kubectl get svc -n kubernetes-dashboard

# Output:

NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   10.104.139.159   <none>        8000/TCP        111m
kubernetes-dashboard        NodePort    10.96.48.40      <none>        443:32296/TCP   111m

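(9) Open this address in a browser: https://192.168.26.21:32296

Choose to log in with Token authentication

Create sa1

kubectl create sa sa1

Generate a token for sa1

kubectl create token sa1

Then log in with this token

After logging in you will see insufficient-permission warnings, because sa1 has not been granted any permissions yet

Grant administrator permissions to sa1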

kubectl create clusterrolebinding cbind1 --clusterrole=cluster-admin --serviceaccount=default:sa1

Besides dashboard, there are other visualization tools, such as kuboard

Installing kuboard

(1) Make sure metrics-server is already installed

(2) wget https://kuboard.cn/install-script/kuboard.yaml

(3) Pull the image on all nodes

nerdctl pull eipwork/kuboard:latest

(4) Edit kuboard.yaml and change the image pull policy to IfNotPresent

(5) kubectl apply -f kuboard.yaml

(6) Make sure kuboard is running by checking the pods

kubectl get pods -n kube-system

(7) Get the token

echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

(8) Open http://192.168.26.21:32567 and log in with the token obtained above
