The steps for opening the local camera are not explained separately here.

In the code below, all data exchanged over the WebSocket is sent as strings: objects are converted to JSON with Gson before sending and parsed back on receipt.

-

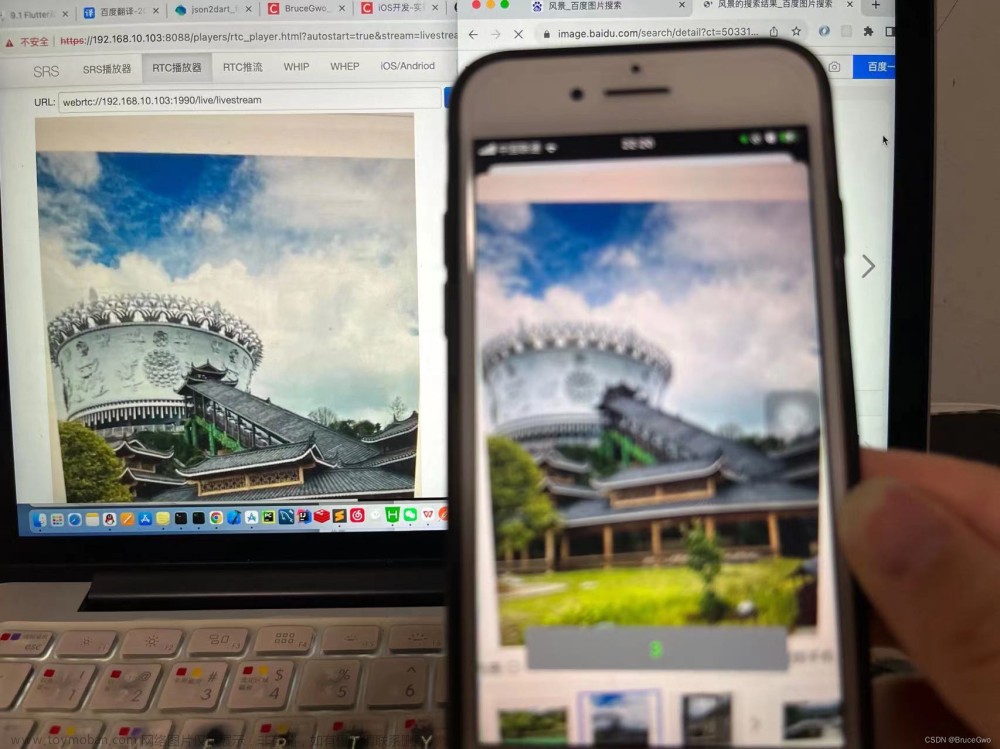

整个过程

- activity_rtc.xml (the layout loaded by `setContentView(R.layout.activity_rtc)` in the activity)

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:padding="@dimen/dp_20">

    <org.webrtc.SurfaceViewRenderer
        android:id="@+id/localView"
        android:layout_width="match_parent"
        android:layout_height="0dp"
        app:layout_constraintHeight_percent="0.5"
        app:layout_constraintLeft_toLeftOf="parent"
        app:layout_constraintTop_toTopOf="parent" />

    <org.webrtc.SurfaceViewRenderer
        android:id="@+id/remoteView"
        android:layout_width="match_parent"
        android:layout_height="0dp"
        app:layout_constraintHeight_percent="0.5"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintLeft_toLeftOf="parent" />

</androidx.constraintlayout.widget.ConstraintLayout>
```

- RtcActivity

```java
package com.dream.app.activity;

import android.app.Activity;
import android.os.Bundle;
import android.util.Log;

import androidx.annotation.Nullable;

import com.dream.app.adapter.PeerConnectionAdapter;
import com.dream.app.adapter.SdpAdapter;
import com.google.gson.Gson;
import com.google.gson.JsonSyntaxException;
import com.google.gson.reflect.TypeToken;

import org.java_websocket.client.WebSocketClient;
import org.java_websocket.handshake.ServerHandshake;
import org.webrtc.Camera1Enumerator;
import org.webrtc.DefaultVideoDecoderFactory;
import org.webrtc.DefaultVideoEncoderFactory;
import org.webrtc.EglBase;
import org.webrtc.IceCandidate;
import org.webrtc.MediaConstraints;
import org.webrtc.MediaStream;
import org.webrtc.PeerConnection;
import org.webrtc.PeerConnectionFactory;
import org.webrtc.SessionDescription;
import org.webrtc.SurfaceTextureHelper;
import org.webrtc.SurfaceViewRenderer;
import org.webrtc.VideoCapturer;
import org.webrtc.VideoSource;
import org.webrtc.VideoTrack;

import java.lang.reflect.Type;
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Collections;
import java.util.Random;

public class RtcActivity extends Activity {

    private static final String TAG = "RtcActivity";

    // One shared Gson instance instead of a new one per message
    private static final Gson GSON = new Gson();
    private static final Type sessionDescriptionType = new TypeToken<SessionDescription>(){}.getType();
    private static final Type iceCandidateType = new TypeToken<IceCandidate>(){}.getType();

    private WebSocketClient webSocketClient = null;
    private PeerConnection peerConnection = null;
    private VideoCapturer videoCapturer;
    private VideoTrack videoTrack;
    private SurfaceViewRenderer localView;
    private SurfaceViewRenderer remoteView;

    @Override
    protected void onCreate(@Nullable Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_rtc);

        // One-time static initialization of the factory
        PeerConnectionFactory.initialize(PeerConnectionFactory.InitializationOptions
                .builder(this)
                .createInitializationOptions());
        EglBase.Context eglBaseContext = EglBase.create().getEglBaseContext();
        PeerConnectionFactory.Options options = new PeerConnectionFactory.Options();

        // Create the video encoder and decoder factories
        DefaultVideoEncoderFactory defaultVideoEncoderFactory =
                new DefaultVideoEncoderFactory(eglBaseContext, true, true); // encoder
        DefaultVideoDecoderFactory defaultVideoDecoderFactory =
                new DefaultVideoDecoderFactory(eglBaseContext); // decoder

        // Create the factory
        PeerConnectionFactory peerConnectionFactory = PeerConnectionFactory.builder()
                .setOptions(options)
                .setVideoEncoderFactory(defaultVideoEncoderFactory)
                .setVideoDecoderFactory(defaultVideoDecoderFactory)
                .createPeerConnectionFactory();

        // Create the videoCapturer from the first front-facing camera that opens.
        // captureToTexture = false: frames are delivered as byte buffers, not textures.
        Camera1Enumerator camera1Enumerator = new Camera1Enumerator(false);
        for (String deviceName : camera1Enumerator.getDeviceNames()) {
            if (camera1Enumerator.isFrontFacing(deviceName)) {
                videoCapturer = camera1Enumerator.createCapturer(deviceName, null);
                if (videoCapturer != null) {
                    break;
                }
                // Keep looking instead of giving up on the first failure
                Log.w(TAG, "onCreate: could not create capturer for " + deviceName);
            }
        }
        if (videoCapturer == null) {
            Log.e(TAG, "onCreate: create capturer fail");
            return;
        }

        // Create the videoSource
        VideoSource videoSource = peerConnectionFactory.createVideoSource(videoCapturer.isScreencast());

        // Initialize the videoCapturer and start capturing
        SurfaceTextureHelper surfaceTextureHelper = SurfaceTextureHelper.create("surfaceTexture", eglBaseContext);
        videoCapturer.initialize(surfaceTextureHelper, this, videoSource.getCapturerObserver());
        videoCapturer.startCapture(480, 640, 30); // width, height, frames per second

        // Create the videoTrack
        videoTrack = peerConnectionFactory.createVideoTrack("videoTrack-1", videoSource);

        // Set up the view that shows the local preview
        localView = findViewById(R.id.localView);
        localView.setMirror(true); // mirror the front-camera preview
        localView.init(eglBaseContext, null);

        // Attach the track to the view so the frames are rendered in it
        videoTrack.addSink(localView);

        // Connect to the signaling server over WebSocket
        try {
            // Random id in [0, 100); nextInt(bound) avoids the Math.abs(Integer.MIN_VALUE) pitfall
            String uriStr = "ws://host/webrtc/" + new Random().nextInt(100);
            Log.d(TAG, "onCreate: " + uriStr);
            webSocketClient = new WebSocketClient(new URI(uriStr)) {

                @Override
                public void onOpen(ServerHandshake handshakedata) {
                    // Create the peerConnection once the signaling channel is open
                    peerConnection = peerConnectionFactory.createPeerConnection(Collections.singletonList(PeerConnection.IceServer
                            .builder("turn:host:3478")
                            .setUsername("admin")
                            .setPassword("123456")
                            .createIceServer()), new PeerConnectionAdapter() {

                        @Override
                        public void onIceGatheringChange(PeerConnection.IceGatheringState iceGatheringState) {
                            super.onIceGatheringChange(iceGatheringState);
                            Log.d(TAG, "onIceGatheringChange: " + iceGatheringState);
                        }

                        @Override
                        public void onIceCandidate(IceCandidate iceCandidate) {
                            super.onIceCandidate(iceCandidate);
                            // Send the local ICE candidate to the remote peer
                            Log.d(TAG, "onIceCandidate: ICE CANDIDATE");
                            webSocketClient.send(GSON.toJson(iceCandidate, iceCandidateType));
                        }

                        @Override
                        public void onAddStream(MediaStream mediaStream) {
                            // Called when the remote peer's stream arrives
                            super.onAddStream(mediaStream);
                            Log.d(TAG, "onAddStream: ICE STREAM");
                            if (mediaStream.videoTracks.isEmpty()) {
                                Log.w(TAG, "onAddStream: remote stream has no video track");
                                return;
                            }
                            // Get the remote videoTrack
                            VideoTrack remoteVideoTrack = mediaStream.videoTracks.get(0);
                            runOnUiThread(() -> {
                                remoteView = findViewById(R.id.remoteView);
                                remoteView.setMirror(false);
                                remoteView.init(eglBaseContext, null);
                                // Attach the track to the view so the frames are rendered in it
                                remoteVideoTrack.addSink(remoteView);
                            });
                        }
                    });
                    if (peerConnection == null) {
                        Log.e(TAG, "onOpen: peerConnection fail");
                        webSocketClient.close();
                        runOnUiThread(RtcActivity.this::finish);
                        return; // do not fall through and dereference a null peerConnection
                    }

                    // Create the local stream and add it to the connection
                    MediaStream stream = peerConnectionFactory.createLocalMediaStream("stream");
                    stream.addTrack(videoTrack);
                    peerConnection.addStream(stream);
                }

                @Override
                public void onMessage(String message) {
                    Log.d(TAG, "onMessage: " + message);
                    if (peerConnection == null) {
                        return;
                    }

                    // ------------------------ SDP exchange ------------------------
                    if ("offer".equals(message)) {
                        // Told to start the call: create an offer and send it out
                        peerConnection.createOffer(new SdpAdapter("offer") {
                            @Override
                            public void onCreateSuccess(SessionDescription sessionDescription) {
                                super.onCreateSuccess(sessionDescription);
                                peerConnection.setLocalDescription(new SdpAdapter("local set local sd"), sessionDescription);
                                // Send the local session description to the remote peer
                                webSocketClient.send("offer-" + GSON.toJson(sessionDescription, sessionDescriptionType));
                            }
                        }, new MediaConstraints());
                        return;
                    }
                    if (message.startsWith("answer-")) {
                        // Received the caller's description: store it, then create an answer
                        peerConnection.setRemoteDescription(new SdpAdapter(),
                                GSON.fromJson(message.substring("answer-".length()), sessionDescriptionType));
                        peerConnection.createAnswer(new SdpAdapter() {
                            @Override
                            public void onCreateSuccess(SessionDescription sessionDescription) {
                                super.onCreateSuccess(sessionDescription);
                                Log.d(TAG, "onCreateSuccess: createAnswer");
                                peerConnection.setLocalDescription(new SdpAdapter(), sessionDescription);
                                // Send the local session description back to the caller
                                webSocketClient.send("answer-offer-" + GSON.toJson(sessionDescription, sessionDescriptionType));
                            }

                            @Override
                            public void onCreateFailure(String s) {
                                super.onCreateFailure(s);
                                Log.e(TAG, "onCreateFailure: fail " + s);
                            }
                        }, new MediaConstraints());
                        return;
                    }
                    if (message.startsWith("offer-receiver-")) {
                        // Received the callee's answer: store it as the remote description
                        peerConnection.setRemoteDescription(new SdpAdapter(),
                                GSON.fromJson(message.substring("offer-receiver-".length()), sessionDescriptionType));
                        return;
                    }

                    // ------------------------ ICE exchange ------------------------
                    // Any other message is treated as a remote IceCandidate
                    try {
                        IceCandidate remoteIceCandidate = GSON.fromJson(message, iceCandidateType);
                        if (remoteIceCandidate != null) {
                            peerConnection.addIceCandidate(remoteIceCandidate);
                        }
                    } catch (JsonSyntaxException e) {
                        Log.e(TAG, "onMessage: not a valid ICE candidate", e);
                    }
                }

                @Override
                public void onClose(int code, String reason, boolean remote) {
                }

                @Override
                public void onError(Exception ex) {
                    Log.e(TAG, "onError: ", ex);
                }
            };
        } catch (URISyntaxException e) {
            Log.e(TAG, "onCreate: ", e);
            return;
        }
        webSocketClient.connect();
    }

    @Override
    protected void onDestroy() {
        super.onDestroy();
        // Any of these fields may still be null if onCreate returned early
        if (webSocketClient != null) {
            webSocketClient.close();
            webSocketClient = null;
        }
        if (videoCapturer != null) {
            // Stop the camera so it is released when the activity goes away
            try {
                videoCapturer.stopCapture();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            videoCapturer.dispose();
            videoCapturer = null;
        }
        if (peerConnection != null) {
            peerConnection.dispose();
            peerConnection = null;
        }
        if (videoTrack != null) {
            videoTrack.dispose();
            videoTrack = null;
        }
        if (localView != null) {
            localView.release();
            localView = null;
        }
        if (remoteView != null) {
            remoteView.release();
            remoteView = null;
        }
    }
}
```
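The `onMessage` handler in the activity tells incoming messages apart purely by string prefix and treats everything else as an ICE candidate. As a rough sketch, that dispatch can be pulled into a small plain-Java helper that can be checked without a device or the WebRTC library. The class `SignalingMessage` and its `Kind` names are hypothetical and not part of the original project. The prefixes are copied from the activity; reading `answer-` as "here is the remote offer, please answer it" is an inference from how the handler reacts, since the signaling server is not shown in this article.

```java
/** Hypothetical helper: splits an incoming signaling string into a kind and a JSON payload. */
final class SignalingMessage {

    enum Kind {
        CREATE_OFFER,   // bare "offer": start the call by creating an offer
        REMOTE_OFFER,   // "answer-<json>": set remote description, then create an answer
        REMOTE_ANSWER,  // "offer-receiver-<json>": set remote description only
        ICE_CANDIDATE   // anything else: the whole message is candidate JSON
    }

    // Prefixes as used by the onMessage handler in RtcActivity
    private static final String CREATE_OFFER = "offer";
    private static final String REMOTE_OFFER_PREFIX = "answer-";
    private static final String REMOTE_ANSWER_PREFIX = "offer-receiver-";

    final Kind kind;
    final String payload; // JSON text; empty for CREATE_OFFER

    private SignalingMessage(Kind kind, String payload) {
        this.kind = kind;
        this.payload = payload;
    }

    static SignalingMessage parse(String message) {
        if (CREATE_OFFER.equals(message)) {
            return new SignalingMessage(Kind.CREATE_OFFER, "");
        }
        if (message.startsWith(REMOTE_OFFER_PREFIX)) {
            return new SignalingMessage(Kind.REMOTE_OFFER,
                    message.substring(REMOTE_OFFER_PREFIX.length()));
        }
        if (message.startsWith(REMOTE_ANSWER_PREFIX)) {
            return new SignalingMessage(Kind.REMOTE_ANSWER,
                    message.substring(REMOTE_ANSWER_PREFIX.length()));
        }
        // Same fallback as the activity: no recognised prefix means ICE candidate JSON
        return new SignalingMessage(Kind.ICE_CANDIDATE, message);
    }

    public static void main(String[] args) {
        SignalingMessage m = parse("offer-receiver-{\"type\":\"ANSWER\"}");
        System.out.println(m.kind + " " + m.payload);
    }
}
```

In the activity, `onMessage` could then switch on `parse(message).kind` and pass `payload` to `Gson.fromJson`, which keeps the prefix strings in one place.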
