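This guide is based on material found online, with the errors I ran into along the way fixed. I have tested it, and the cluster installs successfully.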

1. My environment

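I used VMware Workstation Pro 17 to create three CentOS 7 virtual machines, each with 2 CPUs, 20+ GB of disk and 2+ GB of memory (3 GB for the master node). All of them have Internet access.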

2. Install Docker on all nodes

#Check whether Docker is already installed

rpm -qa|grep docker

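#Remove any old Docker packages (the glob is quoted so the shell does not expand it against files in the current directory)

sudo yum remove 'docker*'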

#Install the yum utilities

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

#Add the Docker CE yum repository (Aliyun mirror)

sudo yum-config-manager \
  --add-repo \
  http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#Build the yum metadata cache

sudo yum makecache

#List the docker-ce versions available

yum list docker-ce --showduplicates | sort -r

#Install a specific Docker version (19.03.9)

sudo yum install -y docker-ce-19.03.9-3.el7 docker-ce-cli-19.03.9-3.el7 containerd.io

#Enable the Docker service at boot and start it now

systemctl enable docker --now

#Create the Docker configuration directory

sudo mkdir -p /etc/docker

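#Configure a registry mirror to speed up image pulls, plus the systemd cgroup driver, log rotation and the overlay2 storage driver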

sudo tee /etc/docker/daemon.json <<-EOF

{

"registry-mirrors": ["http://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

#Reload the systemd configuration

sudo systemctl daemon-reload

#Restart Docker so that daemon.json takes effect

sudo systemctl restart docker

##Check the Docker version to confirm the installation succeeded

[root@localhost ~]# docker version

Client: Docker Engine - Community

Version: 19.03.9

API version: 1.40

Go version: go1.13.10

Git commit: 9d988398e7

Built: Fri May 15 00:25:27 2020

OS/Arch: linux/amd64

Experimental: false

PS: This mirror (http://hub-mirror.c.163.com) worked very well for me; images downloaded quickly.
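To confirm that Docker actually picked up daemon.json, compare the cgroup driver the file requests with the one Docker reports; the Kubernetes container-runtime documentation recommends the systemd driver on hosts that use systemd, such as CentOS 7. The sketch below is an illustration added here, not one of the original steps: cgroup_driver_in is a hypothetical helper, and it does a plain grep text match rather than real JSON parsing, so it assumes the option is written as native.cgroupdriver=VALUE exactly as above.

```shell
# Hypothetical helper: print the cgroup driver requested in a Docker
# daemon.json (default path /etc/docker/daemon.json).
# Plain text match on "native.cgroupdriver=<value>"; not a JSON parser.
cgroup_driver_in() {
  local file="${1:-/etc/docker/daemon.json}"
  grep -o 'native\.cgroupdriver=[a-z]*' "$file" | head -n 1 | cut -d= -f2
}

# Usage on a node (needs a running Docker daemon):
#   want=$(cgroup_driver_in)
#   have=$(docker info --format '{{.CgroupDriver}}')
#   [ "$want" = "$have" ] && echo "cgroup driver OK: $have"
```

On a correctly configured node, both values should read systemd.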

3. Install Kubernetes

3.1 Give every machine its own hostname (it must not be localhost)

In my cluster, 192.168.209.132 is the master, 192.168.209.133 is node1 and 192.168.209.134 is node2.

hostnamectl set-hostname master #run on 192.168.209.132

hostnamectl set-hostname node1 #run on 192.168.209.133

hostnamectl set-hostname node2 #run on 192.168.209.134

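3.2 On all machines: disable swap (if the swap total reported by free -m is not 0, swap is still on), disable SELinux, and allow iptables to see bridged traffic (as described in the official Kubernetes documentation).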

##Disable swap now, and comment out the swap entries in /etc/fstab so it stays off after a reboot

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

## Set SELinux to permissive mode (effectively disabling it)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

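## Let iptables see bridged traffic: load br_netfilter now with modprobe, and list it in modules-load.d so it also loads at boot

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf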

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system
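Before moving on, the state this section sets up can be checked from /proc. A minimal sketch under stated assumptions: swap_is_off and sysctl_is_one are hypothetical helpers, and the file paths are parameters only so the functions can be tried against sample files; on a real node the defaults are used.

```shell
# Succeeds when no swap device is active. /proc/swaps always has one
# header line, so a file with at most one line means "no swap".
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -le 1 ]
}

# Succeeds when a sysctl file (under /proc/sys) contains exactly 1.
# The net/bridge files only exist once br_netfilter is loaded, so a
# missing file also counts as "not set".
sysctl_is_one() {
  [ "$(cat "$1" 2>/dev/null)" = "1" ]
}

# Usage on a node:
#   swap_is_off && echo "swap: off"
#   for k in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
#     sysctl_is_one "/proc/sys/net/bridge/$k" && echo "$k = 1"
#   done
```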

3.3 Disable the firewall on all machines

systemctl stop firewalld.service

systemctl disable firewalld.service

4. Install kubelet, kubeadm and kubectl

4.1 On all machines, configure the Kubernetes yum repository, then install and start kubelet.

#Configure the Kubernetes yum repository

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

#Install kubelet, kubeadm and kubectl (pinned to 1.20.9)

sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9

#Start kubelet (until kubeadm init or kubeadm join runs, it restarts every few seconds; this is expected)

sudo systemctl enable --now kubelet

#On all machines, map the master hostname to its IP

echo "192.168.209.132 master" >> /etc/hosts
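Appending with >> adds a duplicate line every time the step is re-run. A small idempotent variant, sketched here as an assumption rather than the author's method (add_host_entry is a hypothetical helper name), only appends when the hostname is not already mapped:

```shell
# Append "IP NAME" to a hosts file only if NAME is not already mapped
# on a non-comment line. An existing mapping to a different IP is left
# untouched, so check it by hand if the master's IP ever changes.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  awk -v n="$name" '
    !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
    END { exit !found }
  ' "$file" || echo "$ip $name" >> "$file"
}

# Usage (as root, on every machine):
#   add_host_entry 192.168.209.132 master
```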

4.2 Initialize the master node

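I use 192.168.209.132 as the master. The flags: --apiserver-advertise-address is the master's IP; --control-plane-endpoint is the master's hostname; --image-repository is the registry the control-plane images are pulled from; --kubernetes-version sets the Kubernetes version; --service-cidr sets the Service network range; --pod-network-cidr sets the Pod network range.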

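Note: the pod-network-cidr range must not overlap the network the machines are on (192.168.209.0/24 here) or the service-cidr range. Also keep in mind that 192.169.0.0/16 lies outside the private RFC 1918 ranges: it worked in this setup, but pod IPs would shadow any real public addresses in that block, so a non-overlapping private range is the safer choice.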

kubeadm init \
  --apiserver-advertise-address=192.168.209.132 \
  --control-plane-endpoint=master \
  --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
  --kubernetes-version v1.20.9 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=192.169.0.0/16
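The overlap rule in the note can be checked before running kubeadm init. A sketch in shell arithmetic (ip_to_int and cidrs_overlap are hypothetical helper names): two IPv4 CIDR blocks overlap exactly when their network addresses are equal under the mask of the shorter prefix.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local ip="$1" a b c d
  a=${ip%%.*}; ip=${ip#*.}
  b=${ip%%.*}; ip=${ip#*.}
  c=${ip%%.*}; d=${ip#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit 0) if two CIDR blocks, e.g. 10.96.0.0/16 and
# 192.169.0.0/16, share any address. Both arguments must be in a.b.c.d/len form.
cidrs_overlap() {
  local n1 n2 len mask
  n1=$(ip_to_int "${1%/*}")
  n2=$(ip_to_int "${2%/*}")
  len=$(( ${1#*/} < ${2#*/} ? ${1#*/} : ${2#*/} ))   # shorter prefix
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( n1 & mask )) -eq $(( n2 & mask )) ]
}

# Usage with the values from this article:
#   cidrs_overlap 192.169.0.0/16 192.168.209.0/24 && echo "pod CIDR overlaps node network"
#   cidrs_overlap 192.169.0.0/16 10.96.0.0/16 && echo "pod CIDR overlaps service CIDR"
```

With the values used above, neither check reports an overlap; the default Calico range 192.168.0.0/16, by contrast, would contain the 192.168.209.0/24 node network.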

When initialization finishes, save the output below; parts of it are needed later.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join master:6443 --token qvwpva.w2tzw5bgwvswloho \
    --discovery-token-ca-cert-hash sha256:31e38d3227593fa4e5de5fb7e6a868cf927a0936c221d20efbe638daf8827ecd \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

#This part is needed later: worker nodes run it to join the cluster

kubeadm join master:6443 --token qvwpva.w2tzw5bgwvswloho \
    --discovery-token-ca-cert-hash sha256:31e38d3227593fa4e5de5fb7e6a868cf927a0936c221d20efbe638daf8827ecd
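If the init output was saved to a file (for example with kubeadm init ... 2>&1 | tee kubeadm-init.log, where the log file name is an assumption), the worker join command can be rebuilt from it instead of being copied by hand. A sketch with hypothetical helper names; the token pattern follows the bootstrap token format of 6 + 16 lowercase alphanumeric characters:

```shell
# Extract the bootstrap token (format: [a-z0-9]{6}.[a-z0-9]{16}).
join_token() {
  grep -o -- '--token [a-z0-9]\{6\}\.[a-z0-9]\{16\}' "$1" | head -n 1 | cut -d' ' -f2
}

# Extract the CA certificate hash (sha256: followed by 64 hex digits).
join_ca_hash() {
  grep -o 'sha256:[0-9a-f]\{64\}' "$1" | head -n 1
}

# Rebuild the worker join command, assuming the master:6443 endpoint used above.
worker_join_cmd() {
  echo "kubeadm join master:6443 --token $(join_token "$1") --discovery-token-ca-cert-hash $(join_ca_hash "$1")"
}

# Usage:
#   worker_join_cmd kubeadm-init.log
```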

4.3 Set up kubectl

For now, run these on the master node only

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

4.4 Install the network plugin

4.4.1 Switch kube-proxy to IPVS mode

##Switch kube-proxy to IPVS mode

[root@master ~]# kubectl edit configMap kube-proxy -n kube-system

ipvs:

......

kind: KubeProxyConfiguration

metricsBindAddress: ""

mode: "ipvs" #set to ipvs; if left unset, iptables is used by default

##Restart every kube-proxy pod (deleting them makes the kube-proxy DaemonSet recreate them with the new mode)

[root@master ~]# kubectl get pod -A | grep kube-proxy | awk '{system("kubectl delete pod "$2" -n kube-system")}'

pod "kube-proxy-689h8" deleted

##Check the pods on the master node (coredns stays Pending until a network plugin is installed)

[root@master ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-5897cd56c4-56mmj 0/1 Pending 0 8m38s

kube-system coredns-5897cd56c4-mgfmh 0/1 Pending 0 8m38s

kube-system etcd-master 1/1 Running 0 8m51s

kube-system kube-apiserver-master 1/1 Running 0 8m51s

kube-system kube-controller-manager-master 1/1 Running 0 8m51s

kube-system kube-proxy-l6946 1/1 Running 0 8m38s

kube-system kube-scheduler-master 1/1 Running 0 8m51s

##Check whether kube-proxy runs in IPVS mode: the log shows it has switched to the IPVS proxier

[root@master ~]# kubectl logs kube-proxy-l6946 -n kube-system

......

I0623 09:03:38.347008 1 server_others.go:258] Using ipvs Proxier.
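IPVS mode depends on kernel modules: the kube-proxy IPVS documentation lists ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh and nf_conntrack_ipv4 (nf_conntrack on kernels 4.19 and later), plus the ipset package, and kube-proxy may fall back to iptables when the modules are unavailable. The log above shows IPVS in use, so nothing extra was needed here; if a node logs a fallback instead, the sketch below (missing_ipvs_modules is a hypothetical helper that reads /proc/modules) lists what is not loaded.

```shell
# Print each IPVS-related module that is not currently loaded.
# CentOS 7 ships a 3.10 kernel, where the conntrack module is nf_conntrack_ipv4.
missing_ipvs_modules() {
  local modules_file="${1:-/proc/modules}" m
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
    # /proc/modules lines start with "<name> <size> ..."
    grep -q "^$m " "$modules_file" || echo "$m"
  done
}

# Usage on each node (as root):
#   yum install -y ipset ipvsadm
#   for m in $(missing_ipvs_modules); do modprobe "$m"; done
```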

4.4.2 Install the network plugin (Calico)

4.4.2.1 Download calico.yaml

[root@master ~]# curl -O https://docs.projectcalico.org/v3.20/manifests/calico.yaml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 198k 100 198k 0 0 229k 0 --:--:-- --:--:-- --:--:-- 229k

[root@master ~]#

4.4.2.2 List the images calico.yaml needs and pull them in advance

# Log in to Docker Hub first (optional for these public images, but anonymous pulls have a lower rate limit)

docker login

#List the images required

grep image calico.yaml

image: docker.io/calico/cni:v3.20.6

image: docker.io/calico/cni:v3.20.6

image: docker.io/calico/pod2daemon-flexvol:v3.20.6

image: docker.io/calico/node:v3.20.6

image: docker.io/calico/kube-controllers:v3.20.6

#Pull them all locally (if this is very slow, switch the Docker registry mirror; the one configured earlier in this article was very fast for me)

docker pull docker.io/calico/cni:v3.20.6

docker pull docker.io/calico/pod2daemon-flexvol:v3.20.6

docker pull docker.io/calico/node:v3.20.6

docker pull docker.io/calico/kube-controllers:v3.20.6
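The grep above lists calico/cni twice, and calico-node with its CNI images runs as a DaemonSet on every node, so pre-pulling on the master alone still leaves the workers to pull on their own. A sketch of a deduplicated pull loop to run on each node (images_in is a hypothetical helper; it assumes unquoted image: values as in this manifest):

```shell
# List each image referenced in a manifest exactly once.
# Handles both "image: x" and "- image: x" lines.
images_in() {
  sed -n 's/^[[:space:]-]*image:[[:space:]]*\([^[:space:]]*\).*/\1/p' "$1" | sort -u
}

# Usage on each node:
#   for img in $(images_in calico.yaml); do docker pull "$img"; done
```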

4.4.2.3 Deploy

[root@master ~]# kubectl apply -f calico.yaml #to uninstall, use kubectl delete -f calico.yaml

configmap/calico-config unchanged

......

[root@master ~]#

# Confirm the deployment succeeded

[root@master ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6d9cdcd744-49vrr 1/1 Running 0 12m

kube-system calico-node-8gpm2 1/1 Running 0 13m

kube-system coredns-5897cd56c4-56mmj 1/1 Running 0 28m

kube-system coredns-5897cd56c4-mgfmh 1/1 Running 0 28m

kube-system etcd-master 1/1 Running 0 28m

kube-system kube-apiserver-master 1/1 Running 0 28m

kube-system kube-controller-manager-master 1/1 Running 0 28m

kube-system kube-proxy-l6946 1/1 Running 0 119s

kube-system kube-scheduler-master 1/1 Running 0 28m

5. Join the two worker nodes to the master (run on each worker)

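5.1 Recall the output printed after initializing the master in step 4.2. The command for a worker to join the master is below; run it on each worker machine, not on the master. The bootstrap token is valid for 24 hours by default; if it has expired, run kubeadm token create --print-join-command on the master to print a fresh join command.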

kubeadm join master:6443 --token qvwpva.w2tzw5bgwvswloho \
    --discovery-token-ca-cert-hash sha256:31e38d3227593fa4e5de5fb7e6a868cf927a0936c221d20efbe638daf8827ecd

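5.2 Put a copy of the master's /etc/kubernetes/admin.conf on each worker node: create the file on the worker, paste in the master's contents and save it. This lets kubectl run on the workers too.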

vi /etc/kubernetes/admin.conf

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

6. On each node, run kubectl get nodes; every node should show Ready.
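With more nodes, scanning the STATUS column by eye gets error-prone. A small filter sketch (not_ready_nodes is a hypothetical helper name) that reads kubectl get nodes output on stdin; it treats any STATUS other than exactly Ready as a problem, so a cordoned node shown as Ready,SchedulingDisabled is reported as well:

```shell
# Print the name of every node whose STATUS is not exactly "Ready".
# Exit status 1 if at least one such node was found, 0 otherwise.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Usage:
#   kubectl get nodes | not_ready_nodes && echo "all nodes Ready"
```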

7. Install the Ingress Controller

7.1 Download the YAML file

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/mandatory.yaml -O ./ingress-nginx.yaml

7.2 Modify the YAML configuration

$ grep -n5 nodeSelector ingress-nginx.yaml

  replicas: 2 #set the replica count; in host network mode, replicas are never scheduled onto the same node

......

    spec:

      hostNetwork: true #added: use host network mode

      terminationGracePeriodSeconds: 300

      serviceAccountName: nginx-ingress-serviceaccount

      nodeSelector:

        ingress: "true" #replace this line; it decides which machines ingress is deployed on

      containers:

        - name: nginx-ingress-controller

          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0

          args:
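The two pod-spec edits above (adding hostNetwork and replacing the nodeSelector) can also be scripted instead of made by hand. A sketch with awk, assuming the stock nginx-0.30.0 manifest uses kubernetes.io/os: linux as its nodeSelector (check with the grep above before relying on it); patch_ingress_yaml is a hypothetical name, and it leaves replicas unchanged.

```shell
# Copy IN to OUT, inserting "hostNetwork: true" at the pod-spec level
# (same indentation as serviceAccountName) and replacing the
# kubernetes.io/os: linux nodeSelector with ingress: "true".
patch_ingress_yaml() {
  awk '
    /^[ \t]*serviceAccountName: nginx-ingress-serviceaccount/ {
      match($0, /^[ \t]*/)
      print substr($0, 1, RLENGTH) "hostNetwork: true"
    }
    /^[ \t]*kubernetes\.io\/os: linux/ {
      sub(/kubernetes\.io\/os: linux/, "ingress: \"true\"")
    }
    { print }
  ' "$1" > "$2"
}

# Usage:
#   patch_ingress_yaml ingress-nginx.yaml ingress-nginx.patched.yaml
#   diff ingress-nginx.yaml ingress-nginx.patched.yaml
```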

7.3 Label the nodes that will run ingress

kubectl label node master ingress=true

kubectl label node node1 ingress=true

kubectl label node node2 ingress=true

7.4 Create the ingress controller

kubectl apply -f ingress-nginx.yaml

PS: If you hit the error below: I removed the taint and labels involved, re-ran the command, and it then succeeded.

Warning FailedScheduling 38s (x7 over 6m4s) default-scheduler 0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) didn't have free ports for the requested pod ports.

[root@master k8s-app]# kubectl get nodes --show-labels -l "ingress=true"

NAME STATUS ROLES AGE VERSION LABELS

master Ready control-plane,master 94m v1.20.9 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=

node1 Ready <none> 89m v1.20.9 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux

node2 Ready <none> 89m v1.20.9 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux

[root@master k8s-app]# kubectl taint nodes --all node-role.kubernetes.io/master-

node/master untainted

taint "node-role.kubernetes.io/master" not found

taint "node-role.kubernetes.io/master" not found

# Remove the role labels

[root@master k8s-app]# kubectl label nodes master node-role.kubernetes.io/control-plane-

node/master labeled

[root@master k8s-app]# kubectl label nodes master node-role.kubernetes.io/master-

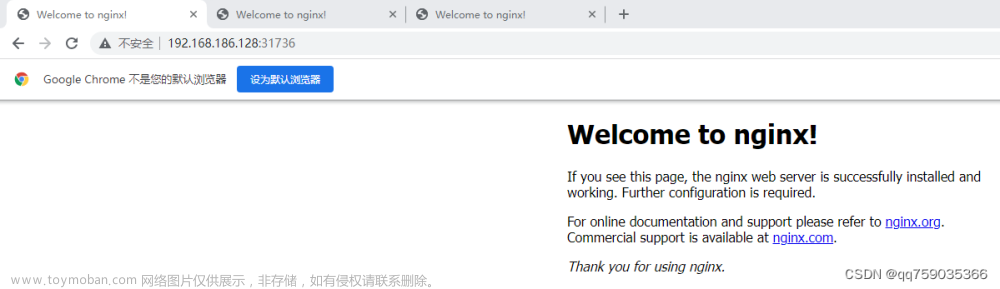

8. Deployment succeeded

[root@master istio]# kubectl version

Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.9", GitCommit:"7a576bc3935a6b555e33346fd73ad77c925e9e4a", GitTreeState:"clean", BuildDate:"2021-07-15T21:01:38Z", GoVersion:"go1.15.14", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.9", GitCommit:"7a576bc3935a6b555e33346fd73ad77c925e9e4a", GitTreeState:"clean", BuildDate:"2021-07-15T20:56:38Z", GoVersion:"go1.15.14", Compiler:"gc", Platform:"linux/amd64"}

[root@master istio]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready <none> 25h v1.20.9

node1 Ready <none> 25h v1.20.9

node2 Ready <none> 25h v1.20.9

And with that, we have a k8s cluster!
