Requirement: values like the following must be tokenized into GB, T, 32403, 1, 2015:

"text": "GB/T 32403.1-2015"

1. Investigation

Output of the IK analyzer currently in use:

```
POST _analyze
{
  "analyzer": "ik_max_word",
  "text": "GB/T 32403.1-2015"
}
```

```
{
  "tokens" : [
    {
      "token" : "gb",
      "start_offset" : 0,
      "end_offset" : 2,
      "type" : "ENGLISH",
      "position" : 0
    },
    {
      "token" : "t",
      "start_offset" : 3,
      "end_offset" : 4,
      "type" : "ENGLISH",
      "position" : 1
    },
    {
      "token" : "32403.1-2015",
      "start_offset" : 5,
      "end_offset" : 17,
      "type" : "LETTER",
      "position" : 2
    },
    {
      "token" : "32403.1",
      "start_offset" : 5,
      "end_offset" : 12,
      "type" : "ARABIC",
      "position" : 3
    },
    {
      "token" : "2015",
      "start_offset" : 13,
      "end_offset" : 17,
      "type" : "ARABIC",
      "position" : 4
    }
  ]
}
```

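IK does not split 32403.1 any further: the only numeric terms it emits are 32403.1-2015, 32403.1 and 2015. Since no term 32403 is ever indexed, a search for 32403 returns nothing.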
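A match query only finds a document if a term produced from the query string is identical to a term stored in the index. This minimal Python sketch replays that check with the IK tokens shown above. It assumes the search analyzer (ik_smart) turns the query 32403 into the single term 32403, which was not captured from the cluster; match_hits is a hypothetical helper that ignores scoring.

```python
# Terms that ik_max_word stored for "GB/T 32403.1-2015" (copied from the _analyze output above)
INDEXED_TERMS = {"gb", "t", "32403.1-2015", "32403.1", "2015"}


def match_hits(indexed_terms, query_terms):
    """Return True if any query term equals an indexed term exactly.

    Models only the default OR behaviour of a match query; scoring,
    fuzziness and similar options are ignored.
    """
    return any(term in indexed_terms for term in query_terms)


# Assumption: ik_smart turns the query "32403" into the single term "32403".
print(match_hits(INDEXED_TERMS, ["32403"]))    # False: the index has no term "32403"
print(match_hits(INDEXED_TERMS, ["32403.1"]))  # True
```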

Solution: use a custom analyzer.

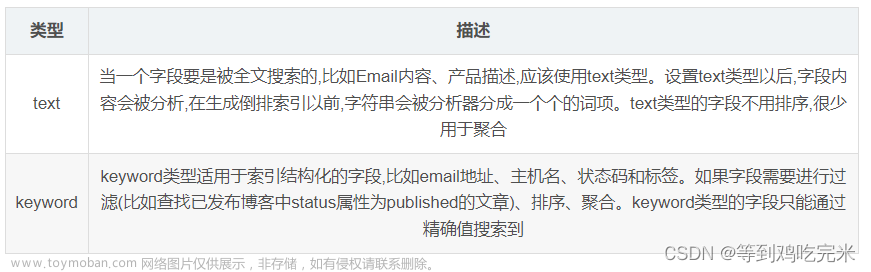

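The tokenizer matches Unicode character classes with a regular expression. Unicode groups characters into seven major general categories:

- P: punctuation
- L: letters
- Z: separators (spaces, line and paragraph separators; note that `\n` and `\t` are control characters in category C, not Z)
- S: symbols (math symbols, currency symbols, etc.)
- M: marks (e.g. combining accents)
- N: numbers (Arabic digits, Roman numerals, etc.)
- C: other (control and format characters, unassigned code points, etc.)

For example, `\pP` matches Chinese and English punctuation such as , . 》 ? / -, while `\pS` matches symbols such as math and currency signs (+ > $). Note that > is a math symbol (category Sm), not punctuation.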
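Python's built-in `re` module does not understand `\pP` (Java's regex engine, which Elasticsearch uses, does), so this sketch looks the categories up with `unicodedata.category` instead. It shows which major category the characters mentioned above fall into; `major_category` is a hypothetical helper name.

```python
import unicodedata


def major_category(ch: str) -> str:
    """Major Unicode general category: the first letter of unicodedata.category(),
    e.g. 'P' (punctuation), 'S' (symbol), 'Z' (separator)."""
    return unicodedata.category(ch)[0]


# Characters from the examples above, plus a few for contrast
for ch in ["/", "-", ".", "?", "》", ">", "+", "$", " ", "\n", "G", "3"]:
    print(repr(ch), unicodedata.category(ch), major_category(ch))
```

The newline prints as `Cc`, which is why a `[\pP\pZ\pS]` tokenizer would not split on line breaks.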

```
# Custom analyzer (this creates a test index, also named punctuation_analyzer, that defines it)
PUT punctuation_analyzer
{
  "settings": {
    "analysis": {
      "analyzer": {
        "punctuation_analyzer": {
          "type": "custom",
          "tokenizer": "punctuation"
        }
      },
      "tokenizer": {
        "punctuation": {
          "type": "pattern",
          "pattern": "[\\pP\\pZ\\pS]"
        }
      }
    }
  }
}
```

Test the analyzer:

```
POST punctuation_analyzer/_analyze
{
  "analyzer": "punctuation_analyzer",
  "text": "GB/T 32403.1-2015"
}
```

```
{
  "tokens" : [
    {
      "token" : "GB",
      "start_offset" : 0,
      "end_offset" : 2,
      "type" : "word",
      "position" : 0
    },
    {
      "token" : "T",
      "start_offset" : 3,
      "end_offset" : 4,
      "type" : "word",
      "position" : 1
    },
    {
      "token" : "32403",
      "start_offset" : 5,
      "end_offset" : 10,
      "type" : "word",
      "position" : 2
    },
    {
      "token" : "1",
      "start_offset" : 11,
      "end_offset" : 12,
      "type" : "word",
      "position" : 3
    },
    {
      "token" : "2015",
      "start_offset" : 13,
      "end_offset" : 17,
      "type" : "word",
      "position" : 4
    }
  ]
}
```

This is exactly the tokenization we need.
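To see where these tokens and offsets come from, the Python sketch below mimics the pattern tokenizer: it splits wherever a character's Unicode category starts with P, Z or S, skips empty pieces, and records offsets and positions the way `_analyze` reports them. It is an approximation (Elasticsearch uses Java's regex engine and Unicode tables, which may differ from Python's for rare characters), and `pattern_tokenize` is a hypothetical name.

```python
import unicodedata

SPLIT_CATEGORIES = {"P", "Z", "S"}  # mirrors the character class [\pP\pZ\pS]


def pattern_tokenize(text: str) -> list:
    """Split text on P/Z/S characters, dropping empty pieces, with _analyze-style offsets."""
    tokens = []
    start = None  # start index of the token being built, or None between tokens
    for i, ch in enumerate(text):
        if unicodedata.category(ch)[0] in SPLIT_CATEGORIES:
            if start is not None:
                tokens.append({"token": text[start:i], "start_offset": start,
                               "end_offset": i, "position": len(tokens)})
                start = None
        elif start is None:
            start = i
    if start is not None:  # flush the last token
        tokens.append({"token": text[start:], "start_offset": start,
                       "end_offset": len(text), "position": len(tokens)})
    return tokens


for t in pattern_tokenize("GB/T 32403.1-2015"):
    print(t)
```

The tokens keep their case: this custom analyzer has no lowercase filter, so a query for gb would not match GB. Adding "filter": ["lowercase"] to punctuation_analyzer is one way to handle that, if case-insensitive search is needed.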

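2. Replace the old index with a new one

The analyzer of an existing field cannot be changed in place, so the index has to be rebuilt: copy the data to a temporary index, delete the original, recreate it with the new analyzer, and copy the data back.

1. Create a temporary index: old_copy

Before creating it, look up the settings and mappings of the old index:

```
# Settings of a single index
GET /testnamenew/_settings

# Mapping (document structure) of the testnamenew index
GET /testnamenew/_mapping
```

Then create the copy from them:

```
PUT /old_copy
{
  "settings": {
    // paste the settings returned above, minus system-generated keys
    // such as uuid, creation_date, provided_name and version
  },
  "mappings": {
    // paste the mappings returned above (the object under "mappings")
  }
}
```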
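Copying the settings by hand is error-prone because `GET _settings` also returns system-generated values. The Python sketch below, with hypothetical helper names and sample responses, unwraps both responses and drops the keys that, as far as we know, a new index will not accept (uuid, creation_date, provided_name, version).

```python
import copy

# Assumption: system-generated settings that GET /<index>/_settings returns
# but that PUT /<new_index> refuses to accept.
NON_COPYABLE_KEYS = ("uuid", "creation_date", "provided_name", "version")


def copyable_settings(settings_response: dict, index: str) -> dict:
    """Build a reusable "settings" body from a GET /<index>/_settings response."""
    index_settings = copy.deepcopy(settings_response[index]["settings"]["index"])
    for key in NON_COPYABLE_KEYS:
        index_settings.pop(key, None)
    return {"index": index_settings}


def copyable_mappings(mapping_response: dict, index: str) -> dict:
    """Unwrap a GET /<index>/_mapping response to the bare "mappings" object."""
    return copy.deepcopy(mapping_response[index]["mappings"])


# Hypothetical responses shaped like the ones returned for the old index
settings_resp = {"old": {"settings": {"index": {
    "number_of_shards": "1", "number_of_replicas": "1",
    "uuid": "hypothetical-uuid", "creation_date": "1690000000000",
    "provided_name": "old", "version": {"created": "7100299"}}}}}
mapping_resp = {"old": {"mappings": {"properties": {"name": {"type": "text"}}}}}

body = {"settings": copyable_settings(settings_resp, "old"),
        "mappings": copyable_mappings(mapping_resp, "old")}
print(body)
```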

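Copy the data. wait_for_completion=false makes the reindex asynchronous: the call returns a task ID immediately instead of waiting, because with a large data set the request could hit ES's default one-minute timeout. slices=9 splits the job into 9 parallel slices, and refresh refreshes the destination index once the copy is done.

```
POST _reindex?slices=9&refresh&wait_for_completion=false
{
  "source": {
    "index": "old"
  },
  "dest": {
    "index": "old_copy"
  }
}

# Check progress with the task ID returned by the call above
GET /_tasks/m-o_8yECRIOiUwxBeSWKsg:132452
```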
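With wait_for_completion=false the task has to be polled. The Python sketch below shows one way to do that, assuming a hypothetical `get_task` callable that sends `GET /_tasks/<task_id>` and returns the parsed JSON. It relies only on the `completed` flag and the `task.status` counters (`total`, `created`) that the tasks API reports for a reindex task; injecting `get_task` and `sleep` keeps the loop testable without a cluster.

```python
import time
from typing import Callable


def wait_for_task(get_task: Callable[[str], dict], task_id: str,
                  interval: float = 5.0,
                  sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll GET /_tasks/<task_id> (via the hypothetical get_task) until it reports completion."""
    while True:
        body = get_task(task_id)
        if body.get("completed"):
            return body  # final body; inspect body["response"]["failures"] before moving on
        status = body.get("task", {}).get("status", {})
        print(f"{task_id}: {status.get('created', 0)} / {status.get('total', '?')} documents created")
        sleep(interval)


# Usage with canned responses instead of a real cluster
canned = iter([
    {"completed": False, "task": {"status": {"total": 10, "created": 4}}},
    {"completed": True, "response": {"created": 10, "failures": []}},
])
result = wait_for_task(lambda _id: next(canned), "m-o_8yECRIOiUwxBeSWKsg:132452",
                       sleep=lambda _s: None)
print(result["response"])
```

Checking `response.failures` in the final body before deleting anything is the point of waiting.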

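2. Delete the old index

Do this only after the reindex task has completed and old_copy holds as many documents as old (compare GET old/_count with GET old_copy/_count).

```
DELETE old
```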

3. Recreate the old index with the custom analyzer

For comparison, the name field in the old mapping uses IK:

```
"name": {
  "type": "text",
  "fields": {
    "keyword": {
      "type": "keyword"
    }
  },
  "analyzer": "ik_max_word",
  "search_analyzer": "ik_smart"
}
```

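The new index definition, using the custom analyzer:

```
PUT /old
{
  "settings": {
    "analysis": {
      "analyzer": {
        "punctuation_analyzer": {      // analyzer name
          "type": "custom",            // a custom analyzer
          "tokenizer": "punctuation"   // uses the punctuation tokenizer defined below
        }
      },
      "tokenizer": {
        "punctuation": {
          "type": "pattern",
          "pattern": "[\\pP\\pZ\\pS]"
        }
      }
    }
  },
  "mappings": {
    // "strict" rejects documents containing unmapped fields, so every other field
    // of the existing documents must be mapped here as well, or the reindex fails.
    // Unlike the old mapping, this one has no name.keyword sub-field.
    "dynamic": "strict",
    "properties": {
      "name": {
        "type": "text",
        "analyzer": "punctuation_analyzer",
        "search_analyzer": "punctuation_analyzer"
      }
    }
  }
}
```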
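Because the new mapping sets "dynamic": "strict", reindexing fails for any document carrying a field that is not listed under properties. A safer way to produce the body, sketched here in Python, is to start from the complete mapping of the old index (as returned by `GET /<index>/_mapping`) and swap only the analyzers of name, which also keeps the name.keyword sub-field. `build_new_index_body` and the sample old mapping (including its stdNo field) are hypothetical.

```python
import copy
import json

# Same analysis settings as in the console request above
ANALYSIS = {
    "analyzer": {"punctuation_analyzer": {"type": "custom", "tokenizer": "punctuation"}},
    "tokenizer": {"punctuation": {"type": "pattern", "pattern": r"[\pP\pZ\pS]"}},
}


def build_new_index_body(old_mappings: dict, field: str = "name",
                         analyzer: str = "punctuation_analyzer") -> dict:
    """Start from the full old mapping and swap only the analyzers of one field."""
    mappings = copy.deepcopy(old_mappings)
    mappings["dynamic"] = "strict"
    field_def = mappings["properties"][field]
    field_def["analyzer"] = analyzer
    field_def["search_analyzer"] = analyzer
    return {"settings": {"analysis": copy.deepcopy(ANALYSIS)}, "mappings": mappings}


# Hypothetical old mapping: "name" as shown above, plus one more field the documents contain
old_mappings = {"properties": {
    "name": {"type": "text", "fields": {"keyword": {"type": "keyword"}},
             "analyzer": "ik_max_word", "search_analyzer": "ik_smart"},
    "stdNo": {"type": "text"},
}}
print(json.dumps(build_new_index_body(old_mappings), indent=2))
```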

4. Migrate the data back

```
POST _reindex?slices=9&refresh&wait_for_completion=false
{
  "source": {
    "index": "old_copy"
  },
  "dest": {
    "index": "old"
  }
}

# Check progress with the task ID returned by the call above
GET /_tasks/m-o_8yECRIOiUwxBeSWKsg:132452
```

Test

Index a test document (skip this if the index already contains data), then search for 32403:

```
PUT /old/_doc/1
{
  "name": "GB/T 32403.1-2015"
}

GET /old/_search
{
  "query": {
    "match": {
      "name": "32403"
    }
  }
}
```

Response (captured while testing against the mapping_analyzer index, which is why _index shows that name):

```
{
  "took" : 5,
  "timed_out" : false,
  "_shards" : {
    "total" : 1,
    "successful" : 1,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : {
      "value" : 1,
      "relation" : "eq"
    },
    "max_score" : 0.2876821,
    "hits" : [
      {
        "_index" : "mapping_analyzer",
        "_type" : "_doc",
        "_id" : "1",
        "_score" : 0.2876821,
        "_source" : {
          "name" : "GB/T 32403.1-2015"
        }
      }
    ]
  }
}
```

The document is found.

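5. One last small bug

After going live, searches still found nothing: the application does not address the index by name but through an alias, and deleting the old index had removed its alias along with it (an alias belongs to the indices it points to). Adding the alias back fixed it:

```
POST _aliases
{
  "actions": [
    {
      "add": {
        "index": "old",
        "alias": "dd"   // your alias name
      }
    }
  ]
}
```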
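One way to avoid losing the alias, sketched here in Python, is to save the response of `GET /old/_alias` before the DELETE and turn it into a `POST _aliases` body afterwards. `readd_alias_actions` and the saved response are hypothetical, and the sketch copies only alias names, not options such as filter or routing.

```python
import json


def readd_alias_actions(alias_response: dict, index: str) -> dict:
    """Turn a saved GET /<index>/_alias response into a POST _aliases body
    that attaches the same alias names to the recreated index.
    Alias options such as filter or routing are not copied in this sketch."""
    aliases = alias_response.get(index, {}).get("aliases", {})
    return {"actions": [{"add": {"index": index, "alias": name}} for name in sorted(aliases)]}


# Hypothetical response saved before deleting: the old index carried the alias "dd"
saved = {"old": {"aliases": {"dd": {}}}}
print(json.dumps(readd_alias_actions(saved, "old")))
```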

6. Additional content: a fuller custom analyzer

```
# Custom analyzer, defined in an index of our own
PUT myindex
{
  "settings": {                          // analysis is configured under settings
    "analysis": {
      "analyzer": {
        "my_div_analyzer": {             // analyzer name
          "type": "custom",              // a custom analyzer
          "char_filter": ["emoticons"],  // char filter, defined below
          "tokenizer": "punctuation",    // tokenizer, defined below
          "filter": [                    // lowercase, then english_stop (defined below)
            "lowercase",
            "english_stop"
          ]
        }
      },
      "tokenizer": {
        "punctuation": {                 // name is arbitrary; a regex (pattern) tokenizer
          "type": "pattern",             // that splits on . , ! and ?
          "pattern": "[.,!?]"
        }
      },
      "char_filter": {
        "emoticons": {                   // name is arbitrary; mapping type: :) -> _happy_, :( -> _sad_
          "type": "mapping",
          "mappings": [
            ":) => _happy_",
            ":( => _sad_"
          ]
        }
      },
      "filter": {
        "english_stop": {                // name is arbitrary; a stop filter using
          "type": "stop",                // the predefined _english_ stop-word list
          "stopwords": "_english_"
        }
      }
    }
  }
}
```

Article source: https://www.toymoban.com/news/detail-616572.html

Result:

```
POST myindex/_analyze
{
  "analyzer": "my_div_analyzer",
  "text": "I am a :) person,and you?"
}
```

The resulting tokens:

```
{
  "tokens" : [
    {
      "token" : "i am a _happy_ person",
      "start_offset" : 0,
      "end_offset" : 17,
      "type" : "word",
      "position" : 0
    },
    {
      "token" : "and you",
      "start_offset" : 18,
      "end_offset" : 25,
      "type" : "word",
      "position" : 1
    }
  ]
}
```

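Uppercase letters were lowercased, the smiley became _happy_, and the text was split at the comma (the tokenizer also splits at . ! and ?): exactly what the custom analyzer defines. english_stop removes nothing here, because a stop filter only drops tokens that are exactly a stop word, and both tokens are whole phrases.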
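An analyzer runs its char filters first, then the tokenizer, then the token filters in order. The Python sketch below replays that order for my_div_analyzer. Simplifications (assumptions): the mapping char filter is modelled with `str.replace`, offsets are not tracked, and `ENGLISH_STOP` is what we believe Lucene's `_english_` list contains.

```python
import re

# Assumption: the stop words behind "_english_" (Lucene's English stop set, to our knowledge)
ENGLISH_STOP = {"a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if",
                "in", "into", "is", "it", "no", "not", "of", "on", "or", "such", "that",
                "the", "their", "then", "there", "these", "they", "this", "to", "was",
                "will", "with"}
EMOTICONS = {":)": "_happy_", ":(": "_sad_"}


def char_filter(text: str) -> str:
    # mapping char filter: replace each emoticon with its word
    for src, dst in EMOTICONS.items():
        text = text.replace(src, dst)
    return text


def tokenize(text: str) -> list:
    # pattern tokenizer [.,!?]: split on those characters and drop empty pieces
    return [piece for piece in re.split(r"[.,!?]", text) if piece]


def my_div_analyzer(text: str) -> list:
    tokens = tokenize(char_filter(text))
    tokens = [t.lower() for t in tokens]                # lowercase filter
    return [t for t in tokens if t not in ENGLISH_STOP]  # english_stop filter


print(my_div_analyzer("I am a :) person,and you?"))  # ['i am a _happy_ person', 'and you']
print(my_div_analyzer("The,cat!"))                   # ['cat']: "the" is a whole token, so it is dropped
```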

Common commands

```
# Search
GET _search
{
  "query": {
    "match_all": {}
  }
}
```

```
# Inspect the tokens produced by the custom analyzer
POST punctuation_analyzer/_analyze
{
  "analyzer": "punctuation_analyzer",
  "text": "GB/T 32403.1-2015"
}
```

```
# IK tokenization
POST _analyze
{
  "analyzer": "ik_max_word",
  "text": "GB/T 32403.1-2015"
}
```

```
# Conditional query
GET std_v3/_search
{
  "query": {
    "bool": {
      "must": [
        {
          "bool": {
            "should": [
              {
                "match_phrase": {
                  "stdNo": {
                    "query": "32403"
                  }
                }
              }
            ]
          }
        }
      ]
    }
  }
}
```
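Unlike match, match_phrase also requires the query terms to appear at consecutive positions. The sketch below checks that on plain token lists, assuming (the std_v3 mapping is not shown) that stdNo is analyzed with punctuation_analyzer, so "GB/T 32403.1-2015" becomes GB, T, 32403, 1, 2015 at positions 0 to 4. `phrase_match` is a hypothetical helper that models slop 0 only.

```python
def phrase_match(doc_tokens: list, query_tokens: list) -> bool:
    """True if query_tokens appear in doc_tokens as a contiguous run (slop 0),
    assuming consecutive positions, as the pattern tokenizer emits them."""
    n = len(query_tokens)
    if n == 0:
        return False
    return any(doc_tokens[i:i + n] == query_tokens
               for i in range(len(doc_tokens) - n + 1))


doc = ["GB", "T", "32403", "1", "2015"]
print(phrase_match(doc, ["32403"]))          # True  (query "32403")
print(phrase_match(doc, ["32403", "1"]))     # True  (query "32403.1")
print(phrase_match(doc, ["32403", "2015"]))  # False (both terms exist but are not adjacent)
```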

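```
# Custom analyzer
PUT punctuation_analyzer
{
  "settings": {
    "analysis": {
      "analyzer": {
        "punctuation_analyzer": {
          "type": "custom",
          "tokenizer": "punctuation"
        }
      },
      "tokenizer": {
        "punctuation": {
          "type": "pattern",
          "pattern": "[\\pP\\pZ\\pS]"
        }
      }
    }
  }
}
```

```
# Alternative custom analyzer. It uses the same index name, so delete the index
# above first. The keyword tokenizer keeps the whole value as one token and the
# pattern_replace char filter strips punctuation; the "punctuation" tokenizer
# defined here is not used by the analyzer.
PUT punctuation_analyzer
{
  "settings": {
    "analysis": {
      "analyzer": {
        "punctuation_analyzer": {
          "type": "custom",
          "tokenizer": "keyword",
          "char_filter": ["punctuation_filter"]
        }
      },
      "tokenizer": {
        "punctuation": {
          "type": "pattern",
          "pattern": "[.,!? ]"
        }
      },
      "char_filter": {
        "punctuation_filter": {
          "type": "pattern_replace",
          "pattern": "[\\p{Punct}\\pP]",
          "replacement": ""
        }
      }
    }
  }
}
```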
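This alternative analyzer behaves very differently from the one used above: the keyword tokenizer emits the whole filtered value as a single token, so a match query for 32403 would not find the document. The Python sketch approximates the char filter: Java's `\p{Punct}` is, by default, the ASCII punctuation set (the same characters as Python's `string.punctuation`), and `\pP` is approximated with `unicodedata` categories. The function names are hypothetical.

```python
import string
import unicodedata


def strip_punct(text: str) -> str:
    # Approximates the pattern_replace char filter: drop ASCII punctuation
    # (Java's \p{Punct}) and any Unicode punctuation (\pP, category P*).
    return "".join(ch for ch in text
                   if ch not in string.punctuation
                   and not unicodedata.category(ch).startswith("P"))


def keyword_with_punct_filter(text: str) -> list:
    # keyword tokenizer: the whole filtered input becomes a single token
    return [strip_punct(text)]


print(keyword_with_punct_filter("GB/T 32403.1-2015"))  # ['GBT 3240312015']
```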

```
# Create an index with the custom analyzer and mapping
PUT /mapping_analyzer
{
  "settings": {
    "analysis": {
      "analyzer": {
        "punctuation_analyzer": {
          "type": "custom",
          "tokenizer": "punctuation"
        }
      },
      "tokenizer": {
        "punctuation": {
          "type": "pattern",
          "pattern": "[\\pP\\pZ\\pS]"
        }
      }
    }
  },
  "mappings": {
    "dynamic": "strict",
    "properties": {
      "name": {
        "type": "text",
        "analyzer": "punctuation_analyzer",
        "search_analyzer": "punctuation_analyzer"
      }
    }
  }
}
```

```
# Index a document
PUT /mapping_analyzer/_doc/1
{
  "name": "GB/T 32403.1-2015"
}
```

```
# Search
GET /mapping_analyzer/_search
{
  "query": {
    "match": {
      "name": "32403"
    }
  }
}
```

```
# Add an alias
POST _aliases
{
  "actions": [
    {
      "add": {
        "index": "std_v3",
        "alias": "std"
      }
    }
  ]
}
```

```
# Remove an alias
POST /_aliases
{
  "actions": [
    {
      "remove": {
        "index": "mapping_analyzer",
        "alias": "std"
      }
    }
  ]
}
```

```
# Show index templates matching hzeg-search-*
GET _template/hzeg-search-*
```

```
# Reindex (asynchronous)
POST _reindex?slices=9&refresh&wait_for_completion=false
{
  "source": {
    "index": "file_info_sps"
  },
  "dest": {
    "index": "file_info_sps_demo1"
  }
}
```

```
# Delete an index
DELETE std_v3
```

```
# Get task progress
GET /_tasks/m-o_8yECRIOiUwxBeSWKsg:2672628
```

```
# Create an index
PUT file_sps_demo1
{
  "settings": {
    "index": {
      "number_of_shards": "1",
      "max_result_window": "500000",
      "number_of_replicas": "1"
    }
  },
  "mappings": {
    "properties": {
      // field mappings go here
    }
  }
}
```
