方法1 不推荐

package com.yy.uniq

import org.apache.flink.configuration.{Configuration, RestOptions}

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.table.api.bridge.scala.StreamTableEnvironment

import java.time.ZoneId

/**

* desc:

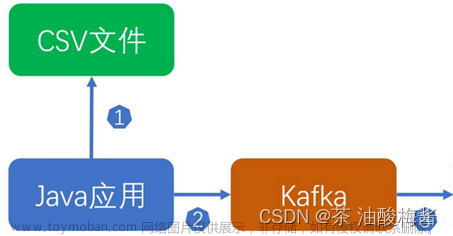

* stream1 join id去重后的stream1 on l.时间戳=r.时间戳 确保同一个id只输出一行.

* 通过group by也可以.

* {"order_id":9999,"ts":1627660800000}

*/

object KafkaSchemaUniqIdDemo1 {

def main(args: Array[String]): Unit = {

val conf = new Configuration

conf.setInteger(RestOptions.PORT, 28080)

val env = StreamExecutionEnvironment.createLocalEnvironmentWithWebUI(conf)

val tEnv: StreamTableEnvironment = StreamTableEnvironment.create(env)

// val tEnv: TableEnvironment = TableEnvironment.create(EnvironmentSettings.newInstance().build())

tEnv.getConfig.setLocalTimeZone(ZoneId.of("Asia/Shanghai"))

// val Use文章来源地址https://www.toymoban.com/news/detail-618307.html

文章来源:https://www.toymoban.com/news/detail-618307.html

到了这里,关于flink数据流 单(kafka)流根据id去重的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!