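1. Install postgres with Docker

Run docker pull postgres to pull the latest postgres image.

2. Check that the postgres image was pulled successfully: docker images (lists local images) should show the latest postgres image.

3. Create a docker-compose.yml file. The account is postgres and the password is 123456; the data directory is created automatically.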

version: "3.8"
services:
  dev-postgres:
    image: postgres:latest
    container_name: postgres
    environment:
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: 123456
    ports:
      - 15433:5432
    volumes:
      - ./data:/var/lib/postgresql/data
      - /etc/localtime:/etc/localtime:ro # mount the host time into the container, read-only
    restart: always

4. Run docker-compose up -d to start postgres in the background. Once it is up, run docker ps -a to list the containers; the postgres container should have been created, exposed on host port 15433.
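A container can show up in docker ps before PostgreSQL inside it accepts connections. As a rough sketch, a small retry helper can poll pg_isready (a client tool shipped with PostgreSQL) inside the container. The helper name wait_for and the retry counts are illustrative assumptions, not part of the original setup.

```shell
# wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Hypothetical helper: re-runs COMMAND until it succeeds or ATTEMPTS is used up.
wait_for() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage (needs the postgres container from the compose file above):
# wait_for 30 2 docker exec postgres pg_isready -U postgres
```

The helper returns 0 as soon as the command succeeds, so it can gate later steps such as importing the SQL.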

5. Connect to postgres with a client tool by entering the IP, port, account, and password.
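If no graphical client is at hand, the same connection can be tried from a terminal with psql. The sketch below only assembles a libpq-style connection URI from the values used in this article; the function name pg_conn_uri and the 127.0.0.1 host are assumptions for illustration.

```shell
# pg_conn_uri HOST [PORT] [USER] [DB]
# Hypothetical helper: prints a connection URI; defaults follow this article's compose file.
pg_conn_uri() {
  host=$1
  port=${2:-15433}
  user=${3:-postgres}
  db=${4:-postgres}
  printf 'postgresql://%s@%s:%s/%s\n' "$user" "$host" "$port" "$db"
}

# Usage (psql then prompts for the password, 123456 in this setup):
# psql "$(pg_conn_uri 127.0.0.1)"
```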

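6. Create a database for Nacos (the configuration later in this article connects to one named nacos_config) and import the tables. I put the tables on a network drive, so you can download nacos-config.sql and import it directly. The SQL is also at the end of this article; if you would rather not use Baidu Netdisk, copy it from there and run it.

Link: https://pan.baidu.com/s/1ZOfrc2yWllBSgcgV8-o0-Q

Extraction code: e2hb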
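The database can also be created and the dump imported from the command line instead of a GUI client. This is a minimal sketch under stated assumptions: the container is named postgres as in the compose file, the database is called nacos_config as in the JDBC URL, and the DOCKER variable and both function names are hypothetical conveniences that allow a dry run.

```shell
DOCKER=${DOCKER:-docker}   # set DOCKER=echo to print the commands instead of running them
PG_CONTAINER=postgres      # container_name from the compose file
PG_USER=postgres
NACOS_DB=nacos_config      # database name used in db.url.0

# Create the empty database inside the running container.
create_nacos_db() {
  "$DOCKER" exec -i "$PG_CONTAINER" psql -U "$PG_USER" -c "CREATE DATABASE $NACOS_DB"
}

# import_nacos_sql FILE: feed a SQL dump on the host to psql in the container.
import_nacos_sql() {
  "$DOCKER" exec -i "$PG_CONTAINER" psql -U "$PG_USER" -d "$NACOS_DB" < "$1"
}

# Usage:
# create_nacos_db && import_nacos_sql ./nacos-config.sql
```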

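7. Create a nacos directory and upload the required files. plugins holds a postgres plugin: Nacos targets MySQL out of the box, so postgres support needs a plugin. conf/application.properties is the Nacos configuration file, where Nacos is configured. I uploaded these files to Baidu Netdisk as well. Installing Nacos requires a JDK; if you do not have one, see https://editor.csdn.net/md/?articleId=131560825 for installation.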
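The directory layout can be prepared in one step. The sketch below creates the four folders that the Nacos docker-compose file later in this article mounts (conf, plugins, logs, data); the function name make_nacos_dirs and the default ./nacos base path are assumptions.

```shell
# make_nacos_dirs [BASE]
# Hypothetical helper: creates the folders mounted by the Nacos compose file.
make_nacos_dirs() {
  base=${1:-./nacos}
  mkdir -p "$base/conf" "$base/plugins" "$base/logs" "$base/data"
}

# Usage:
# make_nacos_dirs ./nacos
# then copy application.properties into ./nacos/conf and the plugin jar into ./nacos/plugins
```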

Contents of application.properties:

#

# Copyright 1999-2021 Alibaba Group Holding Ltd.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

#*************** Spring Boot Related Configurations ***************#

### Default web context path:

server.servlet.contextPath=/nacos

### Include message field

server.error.include-message=ALWAYS

### Default web server port:

server.port=8848

#*************** Network Related Configurations ***************#

### If prefer hostname over ip for Nacos server addresses in cluster.conf:

# nacos.inetutils.prefer-hostname-over-ip=false

### Specify local server's IP:

# nacos.inetutils.ip-address=

#*************** Config Module Related Configurations ***************#

### If use MySQL as datasource:

### Deprecated configuration property, it is recommended to use `spring.sql.init.platform` replaced.

# spring.datasource.platform=postgresql

#spring.sql.init.platform=mysql

### Count of DB:

#db.num=1

### Connect URL of DB:

#db.url.0=jdbc:mysql://127.0.0.1:3306/ry-config?characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useUnicode=true&useSSL=false&serverTimezone=UTC

#db.user.0=root

#db.password.0=root

spring.datasource.platform=postgresql

db.num=1

db.url.0=jdbc:postgresql://localhost:15433/nacos_config?tcpKeepAlive=true&reWriteBatchedInserts=true&ApplicationName=nacos_java

db.user=postgres

db.password=123456

db.pool.config.driverClassName=org.postgresql.Driver

### Connection pool configuration: hikariCP

db.pool.config.connectionTimeout=30000

db.pool.config.validationTimeout=10000

db.pool.config.maximumPoolSize=20

db.pool.config.minimumIdle=2

#*************** Naming Module Related Configurations ***************#

### If enable data warmup. If set to false, the server would accept request without local data preparation:

# nacos.naming.data.warmup=true

### If enable the instance auto expiration, kind like of health check of instance:

# nacos.naming.expireInstance=true

### Add in 2.0.0

### The interval to clean empty service, unit: milliseconds.

# nacos.naming.clean.empty-service.interval=60000

### The expired time to clean empty service, unit: milliseconds.

# nacos.naming.clean.empty-service.expired-time=60000

### The interval to clean expired metadata, unit: milliseconds.

# nacos.naming.clean.expired-metadata.interval=5000

### The expired time to clean metadata, unit: milliseconds.

# nacos.naming.clean.expired-metadata.expired-time=60000

### The delay time before push task to execute from service changed, unit: milliseconds.

# nacos.naming.push.pushTaskDelay=500

### The timeout for push task execute, unit: milliseconds.

# nacos.naming.push.pushTaskTimeout=5000

### The delay time for retrying failed push task, unit: milliseconds.

# nacos.naming.push.pushTaskRetryDelay=1000

### Since 2.0.3

### The expired time for inactive client, unit: milliseconds.

# nacos.naming.client.expired.time=180000

#*************** CMDB Module Related Configurations ***************#

### The interval to dump external CMDB in seconds:

# nacos.cmdb.dumpTaskInterval=3600

### The interval of polling data change event in seconds:

# nacos.cmdb.eventTaskInterval=10

### The interval of loading labels in seconds:

# nacos.cmdb.labelTaskInterval=300

### If turn on data loading task:

# nacos.cmdb.loadDataAtStart=false

#*************** Metrics Related Configurations ***************#

### Metrics for prometheus

#management.endpoints.web.exposure.include=*

### Metrics for elastic search

management.metrics.export.elastic.enabled=false

#management.metrics.export.elastic.host=http://localhost:9200

### Metrics for influx

management.metrics.export.influx.enabled=false

#management.metrics.export.influx.db=springboot

#management.metrics.export.influx.uri=http://localhost:8086

#management.metrics.export.influx.auto-create-db=true

#management.metrics.export.influx.consistency=one

#management.metrics.export.influx.compressed=true

#*************** Access Log Related Configurations ***************#

### If turn on the access log:

server.tomcat.accesslog.enabled=true

### The access log pattern:

server.tomcat.accesslog.pattern=%h %l %u %t "%r" %s %b %D %{User-Agent}i %{Request-Source}i

### The directory of access log:

server.tomcat.basedir=file:.

#*************** Access Control Related Configurations ***************#

### If enable spring security, this option is deprecated in 1.2.0:

#spring.security.enabled=false

### The ignore urls of auth

nacos.security.ignore.urls=/,/error,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-ui/public/**,/v1/auth/**,/v1/console/health/**,/actuator/**,/v1/console/server/**

### The auth system to use, currently only 'nacos' and 'ldap' is supported:

nacos.core.auth.system.type=nacos

### If turn on auth system:

nacos.core.auth.enabled=true

### Turn on/off caching of auth information. By turning on this switch, the update of auth information would have a 15 seconds delay.

nacos.core.auth.caching.enabled=true

### Since 1.4.1, Turn on/off white auth for user-agent: nacos-server, only for upgrade from old version.

nacos.core.auth.enable.userAgentAuthWhite=false

### Since 1.4.1, worked when nacos.core.auth.enabled=true and nacos.core.auth.enable.userAgentAuthWhite=false.

### The two properties is the white list for auth and used by identity the request from other server.

nacos.core.auth.server.identity.key=test

nacos.core.auth.server.identity.value=test

### worked when nacos.core.auth.system.type=nacos

### The token expiration in seconds:

nacos.core.auth.plugin.nacos.token.cache.enable=false

nacos.core.auth.plugin.nacos.token.expire.seconds=18000

### The default token (Base64 String):

nacos.core.auth.plugin.nacos.token.secret.key=SecretKey012345678901234567890123456789012345678901234567890123456789

### worked when nacos.core.auth.system.type=ldap,{0} is Placeholder,replace login username

#nacos.core.auth.ldap.url=ldap://localhost:389

#nacos.core.auth.ldap.basedc=dc=example,dc=org

#nacos.core.auth.ldap.userDn=cn=admin,${nacos.core.auth.ldap.basedc}

#nacos.core.auth.ldap.password=admin

#nacos.core.auth.ldap.userdn=cn={0},dc=example,dc=org

#nacos.core.auth.ldap.filter.prefix=uid

#nacos.core.auth.ldap.case.sensitive=true

#*************** Istio Related Configurations ***************#

### If turn on the MCP server:

nacos.istio.mcp.server.enabled=false

### Password for the Nacos access address

nacos.config.password=nacos

### Username for the Nacos access address

nacos.config.username=nacos

#*************** Core Related Configurations ***************#

### set the WorkerID manually

# nacos.core.snowflake.worker-id=

### Member-MetaData

# nacos.core.member.meta.site=

# nacos.core.member.meta.adweight=

# nacos.core.member.meta.weight=

### MemberLookup

### Addressing pattern category, If set, the priority is highest

# nacos.core.member.lookup.type=[file,address-server]

## Set the cluster list with a configuration file or command-line argument

# nacos.member.list=192.168.16.101:8847?raft_port=8807,192.168.16.101?raft_port=8808,192.168.16.101:8849?raft_port=8809

## for AddressServerMemberLookup

# Maximum number of retries to query the address server upon initialization

# nacos.core.address-server.retry=5

## Server domain name address of [address-server] mode

# address.server.domain=jmenv.tbsite.net

## Server port of [address-server] mode

# address.server.port=8080

## Request address of [address-server] mode

# address.server.url=/nacos/serverlist

#*************** JRaft Related Configurations ***************#

### Sets the Raft cluster election timeout, default value is 5 second

# nacos.core.protocol.raft.data.election_timeout_ms=5000

### Sets the amount of time the Raft snapshot will execute periodically, default is 30 minute

# nacos.core.protocol.raft.data.snapshot_interval_secs=30

### raft internal worker threads

# nacos.core.protocol.raft.data.core_thread_num=8

### Number of threads required for raft business request processing

# nacos.core.protocol.raft.data.cli_service_thread_num=4

### raft linear read strategy. Safe linear reads are used by default, that is, the Leader tenure is confirmed by heartbeat

# nacos.core.protocol.raft.data.read_index_type=ReadOnlySafe

### rpc request timeout, default 5 seconds

# nacos.core.protocol.raft.data.rpc_request_timeout_ms=5000

#*************** Distro Related Configurations ***************#

### Distro data sync delay time, when sync task delayed, task will be merged for same data key. Default 1 second.

# nacos.core.protocol.distro.data.sync.delayMs=1000

### Distro data sync timeout for one sync data, default 3 seconds.

# nacos.core.protocol.distro.data.sync.timeoutMs=3000

### Distro data sync retry delay time when sync data failed or timeout, same behavior with delayMs, default 3 seconds.

# nacos.core.protocol.distro.data.sync.retryDelayMs=3000

### Distro data verify interval time, verify synced data whether expired for a interval. Default 5 seconds.

# nacos.core.protocol.distro.data.verify.intervalMs=5000

### Distro data verify timeout for one verify, default 3 seconds.

# nacos.core.protocol.distro.data.verify.timeoutMs=3000

### Distro data load retry delay when load snapshot data failed, default 30 seconds.

# nacos.core.protocol.distro.data.load.retryDelayMs=30000

### enable to support prometheus service discovery

#nacos.prometheus.metrics.enabled=true

### Since 2.3

#*************** Grpc Configurations ***************#

## sdk grpc(between nacos server and client) configuration

## Sets the maximum message size allowed to be received on the server.

#nacos.remote.server.grpc.sdk.max-inbound-message-size=10485760

## Sets the time(milliseconds) without read activity before sending a keepalive ping. The typical default is two hours.

#nacos.remote.server.grpc.sdk.keep-alive-time=7200000

## Sets a time(milliseconds) waiting for read activity after sending a keepalive ping. Defaults to 20 seconds.

#nacos.remote.server.grpc.sdk.keep-alive-timeout=20000

## Sets a time(milliseconds) that specify the most aggressive keep-alive time clients are permitted to configure. The typical default is 5 minutes

#nacos.remote.server.grpc.sdk.permit-keep-alive-time=300000

## cluster grpc(inside the nacos server) configuration

#nacos.remote.server.grpc.cluster.max-inbound-message-size=10485760

## Sets the time(milliseconds) without read activity before sending a keepalive ping. The typical default is two hours.

#nacos.remote.server.grpc.cluster.keep-alive-time=7200000

## Sets a time(milliseconds) waiting for read activity after sending a keepalive ping. Defaults to 20 seconds.

#nacos.remote.server.grpc.cluster.keep-alive-timeout=20000

## Sets a time(milliseconds) that specify the most aggressive keep-alive time clients are permitted to configure. The typical default is 5 minutes

#nacos.remote.server.grpc.cluster.permit-keep-alive-time=300000

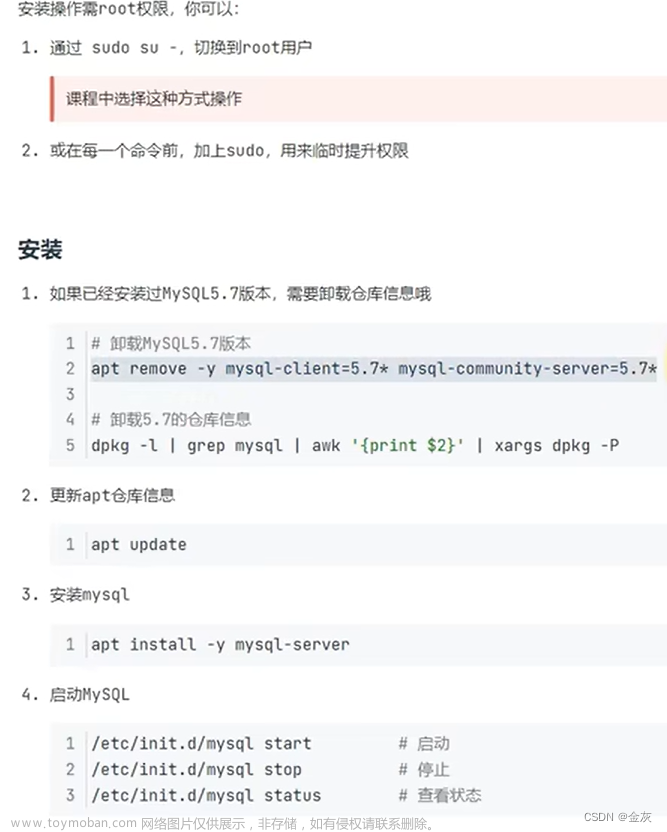

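As shown in the figure, change the database connection and the Nacos login account and password; the default account and password are both nacos. Note that inside the Nacos container localhost refers to the container itself, so db.url.0 generally needs the IP of the host where postgres is published.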
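Before starting Nacos it can help to confirm which datasource values are actually active, since the file also contains commented-out MySQL lines. A minimal sketch, assuming the file lives at ./conf/application.properties; the function name get_prop is hypothetical.

```shell
# get_prop FILE KEY
# Hypothetical helper: prints the value of the last uncommented "KEY=value" line.
get_prop() {
  awk -v key="$2" '
    index($0, key "=") == 1 { val = substr($0, length(key) + 2) }
    END { print val }
  ' "$1"
}

# Usage:
# get_prop ./conf/application.properties db.url.0
# get_prop ./conf/application.properties db.user
```

Lines starting with # do not begin with the key, so the commented MySQL settings are skipped.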

docker-compose.yml file (Nacos is mapped to host port 18848)

version: "3"
services:
  nacos-pgsql:
    image: nacos/nacos-server:v2.2.3
    container_name: nacos-pgsql
    volumes:
      - ./logs:/home/nacos/logs
      - ./data:/home/nacos/data
      - ./plugins/:/home/nacos/plugins
      - ./conf/application.properties:/home/nacos/conf/application.properties:rw
    ports:
      - '18848:8848'
      - '19848:9848'
    environment:
      - MODE=standalone
      - PREFER_HOST_MODE=hostname
      - NACOS_SERVER_PORT=8848
    restart: always

8. Run docker-compose up -d to start Nacos, then use docker logs -f --tail=1000 nacos-pgsql to follow the startup log.

9. Run docker ps -a to confirm that the nacos container started successfully.

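10. Open the console in a browser:

The configuration entries shown here come from the imported config_info table. By default I added the configuration for the ruoyi microservice edition; adjust it to your own situation.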
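The console address follows from the compose file and application.properties above: host port 18848 plus the /nacos context path. The sketch below only builds that URL; the function name nacos_console_url is hypothetical, and the readiness path in the usage comment is an assumption based on the /v1/console/health/** entry in nacos.security.ignore.urls.

```shell
# nacos_console_url HOST [PORT]
# Hypothetical helper: prints the console base URL for this setup.
nacos_console_url() {
  host=$1
  port=${2:-18848}
  printf 'http://%s:%s/nacos\n' "$host" "$port"
}

# Usage:
# open "$(nacos_console_url 127.0.0.1)" in a browser, or probe it with:
# curl -s "$(nacos_console_url 127.0.0.1)/v1/console/health/readiness"
```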

11. Finally, here is the imported SQL, the nacos_config.sql file:

/*

Navicat Premium Data Transfer

Source Server : 本地postgres

Source Server Type : PostgreSQL

Source Server Version : 140001

Source Host : 192.168.159.141:15433

Source Catalog : nacos_config

Source Schema : public

Target Server Type : PostgreSQL

Target Server Version : 140001

File Encoding : 65001

Date: 07/07/2023 13:04:39

*/

-- ----------------------------

-- Sequence structure for config_info_aggr_id_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."config_info_aggr_id_seq";

CREATE SEQUENCE "public"."config_info_aggr_id_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for config_info_beta_id_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."config_info_beta_id_seq";

CREATE SEQUENCE "public"."config_info_beta_id_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for config_info_id_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."config_info_id_seq";

CREATE SEQUENCE "public"."config_info_id_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for config_info_tag_id_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."config_info_tag_id_seq";

CREATE SEQUENCE "public"."config_info_tag_id_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for config_tags_relation_id_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."config_tags_relation_id_seq";

CREATE SEQUENCE "public"."config_tags_relation_id_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for config_tags_relation_nid_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."config_tags_relation_nid_seq";

CREATE SEQUENCE "public"."config_tags_relation_nid_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for group_capacity_id_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."group_capacity_id_seq";

CREATE SEQUENCE "public"."group_capacity_id_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for his_config_info_nid_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."his_config_info_nid_seq";

CREATE SEQUENCE "public"."his_config_info_nid_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for tenant_capacity_id_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."tenant_capacity_id_seq";

CREATE SEQUENCE "public"."tenant_capacity_id_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Sequence structure for tenant_info_id_seq

-- ----------------------------

DROP SEQUENCE IF EXISTS "public"."tenant_info_id_seq";

CREATE SEQUENCE "public"."tenant_info_id_seq"

INCREMENT 1

MINVALUE 1

MAXVALUE 9223372036854775807

START 1

CACHE 1;

-- ----------------------------

-- Table structure for config_info

-- ----------------------------

DROP TABLE IF EXISTS "public"."config_info";

CREATE TABLE "public"."config_info" (

"id" int8 NOT NULL DEFAULT nextval('config_info_id_seq'::regclass),

"data_id" varchar(255) COLLATE "pg_catalog"."default" NOT NULL,

"group_id" varchar(255) COLLATE "pg_catalog"."default",

"content" text COLLATE "pg_catalog"."default" NOT NULL,

"md5" varchar(32) COLLATE "pg_catalog"."default",

"gmt_create" timestamp(6) NOT NULL,

"gmt_modified" timestamp(6) NOT NULL,

"src_user" text COLLATE "pg_catalog"."default",

"src_ip" varchar(20) COLLATE "pg_catalog"."default",

"app_name" varchar(128) COLLATE "pg_catalog"."default",

"tenant_id" varchar(128) COLLATE "pg_catalog"."default",

"c_desc" varchar(256) COLLATE "pg_catalog"."default",

"c_use" varchar(64) COLLATE "pg_catalog"."default",

"effect" varchar(64) COLLATE "pg_catalog"."default",

"type" varchar(64) COLLATE "pg_catalog"."default",

"c_schema" text COLLATE "pg_catalog"."default",

"encrypted_data_key" text COLLATE "pg_catalog"."default" NOT NULL

)

;

COMMENT ON COLUMN "public"."config_info"."id" IS 'id';

COMMENT ON COLUMN "public"."config_info"."data_id" IS 'data_id';

COMMENT ON COLUMN "public"."config_info"."content" IS 'content';

COMMENT ON COLUMN "public"."config_info"."md5" IS 'md5';

COMMENT ON COLUMN "public"."config_info"."gmt_create" IS '创建时间';

COMMENT ON COLUMN "public"."config_info"."gmt_modified" IS '修改时间';

COMMENT ON COLUMN "public"."config_info"."src_user" IS 'source user';

COMMENT ON COLUMN "public"."config_info"."src_ip" IS 'source ip';

COMMENT ON COLUMN "public"."config_info"."tenant_id" IS '租户字段';

COMMENT ON COLUMN "public"."config_info"."encrypted_data_key" IS '秘钥';

COMMENT ON TABLE "public"."config_info" IS 'config_info';

-- ----------------------------

-- Records of config_info

-- ----------------------------

INSERT INTO "public"."config_info" VALUES (1, 'application-dev.yml', 'DEFAULT_GROUP', 'spring:
  autoconfigure:
    exclude: com.alibaba.druid.spring.boot.autoconfigure.DruidDataSourceAutoConfigure
  mvc:
    pathmatch:
      matching-strategy: ant_path_matcher

# feign 配置
feign:
  sentinel:
    enabled: true
  okhttp:
    enabled: true
  httpclient:
    enabled: false
  client:
    config:
      default:
        connectTimeout: 10000
        readTimeout: 10000
  compression:
    request:
      enabled: true
    response:
      enabled: true

# 暴露监控端点
management:
  endpoints:
    web:
      exposure:
        include: ''*''
', '09ac789741e880f0a19053afad53bf8e', '2020-05-20 12:00:00', '2023-07-07 12:22:59.258', 'nacos', '192.168.159.1', '', '', '通用配置', 'null', 'null', 'yaml', '', '');

INSERT INTO "public"."config_info" VALUES (4, 'ruoyi-monitor-dev.yml', 'DEFAULT_GROUP', '# spring
spring:
  security:
    user:
      name: ruoyi
      password: 123456
  boot:
    admin:
      ui:
        title: 若依服务状态监控
', '6f122fd2bfb8d45f858e7d6529a9cd44', '2020-11-20 00:00:00', '2022-09-29 02:48:54', 'nacos', '0:0:0:0:0:0:0:1', '', '', '监控中心', 'null', 'null', 'yaml', '', '');

INSERT INTO "public"."config_info" VALUES (8, 'ruoyi-file-dev.yml', 'DEFAULT_GROUP', '# 本地文件上传
file:
  domain: http://127.0.0.1:9300
  path: D:/ruoyi/uploadPath
  prefix: /statics

# FastDFS配置
fdfs:
  domain: http://8.129.231.12
  soTimeout: 3000
  connectTimeout: 2000
  trackerList: 8.129.231.12:22122

# Minio配置
minio:
  url: http://8.129.231.12:9000
  accessKey: minioadmin
  secretKey: minioadmin
  bucketName: test', '5382b93f3d8059d6068c0501fdd41195', '2020-11-20 00:00:00', '2020-12-21 21:01:59', NULL, '0:0:0:0:0:0:0:1', '', '', '文件服务', 'null', 'null', 'yaml', NULL, '');

INSERT INTO "public"."config_info" VALUES (3, 'ruoyi-auth-dev.yml', 'DEFAULT_GROUP', 'spring:
  redis:
    host: 127.0.0.1
    port: 16379
    database: 6
    password: 123456
', '46e7fed554a8e3b1df989db8f43cab45', '2020-11-20 00:00:00', '2023-07-07 12:31:26.316', 'nacos', '192.168.159.1', '', '', '认证中心', 'null', 'null', 'yaml', '', '');

INSERT INTO "public"."config_info" VALUES (7, 'ruoyi-job-dev.yml', 'DEFAULT_GROUP', '# spring配置
spring:
  redis:
    host: 127.0.0.1
    port: 16379
    database: 6
    password: 123456
  datasource:
    driver-class-name: org.postgresql.Driver
    url: jdbc:postgresql://127.0.0.1:15433/postgres?stringtype=unspecified&useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=true&serverTimezone=GMT%2B8
    username: postgres
    password: 123456

# mybatis配置
mybatis:
  # 搜索指定包别名
  typeAliasesPackage: com.ruoyi.job.domain
  # 配置mapper的扫描,找到所有的mapper.xml映射文件
  mapperLocations: classpath:mapper/**/*.xml

# swagger配置
swagger:
  title: 定时任务接口文档
  license: Powered By ruoyi
  licenseUrl: https://ruoyi.vip
', '660b961752d9802be227915a9a0bd95f', '2020-11-20 00:00:00', '2023-07-07 12:32:12.452', 'nacos', '192.168.159.1', '', '', '定时任务', 'null', 'null', 'yaml', '', '');

INSERT INTO "public"."config_info" VALUES (6, 'ruoyi-gen-dev.yml', 'DEFAULT_GROUP', '# spring配置
spring:
  redis:
    host: 127.0.0.1
    port: 16379
    database: 6
    password: 123456
  datasource:
    driver-class-name: org.postgresql.Driver
    url: jdbc:postgresql://127.0.0.1:15433/postgres?stringtype=unspecified&useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=true&serverTimezone=GMT%2B8
    username: postgres
    password: 123456

# mybatis配置
mybatis:
  # 搜索指定包别名
  typeAliasesPackage: com.ruoyi.gen.domain
  # 配置mapper的扫描,找到所有的mapper.xml映射文件
  mapperLocations: classpath:mapper/**/*.xml

# swagger配置
swagger:
  title: 代码生成接口文档
  license: Powered By ruoyi
  licenseUrl: https://ruoyi.vip

# 代码生成
gen:
  # 作者
  author: ruoyi
  # 默认生成包路径 system 需改成自己的模块名称 如 system monitor tool
  packageName: com.ruoyi.system
  # 自动去除表前缀,默认是false
  autoRemovePre: false
  # 表前缀(生成类名不会包含表前缀,多个用逗号分隔)
  tablePrefix: sys_
', '9f25e70735722cba18ed13252a844226', '2020-11-20 00:00:00', '2023-07-07 12:35:33.763', 'nacos', '192.168.159.1', '', '', '代码生成', 'null', 'null', 'yaml', '', '');

INSERT INTO "public"."config_info" VALUES (2, 'ruoyi-gateway-dev.yml', 'DEFAULT_GROUP', 'spring:
  redis:
    host: 127.0.0.1
    port: 16379
    database: 6
    password: 123456
  cloud:
    gateway:
      discovery:
        locator:
          lowerCaseServiceId: true
          enabled: true
      routes:
        # 认证中心
        - id: ruoyi-auth
          uri: lb://ruoyi-auth
          predicates:
            - Path=/auth/**
          filters:
            # 验证码处理
            - CacheRequestFilter
            - ValidateCodeFilter
            - StripPrefix=1
        # 代码生成
        - id: ruoyi-gen
          uri: lb://ruoyi-gen
          predicates:
            - Path=/code/**
          filters:
            - StripPrefix=1
        # 定时任务
        - id: ruoyi-job
          uri: lb://ruoyi-job
          predicates:
            - Path=/schedule/**
          filters:
            - StripPrefix=1
        # 系统模块
        - id: ruoyi-system
          uri: lb://ruoyi-system
          predicates:
            - Path=/system/**
          filters:
            - StripPrefix=1
        # 文件服务
        - id: ruoyi-file
          uri: lb://ruoyi-file
          predicates:
            - Path=/file/**
          filters:
            - StripPrefix=1

# 安全配置
security:
  # 验证码
  captcha:
    enabled: true
    type: math
  # 防止XSS攻击
  xss:
    enabled: true
    excludeUrls:
      - /system/notice
  # 不校验白名单
  ignore:
    whites:
      - /auth/logout
      - /auth/login
      - /auth/register
      - /*/v2/api-docs
      - /csrf
', 'b664a860ff9867716baa3eb77e245f21', '2020-05-14 14:17:55', '2023-07-07 12:34:01.656', 'nacos', '192.168.159.1', '', '', '网关模块', 'null', 'null', 'yaml', '', '');

INSERT INTO "public"."config_info" VALUES (9, 'sentinel-ruoyi-gateway', 'DEFAULT_GROUP', '[

{

"resource": "ruoyi-auth",

"count": 500,

"grade": 1,

"limitApp": "default",

"strategy": 0,

"controlBehavior": 0

},

{

"resource": "ruoyi-system",

"count": 1000,

"grade": 1,

"limitApp": "default",

"strategy": 0,

"controlBehavior": 0

},

{

"resource": "ruoyi-gen",

"count": 200,

"grade": 1,

"limitApp": "default",

"strategy": 0,

"controlBehavior": 0

},

{

"resource": "ruoyi-job",

"count": 300,

"grade": 1,

"limitApp": "default",

"strategy": 0,

"controlBehavior": 0

}

]', '411936d945573749e5956f2df0b04989', '2020-11-20 00:00:00', '2023-07-07 12:31:01.346', 'nacos', '192.168.159.1', '', '', '限流策略', 'null', 'null', 'json', '', '');

INSERT INTO "public"."config_info" VALUES (5, 'ruoyi-system-dev.yml', 'DEFAULT_GROUP', '# spring配置
spring:
  redis:
    host: 127.0.0.1
    port: 16379
    database: 6
    password: 123456
  datasource:
    druid:
      stat-view-servlet:
        enabled: true
        loginUsername: admin
        loginPassword: 123456
    dynamic:
      druid:
        initial-size: 5
        min-idle: 5
        maxActive: 20
        maxWait: 60000
        timeBetweenEvictionRunsMillis: 60000
        minEvictableIdleTimeMillis: 300000
        validationQuery: SELECT 1
        testWhileIdle: true
        testOnBorrow: false
        testOnReturn: false
        poolPreparedStatements: true
        maxPoolPreparedStatementPerConnectionSize: 20
        filters: stat,slf4j
        connectionProperties: druid.stat.mergeSql\=true;druid.stat.slowSqlMillis\=5000
      datasource:
        # 主库数据源
        master:
          driver-class-name: org.postgresql.Driver
          url: jdbc:postgresql://127.0.0.1:15433/postgres?stringtype=unspecified&useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=true&serverTimezone=GMT%2B8
          username: postgres
          password: 123456
        # 从库数据源
        # slave:
          # username:
          # password:
          # url:
          # driver-class-name:

# mybatis配置
mybatis:
  # 搜索指定包别名
  typeAliasesPackage: com.ruoyi.system
  # 配置mapper的扫描,找到所有的mapper.xml映射文件
  mapperLocations: classpath:mapper/**/*.xml
  configuration:
    map-underscore-to-camel-case: true
    cache-enabled: false
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl

logging:
  level:
    com.ruoyi.system.mapper: debug

# swagger配置
swagger:
  title: 系统模块接口文档
  license: Powered By ruoyi
  licenseUrl: https://ruoyi.vip', '86213807e390739d81a7eadfadf68cea', '2020-11-20 00:00:00', '2023-07-07 12:33:06.76', 'nacos', '192.168.159.1', '', '', '系统模块', 'null', 'null', 'yaml', '', '');

-- ----------------------------

-- Table structure for config_info_aggr

-- ----------------------------

DROP TABLE IF EXISTS "public"."config_info_aggr";

CREATE TABLE "public"."config_info_aggr" (

"id" int8 NOT NULL DEFAULT nextval('config_info_aggr_id_seq'::regclass),

"data_id" varchar(255) COLLATE "pg_catalog"."default" NOT NULL,

"group_id" varchar(255) COLLATE "pg_catalog"."default" NOT NULL,

"datum_id" varchar(255) COLLATE "pg_catalog"."default" NOT NULL,

"content" text COLLATE "pg_catalog"."default" NOT NULL,

"gmt_modified" timestamp(6) NOT NULL,

"app_name" varchar(128) COLLATE "pg_catalog"."default",

"tenant_id" varchar(128) COLLATE "pg_catalog"."default"

)

;

COMMENT ON COLUMN "public"."config_info_aggr"."id" IS 'id';

COMMENT ON COLUMN "public"."config_info_aggr"."data_id" IS 'data_id';

COMMENT ON COLUMN "public"."config_info_aggr"."group_id" IS 'group_id';

COMMENT ON COLUMN "public"."config_info_aggr"."datum_id" IS 'datum_id';

COMMENT ON COLUMN "public"."config_info_aggr"."content" IS '内容';

COMMENT ON COLUMN "public"."config_info_aggr"."gmt_modified" IS '修改时间';

COMMENT ON COLUMN "public"."config_info_aggr"."tenant_id" IS '租户字段';

COMMENT ON TABLE "public"."config_info_aggr" IS '增加租户字段';

-- ----------------------------

-- Records of config_info_aggr

-- ----------------------------

-- ----------------------------

-- Table structure for config_info_beta

-- ----------------------------

DROP TABLE IF EXISTS "public"."config_info_beta";

CREATE TABLE "public"."config_info_beta" (

"id" int8 NOT NULL DEFAULT nextval('config_info_beta_id_seq'::regclass),

"data_id" varchar(255) COLLATE "pg_catalog"."default" NOT NULL,

"group_id" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"app_name" varchar(128) COLLATE "pg_catalog"."default",

"content" text COLLATE "pg_catalog"."default" NOT NULL,

"beta_ips" varchar(1024) COLLATE "pg_catalog"."default",

"md5" varchar(32) COLLATE "pg_catalog"."default",

"gmt_create" timestamp(6) NOT NULL,

"gmt_modified" timestamp(6) NOT NULL,

"src_user" text COLLATE "pg_catalog"."default",

"src_ip" varchar(20) COLLATE "pg_catalog"."default",

"tenant_id" varchar(128) COLLATE "pg_catalog"."default",

"encrypted_data_key" text COLLATE "pg_catalog"."default" NOT NULL

)

;

COMMENT ON COLUMN "public"."config_info_beta"."id" IS 'id';

COMMENT ON COLUMN "public"."config_info_beta"."data_id" IS 'data_id';

COMMENT ON COLUMN "public"."config_info_beta"."group_id" IS 'group_id';

COMMENT ON COLUMN "public"."config_info_beta"."app_name" IS 'app_name';

COMMENT ON COLUMN "public"."config_info_beta"."content" IS 'content';

COMMENT ON COLUMN "public"."config_info_beta"."beta_ips" IS 'betaIps';

COMMENT ON COLUMN "public"."config_info_beta"."md5" IS 'md5';

COMMENT ON COLUMN "public"."config_info_beta"."gmt_create" IS '创建时间';

COMMENT ON COLUMN "public"."config_info_beta"."gmt_modified" IS '修改时间';

COMMENT ON COLUMN "public"."config_info_beta"."src_user" IS 'source user';

COMMENT ON COLUMN "public"."config_info_beta"."src_ip" IS 'source ip';

COMMENT ON COLUMN "public"."config_info_beta"."tenant_id" IS '租户字段';

COMMENT ON COLUMN "public"."config_info_beta"."encrypted_data_key" IS '秘钥';

COMMENT ON TABLE "public"."config_info_beta" IS 'config_info_beta';

-- ----------------------------

-- Records of config_info_beta

-- ----------------------------

-- ----------------------------

-- Table structure for config_info_tag

-- ----------------------------

DROP TABLE IF EXISTS "public"."config_info_tag";

CREATE TABLE "public"."config_info_tag" (

"id" int8 NOT NULL DEFAULT nextval('config_info_tag_id_seq'::regclass),

"data_id" varchar(255) COLLATE "pg_catalog"."default" NOT NULL,

"group_id" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"tenant_id" varchar(128) COLLATE "pg_catalog"."default",

"tag_id" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"app_name" varchar(128) COLLATE "pg_catalog"."default",

"content" text COLLATE "pg_catalog"."default" NOT NULL,

"md5" varchar(32) COLLATE "pg_catalog"."default",

"gmt_create" timestamp(6) NOT NULL,

"gmt_modified" timestamp(6) NOT NULL,

"src_user" text COLLATE "pg_catalog"."default",

"src_ip" varchar(20) COLLATE "pg_catalog"."default"

)

;

COMMENT ON COLUMN "public"."config_info_tag"."id" IS 'id';

COMMENT ON COLUMN "public"."config_info_tag"."data_id" IS 'data_id';

COMMENT ON COLUMN "public"."config_info_tag"."group_id" IS 'group_id';

COMMENT ON COLUMN "public"."config_info_tag"."tenant_id" IS 'tenant_id';

COMMENT ON COLUMN "public"."config_info_tag"."tag_id" IS 'tag_id';

COMMENT ON COLUMN "public"."config_info_tag"."app_name" IS 'app_name';

COMMENT ON COLUMN "public"."config_info_tag"."content" IS 'content';

COMMENT ON COLUMN "public"."config_info_tag"."md5" IS 'md5';

COMMENT ON COLUMN "public"."config_info_tag"."gmt_create" IS '创建时间';

COMMENT ON COLUMN "public"."config_info_tag"."gmt_modified" IS '修改时间';

COMMENT ON COLUMN "public"."config_info_tag"."src_user" IS 'source user';

COMMENT ON COLUMN "public"."config_info_tag"."src_ip" IS 'source ip';

COMMENT ON TABLE "public"."config_info_tag" IS 'config_info_tag';

-- ----------------------------

-- Records of config_info_tag

-- ----------------------------

-- ----------------------------

-- Table structure for config_tags_relation

-- ----------------------------

DROP TABLE IF EXISTS "public"."config_tags_relation";

CREATE TABLE "public"."config_tags_relation" (

"id" int8 NOT NULL DEFAULT nextval('config_tags_relation_id_seq'::regclass),

"tag_name" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"tag_type" varchar(64) COLLATE "pg_catalog"."default",

"data_id" varchar(255) COLLATE "pg_catalog"."default" NOT NULL,

"group_id" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"tenant_id" varchar(128) COLLATE "pg_catalog"."default",

"nid" int8 NOT NULL DEFAULT nextval('config_tags_relation_nid_seq'::regclass)

)

;

COMMENT ON COLUMN "public"."config_tags_relation"."id" IS 'id';

COMMENT ON COLUMN "public"."config_tags_relation"."tag_name" IS 'tag_name';

COMMENT ON COLUMN "public"."config_tags_relation"."tag_type" IS 'tag_type';

COMMENT ON COLUMN "public"."config_tags_relation"."data_id" IS 'data_id';

COMMENT ON COLUMN "public"."config_tags_relation"."group_id" IS 'group_id';

COMMENT ON COLUMN "public"."config_tags_relation"."tenant_id" IS 'tenant_id';

COMMENT ON TABLE "public"."config_tags_relation" IS 'config_tag_relation';

-- ----------------------------

-- Records of config_tags_relation

-- ----------------------------

-- ----------------------------

-- Table structure for group_capacity

-- ----------------------------

DROP TABLE IF EXISTS "public"."group_capacity";

CREATE TABLE "public"."group_capacity" (

"id" int8 NOT NULL DEFAULT nextval('group_capacity_id_seq'::regclass),

"group_id" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"quota" int4 NOT NULL,

"usage" int4 NOT NULL,

"max_size" int4 NOT NULL,

"max_aggr_count" int4 NOT NULL,

"max_aggr_size" int4 NOT NULL,

"max_history_count" int4 NOT NULL,

"gmt_create" timestamp(6) NOT NULL,

"gmt_modified" timestamp(6) NOT NULL

)

;

COMMENT ON COLUMN "public"."group_capacity"."id" IS 'primary key ID';

COMMENT ON COLUMN "public"."group_capacity"."group_id" IS 'Group ID, an empty string means the whole cluster';

COMMENT ON COLUMN "public"."group_capacity"."quota" IS 'quota, 0 means use the default value';

COMMENT ON COLUMN "public"."group_capacity"."usage" IS 'usage';

COMMENT ON COLUMN "public"."group_capacity"."max_size" IS 'maximum size of a single config in bytes, 0 means use the default value';

COMMENT ON COLUMN "public"."group_capacity"."max_aggr_count" IS 'maximum number of aggregated sub-configs, 0 means use the default value';

COMMENT ON COLUMN "public"."group_capacity"."max_aggr_size" IS 'maximum sub-config size of a single aggregated datum in bytes, 0 means use the default value';

COMMENT ON COLUMN "public"."group_capacity"."max_history_count" IS 'maximum number of change-history entries';

COMMENT ON COLUMN "public"."group_capacity"."gmt_create" IS 'creation time';

COMMENT ON COLUMN "public"."group_capacity"."gmt_modified" IS 'modification time';

COMMENT ON TABLE "public"."group_capacity" IS 'capacity information for the cluster and each group';

-- ----------------------------

-- Records of group_capacity

-- ----------------------------

-- ----------------------------

-- Table structure for his_config_info

-- ----------------------------

DROP TABLE IF EXISTS "public"."his_config_info";

CREATE TABLE "public"."his_config_info" (

"id" int8 NOT NULL,

"nid" int8 NOT NULL DEFAULT nextval('his_config_info_nid_seq'::regclass),

"data_id" varchar(255) COLLATE "pg_catalog"."default" NOT NULL,

"group_id" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"app_name" varchar(128) COLLATE "pg_catalog"."default",

"content" text COLLATE "pg_catalog"."default" NOT NULL,

"md5" varchar(32) COLLATE "pg_catalog"."default",

"gmt_create" timestamp(6) NOT NULL DEFAULT '2010-05-05 00:00:00'::timestamp without time zone,

"gmt_modified" timestamp(6) NOT NULL,

"src_user" text COLLATE "pg_catalog"."default",

"src_ip" varchar(20) COLLATE "pg_catalog"."default",

"op_type" char(10) COLLATE "pg_catalog"."default",

"tenant_id" varchar(128) COLLATE "pg_catalog"."default",

"encrypted_data_key" text COLLATE "pg_catalog"."default" NOT NULL

)

;

COMMENT ON COLUMN "public"."his_config_info"."app_name" IS 'app_name';

COMMENT ON COLUMN "public"."his_config_info"."tenant_id" IS 'tenant field';

COMMENT ON COLUMN "public"."his_config_info"."encrypted_data_key" IS 'secret key';

COMMENT ON TABLE "public"."his_config_info" IS 'multi-tenant adaptation';

-- ----------------------------

-- Records of his_config_info

-- ----------------------------

-- ----------------------------

-- Table structure for permissions

-- ----------------------------

DROP TABLE IF EXISTS "public"."permissions";

CREATE TABLE "public"."permissions" (

"role" varchar(50) COLLATE "pg_catalog"."default" NOT NULL,

"resource" varchar(512) COLLATE "pg_catalog"."default" NOT NULL,

"action" varchar(8) COLLATE "pg_catalog"."default" NOT NULL

)

;

-- ----------------------------

-- Records of permissions

-- ----------------------------

-- ----------------------------

-- Table structure for roles

-- ----------------------------

DROP TABLE IF EXISTS "public"."roles";

CREATE TABLE "public"."roles" (

"username" varchar(50) COLLATE "pg_catalog"."default" NOT NULL,

"role" varchar(50) COLLATE "pg_catalog"."default" NOT NULL

)

;

-- ----------------------------

-- Records of roles

-- ----------------------------

INSERT INTO "public"."roles" VALUES ('nacos', 'ROLE_ADMIN');

-- ----------------------------

-- Table structure for tenant_capacity

-- ----------------------------

DROP TABLE IF EXISTS "public"."tenant_capacity";

CREATE TABLE "public"."tenant_capacity" (

"id" int8 NOT NULL DEFAULT nextval('tenant_capacity_id_seq'::regclass),

"tenant_id" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"quota" int4 NOT NULL,

"usage" int4 NOT NULL,

"max_size" int4 NOT NULL,

"max_aggr_count" int4 NOT NULL,

"max_aggr_size" int4 NOT NULL,

"max_history_count" int4 NOT NULL,

"gmt_create" timestamp(6) NOT NULL,

"gmt_modified" timestamp(6) NOT NULL

)

;

COMMENT ON COLUMN "public"."tenant_capacity"."id" IS 'primary key ID';

COMMENT ON COLUMN "public"."tenant_capacity"."tenant_id" IS 'Tenant ID';

COMMENT ON COLUMN "public"."tenant_capacity"."quota" IS 'quota, 0 means use the default value';

COMMENT ON COLUMN "public"."tenant_capacity"."usage" IS 'usage';

COMMENT ON COLUMN "public"."tenant_capacity"."max_size" IS 'maximum size of a single config in bytes, 0 means use the default value';

COMMENT ON COLUMN "public"."tenant_capacity"."max_aggr_count" IS 'maximum number of aggregated sub-configs';

COMMENT ON COLUMN "public"."tenant_capacity"."max_aggr_size" IS 'maximum sub-config size of a single aggregated datum in bytes, 0 means use the default value';

COMMENT ON COLUMN "public"."tenant_capacity"."max_history_count" IS 'maximum number of change-history entries';

COMMENT ON COLUMN "public"."tenant_capacity"."gmt_create" IS 'creation time';

COMMENT ON COLUMN "public"."tenant_capacity"."gmt_modified" IS 'modification time';

COMMENT ON TABLE "public"."tenant_capacity" IS 'tenant capacity information table';

-- ----------------------------

-- Records of tenant_capacity

-- ----------------------------

-- ----------------------------

-- Table structure for tenant_info

-- ----------------------------

DROP TABLE IF EXISTS "public"."tenant_info";

CREATE TABLE "public"."tenant_info" (

"id" int8 NOT NULL DEFAULT nextval('tenant_info_id_seq'::regclass),

"kp" varchar(128) COLLATE "pg_catalog"."default" NOT NULL,

"tenant_id" varchar(128) COLLATE "pg_catalog"."default",

"tenant_name" varchar(128) COLLATE "pg_catalog"."default",

"tenant_desc" varchar(256) COLLATE "pg_catalog"."default",

"create_source" varchar(32) COLLATE "pg_catalog"."default",

"gmt_create" int8 NOT NULL,

"gmt_modified" int8 NOT NULL

)

;

COMMENT ON COLUMN "public"."tenant_info"."id" IS 'id';

COMMENT ON COLUMN "public"."tenant_info"."kp" IS 'kp';

COMMENT ON COLUMN "public"."tenant_info"."tenant_id" IS 'tenant_id';

COMMENT ON COLUMN "public"."tenant_info"."tenant_name" IS 'tenant_name';

COMMENT ON COLUMN "public"."tenant_info"."tenant_desc" IS 'tenant_desc';

COMMENT ON COLUMN "public"."tenant_info"."create_source" IS 'create_source';

COMMENT ON COLUMN "public"."tenant_info"."gmt_create" IS 'creation time';

COMMENT ON COLUMN "public"."tenant_info"."gmt_modified" IS 'modification time';

COMMENT ON TABLE "public"."tenant_info" IS 'tenant_info';

-- ----------------------------

-- Records of tenant_info

-- ----------------------------

-- ----------------------------

-- Table structure for users

-- ----------------------------

DROP TABLE IF EXISTS "public"."users";

CREATE TABLE "public"."users" (

"username" varchar(50) COLLATE "pg_catalog"."default" NOT NULL,

"password" varchar(500) COLLATE "pg_catalog"."default" NOT NULL,

"enabled" bool NOT NULL

)

;

-- ----------------------------

-- Records of users

-- ----------------------------

INSERT INTO "public"."users" VALUES ('nacos', '$2a$10$EuWPZHzz32dJN7jexM34MOeYirDdFAZm2kuWj7VEOJhhZkDrxfvUu', 't');

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."config_info_aggr_id_seq"

OWNED BY "public"."config_info_aggr"."id";

SELECT setval('"public"."config_info_aggr_id_seq"', 2, false);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."config_info_beta_id_seq"

OWNED BY "public"."config_info_beta"."id";

SELECT setval('"public"."config_info_beta_id_seq"', 2, false);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."config_info_id_seq"

OWNED BY "public"."config_info"."id";

SELECT setval('"public"."config_info_id_seq"', 8, true);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."config_info_tag_id_seq"

OWNED BY "public"."config_info_tag"."id";

SELECT setval('"public"."config_info_tag_id_seq"', 2, false);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."config_tags_relation_id_seq"

OWNED BY "public"."config_tags_relation"."id";

SELECT setval('"public"."config_tags_relation_id_seq"', 2, false);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."config_tags_relation_nid_seq"

OWNED BY "public"."config_tags_relation"."nid";

SELECT setval('"public"."config_tags_relation_nid_seq"', 2, false);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."group_capacity_id_seq"

OWNED BY "public"."group_capacity"."id";

SELECT setval('"public"."group_capacity_id_seq"', 2, false);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."his_config_info_nid_seq"

OWNED BY "public"."his_config_info"."nid";

SELECT setval('"public"."his_config_info_nid_seq"', 9, true);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."tenant_capacity_id_seq"

OWNED BY "public"."tenant_capacity"."id";

SELECT setval('"public"."tenant_capacity_id_seq"', 2, false);

-- ----------------------------

-- Alter sequences owned by

-- ----------------------------

ALTER SEQUENCE "public"."tenant_info_id_seq"

OWNED BY "public"."tenant_info"."id";

SELECT setval('"public"."tenant_info_id_seq"', 2, false);

-- ----------------------------

-- Indexes structure for table config_info

-- ----------------------------

CREATE UNIQUE INDEX "uk_configinfo_datagrouptenant" ON "public"."config_info" USING btree (

"data_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"group_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"tenant_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Primary Key structure for table config_info

-- ----------------------------

ALTER TABLE "public"."config_info" ADD CONSTRAINT "config_info_pkey" PRIMARY KEY ("id");

-- ----------------------------

-- Indexes structure for table config_info_aggr

-- ----------------------------

CREATE UNIQUE INDEX "uk_configinfoaggr_datagrouptenantdatum" ON "public"."config_info_aggr" USING btree (

"data_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"group_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"tenant_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"datum_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Primary Key structure for table config_info_aggr

-- ----------------------------

ALTER TABLE "public"."config_info_aggr" ADD CONSTRAINT "config_info_aggr_pkey" PRIMARY KEY ("id");

-- ----------------------------

-- Indexes structure for table config_info_beta

-- ----------------------------

CREATE UNIQUE INDEX "uk_configinfobeta_datagrouptenant" ON "public"."config_info_beta" USING btree (

"data_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"group_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"tenant_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Primary Key structure for table config_info_beta

-- ----------------------------

ALTER TABLE "public"."config_info_beta" ADD CONSTRAINT "config_info_beta_pkey" PRIMARY KEY ("id");

-- ----------------------------

-- Indexes structure for table config_info_tag

-- ----------------------------

CREATE UNIQUE INDEX "uk_configinfotag_datagrouptenanttag" ON "public"."config_info_tag" USING btree (

"data_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"group_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"tenant_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"tag_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Primary Key structure for table config_info_tag

-- ----------------------------

ALTER TABLE "public"."config_info_tag" ADD CONSTRAINT "config_info_tag_pkey" PRIMARY KEY ("id");

-- ----------------------------

-- Indexes structure for table config_tags_relation

-- ----------------------------

CREATE INDEX "idx_tenant_id" ON "public"."config_tags_relation" USING btree (

"tenant_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

CREATE UNIQUE INDEX "uk_configtagrelation_configidtag" ON "public"."config_tags_relation" USING btree (

"id" "pg_catalog"."int8_ops" ASC NULLS LAST,

"tag_name" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"tag_type" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Primary Key structure for table config_tags_relation

-- ----------------------------

ALTER TABLE "public"."config_tags_relation" ADD CONSTRAINT "config_tags_relation_pkey" PRIMARY KEY ("nid");

-- ----------------------------

-- Indexes structure for table group_capacity

-- ----------------------------

CREATE UNIQUE INDEX "uk_group_id" ON "public"."group_capacity" USING btree (

"group_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Primary Key structure for table group_capacity

-- ----------------------------

ALTER TABLE "public"."group_capacity" ADD CONSTRAINT "group_capacity_pkey" PRIMARY KEY ("id");

-- ----------------------------

-- Indexes structure for table his_config_info

-- ----------------------------

CREATE INDEX "idx_did" ON "public"."his_config_info" USING btree (

"data_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

CREATE INDEX "idx_gmt_create" ON "public"."his_config_info" USING btree (

"gmt_create" "pg_catalog"."timestamp_ops" ASC NULLS LAST

);

CREATE INDEX "idx_gmt_modified" ON "public"."his_config_info" USING btree (

"gmt_modified" "pg_catalog"."timestamp_ops" ASC NULLS LAST

);

-- ----------------------------

-- Primary Key structure for table his_config_info

-- ----------------------------

ALTER TABLE "public"."his_config_info" ADD CONSTRAINT "his_config_info_pkey" PRIMARY KEY ("nid");

-- ----------------------------

-- Indexes structure for table permissions

-- ----------------------------

CREATE UNIQUE INDEX "uk_role_permission" ON "public"."permissions" USING btree (

"role" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"resource" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"action" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Indexes structure for table roles

-- ----------------------------

CREATE UNIQUE INDEX "uk_username_role" ON "public"."roles" USING btree (

"username" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"role" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Indexes structure for table tenant_capacity

-- ----------------------------

CREATE UNIQUE INDEX "uk_tenant_id" ON "public"."tenant_capacity" USING btree (

"tenant_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);

-- ----------------------------

-- Primary Key structure for table tenant_capacity

-- ----------------------------

ALTER TABLE "public"."tenant_capacity" ADD CONSTRAINT "tenant_capacity_pkey" PRIMARY KEY ("id");

-- ----------------------------

-- Indexes structure for table tenant_info

-- ----------------------------

CREATE UNIQUE INDEX "uk_tenant_info_kptenantid" ON "public"."tenant_info" USING btree (

"kp" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST,

"tenant_id" COLLATE "pg_catalog"."default" "pg_catalog"."text_ops" ASC NULLS LAST

);
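
Once the script above has been imported, a few read-only queries can confirm that the schema landed as expected. The following is only a minimal sketch: it assumes you are connected to the nacos database created in step 6 and that the whole script ran without errors. It uses only the tables and sequences defined in this script plus PostgreSQL's standard information_schema.

```sql
-- List the tables that now exist in the public schema.
SELECT table_name
FROM information_schema.tables
WHERE table_schema = 'public'
ORDER BY table_name;

-- Confirm the seeded default account and its role.
SELECT u.username, u.enabled, r.role
FROM "public"."users" AS u
JOIN "public"."roles" AS r ON r.username = u.username;

-- Inspect the position of one of the sequences set with setval above.
-- is_called = true means the next nextval() returns last_value + 1;
-- is_called = false means the next nextval() returns last_value itself.
SELECT last_value, is_called
FROM "public"."config_info_id_seq";
```

If the second query returns no row, the INSERT statements for users and roles were probably skipped during import, and logging in to the Nacos console with the default account is unlikely to work.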

Writing these posts takes effort, so please follow my official account. Thank you.
