The code and project are available at https://download.csdn.net/download/daqinzl/88155241

Development tool: Visual Studio 2019

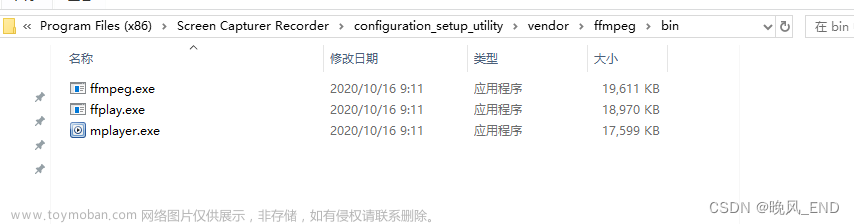

For playback, use ffplay.exe from the FFmpeg toolset and run the command: ffplay udp://224.1.1.1:5001
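FFmpeg's UDP protocol also accepts options appended to the URL as a query string (for example `pkt_size`, `buffer_size` or `fifo_size`), which is one way to tune the multicast address used here without touching the rest of the code. The sketch below only shows how such a URL could be assembled. `buildUdpUrl` is a hypothetical helper and not part of FFmpeg, and the option values are illustrative, not recommendations.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper (not an FFmpeg API): appends key=value pairs to a base
// URL as a query string, e.g. "udp://host:port?key1=value1&key2=value2".
// Assumes keys and values need no URL escaping.
std::string buildUdpUrl(
    const std::string& base,
    const std::vector<std::pair<std::string, std::string>>& options)
{
    std::string url = base;
    char separator = '?';            // first option is introduced by '?'
    for (const auto& option : options) {
        url += separator;
        url += option.first;
        url += '=';
        url += option.second;
        separator = '&';             // later options are joined by '&'
    }
    return url;
}
```

Calling `buildUdpUrl("udp://224.1.1.1:5001", {{"pkt_size", "1316"}, {"fifo_size", "18800"}})` yields `udp://224.1.1.1:5001?pkt_size=1316&fifo_size=18800`, which can be passed as the output URL or to ffplay. The value 1316 corresponds to seven 188-byte MPEG-TS packets.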

The main code is as follows:

#include "pch.h"

#include <iostream>

using namespace std;

#include <stdio.h>

#define __STDC_CONSTANT_MACROS

extern "C"

{

#include "include/libavcodec/avcodec.h"

#include "include/libavformat/avformat.h"

#include "include/libswscale/swscale.h"

#include "include/libavdevice/avdevice.h"

#include "include/libavutil/imgutils.h"

#include "include/libavutil/opt.h"

#include "include/libavutil/mathematics.h"

#include "include/libavutil/time.h"

};

#pragma comment (lib,"avcodec.lib")

#pragma comment (lib,"avdevice.lib")

#pragma comment (lib,"avfilter.lib")

#pragma comment (lib,"avformat.lib")

#pragma comment (lib,"avutil.lib")

#pragma comment (lib,"swresample.lib")

#pragma comment (lib,"swscale.lib")

int main(int argc, char* argv[])

{

AVFormatContext* m_fmt_ctx = NULL;

AVInputFormat* m_input_fmt = NULL;

int video_stream = -1;

avdevice_register_all();

avcodec_register_all();

const char* deviceName = "desktop";

const char* inputformat = "gdigrab";

int FPS = 23; //15

m_fmt_ctx = avformat_alloc_context();

m_input_fmt = av_find_input_format(inputformat);

AVDictionary* deoptions = NULL;

av_dict_set_int(&deoptions, "framerate", FPS, AV_DICT_MATCH_CASE);

av_dict_set_int(&deoptions, "rtbufsize", 3041280 * 100 * 5, 0);

// If this is not set, the picture is corrupted when the input source is a live stream. Unit: bytes

//av_dict_set(&deoptions, "buffer_size", "10485760", 0);

//av_dict_set(&deoptions, "reuse", "1", 0);

int ret = avformat_open_input(&m_fmt_ctx, deviceName, m_input_fmt, &deoptions);

if (ret != 0) {

return ret;

}

av_dict_free(&deoptions);

ret = avformat_find_stream_info(m_fmt_ctx, NULL);

if (ret < 0) {

return ret;

}

av_dump_format(m_fmt_ctx, 0, deviceName, 0);

video_stream = av_find_best_stream(m_fmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);

if (video_stream < 0) {

return -1;

}

AVCodecContext * _codec_ctx = m_fmt_ctx->streams[video_stream]->codec;

AVCodec* _codec = avcodec_find_decoder(_codec_ctx->codec_id);

if (_codec == NULL) {

return -1;

}

ret = avcodec_open2(_codec_ctx, _codec, NULL);

if (ret != 0) {

return -1;

}

int width = m_fmt_ctx->streams[video_stream]->codec->width;

int height = m_fmt_ctx->streams[video_stream]->codec->height;

int fps = m_fmt_ctx->streams[video_stream]->codec->framerate.num > 0 ? m_fmt_ctx->streams[video_stream]->codec->framerate.num : 25;

AVPixelFormat videoType = m_fmt_ctx->streams[video_stream]->codec->pix_fmt;

std::cout << "avstream timebase : " << m_fmt_ctx->streams[video_stream]->time_base.num << " / " << m_fmt_ctx->streams[video_stream]->time_base.den << endl;

AVDictionary* enoptions = 0;

//av_dict_set(&enoptions, "preset", "superfast", 0);

//av_dict_set(&enoptions, "tune", "zerolatency", 0);

av_dict_set(&enoptions, "preset", "ultrafast", 0);

av_dict_set(&enoptions, "tune", "zerolatency", 0);

//TODO

//av_dict_set(&enoptions, "pkt_size", "1316", 0); //Maximum UDP packet size

// fifo_size, bitrate and buffer_size are UDP protocol options rather than encoder options

av_dict_set(&enoptions, "fifo_size", "18800", 0);

av_dict_set(&enoptions, "buffer_size", "0", 1);

av_dict_set(&enoptions, "bitrate", "11000000", 0);

av_dict_set(&enoptions, "buffer_size", "1000000", 0);//1316

//av_dict_set(&enoptions, "reuse", "1", 0);

AVCodec* codec = avcodec_find_encoder(AV_CODEC_ID_H264);

if (!codec)

{

std::cout << "avcodec_find_encoder failed!" << endl;

return -1;

}

AVCodecContext* vc = avcodec_alloc_context3(codec);

if (!vc)

{

std::cout << "avcodec_alloc_context3 failed!" << endl;

return -1;

}

std::cout << "avcodec_alloc_context3 success!" << endl;

vc->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

vc->codec_id = AV_CODEC_ID_H264;

vc->codec_type = AVMEDIA_TYPE_VIDEO;

vc->pix_fmt = AV_PIX_FMT_YUV420P;

vc->width = width;

vc->height = height;

vc->time_base.num = 1;

vc->time_base.den = FPS;

vc->framerate = { FPS,1 };

vc->bit_rate = 10241000;

vc->gop_size = 120;

vc->qmin = 10;

vc->qmax = 51;

vc->max_b_frames = 0;

vc->profile = FF_PROFILE_H264_MAIN;

ret = avcodec_open2(vc, codec, &enoptions);

if (ret != 0)

{

return ret;

}

std::cout << "avcodec_open2 success!" << endl;

av_dict_free(&enoptions);

SwsContext *vsc = nullptr;

vsc = sws_getCachedContext(vsc,

width, height, (AVPixelFormat)videoType, // source width, height and pixel format

width, height, AV_PIX_FMT_YUV420P, // destination width, height and pixel format

SWS_BICUBIC, // scaling algorithm used when the size changes

0, 0, 0

);

if (!vsc)

{

cout << "sws_getCachedContext failed!";

return -1;

}

AVFrame* yuv = av_frame_alloc();

yuv->format = AV_PIX_FMT_YUV420P;

yuv->width = width;

yuv->height = height;

yuv->pts = 0;

ret = av_frame_get_buffer(yuv, 32);

if (ret != 0)

{

return ret;

}

//const char* rtmpurl = "rtmp://192.168.0.105:1935/live/desktop";

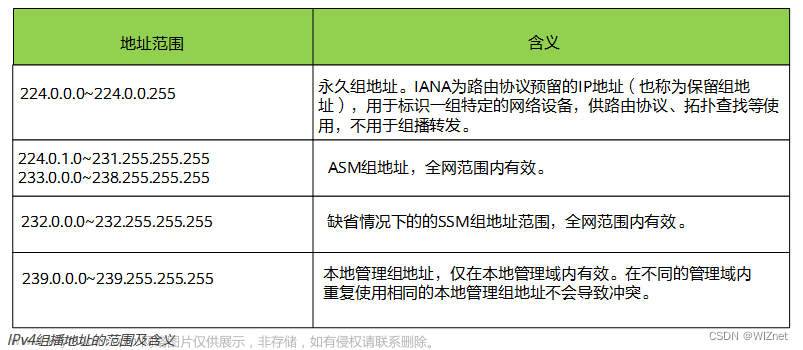

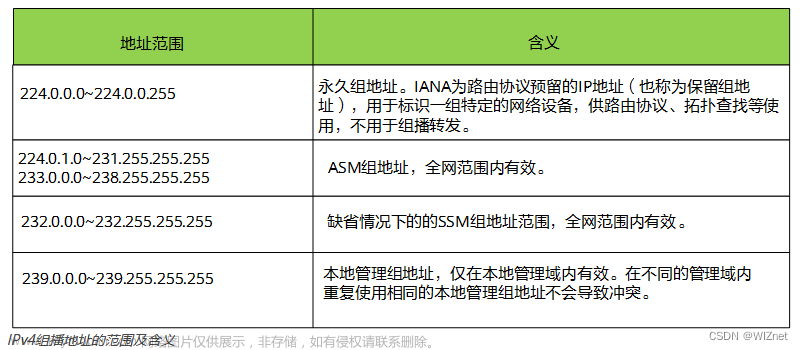

const char* rtmpurl = "udp://224.1.1.1:5001";

AVFormatContext * ic = NULL;

//ret = avformat_alloc_output_context2(&ic, 0, "flv", rtmpurl);

ret = avformat_alloc_output_context2(&ic, NULL, "mpegts", rtmpurl);//UDP

if (ret < 0)

{

return ret;

}

AVStream* st = avformat_new_stream(ic, NULL);

if (!st)

{

return -1;

}

st->codecpar->codec_tag = 0;

avcodec_parameters_from_context(st->codecpar, vc);

av_dump_format(ic, 0, rtmpurl, 1);

ret = avio_open(&ic->pb, rtmpurl, AVIO_FLAG_WRITE);

if (ret != 0)

{

return ret;

}

ret = avformat_write_header(ic, NULL);

if (ret != 0)

{

return ret;

}

AVPacket* packet = av_packet_alloc();

AVPacket* Encodepacket = av_packet_alloc();

int frameIndex = 0;

int EncodeIndex = 0;

AVFrame* rgb = av_frame_alloc();

AVBitStreamFilterContext* h264bsfc = av_bitstream_filter_init("h264_mp4toannexb");

long long startpts = m_fmt_ctx->start_time;

long long lastpts = 0;

long long duration = av_rescale_q(1, { 1,FPS }, { 1,AV_TIME_BASE });

int got_picture = 0;

while (frameIndex < 2000000)

{

ret = av_read_frame(m_fmt_ctx, packet);

if (ret < 0) {

break;

}

if (packet->stream_index == video_stream)

{

ret = avcodec_decode_video2(_codec_ctx, rgb, &got_picture, packet);

if (ret < 0) {

printf("Decode Error.\n");

return ret;

}

if (got_picture) {

int h = sws_scale(vsc, rgb->data, rgb->linesize, 0, height, // source data

yuv->data, yuv->linesize);

yuv->pts = frameIndex; // frame pts is expressed in vc->time_base units (1/FPS)

frameIndex++;

ret = avcodec_encode_video2(vc, Encodepacket, yuv, &got_picture);

if (ret < 0) {

printf("Failed to encode!\n");

break;

}

if (got_picture == 1) {

Encodepacket->pts = av_rescale_q(EncodeIndex, vc->time_base, st->time_base);

Encodepacket->dts = Encodepacket->pts;

std::cout << "frameindex : " << EncodeIndex << " pts : " << Encodepacket->pts << " dts: " << Encodepacket->dts << " encodeSize:" << Encodepacket->size << " curtime - lasttime " << Encodepacket->pts - lastpts << endl;

lastpts = Encodepacket->pts;

ret = av_interleaved_write_frame(ic, Encodepacket);

EncodeIndex++;

av_packet_unref(Encodepacket);

}

}

}

av_packet_unref(packet);

}

ret = avcodec_send_frame(vc, NULL);

while (ret >= 0) {

ret = avcodec_receive_packet(vc, Encodepacket);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {

break;

}

if (ret < 0) {

break;

}

ret = av_interleaved_write_frame(ic, Encodepacket);

EncodeIndex++;

}

av_write_trailer(ic);

av_packet_free(&packet);

av_packet_free(&Encodepacket);

av_frame_free(&rgb);

av_frame_free(&yuv);

av_bitstream_filter_close(h264bsfc);

h264bsfc = NULL;

if (vsc)

{

sws_freeContext(vsc);

vsc = NULL;

}

if (_codec_ctx)

avcodec_close(_codec_ctx);

_codec_ctx = NULL;

_codec = NULL;

if (vc)

avcodec_free_context(&vc);

if (m_fmt_ctx)

avformat_close_input(&m_fmt_ctx);

if (ic && !(ic->oformat->flags & AVFMT_NOFILE))

avio_closep(&ic->pb);

if (ic) {

avformat_free_context(ic);

ic = NULL;

}

m_input_fmt = NULL;

return 0;

}
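In the encode loop, each packet's pts is converted from the encoder time base (`vc->time_base`, 1/FPS) to the stream time base (`st->time_base`) with `av_rescale_q`. FFmpeg's MPEG-TS muxer normally sets the stream time base to 1/90000, so with FPS = 23 consecutive packets should be about 3913 ticks apart. The arithmetic can be sketched without FFmpeg as follows. `Rational` and `rescaleTs` are hypothetical stand-ins written for illustration, not the library's own implementation.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for FFmpeg's AVRational, used only in this sketch.
struct Rational {
    int num;
    int den;
};

// Converts timestamp `ts` from time base `from` to time base `to`, rounding to
// the nearest integer. Assumes ts >= 0, positive numerators and denominators,
// and that the intermediate products fit in 64 bits.
int64_t rescaleTs(int64_t ts, Rational from, Rational to)
{
    const int64_t numerator = ts * from.num * to.den;
    const int64_t denominator = static_cast<int64_t>(from.den) * to.num;
    return (numerator + denominator / 2) / denominator;
}
```

For example, `rescaleTs(1, {1, 23}, {1, 90000})` gives 3913 and `rescaleTs(23, {1, 23}, {1, 90000})` gives 90000, that is, exactly one second of stream time after 23 frames.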
