VGG was the first network to introduce the idea of blocks, which turns model design into a reusable template.

Characteristics of the VGG model

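Like AlexNet and LeNet, the VGG network can be divided into two parts: the first consists mainly of convolutional and pooling layers, and the second consists of fully connected layers.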

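VGG has 5 convolutional blocks: the first two contain one convolutional layer each, and the last three contain two each. That gives 2 * 1 + 3 * 2 = 8 convolutional layers, followed by 3 fully connected layers, for 11 layers with weights in total, which is why the network is called VGG11.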
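The layer count above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes each block is described by a (number of conv layers, output channels) pair, as in the `conv_arch` tuple used later in this post; the helper name `count_weight_layers` is made up for illustration.

```python
# Hypothetical helper: count the layers that carry weights in a VGG-style network.
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

def count_weight_layers(conv_arch, num_fc_layers=3):
    # Sum the conv layers of every block, then add the fully connected layers
    num_conv_layers = sum(num_convs for num_convs, _ in conv_arch)
    return num_conv_layers + num_fc_layers

print(count_weight_layers(conv_arch))  # 8 conv + 3 fully connected = 11
```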

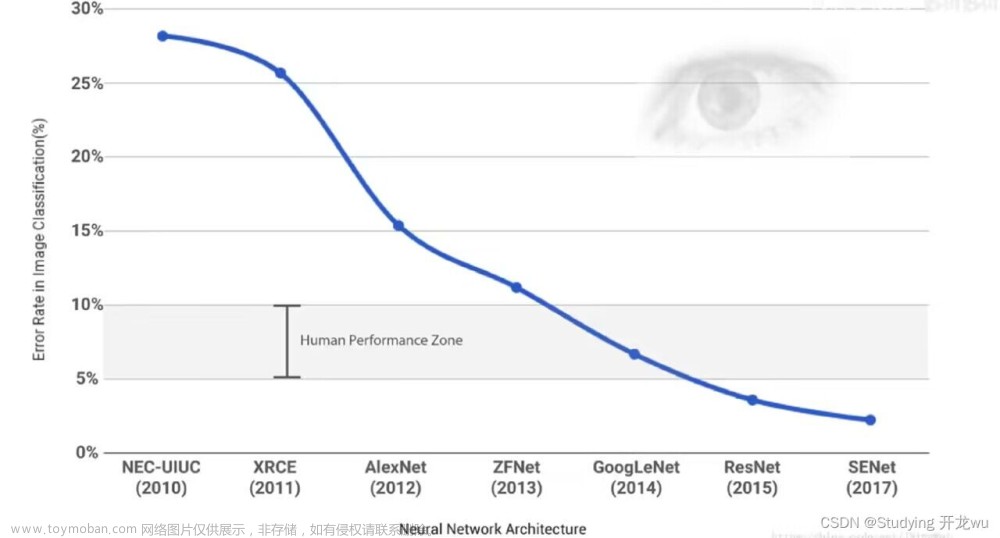

Comparison of the AlexNet and VGG architectures

Source: https://www.toymoban.com/news/detail-641373.html


import time

import torch
from torch import nn
from d2l import torch as d2l


# Build one VGG block: num_convs 3x3 convolutions (each followed by ReLU), then a 2x2 max pool
def vgg_block(num_convs, in_channels, out_channels):
    layers = []
    for _ in range(num_convs):
        layers.append(nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
        layers.append(nn.ReLU())
        # Every convolution after the first takes out_channels as its input
        in_channels = out_channels
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
    # *layers unpacks the list so that each layer is passed to nn.Sequential
    # as a separate positional argument, building a sequential model
    return nn.Sequential(*layers)
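To make the `*layers` unpacking concrete, here is a small pure-Python sketch. The function `collect` is a hypothetical stand-in for `nn.Sequential` that only records the positional arguments it receives, and the strings are placeholders for real layers.

```python
# Hypothetical stand-in for nn.Sequential: it simply returns its positional arguments.
def collect(*modules):
    return modules

layers = ['conv', 'relu', 'pool']  # placeholder strings instead of real layers

unpacked = collect(*layers)     # equivalent to collect('conv', 'relu', 'pool')
not_unpacked = collect(layers)  # a single argument: the list itself

print(len(unpacked))      # 3
print(len(not_unpacked))  # 1
```

Without the star, the callee would see one list rather than three separate layers.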

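# One (number of conv layers, output channels) pair per convolutional block
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))
# 5 blocks: the first two have one conv layer each, the last three have two each.
# 2 * 1 + 3 * 2 = 8 conv layers plus 3 fully connected layers, hence the name VGG11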

def vgg(conv_arch):
    conv_blks = []
    in_channels = 1  # single-channel (grayscale) input
    # Convolutional part
    for (num_convs, out_channels) in conv_arch:
        conv_blks.append(vgg_block(num_convs, in_channels, out_channels))
        in_channels = out_channels
    return nn.Sequential(
        # The 5 convolutional blocks
        *conv_blks, nn.Flatten(),
        # The 3 fully connected layers; a 224x224 input is halved five times, down to 7x7
        nn.Linear(out_channels * 7 * 7, 4096), nn.ReLU(), nn.Dropout(0.5),
        nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(0.5),
        nn.Linear(4096, 10)
    )

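net = vgg(conv_arch)
# Pass a random single-channel 224x224 input through the network and print each layer's output shape
X = torch.randn(size=(1, 1, 224, 224))
for blk in net:
    X = blk(X)
    print(blk.__class__.__name__, 'output shape:\t', X.shape)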

Sequential output shape: torch.Size([1, 64, 112, 112])

Sequential output shape: torch.Size([1, 128, 56, 56])

Sequential output shape: torch.Size([1, 256, 28, 28])

Sequential output shape: torch.Size([1, 512, 14, 14])

Sequential output shape: torch.Size([1, 512, 7, 7])

Flatten output shape: torch.Size([1, 25088])

Linear output shape: torch.Size([1, 4096])

ReLU output shape: torch.Size([1, 4096])

Dropout output shape: torch.Size([1, 4096])

Linear output shape: torch.Size([1, 4096])

ReLU output shape: torch.Size([1, 4096])

Dropout output shape: torch.Size([1, 4096])

Linear output shape: torch.Size([1, 10])
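The shapes printed above can be reproduced without PyTorch by applying the standard output-size formula, floor((n + 2p - k) / s) + 1, to each layer. The sketch below is only a sanity check under the assumptions used in this post (3x3 convolutions with padding 1, 2x2 max pooling with stride 2, a 224x224 input); the function names are hypothetical.

```python
def conv_out_size(size, kernel_size, padding=0, stride=1):
    # Standard output-size formula for a convolution or pooling window
    return (size + 2 * padding - kernel_size) // stride + 1

def trace_vgg_shapes(conv_arch, size=224):
    shapes = []
    for num_convs, out_channels in conv_arch:
        for _ in range(num_convs):
            # 3x3 convolution with padding 1 keeps the spatial size
            size = conv_out_size(size, kernel_size=3, padding=1)
        # 2x2 max pooling with stride 2 halves the spatial size
        size = conv_out_size(size, kernel_size=2, stride=2)
        shapes.append((out_channels, size, size))
    return shapes

conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))
shapes = trace_vgg_shapes(conv_arch)
channels, height, width = shapes[-1]
print(shapes)                     # per-block (channels, height, width)
print(channels * height * width)  # number of features after Flatten
```

The last block yields 512 channels at 7x7, which is where the `out_channels * 7 * 7` input size of the first fully connected layer comes from.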

To train on the Fashion-MNIST dataset, we use a version of VGG11 with a reduced number of channels.

# VGG11 is more computationally expensive than AlexNet, so we build a network with fewer channels
ratio = 4
# The number of conv layers pair[0] is unchanged; the channel count pair[1] is divided by 4
small_conv_arch = [(pair[0], pair[1] // ratio) for pair in conv_arch]
net = vgg(small_conv_arch)
X = torch.randn(size=(1, 1, 224, 224))
for blk in net:
    X = blk(X)
    print(blk.__class__.__name__, 'output shape:\t', X.shape)

Sequential output shape: torch.Size([1, 16, 112, 112])

Sequential output shape: torch.Size([1, 32, 56, 56])

Sequential output shape: torch.Size([1, 64, 28, 28])

Sequential output shape: torch.Size([1, 128, 14, 14])

Sequential output shape: torch.Size([1, 128, 7, 7])

Flatten output shape: torch.Size([1, 6272])

Linear output shape: torch.Size([1, 4096])

ReLU output shape: torch.Size([1, 4096])

Dropout output shape: torch.Size([1, 4096])

Linear output shape: torch.Size([1, 4096])

ReLU output shape: torch.Size([1, 4096])

Dropout output shape: torch.Size([1, 4096])

Linear output shape: torch.Size([1, 10])
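To get a rough sense of what the channel reduction saves, the parameter counts of the two networks can be estimated by hand. The sketch below assumes a single input channel, 3x3 convolutions with biases, a 7x7 final feature map, and the same 4096-4096-10 fully connected head as the code in this post; `count_params` is a hypothetical helper, not part of d2l or PyTorch.

```python
def count_params(conv_arch, in_channels=1, num_classes=10, final_size=7):
    # Each 3x3 convolution has 3*3*in*out weights plus out biases
    conv_params = 0
    for num_convs, out_channels in conv_arch:
        for _ in range(num_convs):
            conv_params += 3 * 3 * in_channels * out_channels + out_channels
            in_channels = out_channels
    # Each fully connected layer has fan_in*fan_out weights plus fan_out biases
    fc_sizes = [in_channels * final_size * final_size, 4096, 4096, num_classes]
    fc_params = 0
    for fan_in, fan_out in zip(fc_sizes, fc_sizes[1:]):
        fc_params += fan_in * fan_out + fan_out
    return conv_params, fc_params

conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))
small_conv_arch = [(n, c // 4) for n, c in conv_arch]

full_conv, full_fc = count_params(conv_arch)
small_conv, small_fc = count_params(small_conv_arch)
print(f'full:  conv={full_conv:,} fc={full_fc:,}')
print(f'small: conv={small_conv:,} fc={small_fc:,}')
```

Because both the input and output channels of most convolutions shrink by a factor of 4, the convolutional parameters drop by roughly 16x. The fully connected layers still hold most of the parameters in both networks, since their width of 4096 is unchanged.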

# Start timing
start_time = time.time()
lr, num_epochs, batch_size = 0.05, 10, 128
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=224)
d2l.train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())
# Stop timing
end_time = time.time()
run_time = end_time - start_time
# Print the elapsed time in seconds, to two decimal places
print(f'{run_time:.2f}s')
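The same start/stop pattern can be wrapped in a small context manager so that it is reusable. This is only a sketch: `timer` is a hypothetical helper, it uses `time.perf_counter()` (a monotonic clock meant for measuring intervals) instead of `time.time()`, and the workload here is a dummy loop standing in for the training call.

```python
import time
from contextlib import contextmanager

@contextmanager
def timer(label):
    # Record the start time, run the wrapped block, then report the elapsed seconds
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        print(f'{label}: {elapsed:.2f}s')

# Dummy workload standing in for the training call
with timer('dummy workload'):
    total = sum(i * i for i in range(100_000))
```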
