Deploying a Highly Available k8s 1.27 Cluster with kubeadm

This deployment combines an external etcd cluster, a load balancer (LB), and the k8s cluster, as shown in the figure below:

Software and versions: CentOS 7.9 (7u9), Linux kernel 5.4, docker-ce 23.0.6, cri-dockerd v0.3.1, k8s 1.27.1

I. Preparing the k8s cluster nodes

1.1 Configure the hostname

# hostnamectl set-hostname k8s-xxx

Replace xxx with the hostname assigned to the current host.

1.2 Configure the host IP address

[root@xxx ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

[root@xxx ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="none"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens33"

UUID="063bfc1c-c7c2-4c62-89d0-35ae869e44e7"

DEVICE="ens33"

ONBOOT="yes"

IPADDR="192.168.10.16X"    # replace X so that this is the IP address assigned to the current host

PREFIX="24"

GATEWAY="192.168.10.2"

DNS1="119.29.29.29"

[root@xxx ~]# systemctl restart network

1.3 Configure hostname-to-IP resolution

[root@xxx ~]# vim /etc/hosts

[root@xxx ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.10.160 k8s-master01

192.168.10.161 k8s-master02

192.168.10.162 k8s-master03

192.168.10.163 k8s-worker01

192.168.10.164 k8s-worker02

1.4 Host security settings

# systemctl disable --now firewalld

# sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# sestatus

Note: the SELinux change takes effect only after the machine is rebooted.

1.5 Time synchronization

# crontab -l

0 */1 * * * /usr/sbin/ntpdate time1.aliyun.com
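
The listing above shows the resulting crontab but not how the entry gets there. A minimal sketch of one way to add it without creating duplicates, assuming the same schedule and ntpdate command; the helper name ensure_cron_entry is made up for illustration:

```shell
#!/bin/sh
# ensure_cron_entry ENTRY
# Reads an existing crontab on stdin and prints it on stdout, appending
# ENTRY only if an identical line is not already present.
# (Hypothetical helper, not part of any package.)
ensure_cron_entry() (
    entry=$1
    current=$(cat)
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    if ! printf '%s\n' "$current" | grep -qxF -- "$entry"; then
        printf '%s\n' "$entry"
    fi
)

# Usage (as root on every host):
#   crontab -l 2>/dev/null \
#     | ensure_cron_entry '0 */1 * * * /usr/sbin/ntpdate time1.aliyun.com' \
#     | crontab -
```

Running the pipeline a second time leaves the crontab unchanged, which makes it safe to repeat on hosts that were already configured.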

1.6 Upgrade the operating system kernel

Perform these steps on all hosts.

Import the ELRepo GPG key

# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

Install the ELRepo YUM repository

# yum -y install https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

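Install the kernel-lt package. In ELRepo, kernel-ml is the mainline stable kernel and kernel-lt is the long-term support kernel; kernel-lt provides the 5.4 kernel listed above.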

# yum --enablerepo="elrepo-kernel" -y install kernel-lt.x86_64

Set the default GRUB2 boot entry to 0

# grub2-set-default 0

Regenerate the GRUB2 configuration file

# grub2-mkconfig -o /boot/grub2/grub.cfg

After the upgrade, reboot so that the new kernel takes effect.

# reboot

After the reboot, verify that the running kernel is the upgraded version.

# uname -r

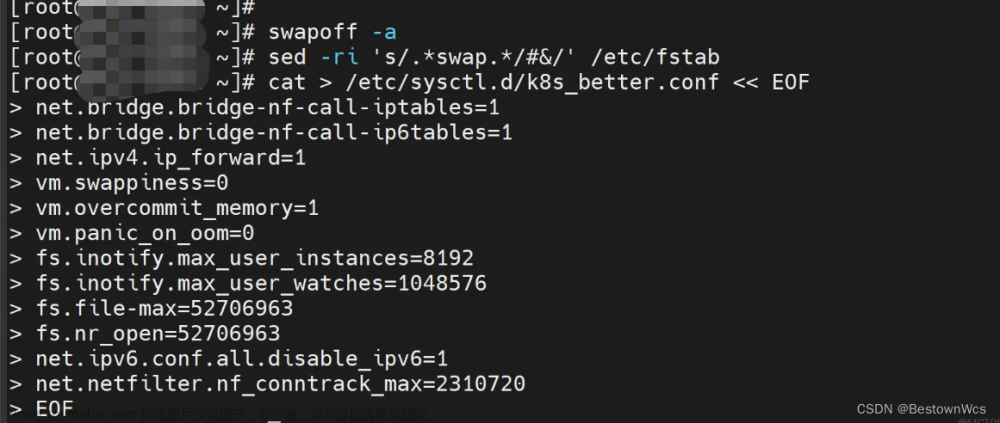

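1.7 Configure kernel IP forwarding and bridge filtering

Perform these steps on all hosts.

Add the configuration file for bridge filtering and kernel forwarding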

# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

EOF

Load the br_netfilter module

# modprobe br_netfilter

Check that it is loaded

# lsmod | grep br_netfilter

br_netfilter 22256 0

bridge 151336 1 br_netfilter
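
The steps above write /etc/sysctl.d/k8s.conf and load br_netfilter once, but they neither apply the new sysctl values nor make the module load again after a reboot. A minimal sketch of both, assuming a systemd host where /etc/modules-load.d/ is read at boot (as on CentOS 7); the function name and the drop-in file name k8s.conf are arbitrary choices:

```shell
#!/bin/sh
# persist_br_netfilter [ROOT]
# Writes a modules-load.d drop-in so that br_netfilter is loaded at boot.
# ROOT defaults to "/" and exists only so the function can be tried
# against a scratch directory first. Prints the path it wrote.
persist_br_netfilter() (
    root=${1:-/}
    dir="${root%/}/etc/modules-load.d"
    mkdir -p "$dir"
    printf 'br_netfilter\n' > "$dir/k8s.conf"
    echo "$dir/k8s.conf"
)

# Usage (as root on every host):
#   persist_br_netfilter
#   modprobe br_netfilter
#   sysctl --system    # re-reads /etc/sysctl.d/*.conf, including k8s.conf
```

Loading the module before running sysctl --system matters, because the net.bridge.* keys only exist once br_netfilter is loaded.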

1.8 Install ipset and ipvsadm

Perform these steps on all hosts.

Install ipset and ipvsadm

# yum -y install ipset ipvsadm

Configure how the IPVS modules are loaded

Add the modules that need to be loaded

# cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

Set permissions, run the script, and check that the modules are loaded

# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

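1.9 Disable the swap partition

The permanent change below takes effect only after the operating system is rebooted. To avoid a reboot, swap can be turned off temporarily with swapoff -a.

To disable the swap partition permanently (requires a reboot):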

# cat /etc/fstab

......

# /dev/mapper/centos-swap swap swap defaults 0 0

Comment out the swap entry by adding # at the start of the line, as shown above.
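
Editing /etc/fstab by hand on five hosts is easy to get wrong. A minimal sketch of the same edit done with sed, assuming swap entries have the usual whitespace-separated layout shown above; the function name is made up, and it only prints the result so it can be reviewed before anything is overwritten:

```shell
#!/bin/sh
# comment_out_swap FSTAB
# Prints FSTAB with every active swap entry commented out. Lines that are
# already comments are left alone. Nothing is modified in place.
comment_out_swap() {
    sed -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Usage (as root):
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap /etc/fstab.bak > /etc/fstab
#   swapoff -a
```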

1.10 Configure passwordless SSH login

Generate an SSH key pair on k8s-master01 and create the authorized_keys file

[root@k8s-master01 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:4khS62jcABgQd7BIAEu/soZCf7ngqZLD1P4i0rrz+Ho root@k8s-master01

The key's randomart image is:

+---[RSA 2048]----+

|X+o.. |

|+=.o |

|= ... |

| . ... |

| o+.o . S |

|oo+O o.. |

|===o+o. |

|O=Eo+ . |

|B@=ooo |

+----[SHA256]-----+

[root@k8s-master01 ~]# cd /root/.ssh

[root@k8s-master01 .ssh]# ls

id_rsa id_rsa.pub

[root@k8s-master01 .ssh]# cp id_rsa.pub authorized_keys

[root@k8s-master01 .ssh]# ls

authorized_keys id_rsa id_rsa.pub

[root@k8s-master01 ~]# for i in 161 162 163 164

> do

> scp -r /root/.ssh 192.168.10.$i:/root/

> done

Verify on every host that passwordless login works in both directions.
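
The verification can be scripted instead of logging in by hand. A minimal sketch, assuming the host names from /etc/hosts above; check_ssh and the SSH override variable are hypothetical names introduced here, while BatchMode and ConnectTimeout are standard OpenSSH options:

```shell
#!/bin/sh
# check_ssh HOST...
# Tries a non-interactive login to each host and reports the result.
# BatchMode=yes makes ssh fail instead of prompting for a password.
# SSH is a hook for substituting another client while trying the
# function out; it is not an OpenSSH setting.
check_ssh() (
    rc=0
    for host in "$@"; do
        if "${SSH:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
            echo "$host ok"
        else
            echo "$host FAILED"
            rc=1
        fi
    done
    exit "$rc"
)

# Usage (run on each host in turn):
#   check_ssh k8s-master01 k8s-master02 k8s-master03 k8s-worker01 k8s-worker02
```

In batch mode a host whose key has not been accepted yet is also reported as FAILED, so a first manual login may still be needed.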

II. Deploying the etcd cluster

The etcd cluster is deployed on k8s-master01, k8s-master02, and k8s-master03.

2.1 Install etcd on the k8s master nodes

# yum -y install etcd

2.2 Generate the etcd configuration files on k8s-master01

[root@k8s-master01 ~]# vim etcd_install.sh

[root@k8s-master01 ~]# cat etcd_install.sh

etcd1=192.168.10.160

etcd2=192.168.10.161

etcd3=192.168.10.162

TOKEN=smartgo

ETCDHOSTS=($etcd1 $etcd2 $etcd3)

NAMES=("k8s-master01" "k8s-master02" "k8s-master03")

for i in "${!ETCDHOSTS[@]}"; do

HOST=${ETCDHOSTS[$i]}

NAME=${NAMES[$i]}

cat << EOF > /tmp/$NAME.conf

# [member]

ETCD_NAME=$NAME

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://$HOST:2380"

ETCD_LISTEN_CLIENT_URLS="http://$HOST:2379,http://127.0.0.1:2379"

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$HOST:2380"

ETCD_INITIAL_CLUSTER="${NAMES[0]}=http://${ETCDHOSTS[0]}:2380,${NAMES[1]}=http://${ETCDHOSTS[1]}:2380,${NAMES[2]}=http://${ETCDHOSTS[2]}:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="$TOKEN"

ETCD_ADVERTISE_CLIENT_URLS="http://$HOST:2379"

EOF

done

ls /tmp/k8s-master*

scp /tmp/k8s-master02.conf $etcd2:/etc/etcd/etcd.conf

scp /tmp/k8s-master03.conf $etcd3:/etc/etcd/etcd.conf

cp /tmp/k8s-master01.conf /etc/etcd/etcd.conf

rm -f /tmp/k8s-master*.conf

[root@k8s-master01 ~]# bash etcd_install.sh

2.3 Start etcd on the k8s master nodes

# systemctl enable --now etcd

2.4 Check that the etcd cluster is healthy

[root@k8s-master01 ~]# etcdctl member list

5c6e7b164c56b0ef: name=k8s-master03 peerURLs=http://192.168.10.162:2380 clientURLs=http://192.168.10.162:2379 isLeader=false

b069d39a050d98b3: name=k8s-master02 peerURLs=http://192.168.10.161:2380 clientURLs=http://192.168.10.161:2379 isLeader=false

f83acb046b7e55b3: name=k8s-master01 peerURLs=http://192.168.10.160:2380 clientURLs=http://192.168.10.160:2379 isLeader=true

[root@k8s-master01 ~]# etcdctl cluster-health

member 5c6e7b164c56b0ef is healthy: got healthy result from http://192.168.10.162:2379

member b069d39a050d98b3 is healthy: got healthy result from http://192.168.10.161:2379

member f83acb046b7e55b3 is healthy: got healthy result from http://192.168.10.160:2379

cluster is healthy
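
The cluster-health command and the isLeader field above belong to the etcdctl v2 API, while the Kubernetes API server talks to etcd through the v3 API. A minimal sketch of the equivalent v3 check, assuming the packaged etcdctl supports v3 when ETCDCTL_API=3 is set; the helper etcd_endpoints is a made-up name that only assembles the endpoint list:

```shell
#!/bin/sh
# etcd_endpoints IP...
# Joins the given addresses into the comma-separated client URL list that
# etcdctl's --endpoints flag expects (plain HTTP on port 2379, matching
# the configuration generated above).
etcd_endpoints() (
    out=
    for ip in "$@"; do
        out="${out:+$out,}http://$ip:2379"
    done
    printf '%s\n' "$out"
)

# Usage on any master node:
#   ETCDCTL_API=3 etcdctl \
#     --endpoints="$(etcd_endpoints 192.168.10.160 192.168.10.161 192.168.10.162)" \
#     endpoint health
```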

III. Deploying the load balancer: haproxy + keepalived

In this setup it is sufficient to deploy the load balancer on k8s-master01 and k8s-master02.

3.1 Install haproxy and keepalived

# yum -y install haproxy keepalived

3.2 HAProxy configuration

The same haproxy configuration file must be present on both k8s-master01 and k8s-master02.

# cat > /etc/haproxy/haproxy.cfg << EOF

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-masters

backend k8s-masters

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 192.168.10.160:6443 check

server k8s-master02 192.168.10.161:6443 check

server k8s-master03 192.168.10.162:6443 check

EOF

3.3 KeepAlived

Note that the master and backup configurations differ.

ha1 (k8s-master01):

# cat >/etc/keepalived/keepalived.conf<<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.10.160

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.10.200

}

track_script {

chk_apiserver

}

}

EOF

ha2 (k8s-master02):

# cat >/etc/keepalived/keepalived.conf<<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

mcast_src_ip 192.168.10.161

virtual_router_id 51

priority 99

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.10.200

}

track_script {

chk_apiserver

}

}

EOF
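
The two files differ in state, mcast_src_ip, and priority, and the priority gap interacts with the weight of chk_apiserver. A small worked example of that arithmetic, written as a simplified model rather than keepalived's actual code; the function name is made up:

```shell
#!/bin/sh
# effective_priority BASE WEIGHT CHECK_OK
# Models how a vrrp_script with a negative weight affects VRRP priority:
# while the check is failing, the priority is lowered by |WEIGHT|.
# CHECK_OK is 1 when the script succeeds and 0 when it fails.
effective_priority() {
    if [ "$3" -eq 1 ]; then
        echo "$1"
    else
        echo $(( $1 + $2 ))
    fi
}

effective_priority 100 -5 1   # ha1, check passing  -> 100
effective_priority 100 -5 0   # ha1, check failing  -> 95
effective_priority 99 -5 1    # ha2, check passing  -> 99
```

With these values a failing check drops ha1 to 95, below ha2's 99, so the virtual IP 192.168.10.200 moves to ha2. A priority gap larger than 5 would prevent that failover, so the two settings have to be chosen together.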

3.4 Health check script

Configure this on both ha1 and ha2.

# cat > /etc/keepalived/check_apiserver.sh <<"EOF"

#!/bin/bash

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

# chmod +x /etc/keepalived/check_apiserver.sh

3.5 Start the services and verify

# systemctl daemon-reload

# systemctl enable --now haproxy

# systemctl enable --now keepalived

# ip address show

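IV. Deploying the highly available K8S cluster

4.1 Install Docker

4.1.1 Prepare the Docker YUM repository

Use the Alibaba Cloud open-source software mirror site.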

# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

4.1.2 Install Docker

# yum -y install docker-ce

4.1.3 Start the Docker service

# systemctl enable --now docker

4.1.4 Change the cgroup driver

The file /etc/docker/daemon.json does not exist by default and has to be created.

Add the following content to /etc/docker/daemon.json:

# cat > /etc/docker/daemon.json << EOF

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

# systemctl restart docker

4.2 Install cri-dockerd

# wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.1/cri-dockerd-0.3.1-3.el7.x86_64.rpm

# yum install cri-dockerd-0.3.1-3.el7.x86_64.rpm

# vim /usr/lib/systemd/system/cri-docker.service

Change line 10 of the file to the following:

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.k8s.io/pause:3.9 --container-runtime-endpoint fd://

# systemctl start cri-docker

# systemctl enable cri-docker

4.3 Prepare the K8S YUM repository

# cat > /etc/yum.repos.d/k8s.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

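4.4 Install the K8S packages

4.4.1 Package installation

Install the packages on all nodes.

Default installation (latest version in the repository):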

# yum -y install kubeadm kubelet kubectl

List the available versions:

# yum list kubeadm.x86_64 --showduplicates | sort -r

# yum list kubelet.x86_64 --showduplicates | sort -r

# yum list kubectl.x86_64 --showduplicates | sort -r

Install a specific version:

# yum -y install kubeadm-1.27.X-0 kubelet-1.27.X-0 kubectl-1.27.X-0
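
The X in the command above stands for the patch release. A minimal sketch that fills it in for the 1.27.1 release used in this article; the function name is made up, and the -0 release suffix simply follows the pattern of the command above, so check it against the yum list output first:

```shell
#!/bin/sh
# k8s_packages VERSION
# Prints the versioned package names for kubeadm, kubelet and kubectl,
# ready to be passed to yum.
k8s_packages() {
    echo "kubeadm-$1-0 kubelet-$1-0 kubectl-$1-0"
}

# Usage (as root on every node):
#   yum -y install $(k8s_packages 1.27.1)
```

Pinning the same version on every node avoids version skew between kubeadm, kubelet, and the control plane.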

4.4.2 kubelet configuration

To keep the cgroup driver used by kubelet consistent with the one used by Docker, it is recommended to edit the following file.

# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

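Only enable kubelet to start at boot. Its configuration file has not been generated yet, so it will start on its own once the cluster has been initialized.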

# systemctl enable kubelet

4.5 Prepare the container images for the k8s cluster

A VPN can be used to download the images.

# kubeadm config images list --kubernetes-version=v1.27.X

# cat image_download.sh

#!/bin/bash

images_list='

list of images'

for i in $images_list

do

docker pull $i

done

docker save -o k8s-1-27-X.tar $images_list
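
The script above expects the image list to be pasted in by hand. A minimal sketch that feeds the output of kubeadm config images list straight into docker pull instead, assuming version v1.27.1; pull_images and the DOCKER override variable are hypothetical names introduced here:

```shell
#!/bin/sh
# pull_images
# Reads one image reference per line on stdin and pulls each with docker.
# Blank lines are skipped, and the function stops at the first failure.
# DOCKER is a hook for substituting another command while trying the
# function out; it is not a Docker setting.
pull_images() {
    while IFS= read -r image; do
        [ -n "$image" ] || continue
        "${DOCKER:-docker}" pull "$image" </dev/null || return 1
    done
}

# Usage on a host that can reach the registry:
#   kubeadm config images list --kubernetes-version=v1.27.1 | pull_images
```

Reading the list from kubeadm keeps the pulled images in step with the kubeadm version that will initialize the cluster.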

4.6 Prepare the K8S cluster initialization configuration file

4.6.1 View the default configuration of each kind

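Without options the command prints InitConfiguration and ClusterConfiguration; --component-configs accepts only KubeletConfiguration and KubeProxyConfiguration.

kubeadm config print init-defaults

kubeadm config print init-defaults --component-configs KubeletConfiguration

kubeadm config print init-defaults --component-configs KubeProxyConfiguration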

4.6.2 Generate a sample configuration file, kubeadm-config.yaml

[root@k8s-master01 ~]# cat > kubeadm-config.yaml << EOF

---
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.10.160
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: 1.27.1
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
apiServer:
  certSANs:
  - 192.168.10.200
controlPlaneEndpoint: "192.168.10.200:16443"
etcd:
  external:
    endpoints:
    - http://192.168.10.160:2379
    - http://192.168.10.161:2379
    - http://192.168.10.162:2379
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s

EOF

4.7 Initialize the first master node with the configuration file

# kubeadm init --config kubeadm-config.yaml --upload-certs --v=9

The following output indicates that initialization succeeded.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.10.200:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:d7cd6b10f262d654889574b079ea81bcabc926f1b2b45b9facc62918135a10e3 \

--control-plane --certificate-key 59395603df760897c1d058f83c22f81a7c20c9b4101d799d9830bb12d3af08ec

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.10.200:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:d7cd6b10f262d654889574b079ea81bcabc926f1b2b45b9facc62918135a10e3
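
The two join commands in this output differ only in the control-plane flags, and later steps also append --cri-socket. A minimal sketch that assembles either variant from its parts, assuming the cri-dockerd socket path used throughout this article; join_command is a made-up helper that only prints the command line:

```shell
#!/bin/sh
# join_command ENDPOINT TOKEN CA_HASH [CERT_KEY]
# Prints a kubeadm join command line. With CERT_KEY the node joins as a
# control-plane member, without it as a worker.
join_command() {
    cmd="kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $3"
    if [ -n "${4:-}" ]; then
        cmd="$cmd --control-plane --certificate-key $4"
    fi
    echo "$cmd --cri-socket unix:///var/run/cri-dockerd.sock"
}

# Usage (values come from the kubeadm init output):
#   join_command 192.168.10.200:16443 abcdef.0123456789abcdef sha256:<hash>
```

The bootstrap token in this configuration expires after 24 hours and the uploaded certificates after two. kubeadm token create --print-join-command prints a fresh worker join command, and kubeadm init phase upload-certs --upload-certs, quoted in the output above, re-uploads the certificates and prints a new certificate key.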

4.8 Prepare the kubectl configuration file

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

4.9 Join the other K8S master nodes

4.9.1 Copy the K8S cluster certificates to k8s-master02 and k8s-master03

[root@k8s-master01 ~]# vim cp-k8s-cert.sh

[root@k8s-master01 ~]# cat cp-k8s-cert.sh

for host in 161 162; do

scp -r /etc/kubernetes/pki 192.168.10.$host:/etc/kubernetes/

done

[root@k8s-master01 ~]# sh cp-k8s-cert.sh

Certificates that are not needed can be deleted. The procedure is the same on k8s-master02 and k8s-master03.

[root@k8s-master02 ~]# cd /etc/kubernetes/pki/

[root@k8s-master02 pki]# ls

apiserver.crt apiserver-kubelet-client.crt ca.crt front-proxy-ca.crt front-proxy-client.crt sa.key

apiserver.key apiserver-kubelet-client.key ca.key front-proxy-ca.key front-proxy-client.key sa.pub

[root@k8s-master02 pki]# rm -rf api*

[root@k8s-master02 pki]# ls

ca.crt ca.key front-proxy-ca.crt front-proxy-ca.key front-proxy-client.crt front-proxy-client.key sa.key sa.pub

[root@k8s-master02 pki]# rm -rf front-proxy-client.*

[root@k8s-master02 pki]# ls

ca.crt ca.key front-proxy-ca.crt front-proxy-ca.key sa.key sa.pub

4.9.2 Run the join command and verify

kubeadm join 192.168.10.200:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:d7cd6b10f262d654889574b079ea81bcabc926f1b2b45b9facc62918135a10e3 \

--control-plane --certificate-key 59395603df760897c1d058f83c22f81a7c20c9b4101d799d9830bb12d3af08ec \

--cri-socket unix:///var/run/cri-dockerd.sock

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 23m v1.27.1

k8s-master02 NotReady control-plane 2m33s v1.27.1

k8s-master03 NotReady control-plane 29s v1.27.1

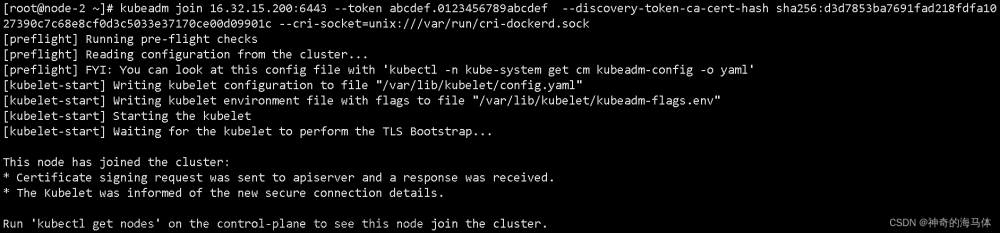

4.10 Join the worker nodes

kubeadm join 192.168.10.200:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:d7cd6b10f262d654889574b079ea81bcabc926f1b2b45b9facc62918135a10e3 --cri-socket unix:///var/run/cri-dockerd.sock

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 26m v1.27.1

k8s-master02 NotReady control-plane 5m6s v1.27.1

k8s-master03 NotReady control-plane 3m2s v1.27.1

k8s-worker01 NotReady <none> 68s v1.27.1

k8s-worker02 NotReady <none> 71s v1.27.1
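
All nodes report NotReady at this point because no network plugin has been installed yet. A minimal sketch for tracking which nodes are still not ready, assuming the column layout shown above; not_ready_nodes is a made-up helper name:

```shell
#!/bin/sh
# not_ready_nodes
# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node is Ready. A cordoned node shows a combined status such as Ready,SchedulingDisabled and is therefore also listed.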

4.11 Deploy and verify the calico network plugin

Use calico to provide the cluster network.

Installation reference: https://projectcalico.docs.tigera.io/about/about-calico

Apply the operator manifest

[root@k8s-master01 ~]# kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/tigera-operator.yaml

Install via custom resources

[root@k8s-master01 ~]# wget https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/custom-resources.yaml

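Edit line 13 of the file so that cidr matches the pod network range given to kubeadm init (--pod-network-cidr on the command line, or podSubnet in kubeadm-config.yaml, which is 10.244.0.0/16 here).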

[root@k8s-master01 ~]# vim custom-resources.yaml

......

11 ipPools:

12 - blockSize: 26

13 cidr: 10.244.0.0/16

14 encapsulation: VXLANCrossSubnet

......
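
The same edit can be made without opening an editor. A minimal sketch using sed, assuming the file contains a cidr: key laid out as in the excerpt above; set_pod_cidr and the output file name are made up for illustration, and the function only prints the result so it can be reviewed first:

```shell
#!/bin/sh
# set_pod_cidr FILE CIDR
# Prints FILE with the value of every "cidr:" key replaced by CIDR,
# preserving indentation. Nothing is modified in place.
set_pod_cidr() {
    sed -E "s#^([[:space:]]*cidr:[[:space:]]*).*#\\1$2#" "$1"
}

# Usage:
#   set_pod_cidr custom-resources.yaml 10.244.0.0/16 > custom-resources-edited.yaml
#   kubectl create -f custom-resources-edited.yaml
```

The sed expression uses # as its delimiter because the CIDR value itself contains a slash.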

Apply the manifest

[root@k8s-master01 ~]# kubectl create -f custom-resources.yaml

Watch the pods in the calico-system namespace

[root@k8s-master01 ~]# watch kubectl get pods -n calico-system

Wait until each pod has a STATUS of Running.
