Previous article: yolov5 object detection multithreaded C++ deployment (yolov5目标检测多线程C++部署)

V1 Basic functionality

mainwindow.h

#pragma once

#include <iostream>

#include <QMainWindow>

#include <QFileDialog>

#include <QThread>

#include <opencv2/opencv.hpp>

#include "yolov5.h"

#include "blockingconcurrentqueue.h"

QT_BEGIN_NAMESPACE

namespace Ui { class MainWindow; }

using namespace moodycamel;

QT_END_NAMESPACE

class Infer1 : public QThread

{

Q_OBJECT

public slots:

void receive_image(){};

private:

void run();

private:

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

signals:

void send_image();

};

class Infer2 : public QThread

{

Q_OBJECT

public slots:

void receive_image(){};

private:

void run();

private:

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

signals:

void send_image();

};

class MainWindow : public QMainWindow

{

Q_OBJECT

public:

MainWindow(QWidget *parent = nullptr);

~MainWindow();

private slots:

void on_pushButton_open_video_clicked();

void receive_image();

private:

Ui::MainWindow *ui;

Infer1 *infer1;

Infer2 *infer2;

signals:

void send_image();

};

mainwindow.cpp

#include "mainwindow.h"

#include "ui_mainwindow.h"

#include <atomic>

std::atomic<bool> stop{false}; // written by the frame-reading loop, read by the inference threads

BlockingConcurrentQueue<cv::Mat> bcq_capture1, bcq_infer1;

BlockingConcurrentQueue<cv::Mat> bcq_capture2, bcq_infer2;

void print_time(int id)

{

auto now = std::chrono::system_clock::now();

uint64_t dis_millseconds = std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch()).count()

- std::chrono::duration_cast<std::chrono::seconds>(now.time_since_epoch()).count() * 1000;

time_t tt = std::chrono::system_clock::to_time_t(now);

auto time_tm = localtime(&tt);

char time[100] = { 0 };

sprintf(time, "%d-%02d-%02d %02d:%02d:%02d %03d", time_tm->tm_year + 1900,

time_tm->tm_mon + 1, time_tm->tm_mday, time_tm->tm_hour,

time_tm->tm_min, time_tm->tm_sec, (int)dis_millseconds);

std::cout << "infer" << std::to_string(id) << " 当前时间为:" << time << std::endl;

}

void Infer1::run()

{

cv::dnn::Net net = cv::dnn::readNet("yolov5n-w640h352.onnx");

while (true)

{

if(stop) break;

if(bcq_capture1.try_dequeue(input_image))

{

pre_process(input_image, blob);

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

bcq_infer1.enqueue(output_image);

emit send_image();

print_time(1);

}

}

}

void Infer2::run()

{

cv::dnn::Net net = cv::dnn::readNet("yolov5s-w640h352.onnx");

while (true)

{

if(stop) break;

if(bcq_capture2.try_dequeue(input_image))

{

pre_process(input_image, blob);

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

bcq_infer2.enqueue(output_image);

emit send_image();

print_time(2);

}

}

}

MainWindow::MainWindow(QWidget *parent)

: QMainWindow(parent)

, ui(new Ui::MainWindow)

{

ui->setupUi(this);

infer1 = new Infer1;

infer2 = new Infer2;

connect(infer1, &Infer1::send_image, this, &MainWindow::receive_image);

connect(infer2, &Infer2::send_image, this, &MainWindow::receive_image);

}

MainWindow::~MainWindow()

{

delete ui;

}

void MainWindow::receive_image()

{

cv::Mat output_image;

if(bcq_infer1.try_dequeue(output_image))

{

QImage image = QImage((const uchar*)output_image.data, output_image.cols, output_image.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_1->clear();

ui->label_1->setPixmap(QPixmap::fromImage(image));

ui->label_1->show();

}

if(bcq_infer2.try_dequeue(output_image))

{

QImage image = QImage((const uchar*)output_image.data, output_image.cols, output_image.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_2->clear();

ui->label_2->setPixmap(QPixmap::fromImage(image));

ui->label_2->show();

}

}

void MainWindow::on_pushButton_open_video_clicked()

{

QString qstr = QFileDialog::getOpenFileName(this, tr("Open Video"), "", tr("(*.mp4 *.avi *.mkv)"));

if(qstr.isEmpty()) return;

infer1->start();

infer2->start();

cv::VideoCapture cap;

cap.open(qstr.toStdString());

while (cv::waitKey(1) < 0)

{

cv::Mat frame;

cap.read(frame);

if (frame.empty())

{

stop = true;

break;

}

bcq_capture1.enqueue(frame);

bcq_capture2.enqueue(frame);

}

}

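The third-party library introduced here, moodycamel::ConcurrentQueue, is a multi-producer, multi-consumer lock-free queue implemented in C++11. The code uses its blocking variant, BlockingConcurrentQueue, declared in blockingconcurrentqueue.h.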
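To make the producer/consumer hand-off concrete without depending on the third-party header, here is a minimal sketch of the same pattern built only on the standard library. TinyBlockingQueue and run_pipeline are hypothetical names, the queue is a simple mutex-based stand-in rather than moodycamel's lock-free implementation, and integers stand in for cv::Mat frames.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical stand-in for a blocking queue; NOT moodycamel's implementation.
template <typename T>
class TinyBlockingQueue {
public:
    void enqueue(T item) {
        {
            std::lock_guard<std::mutex> guard(mutex_);
            items_.push(std::move(item));
        }
        ready_.notify_one();
    }

    // Waits up to `timeout` for an item; returns false if none arrived.
    bool wait_dequeue_timed(T& out, std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> guard(mutex_);
        if (!ready_.wait_for(guard, timeout, [this] { return !items_.empty(); }))
            return false;
        out = std::move(items_.front());
        items_.pop();
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<T> items_;
};

// Pushes `count` integers (stand-ins for frames) through the queue and
// returns them in the order the consumer thread received them.
std::vector<int> run_pipeline(int count) {
    TinyBlockingQueue<int> queue;
    std::vector<int> received;
    std::thread consumer([&] {
        int frame_id = 0;
        while (static_cast<int>(received.size()) < count) {
            // Sleeps inside the queue instead of spinning on try_dequeue.
            if (queue.wait_dequeue_timed(frame_id, std::chrono::milliseconds(100)))
                received.push_back(frame_id);
        }
    });
    for (int i = 0; i < count; ++i) queue.enqueue(i);
    consumer.join();
    assert(static_cast<int>(received.size()) == count);
    return received;
}
```

Calling run_pipeline(5) returns the five frame ids in the order they were enqueued. The point of the timed wait is that the consumer sleeps while the queue is empty, whereas the try_dequeue loops in the listings keep a core busy between frames.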

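Program output (the label 当前时间为 printed by print_time means "current time"; the last field is milliseconds):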

infer1 当前时间为:2023-08-12 13:17:14 402

infer2 当前时间为:2023-08-12 13:17:14 424

infer1 当前时间为:2023-08-12 13:17:14 448

infer2 当前时间为:2023-08-12 13:17:14 480

infer1 当前时间为:2023-08-12 13:17:14 494

infer2 当前时间为:2023-08-12 13:17:14 532

infer1 当前时间为:2023-08-12 13:17:14 544

infer2 当前时间为:2023-08-12 13:17:14 586

infer1 当前时间为:2023-08-12 13:17:14 590

infer1 当前时间为:2023-08-12 13:17:14 637

infer2 当前时间为:2023-08-12 13:17:14 645

infer1 当前时间为:2023-08-12 13:17:14 678

infer2 当前时间为:2023-08-12 13:17:14 702

infer1 当前时间为:2023-08-12 13:17:14 719

infer2 当前时间为:2023-08-12 13:17:14 758

infer1 当前时间为:2023-08-12 13:17:14 760

infer1 当前时间为:2023-08-12 13:17:14 808

infer2 当前时间为:2023-08-12 13:17:14 817

infer1 当前时间为:2023-08-12 13:17:14 852

infer2 当前时间为:2023-08-12 13:17:14 881

...

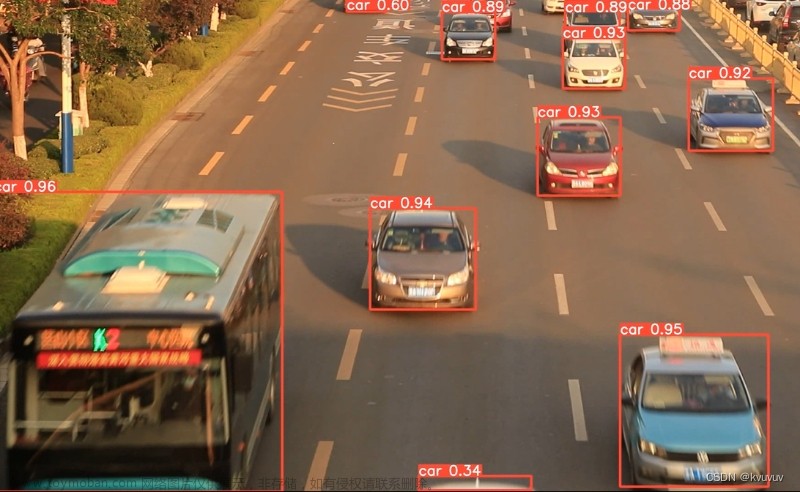

界面效果:

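As shown, the program above runs inference for two models on separate threads, but the models differ in inference speed (in the log above, infer1 completes a frame roughly every 45 ms and infer2 roughly every 57 ms), so the two displays drift out of sync. In addition, with frame reading implemented in the main thread, once frame reading ends the remaining frames cannot be processed (the stop flag is set and the inference threads exit while frames are still queued), and the display freezes.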
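One way to avoid losing the queued frames is to let a worker exit only when the producer has finished and the queue is empty. The following is a minimal sketch under stated assumptions, not the article's code: FrameQueue and process_all are hypothetical names, integers stand in for frames, and a plain mutex-protected std::queue replaces the concurrent queue.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>
#include <queue>
#include <thread>

// Hypothetical miniature of the capture/inference pair: ints stand in for frames.
struct FrameQueue {
    std::mutex mutex;
    std::queue<int> frames;

    void push(int frame) {
        std::lock_guard<std::mutex> guard(mutex);
        frames.push(frame);
    }
    bool try_pop(int& frame) {
        std::lock_guard<std::mutex> guard(mutex);
        if (frames.empty()) return false;
        frame = frames.front();
        frames.pop();
        return true;
    }
};

// Produces `total` frames and returns how many the worker processed.
int process_all(int total) {
    FrameQueue queue;
    std::atomic<bool> capture_done{false};
    int processed = 0;

    std::thread worker([&] {
        int frame = 0;
        for (;;) {
            // Read the flag BEFORE popping: if it was already true, every
            // push has happened, so an empty queue really means "drained".
            const bool done = capture_done.load();
            if (queue.try_pop(frame)) {
                ++processed;                // inference would run here
            } else if (done) {
                break;                      // finished AND drained
            } else {
                std::this_thread::yield();  // nothing to do yet
            }
        }
    });

    for (int i = 0; i < total; ++i) queue.push(i);
    capture_done.store(true);               // set only after the last push
    worker.join();
    assert(processed <= total);
    return processed;
}
```

With this ordering process_all(100) processes all 100 frames. The V1 loops check the flag first and break immediately, which is why frames that are still queued when the video ends are dropped.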

V2 Fixing the out-of-sync display

mainwindow.h

#pragma once

#include <iostream>

#include <QMainWindow>

#include <QFileDialog>

#include <QThread>

#include <opencv2/opencv.hpp>

#include "yolov5.h"

#include "blockingconcurrentqueue.h"

QT_BEGIN_NAMESPACE

namespace Ui { class MainWindow; }

using namespace moodycamel;

QT_END_NAMESPACE

class Capture : public QThread

{

Q_OBJECT

public:

void set_video(QString video)

{

cap.open(video.toStdString());

}

private:

void run();

private:

cv::VideoCapture cap;

};

class Infer1 : public QThread

{

Q_OBJECT

public slots:

void receive_image(){};

private:

void run();

private:

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

signals:

void send_image();

};

class Infer2 : public QThread

{

Q_OBJECT

public slots:

void receive_image(){};

private:

void run();

private:

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

signals:

void send_image();

};

class MainWindow : public QMainWindow

{

Q_OBJECT

public:

MainWindow(QWidget *parent = nullptr);

~MainWindow();

private slots:

void on_pushButton_open_video_clicked();

void receive_image();

private:

Ui::MainWindow *ui;

QString video;

Capture *capture;

Infer1 *infer1;

Infer2 *infer2;

signals:

void send_image();

};

mainwindow.cpp

#include "mainwindow.h"

#include "ui_mainwindow.h"

#include <atomic>

std::atomic<bool> stop{false}; // written by the capture thread, read by the inference threads

BlockingConcurrentQueue<cv::Mat> bcq_capture1, bcq_infer1;

BlockingConcurrentQueue<cv::Mat> bcq_capture2, bcq_infer2;

void print_time(int id)

{

auto now = std::chrono::system_clock::now();

uint64_t dis_millseconds = std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch()).count()

- std::chrono::duration_cast<std::chrono::seconds>(now.time_since_epoch()).count() * 1000;

time_t tt = std::chrono::system_clock::to_time_t(now);

auto time_tm = localtime(&tt);

char time[100] = { 0 };

sprintf(time, "%d-%02d-%02d %02d:%02d:%02d %03d", time_tm->tm_year + 1900,

time_tm->tm_mon + 1, time_tm->tm_mday, time_tm->tm_hour,

time_tm->tm_min, time_tm->tm_sec, (int)dis_millseconds);

std::cout << "infer" << std::to_string(id) << " 当前时间为:" << time << std::endl;

}

void Capture::run()

{

while (cv::waitKey(50) < 0)

{

cv::Mat frame;

cap.read(frame);

if (frame.empty())

{

stop = true;

break;

}

bcq_capture1.enqueue(frame);

bcq_capture2.enqueue(frame);

}

}

void Infer1::run()

{

cv::dnn::Net net = cv::dnn::readNet("yolov5n-w640h352.onnx");

while (true)

{

if(stop) break;

if(bcq_capture1.try_dequeue(input_image))

{

pre_process(input_image, blob);

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

bcq_infer1.enqueue(output_image);

emit send_image();

print_time(1);

}

}

}

void Infer2::run()

{

cv::dnn::Net net = cv::dnn::readNet("yolov5s-w640h352.onnx");

while (true)

{

if(stop) break;

if(bcq_capture2.try_dequeue(input_image))

{

pre_process(input_image, blob);

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

bcq_infer2.enqueue(output_image);

emit send_image();

print_time(2);

}

}

}

MainWindow::MainWindow(QWidget *parent)

: QMainWindow(parent)

, ui(new Ui::MainWindow)

{

ui->setupUi(this);

capture = new Capture;

infer1 = new Infer1;

infer2 = new Infer2;

connect(infer1, &Infer1::send_image, this, &MainWindow::receive_image);

connect(infer2, &Infer2::send_image, this, &MainWindow::receive_image);

}

MainWindow::~MainWindow()

{

delete ui;

}

void MainWindow::receive_image()

{

cv::Mat output_image;

if(bcq_infer1.try_dequeue(output_image))

{

QImage image = QImage((const uchar*)output_image.data, output_image.cols, output_image.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_1->clear();

ui->label_1->setPixmap(QPixmap::fromImage(image));

ui->label_1->show();

}

if(bcq_infer2.try_dequeue(output_image))

{

QImage image = QImage((const uchar*)output_image.data, output_image.cols, output_image.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_2->clear();

ui->label_2->setPixmap(QPixmap::fromImage(image));

ui->label_2->show();

}

}

void MainWindow::on_pushButton_open_video_clicked()

{

video = QFileDialog::getOpenFileName(this, tr("Open Video"), "", tr("(*.mp4 *.avi *.mkv)"));

if(video.isEmpty()) return;

capture->set_video(video);

capture->start();

infer1->start();

infer2->start();

}

UI display:

V3 Fixing the display after the video finishes

Compared with V2, V3 changes little: it only adds a signal, emitted when the video finishes playing, that calls a slot to clear the display.

mainwindow.h

#pragma once

#include <iostream>

#include <QMainWindow>

#include <QFileDialog>

#include <QThread>

#include <opencv2/opencv.hpp>

#include "yolov5.h"

#include "blockingconcurrentqueue.h"

QT_BEGIN_NAMESPACE

namespace Ui { class MainWindow; }

using namespace moodycamel;

QT_END_NAMESPACE

class Capture : public QThread

{

Q_OBJECT

public:

void set_video(QString video)

{

cap.open(video.toStdString());

}

private:

void run();

private:

cv::VideoCapture cap;

signals:

void stop();

};

class Infer1 : public QThread

{

Q_OBJECT

private:

void run();

private:

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

signals:

void send_image();

};

class Infer2 : public QThread

{

Q_OBJECT

private:

void run();

private:

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

signals:

void send_image();

};

class MainWindow : public QMainWindow

{

Q_OBJECT

public:

MainWindow(QWidget *parent = nullptr);

~MainWindow();

private slots:

void on_pushButton_open_video_clicked();

void receive_image();

void clear_image();

private:

Ui::MainWindow *ui;

QString video;

Capture *capture;

Infer1 *infer1;

Infer2 *infer2;

};

mainwindow.cpp

#include "mainwindow.h"

#include "ui_mainwindow.h"

#include <atomic>

std::atomic<bool> flag{false}; // written by the capture thread, read by the inference threads

BlockingConcurrentQueue<cv::Mat> bcq_capture1, bcq_infer1;

BlockingConcurrentQueue<cv::Mat> bcq_capture2, bcq_infer2;

void print_time(int id)

{

auto now = std::chrono::system_clock::now();

uint64_t dis_millseconds = std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch()).count()

- std::chrono::duration_cast<std::chrono::seconds>(now.time_since_epoch()).count() * 1000;

time_t tt = std::chrono::system_clock::to_time_t(now);

auto time_tm = localtime(&tt);

char time[100] = { 0 };

sprintf(time, "%d-%02d-%02d %02d:%02d:%02d %03d", time_tm->tm_year + 1900,

time_tm->tm_mon + 1, time_tm->tm_mday, time_tm->tm_hour,

time_tm->tm_min, time_tm->tm_sec, (int)dis_millseconds);

std::cout << "infer" << std::to_string(id) << " 当前时间为:" << time << std::endl;

}

void Capture::run()

{

while (cv::waitKey(50) < 0)

{

cv::Mat frame;

cap.read(frame);

if (frame.empty())

{

flag = true;

emit stop();

break;

}

bcq_capture1.enqueue(frame);

bcq_capture2.enqueue(frame);

}

}

void Infer1::run()

{

cv::dnn::Net net = cv::dnn::readNet("yolov5n-w640h352.onnx");

while (true)

{

if(flag) break;

if(bcq_capture1.try_dequeue(input_image))

{

pre_process(input_image, blob);

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

bcq_infer1.enqueue(output_image);

emit send_image();

print_time(1);

}

std::this_thread::yield();

}

}

void Infer2::run()

{

cv::dnn::Net net = cv::dnn::readNet("yolov5s-w640h352.onnx");

while (true)

{

if(flag) break;

if(bcq_capture2.try_dequeue(input_image))

{

pre_process(input_image, blob);

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

bcq_infer2.enqueue(output_image);

emit send_image();

print_time(2);

}

std::this_thread::yield();

}

}

MainWindow::MainWindow(QWidget *parent)

: QMainWindow(parent)

, ui(new Ui::MainWindow)

{

ui->setupUi(this);

capture = new Capture;

infer1 = new Infer1;

infer2 = new Infer2;

connect(infer1, &Infer1::send_image, this, &MainWindow::receive_image);

connect(infer2, &Infer2::send_image, this, &MainWindow::receive_image);

connect(capture, &Capture::stop, this, &MainWindow::clear_image);

}

MainWindow::~MainWindow()

{

delete ui;

}

void MainWindow::on_pushButton_open_video_clicked()

{

video = QFileDialog::getOpenFileName(this, tr("Open Video"), "", tr("(*.mp4 *.avi *.mkv)"));

if(video.isEmpty()) return;

capture->set_video(video);

capture->start();

infer1->start();

infer2->start();

}

void MainWindow::receive_image()

{

cv::Mat output_image;

if(bcq_infer1.try_dequeue(output_image))

{

QImage image = QImage((const uchar*)output_image.data, output_image.cols, output_image.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_1->clear();

ui->label_1->setPixmap(QPixmap::fromImage(image));

ui->label_1->show();

}

if(bcq_infer2.try_dequeue(output_image))

{

QImage image = QImage((const uchar*)output_image.data, output_image.cols, output_image.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_2->clear();

ui->label_2->setPixmap(QPixmap::fromImage(image));

ui->label_2->show();

}

}

void MainWindow::clear_image()

{

ui->label_1->clear();

ui->label_2->clear();

}

V4 Implementation with Qt's own QThread, QMutex, and QWaitCondition

mainwindow.h

#pragma once

#include <iostream>

#include <QMainWindow>

#include <QFileDialog>

#include <QThread>

#include <QMutex>

#include <QWaitCondition>

#include <opencv2/opencv.hpp>

#include "yolov5.h"

QT_BEGIN_NAMESPACE

namespace Ui { class MainWindow; }

QT_END_NAMESPACE

class Capture : public QThread

{

Q_OBJECT

public:

void set_video(QString video)

{

cap.open(video.toStdString());

}

private:

void run();

private:

cv::VideoCapture cap;

signals:

void stop();

};

class Infer1 : public QThread

{

Q_OBJECT

public:

void set_model(QString model)

{

net = cv::dnn::readNet(model.toStdString());

}

private:

void run();

private:

cv::dnn::Net net;

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

signals:

void send_image();

void stop();

};

class Infer2 : public QThread

{

Q_OBJECT

public:

void set_model(QString model)

{

net = cv::dnn::readNet(model.toStdString());

}

private:

void run();

private:

cv::dnn::Net net;

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

signals:

void send_image();

void stop();

};

class MainWindow : public QMainWindow

{

Q_OBJECT

public:

MainWindow(QWidget *parent = nullptr);

~MainWindow();

private slots:

void on_pushButton_open_video_clicked();

void receive_image();

void clear_image();

private:

Ui::MainWindow *ui;

QString video;

Capture *capture;

Infer1 *infer1;

Infer2 *infer2;

};

mainwindow.cpp

#include "mainwindow.h"

#include "ui_mainwindow.h"

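#include <atomic>

std::atomic<bool> video_end{false}; // written by the capture and GUI threads, read by the inference threads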

QMutex mutex1, mutex2;

QWaitCondition qwc1, qwc2;

cv::Mat g_frame1, g_frame2;

cv::Mat g_result1, g_result2;

void print_time(int id)

{

auto now = std::chrono::system_clock::now();

uint64_t dis_millseconds = std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch()).count()

- std::chrono::duration_cast<std::chrono::seconds>(now.time_since_epoch()).count() * 1000;

time_t tt = std::chrono::system_clock::to_time_t(now);

auto time_tm = localtime(&tt);

char time[100] = { 0 };

sprintf(time, "%d-%02d-%02d %02d:%02d:%02d %03d", time_tm->tm_year + 1900,

time_tm->tm_mon + 1, time_tm->tm_mday, time_tm->tm_hour,

time_tm->tm_min, time_tm->tm_sec, (int)dis_millseconds);

std::cout << "infer" << std::to_string(id) << " 当前时间为:" << time << std::endl;

}

void Capture::run()

{

while (cv::waitKey(1) < 0)

{

cv::Mat frame;

cap.read(frame);

if (frame.empty())

{

video_end = true;

cap.release();

emit stop();

break;

}

g_frame1 = frame;

qwc1.wakeAll();

g_frame2 = frame;

qwc2.wakeAll();

}

}

void Infer1::run()

{

while (true)

{

if(video_end)

{

emit stop();

break;

}

mutex1.lock();

qwc1.wait(&mutex1);

input_image = g_frame1;

pre_process(input_image, blob);

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

g_result1 = output_image;

emit send_image();

mutex1.unlock();

print_time(1);

}

}

void Infer2::run()

{

while (true)

{

if(video_end)

{

emit stop();

break;

}

mutex2.lock();

qwc2.wait(&mutex2);

input_image = g_frame2;

pre_process(input_image, blob);

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

g_result2 = output_image;

emit send_image();

mutex2.unlock();

print_time(2);

}

}

MainWindow::MainWindow(QWidget *parent)

: QMainWindow(parent)

, ui(new Ui::MainWindow)

{

ui->setupUi(this);

capture = new Capture;

infer1 = new Infer1;

infer2 = new Infer2;

connect(capture, &Capture::stop, this, &MainWindow::clear_image);

connect(infer1, &Infer1::send_image, this, &MainWindow::receive_image);

connect(infer1, &Infer1::stop, this, &MainWindow::clear_image);

connect(infer2, &Infer2::send_image, this, &MainWindow::receive_image);

connect(infer2, &Infer2::stop, this, &MainWindow::clear_image);

}

MainWindow::~MainWindow()

{

delete ui;

}

void MainWindow::on_pushButton_open_video_clicked()

{

video = QFileDialog::getOpenFileName(this, tr("Open Video"), "", tr("(*.mp4 *.avi *.mkv)"));

if(video.isEmpty()) return;

video_end = false;

capture->set_video(video);

infer1->set_model("yolov5n-w640h352.onnx");

infer2->set_model("yolov5s-w640h352.onnx");

capture->start();

infer1->start();

infer2->start();

}

void MainWindow::receive_image()

{

QImage image1 = QImage((const uchar*)g_result1.data, g_result1.cols, g_result1.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_1->clear();

ui->label_1->setPixmap(QPixmap::fromImage(image1));

ui->label_1->show();

QImage image2 = QImage((const uchar*)g_result2.data, g_result2.cols, g_result2.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_2->clear();

ui->label_2->setPixmap(QPixmap::fromImage(image2));

ui->label_2->show();

}

void MainWindow::clear_image()

{

ui->label_1->clear();

ui->label_2->clear();

capture->quit();

infer1->quit();

infer2->quit();

}
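In the V4 listing the workers call wait() without a predicate, so a wake-up sent while a worker is busy is simply missed, and a worker that starts waiting after the last frame can block indefinitely. The sketch below shows predicate-guarded waiting plus an explicit end-of-stream wake-up. It is written with std::mutex and std::condition_variable in place of QMutex and QWaitCondition so that it compiles without Qt; LatestFrameSlot and run_worker are hypothetical names, and integers stand in for frames.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical single-slot mailbox that keeps only the newest frame id.
struct LatestFrameSlot {
    std::mutex mutex;
    std::condition_variable changed;
    int frame = 0;
    bool has_frame = false;
    bool finished = false;

    void publish(int new_frame) {
        {
            std::lock_guard<std::mutex> guard(mutex);
            assert(!finished);       // publishing after finish() is a misuse
            frame = new_frame;       // overwrite: an unread older frame is dropped
            has_frame = true;
        }
        changed.notify_one();
    }

    void finish() {
        {
            std::lock_guard<std::mutex> guard(mutex);
            finished = true;
        }
        changed.notify_all();        // wake the worker so it can exit
    }

    // Blocks until a frame or the end of the stream arrives.
    // Returns false only when the stream ended and no frame is pending.
    bool wait_next(int& out) {
        std::unique_lock<std::mutex> guard(mutex);
        changed.wait(guard, [this] { return has_frame || finished; });
        if (!has_frame) return false;
        out = frame;
        has_frame = false;
        return true;
    }
};

// Publishes frames 1..count and returns the frame ids the worker saw.
std::vector<int> run_worker(int count) {
    LatestFrameSlot slot;
    std::vector<int> seen;
    std::thread worker([&] {
        int frame = 0;
        while (slot.wait_next(frame)) seen.push_back(frame);  // inference would run here
    });
    for (int i = 1; i <= count; ++i) slot.publish(i);
    slot.finish();
    worker.join();
    return seen;
}
```

The worker may skip frames when it is slower than the producer, which matches the frame-dropping behaviour of V4, but it always sees the last frame and always terminates. Because the state is checked under the mutex, a notification that arrives before the worker starts waiting is not lost.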

V5 Implementation with std::mutex, std::condition_variable, and std::promise

mainwindow.h

#pragma once

#include <iostream>

#include <string>

#include <queue>

#include <thread>

#include <mutex>

#include <condition_variable>

#include <future>

#include <ctime>

#include <windows.h>

#include <QMainWindow>

#include <QFileDialog>

#include <QThread>

#include <QMutex>

#include <QWaitCondition>

#include <opencv2/opencv.hpp>

#include "yolov5.h"

QT_BEGIN_NAMESPACE

namespace Ui { class MainWindow; }

QT_END_NAMESPACE

class Capture : public QThread

{

Q_OBJECT

public:

void set_capture(QString video)

{

cap.open(video.toStdString());

}

private:

void run();

private:

cv::VideoCapture cap;

signals:

void show();

void stop();

};

class Infer1 : public QThread

{

Q_OBJECT

public:

void set_model(QString model)

{

net = cv::dnn::readNet(model.toStdString());

}

private:

void run();

private:

cv::dnn::Net net;

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

};

class Infer2 : public QThread

{

Q_OBJECT

public:

void set_model(QString model)

{

net = cv::dnn::readNet(model.toStdString());

}

private:

void run();

private:

cv::dnn::Net net;

cv::Mat input_image;

cv::Mat blob;

cv::Mat output_image;

std::vector<cv::Mat> network_outputs;

};

class MainWindow : public QMainWindow

{

Q_OBJECT

public:

MainWindow(QWidget *parent = nullptr);

~MainWindow();

private slots:

void receive_image();

void clear_image();

void on_pushButton_open_video_clicked();

private:

Ui::MainWindow *ui;

QString video;

Capture *capture;

Infer1 *infer1;

Infer2 *infer2;

};

mainwindow.cpp

#include "mainwindow.h"

#include "ui_mainwindow.h"

struct Job

{

cv::Mat input_image;

std::shared_ptr<std::promise<cv::Mat>> output_image;

};

std::queue<Job> jobs1, jobs2;

std::mutex lock1, lock2;

std::condition_variable cv1, cv2;

cv::Mat result1, result2;

const int limit = 10;

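#include <atomic>

std::atomic<bool> video_end{false}; // written by the capture and GUI threads, read by the inference threads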

void print_time(int id)

{

auto now = std::chrono::system_clock::now();

uint64_t dis_millseconds = std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch()).count()

- std::chrono::duration_cast<std::chrono::seconds>(now.time_since_epoch()).count() * 1000;

time_t tt = std::chrono::system_clock::to_time_t(now);

auto time_tm = localtime(&tt);

char time[100] = { 0 };

sprintf(time, "%d-%02d-%02d %02d:%02d:%02d %03d", time_tm->tm_year + 1900,

time_tm->tm_mon + 1, time_tm->tm_mday, time_tm->tm_hour,

time_tm->tm_min, time_tm->tm_sec, (int)dis_millseconds);

std::cout << "infer" << std::to_string(id) << ": 当前时间为:" << time << std::endl;

}

void Capture::run()

{

while (cv::waitKey(1) < 0)

{

Job job1, job2;

cv::Mat frame;

cap.read(frame);

if (frame.empty())

{

video_end = true;

emit stop();

break;

}

{

std::unique_lock<std::mutex> l1(lock1);

cv1.wait(l1, [&]() { return jobs1.size()<limit; });

job1.input_image = frame;

job1.output_image.reset(new std::promise<cv::Mat>());

jobs1.push(job1);

}

{

std::unique_lock<std::mutex> l2(lock2);

cv2.wait(l2, [&]() { return jobs2.size() < limit; }); // wait on cv2, the condition variable that Infer2 notifies

job2.input_image = frame;

job2.output_image.reset(new std::promise<cv::Mat>());

jobs2.push(job2);

}

result1 = job1.output_image->get_future().get();

result2 = job2.output_image->get_future().get();

emit show();

}

}

void Infer1::run()

{

while (true)

{

if (video_end)

break; // without this check the thread cannot exit

if (!jobs1.empty())

{

std::lock_guard<std::mutex> l1(lock1);

auto job = jobs1.front();

jobs1.pop();

cv1.notify_all();

cv::Mat input_image = job.input_image, blob, output_image;

pre_process(input_image, blob);

std::vector<cv::Mat> network_outputs;

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

job.output_image->set_value(output_image);

print_time(0);

}

std::this_thread::yield(); // without this the thread cannot exit

}

}

void Infer2::run()

{

// The model is loaded by set_model(); a local net here would shadow the member and load the file a second time.

while (true)

{

if (video_end)

break;

if (!jobs2.empty())

{

std::lock_guard<std::mutex> l2(lock2);

auto job = jobs2.front();

jobs2.pop();

cv2.notify_all();

cv::Mat input_image = job.input_image, blob, output_image;

pre_process(input_image, blob);

std::vector<cv::Mat> network_outputs;

process(blob, net, network_outputs);

post_process(input_image, output_image, network_outputs);

job.output_image->set_value(output_image);

print_time(1);

}

std::this_thread::yield();

}

}

MainWindow::MainWindow(QWidget *parent)

: QMainWindow(parent)

, ui(new Ui::MainWindow)

{

ui->setupUi(this);

capture = new Capture;

infer1 = new Infer1;

infer2 = new Infer2;

connect(capture, &Capture::stop, this, &MainWindow::clear_image);

connect(capture, &Capture::show, this, &MainWindow::receive_image);

}

MainWindow::~MainWindow()

{

delete ui;

}

void MainWindow::receive_image()

{

QImage image1 = QImage((const uchar*)result1.data, result1.cols, result1.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_1->clear();

ui->label_1->setPixmap(QPixmap::fromImage(image1));

ui->label_1->show();

QImage image2 = QImage((const uchar*)result2.data, result2.cols, result2.rows, QImage::Format_RGB888).rgbSwapped();

ui->label_2->clear();

ui->label_2->setPixmap(QPixmap::fromImage(image2));

ui->label_2->show();

}

void MainWindow::clear_image()

{

ui->label_1->clear();

ui->label_2->clear();

capture->quit();

infer1->quit();

infer2->quit();

}

void MainWindow::on_pushButton_open_video_clicked()

{

video = QFileDialog::getOpenFileName(this, tr("Open Video"), "", tr("(*.mp4 *.avi *.mkv *mpg *wmv)"));

if(video.isEmpty()) return;

video_end = false;

capture->set_capture(video);

infer1->set_model("yolov5n-w640h352.onnx");

infer2->set_model("yolov5s-w640h352.onnx");

capture->start();

infer1->start();

infer2->start();

}
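The idea behind V5 is that every job carries a std::promise, so the capture thread can wait for both results of the same frame before asking the GUI to show them. The sketch below isolates that idea under stated assumptions: Worker and run_synced are hypothetical names, integers stand in for frames and results, and multiplication stands in for inference. Unlike the listing above, the worker here sleeps on a condition variable instead of polling and releases the lock before doing the slow work.

```cpp
#include <cassert>
#include <condition_variable>
#include <future>
#include <memory>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical job: an int stands in for the frame and for the result.
struct Job {
    int input = 0;
    std::shared_ptr<std::promise<int>> output;
};

class Worker {
public:
    explicit Worker(int scale) : scale_(scale), thread_([this] { loop(); }) {}

    ~Worker() {
        {
            std::lock_guard<std::mutex> guard(mutex_);
            stopping_ = true;
        }
        wake_.notify_all();
        thread_.join();
    }

    // Queues one frame and returns a future for its result.
    std::future<int> submit(int input) {
        Job job;
        job.input = input;
        job.output = std::make_shared<std::promise<int>>();
        std::future<int> result = job.output->get_future();
        {
            std::lock_guard<std::mutex> guard(mutex_);
            assert(!stopping_);
            jobs_.push(std::move(job));
        }
        wake_.notify_one();
        return result;
    }

private:
    void loop() {
        for (;;) {
            Job job;
            {
                std::unique_lock<std::mutex> guard(mutex_);
                wake_.wait(guard, [this] { return stopping_ || !jobs_.empty(); });
                if (jobs_.empty()) return;       // stopping and drained
                job = std::move(jobs_.front());
                jobs_.pop();
            }                                    // lock released before the slow work
            job.output->set_value(job.input * scale_);  // stand-in for inference
        }
    }

    int scale_;
    std::mutex mutex_;
    std::condition_variable wake_;
    std::queue<Job> jobs_;
    bool stopping_ = false;
    std::thread thread_;  // declared last so the other members exist when it starts
};

// Sends each frame to both workers and pairs up their results.
std::vector<std::pair<int, int>> run_synced(int frames) {
    Worker fast(10), slow(100);
    std::vector<std::pair<int, int>> shown;
    for (int f = 1; f <= frames; ++f) {
        std::future<int> a = fast.submit(f);
        std::future<int> b = slow.submit(f);
        shown.emplace_back(a.get(), b.get());    // both results belong to frame f
    }
    return shown;
}
```

Because the producer blocks on both futures before moving on, each displayed pair always comes from the same frame, at the cost of running at the speed of the slower model.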

Full project download: yolov5 object detection multithreaded Qt GUI (yolov5目标检测多线程Qt界面)
