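Background: because of the particular nature of my task, I train on multiple GPUs but test on a single GPU. Testing takes a long time, because the dataset is very large and computing the evaluation metrics is expensive.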

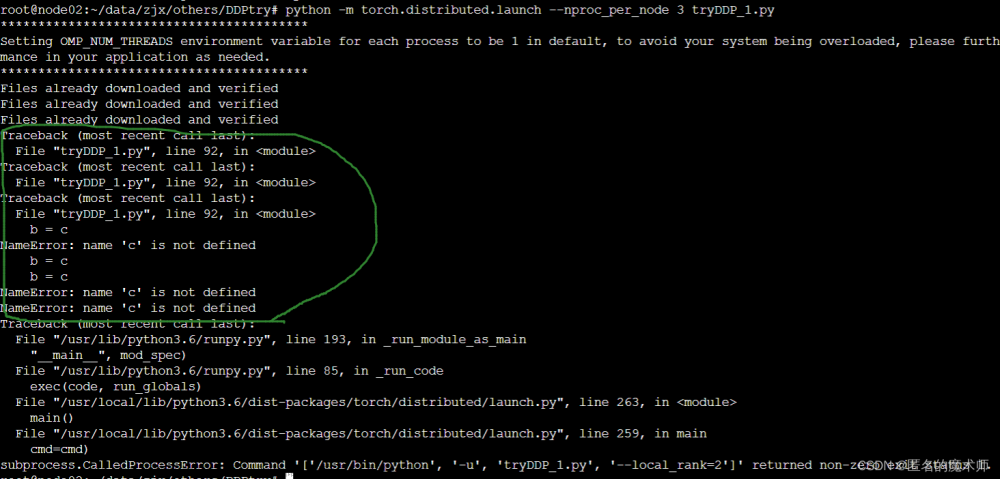
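The "train on every GPU, test on one GPU" loop can be sketched roughly as below. This rests on a few assumptions. `train_one_epoch` and `evaluate` are hypothetical placeholders. The explicit `dist.barrier()` stands in for whatever collective the other ranks hit next; in the traceback below, that collective is the sampler's `dist.new_group` call. The sketch runs as a single process (`world_size=1`) with a file-based rendezvous, so it can be tried without GPUs. With several ranks, every rank other than 0 would sit in that collective for as long as the test takes, bounded by the process group's timeout.

```python
import os
import tempfile

import torch.distributed as dist


def train_one_epoch(epoch):
    # Hypothetical placeholder for the multi-GPU training step (runs on all ranks).
    return f"train {epoch}"


def evaluate(epoch):
    # Hypothetical placeholder for the long single-GPU test (runs on rank 0 only).
    return f"test {epoch}"


def run(num_epochs):
    log = []
    for epoch in range(num_epochs):
        log.append(train_one_epoch(epoch))
        if dist.get_rank() == 0:
            # Only rank 0 tests; on a large dataset this can take a long time.
            log.append(evaluate(epoch))
        # Other ranks block in the next collective until rank 0 is done.
        # Here it is an explicit barrier (an assumption); in the traceback
        # it is dist.new_group inside the sampler at the next epoch.
        dist.barrier()
    return log


def main(num_epochs=2):
    # Single-process demo (world_size=1) with a file-based rendezvous.
    init_file = os.path.join(tempfile.mkdtemp(), "rendezvous")
    dist.init_process_group(
        backend="gloo",
        init_method=f"file://{init_file}",
        rank=0,
        world_size=1,
    )
    try:
        return run(num_epochs)
    finally:
        dist.destroy_process_group()


if __name__ == "__main__":
    print(main())
```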

Error message:

File "/home/anys/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 940, in __init__

self._reset(loader, first_iter=True)

File "/home/anys/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 971, in _reset

self._try_put_index()

File "/home/anys/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 1205, in _try_put_index

index = self._next_index()

File "/home/anys/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 508, in _next_index

return next(self._sampler_iter) # may raise StopIteration

File "/home/anys/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/utils/data/sampler.py", line 227, in __iter__

for idx in self.sampler:

File "/home/anys/GRALF/AlignTransReID/TransReID/datasets/sampler_ddp.py", line 148, in __iter__

seed = shared_random_seed()

File "/home/anys/GRALF/AlignTransReID/TransReID/datasets/sampler_ddp.py", line 108, in shared_random_seed

all_ints = all_gather(ints)

File "/home/anys/GRALF/AlignTransReID/TransReID/datasets/sampler_ddp.py", line 77, in all_gather

group = _get_global_gloo_group()

File "/home/anys/GRALF/AlignTransReID/TransReID/datasets/sampler_ddp.py", line 18, in _get_global_gloo_group

return dist.new_group(backend="gloo")

File "/home/anys/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/distributed/distributed_c10d.py", line 2503, in new_group

pg = _new_process_group_helper(group_world_size,

File "/home/anys/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/distributed/distributed_c10d.py", line 588, in _new_process_group_helper

pg = ProcessGroupGloo(

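RuntimeError: Socket Timeout

The traceback shows that the timeout happens while the DataLoader is being set up. The distributed sampler in `sampler_ddp.py` calls `shared_random_seed()`. That function calls `all_gather()`, which creates a Gloo process group with `dist.new_group(backend="gloo")`, and creating that group times out. Every process in the job must enter `new_group`, so the ranks that get there first most likely wait for the rank that is still running the long single-GPU test, and the wait exceeds the group's default timeout. The fix is to give the process group a longer timeout (article source: https://www.toymoban.com/news/detail-645554.html):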

`torch.distributed.new_group(backend="gloo", timeout=datetime.timedelta(days=1))` (this requires `import datetime`)

When you hit a timeout error like this, first check every place where a process group is created and increase its `timeout`.
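Applied to the helpers named in the traceback, the change might look like the following sketch. Only the names `_get_global_gloo_group`, `all_gather`, and `shared_random_seed` come from the traceback. Their bodies here are a hypothetical reconstruction built on `dist.all_gather_object`, and `LONG_TIMEOUT` is an assumed name. The same long timeout is also passed to `dist.init_process_group`, because the default group has its own timeout. Like the sketch above, it runs as a single process with a file-based rendezvous.

```python
import datetime
import functools
import os
import random
import tempfile

import torch.distributed as dist

# Assumed value: long enough to cover the slowest single-GPU test run.
LONG_TIMEOUT = datetime.timedelta(days=1)


@functools.lru_cache()
def _get_global_gloo_group():
    # Same call as in sampler_ddp.py (line 18 in the traceback), plus a timeout.
    # new_group is collective: every rank must call it, so early ranks wait here.
    return dist.new_group(backend="gloo", timeout=LONG_TIMEOUT)


def all_gather(data):
    # Hypothetical body: gather one picklable object from every rank.
    group = _get_global_gloo_group()
    output = [None] * dist.get_world_size(group)
    dist.all_gather_object(output, data, group=group)
    return output


def shared_random_seed():
    # Each rank draws a seed; every rank then uses the value from rank 0.
    local_seed = random.randint(0, 2**31 - 1)
    return all_gather(local_seed)[0]


def main():
    # Single-process demo (world_size=1) with a file-based rendezvous.
    init_file = os.path.join(tempfile.mkdtemp(), "rendezvous")
    dist.init_process_group(
        backend="gloo",
        init_method=f"file://{init_file}",
        rank=0,
        world_size=1,
        timeout=LONG_TIMEOUT,  # the default group gets the long timeout too
    )
    try:
        return shared_random_seed()
    finally:
        # The cached subgroup is destroyed along with the default group.
        _get_global_gloo_group.cache_clear()
        dist.destroy_process_group()


if __name__ == "__main__":
    print(main())
```

A very long timeout also delays the error when a rank has genuinely hung, so a value somewhat above the longest expected test time may be a more practical choice than one day.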
