The code is as follows:

import os

import torch
import torch.nn.functional as F
import torchvision
from PIL import Image
from torchvision import transforms

class VGGSim(torch.nn.Module):
    def __init__(self):
        super().__init__()
        # Load the pretrained VGG16 once and split its convolutional part into
        # four blocks ending at relu1_2, relu2_2, relu3_3 and relu4_3.
        # (Newer torchvision versions prefer the `weights=` argument over `pretrained=True`.)
        features = torchvision.models.vgg16(pretrained=True).features
        blocks = [
            features[:4].eval(),
            features[4:9].eval(),
            features[9:16].eval(),
            features[16:23].eval(),
        ]
        # Freeze the weights. requires_grad lives on parameters, not on layers,
        # so iterate over bl.parameters() rather than over the layers themselves.
        for bl in blocks:
            for p in bl.parameters():
                p.requires_grad = False
        self.blocks = torch.nn.ModuleList(blocks)
        # ImageNet normalization statistics, stored as buffers (not trainable parameters).
        self.register_buffer("mean", torch.tensor([0.485, 0.456, 0.406]).view(1, 3, 1, 1))
        self.register_buffer("std", torch.tensor([0.229, 0.224, 0.225]).view(1, 3, 1, 1))

    @torch.no_grad()
    def forward(self, input, target):
        # Expand single-channel (grayscale) inputs to three channels.
        if input.shape[1] != 3:
            input = input.repeat(1, 3, 1, 1)
            target = target.repeat(1, 3, 1, 1)
        x = (input - self.mean) / self.std
        y = (target - self.mean) / self.std
        res = []
        for block in self.blocks:
            x = block(x)
            y = block(y)
            x_flat = torch.flatten(x, start_dim=1)
            y_flat = torch.flatten(y, start_dim=1)
            similarity = F.cosine_similarity(x_flat, y_flat)
            # .item() assumes a batch size of 1.
            res.append(similarity.cpu().item())
        # Option 1: use only the features of the deepest block (relu4_3)
        # return res[-1]
        # Option 2: sum the cosine similarities over all four blocks
        return sum(res)

def load_image(path):
    # Load as RGB, resize to 224x224 and convert to a 1x3x224x224 tensor in [0, 1].
    image = Image.open(path).convert('RGB')
    image = transforms.Resize([224, 224])(image)
    image = transforms.ToTensor()(image)
    image = image.unsqueeze(0)
    return image.cuda()

query_image_path = "query.jpeg"  # the image to search for
query_image = load_image(query_image_path)

target_image_dir = "cat_images/"  # the album to search in
target_images = [os.path.join(target_image_dir, name) for name in os.listdir(target_image_dir)]

vgg_sim = VGGSim().cuda()

scores = []
for path in target_images:
    target_image = load_image(path)
    score = vgg_sim(query_image, target_image)
    scores.append([path, score])

# Sort by similarity, highest first.
scores.sort(key=lambda x: -x[1])

# Print the five most similar images (fewer if the album holds fewer than five).
for i, (path, _) in enumerate(scores[:5]):
    print("Top", (i + 1), "similar =>", os.path.basename(path))
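The comments in `forward` mention two ways of turning the per-block similarities into a single score: keep only the last block, or sum all of them. The minimal sketch below places these options side by side. The helper `combine_block_scores`, its `mode` argument, the `"mean"` option, and the example values are all hypothetical and not part of the original code. Every block ends in a ReLU, so the features are non-negative and each cosine similarity lies in [0, 1]; the sum over four blocks therefore lies in [0, 4].

```python
def combine_block_scores(block_scores, mode="sum"):
    """Combine per-block cosine similarities into one score (hypothetical helper).

    mode="last": use only the deepest block
    mode="sum":  add up all blocks (what the article's code returns)
    mode="mean": average over blocks, which keeps the score in [0, 1]
    """
    if mode == "last":
        return block_scores[-1]
    if mode == "sum":
        return sum(block_scores)
    if mode == "mean":
        return sum(block_scores) / len(block_scores)
    raise ValueError(f"unknown mode: {mode}")


# Made-up per-block similarities for a single image pair, for illustration only.
example_scores = [0.5, 0.25, 0.75, 0.5]

last_score = combine_block_scores(example_scores, "last")
sum_score = combine_block_scores(example_scores, "sum")
mean_score = combine_block_scores(example_scores, "mean")
print(last_score, sum_score, mean_score)
```

Since the mean is just the sum divided by a constant, both produce the same ranking; only the `"last"` option can reorder the results.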

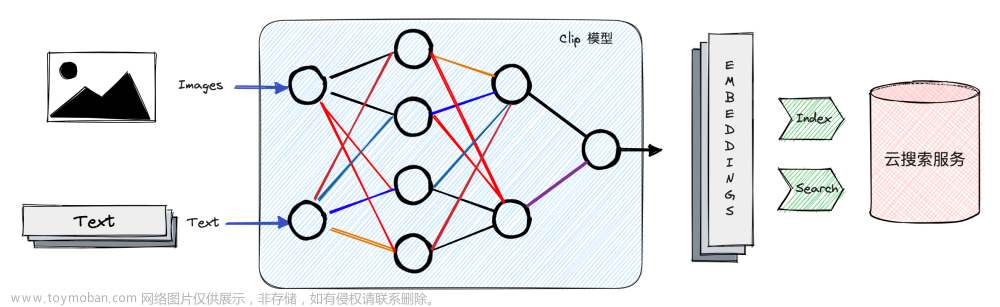

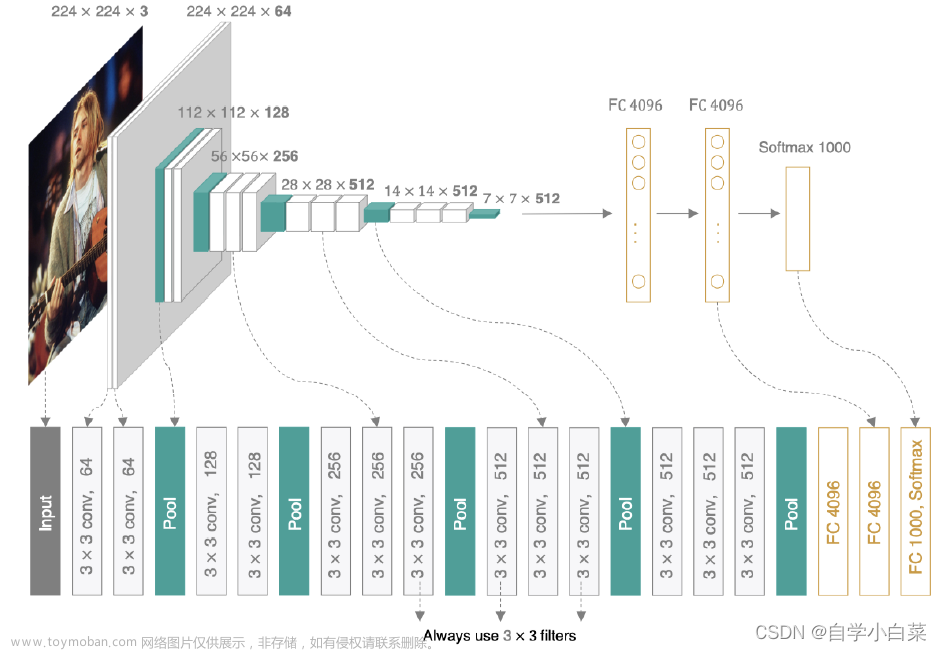

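The core idea of the code above is similar to perceptual loss: VGG extracts multi-level features from each image, and those features are used to compare how similar two images are. The difference is that perceptual loss typically measures the distance between features with MAE or MSE, whereas this code uses cosine similarity.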
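To make the difference between these measures concrete, here is a minimal sketch in plain Python. It works on short lists standing in for flattened feature maps; the vectors and the helper names (`cosine_similarity`, `mse`, `mae`) are illustrative assumptions, not taken from the original code. It shows that cosine similarity depends only on the direction of the feature vectors, while MSE and MAE also react to their magnitude.

```python
import math


def cosine_similarity(a, b):
    # Dot product divided by the product of the Euclidean norms.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def mse(a, b):
    # Mean squared error between two equally long vectors.
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def mae(a, b):
    # Mean absolute error between two equally long vectors.
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Toy "features": feat_b points in the same direction as feat_a
# but has twice the magnitude.
feat_a = [1.0, 2.0, 0.0, 3.0]
feat_b = [2.0, 4.0, 0.0, 6.0]

cos_ab = cosine_similarity(feat_a, feat_b)
mse_ab = mse(feat_a, feat_b)
mae_ab = mae(feat_a, feat_b)
print(cos_ab, mse_ab, mae_ab)
```

The two toy vectors count as identical under cosine similarity yet as clearly different under MSE and MAE, which is one reason a ranking based on cosine similarity can differ from one based on a distance.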

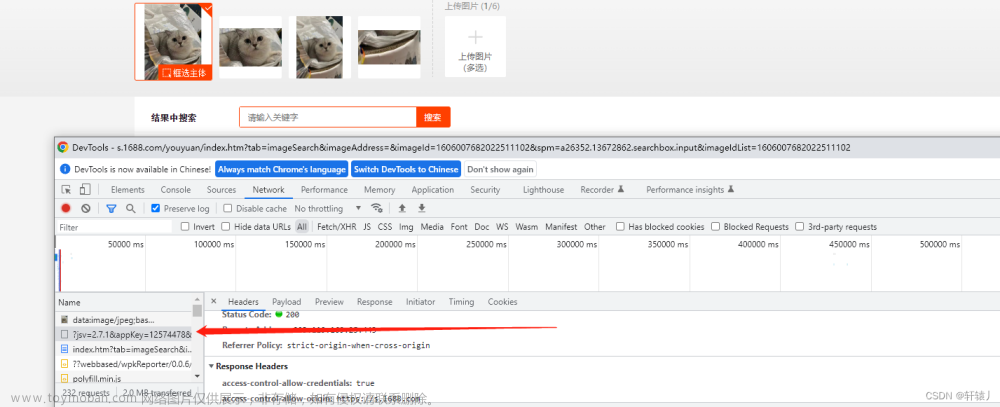

As an example, take the following image of a tabby cat (狸花猫) as the query:

We want to find the other tabby cat images in the album:

In this dataset, images 01 to 10 are black-and-white "cow" cats (奶牛猫) and images 11 to 20 are tabby cats. Running the code gives the following result:

Top 1 similar => 04.jpeg

Top 2 similar => 20.jpeg

Top 3 similar => 14.jpeg

Top 4 similar => 12.jpeg

Top 5 similar => 15.jpeg

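As the output shows, the retrieval is mostly correct: 20, 14, 12 and 15 are all tabby cats. 04 receives the highest similarity because its pose closely matches that of the query and its surroundings are similar as well (a floor), which is image similarity of a different kind.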
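This result can also be expressed as precision@5, the fraction of the top five hits that belong to the wanted class. The short sketch below uses only the file names and the numbering rule stated in the article (11 to 20 are tabby cats); the helper `is_tabby` and the variable names are hypothetical.

```python
# Top-5 file names as reported in the article's output.
top5 = ["04.jpeg", "20.jpeg", "14.jpeg", "12.jpeg", "15.jpeg"]


def is_tabby(filename):
    # Per the article's numbering, images 11 to 20 are tabby cats.
    number = int(filename.split(".")[0])
    return 11 <= number <= 20


hits = [name for name in top5 if is_tabby(name)]
precision_at_5 = len(hits) / len(top5)
print("precision@5 =", precision_at_5)
```

With four of the five hits being tabby cats, precision@5 comes out at 0.8; the single miss is 04.jpeg, the pose-and-background match discussed above.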
