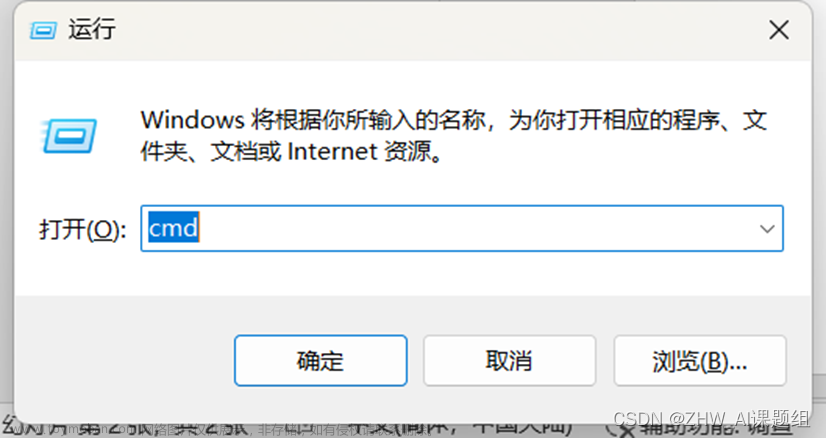

# -*- coding: utf-8 -*-

"""

Created on Wed Aug 16 09:53:13 2023

@author: chengxf2

"""

import torch

# check whether a GPU device is available

print("\n cuda available: ",torch.cuda.is_available())

# check the number of available GPU devices

print("\n device count:",torch.cuda.device_count())

# check the index of the currently selected device

print("\n current device",torch.cuda.current_device())

# view the properties of the GPU with index 0

print("\n properties: ",torch.cuda.get_device_properties('cuda:0'))

# check the name of device 0 (the currently selected device by default)

print("\n name of currently selected device",torch.cuda.get_device_name(0))

# select the GPU if one is available, otherwise fall back to the CPU

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

a = torch.rand(2,3)

print("\n the tensor is on",a.device)

# move the tensor to the selected device

a = a.to(device)

print("\n the tensor is on",a.device)
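The queries above assume a CUDA device is present; on a CPU-only machine, calls such as `torch.cuda.current_device()` raise an error. The following is a minimal sketch of a guarded version. The function name `summarize_gpus` and the dictionary keys are hypothetical choices for illustration and are not part of PyTorch; the guarded import is only there so the sketch also loads where PyTorch is not installed.

```python
try:
    import torch
except ImportError:  # assumption: allow the sketch to load without PyTorch
    torch = None


def summarize_gpus():
    """Return one dict per visible CUDA device, or [] when none is usable."""
    if torch is None or not torch.cuda.is_available():
        return []
    summary = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        summary.append({
            "index": index,
            "name": props.name,
            # total_memory is reported in bytes; convert to MB
            "total_memory_mb": props.total_memory / 1024**2,
            "compute_capability": f"{props.major}.{props.minor}",
        })
    return summary


if __name__ == "__main__":
    gpus = summarize_gpus()
    if not gpus:
        print("no usable CUDA device, falling back to cpu")
    for gpu in gpus:
        print(gpu)
```

Querying the devices only after `torch.cuda.is_available()` returns `True` lets the same script run unchanged on machines with and without a GPU.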

![[PyTorch][chapter 51][PyTorch GPU], deep learning, artificial intelligence, machine learning](https://imgs.yssmx.com/Uploads/2023/08/652336-1.png)

2. Checking GPU memory usage in detail

import pynvml

pynvml.nvmlInit()

handle = pynvml.nvmlDeviceGetHandleByIndex(0) # 0 is the GPU index

# meminfo is a snapshot: query it again at every point where you want a fresh reading

meminfo = pynvml.nvmlDeviceGetMemoryInfo(handle)

print("\n total GPU memory (MB):",meminfo.total/1024**2)

print("\n used GPU memory (MB):",meminfo.used/1024**2)

print("\n free GPU memory (MB):",meminfo.free/1024**2)

# values are in MB; divide by another 1024 to get GB
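The manual division by `1024**2` (and by another 1024 for GB) can be wrapped in a small helper. The sketch below is an illustration only: `format_bytes` and `memory_report` are hypothetical names, and the `SimpleNamespace` object is a stand-in with made-up numbers for the result of `pynvml.nvmlDeviceGetMemoryInfo(handle)`, so the example runs without a GPU.

```python
from types import SimpleNamespace


def format_bytes(num_bytes):
    """Format a byte count using 1024-based units, e.g. 1572864 -> '1.50 MB'."""
    units = ["B", "KB", "MB", "GB", "TB"]
    value = float(num_bytes)
    for unit in units:
        # stop at the first unit where the value drops below 1024
        if value < 1024 or unit == units[-1]:
            return f"{value:.2f} {unit}"
        value /= 1024


def memory_report(meminfo):
    """Build a readable report from any object with total, used and free (bytes)."""
    return {name: format_bytes(getattr(meminfo, name))
            for name in ("total", "used", "free")}


# Stand-in for pynvml.nvmlDeviceGetMemoryInfo(handle); the numbers are invented.
fake_meminfo = SimpleNamespace(
    total=8 * 1024**3,
    used=1536 * 1024**2,
    free=8 * 1024**3 - 1536 * 1024**2,
)

if __name__ == "__main__":
    print(memory_report(fake_meminfo))
```

With a real GPU, passing the `meminfo` object from the snippet above to `memory_report` should work the same way, since it exposes the same three attributes.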
