Project website:

OpenNMT - Open-Source Neural Machine Translation

logo:

I. First, get the Harvard transformer running at the application level

GitHub - harvardnlp/annotated-transformer: An annotated implementation of the Transformer paper.

git clone https://github.com/harvardnlp/annotated-transformer.git

cd annotated-transformer/

1. Environment setup

conda create --name ilustrate_transformer_env python=3.9

conda activate ilustrate_transformer_env

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

Problem: TypeError: issubclass() arg 1 must be a class

Cause: this is a compatibility problem in pydantic, one of the backend Python packages.

Running the following command resolves it:

python -m pip install -U pydantic spacy
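After the upgrade, it can help to confirm which versions actually ended up in the environment. The following is a minimal sketch using only the standard library; the helper name `installed_versions` is made up for illustration and is not part of the repository.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # The two packages touched by the upgrade command above.
    for name, version in installed_versions(["pydantic", "spacy"]).items():
        print(f"{name}: {version or 'not installed'}")
```

Run it inside the activated conda environment so that it reports on the same interpreter that pip installed into.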

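Next, the dataset fails to download because one of the URLs has been retired: www.quest......

Replacing the relevant definitions with those from the latest version of torchtext fixes this: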

/home/hipper/anaconda3/envs/ilustrate_transformer_env/lib/python3.9/site-packages/torchtext/datasets/multi30k.py
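The path above is specific to the author's machine. As a rough sketch, the same file can be located in whichever environment is active; `locate_in_package` is a hypothetical helper name, and the code assumes torchtext is installed as a regular package on disk.

```python
from importlib.util import find_spec
from pathlib import Path


def locate_in_package(package, *relative_parts):
    """Return the path of a file inside an installed package, or None.

    Only the top-level package is looked up, so the package itself
    is not imported.
    """
    spec = find_spec(package)
    if spec is None or spec.origin is None:
        return None
    candidate = Path(spec.origin).parent.joinpath(*relative_parts)
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    # Prints the file to edit, or None if torchtext is not installed.
    print(locate_in_package("torchtext", "datasets", "multi30k.py"))
```

The part of that file that needs editing is shown next.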

# Original URLs, disabled by wrapping them in a string literal.
'''LL::
URL = {
    "train": r"http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/training.tar.gz",
    "valid": r"http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/validation.tar.gz",
    "test": r"http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/mmt16_task1_test.tar.gz",
}

MD5 = {
    "train": "20140d013d05dd9a72dfde46478663ba05737ce983f478f960c1123c6671be5e",
    "valid": "a7aa20e9ebd5ba5adce7909498b94410996040857154dab029851af3a866da8c",
    "test": "0681be16a532912288a91ddd573594fbdd57c0fbb81486eff7c55247e35326c2",
}
'''
# TODO: Update URL to original once the server is back up (see https://github.com/pytorch/text/issues/1756)
URL = {
    "train": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/training.tar.gz",
    "valid": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/validation.tar.gz",
    "test": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/mmt16_task1_test.tar.gz",
}

MD5 = {
    "train": "20140d013d05dd9a72dfde46478663ba05737ce983f478f960c1123c6671be5e",
    "valid": "a7aa20e9ebd5ba5adce7909498b94410996040857154dab029851af3a866da8c",
    "test": "6d1ca1dba99e2c5dd54cae1226ff11c2551e6ce63527ebb072a1f70f72a5cd36",
}

Run:
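Before running, it may be worth checking that an archive fetched from the replacement URLs matches the expected digest. Although the dictionary is named MD5, its values are 64 hexadecimal characters long, which is the length of a SHA-256 digest (an MD5 digest has 32), so this sketch assumes they are SHA-256 values. The helper names and the local file name in the usage comment are hypothetical.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches(path, expected_hex):
    """True if the file's SHA-256 digest equals expected_hex (case-insensitive)."""
    return sha256_of(path) == expected_hex.lower()


# Usage, assuming training.tar.gz was downloaded to the current directory:
# matches("training.tar.gz",
#         "20140d013d05dd9a72dfde46478663ba05737ce983f478f960c1123c6671be5e")
```

A mismatch would suggest either a corrupted download or that the URL and digest entries were not updated together, as with the "test" entry, whose digest differs between the old and new blocks.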

To be continued ...

__________________________________________________

References:

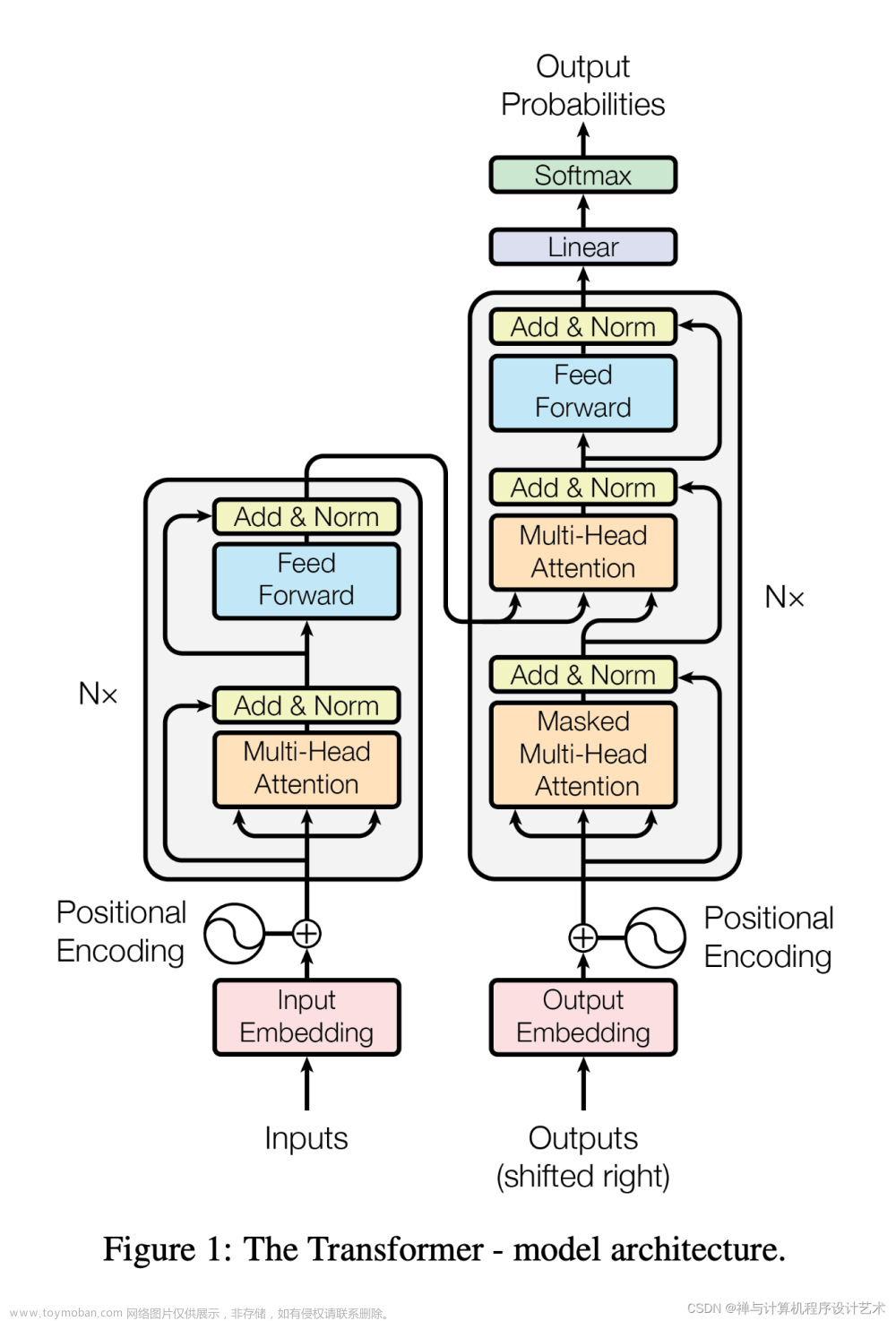

"The Annotated Transformer" translated: annotations and code implementing "Attention Is All You Need" (神洛华's blog, CSDN)

Illustrated transformer | The Illustrated Transformer (Ann's Blog, CSDN)

GitHub - harvardnlp/annotated-transformer: An annotated implementation of the Transformer paper.

OpenNMT - Open-Source Neural Machine Translation

FlashAttention 1 and 2:

Stanford CRFM

GitHub - Dao-AILab/flash-attention: Fast and memory-efficient exact attention