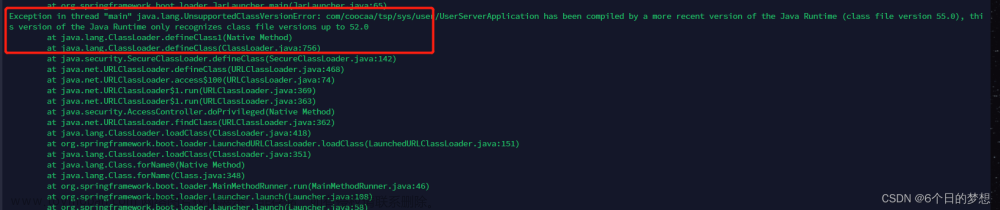

Exception in thread "main" java.lang.UnsatisfiedLinkError: org.apache.hadoop.io.nativeio.NativeIO$POSIX.stat(Ljava/lang/String;)Lorg/apache/hadoop/io/nativeio/NativeIO$POSIX$Stat;

The error appears as shown in the screenshot.

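Cause: the Hadoop version of the dependencies imported into the project does not match the Hadoop version installed locally on Windows, so the native method that the JARs call cannot be found in the local native library. The fix is to change the dependency version to the same version as the local Windows installation.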
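
To make the mismatch visible before touching the POM, the version on the classpath can be compared with the local one. The following is only a minimal sketch: `HadoopVersionCheck` and `sameVersion` are hypothetical names, the local version is assumed to be typed in by hand (for example, copied from the output of `hadoop version`), and `org.apache.hadoop.util.VersionInfo` is looked up through reflection so the class still runs when Hadoop is not on the classpath.

```java
import java.lang.reflect.Method;
import java.util.Optional;

final class HadoopVersionCheck {

    // Reads the Hadoop version from the JARs on the classpath via reflection.
    // Returns an empty Optional when Hadoop is absent or the lookup fails.
    static Optional<String> classpathHadoopVersion() {
        try {
            Class<?> versionInfo = Class.forName("org.apache.hadoop.util.VersionInfo");
            Method getVersion = versionInfo.getMethod("getVersion");
            return Optional.ofNullable((String) getVersion.invoke(null));
        } catch (ReflectiveOperationException | LinkageError e) {
            return Optional.empty();
        }
    }

    // Hypothetical helper: true when both version strings are identical after trimming.
    static boolean sameVersion(String dependencyVersion, String localVersion) {
        return dependencyVersion != null
                && localVersion != null
                && dependencyVersion.trim().equals(localVersion.trim());
    }

    public static void main(String[] args) {
        // Assumption: the local version is passed as the first argument; 3.0.0 is only a default.
        String localVersion = args.length > 0 ? args[0] : "3.0.0";
        Optional<String> dependencyVersion = classpathHadoopVersion();
        if (!dependencyVersion.isPresent()) {
            System.out.println("Hadoop is not on the classpath; nothing to compare.");
        } else if (sameVersion(dependencyVersion.get(), localVersion)) {
            System.out.println("Versions match: " + localVersion);
        } else {
            System.out.println("Mismatch: dependency " + dependencyVersion.get()
                    + " vs local " + localVersion);
        }
    }
}
```

Run from the project, this prints the mismatch line when the two versions differ, which is the situation described above.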

The fix is as follows.

The dependencies I imported before were:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.example</groupId>

<artifactId>Mapreduce</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<!-- Set the project source encoding to UTF-8 -->

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<!-- Compile with Java 8 -->

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<!-- Hadoop version -->

<hadoop.version>3.1.2</hadoop.version>

</properties>

<!-- JAR dependencies -->

<dependencies>

<!-- Testing dependency -->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

</dependency>

<!-- Logging dependency -->

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.8.2</version>

</dependency>

<!-- Hadoop common module -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- Hadoop HDFS (distributed file system access) module -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- Hadoop client module -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

</dependencies>

</project>

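In other words, the dependencies I imported before were version 3.1.2, the same as the Hadoop on my virtual machine, while the Hadoop installed locally on Windows is 3.0.0. So I changed the imported dependencies to 3.0.0, the version I have locally: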

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.example</groupId>

<artifactId>java</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<hadoop.version>3.0.0</hadoop.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.30</version>

</dependency>

<!-- Hadoop common module -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- Hadoop HDFS (distributed file system access) module -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

</dependency>

</dependencies>

</project>
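
Changing the POM only helps if the local Windows installation is the one the JVM actually picks up. As a rough sanity check, the sketch below looks for the native files under the local Hadoop directory. It rests on assumptions that the original post does not state: that the installation is located through the `HADOOP_HOME` environment variable and that the relevant files are `bin\winutils.exe` and `bin\hadoop.dll`. `HadoopHomeCheck` and `missingNativeFiles` are hypothetical names.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

final class HadoopHomeCheck {

    // Assumption: these are the files a Windows Hadoop installation keeps under bin.
    static final String[] EXPECTED = {"winutils.exe", "hadoop.dll"};

    // Returns the names of the expected files that are missing from <hadoopHome>/bin.
    static List<String> missingNativeFiles(Path hadoopHome) {
        List<String> missing = new ArrayList<>();
        for (String name : EXPECTED) {
            if (!Files.isRegularFile(hadoopHome.resolve("bin").resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String home = System.getenv("HADOOP_HOME");
        if (home == null) {
            System.out.println("HADOOP_HOME is not set.");
            return;
        }
        List<String> missing = missingNativeFiles(Paths.get(home));
        System.out.println(missing.isEmpty()
                ? "Found winutils.exe and hadoop.dll under " + home
                : "Missing under the bin directory of " + home + ": " + missing);
    }
}
```

The check only confirms that the files exist; it cannot tell which Hadoop version they were built for, so the version in the POM still has to be matched by hand.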

Then I re-ran the classic MapReduce example, the inverted index.

The code is as follows:

package com.atguigu.mapreduce.mapreducedemo;

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class InvertedIndex {

public static class Map extends Mapper<Object, Text, Text, Text> {

private Text keyInfo = new Text(); // holds the combined "word:URL" key

private Text valueInfo = new Text(); // holds the term frequency

private FileSplit split; // holds the current FileSplit

// Implements the map function

public void map(Object key, Text value, Context context) throws IOException, InterruptedException {

// Get the FileSplit that this <key,value> pair belongs to

split = (FileSplit) context.getInputSplit();

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

// The key combines the word and the URL, e.g. "MapReduce:file1.txt"

// To use the full file path instead:

// keyInfo.set(itr.nextToken()+":"+split.getPath().toString());

// For readability, keep only the file name.

String fileName = split.getPath().getName();

keyInfo.set(itr.nextToken() + ":" + fileName);

// Initialize the term frequency to 1

valueInfo.set("1");

context.write(keyInfo, valueInfo);

}

}

}

public static class Combine extends Reducer<Text, Text, Text, Text> {

private Text info = new Text();

// Implements the reduce function

public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

// Sum the term frequency

int sum = 0;

for (Text value : values) {

sum += Integer.parseInt(value.toString());

}

int splitIndex = key.toString().indexOf(":");

// Reset the value to "URL:frequency"

info.set(key.toString().substring(splitIndex + 1) + ":" + sum);

// Reset the key to the word alone

key.set(key.toString().substring(0, splitIndex));

context.write(key, info);

}

}

public static class Reduce extends Reducer<Text, Text, Text, Text> {

private Text result = new Text();

// Implements the reduce function

public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

// Build the document list

StringBuilder fileList = new StringBuilder();

for (Text value : values) {

fileList.append(value.toString()).append(";");

}

result.set(fileList.toString());

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = Job.getInstance(conf);

job.setJarByClass(InvertedIndex.class);

// Set the Map, Combine and Reduce classes

job.setMapperClass(Map.class);

job.setCombinerClass(Combine.class);

job.setReducerClass(Reduce.class);

// Set the Map output types

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

// Set the Reduce output types

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

// Set the input and output directories

FileInputFormat.addInputPath(job, new Path("D:\\input"));

FileOutputFormat.setOutputPath(job, new Path("D:\\output"));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}
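
To see what the job is expected to write to the output directory, the three stages can be imitated in plain Java without Hadoop. This is only an illustrative sketch, not the job itself: `InvertedIndexSketch` and `build` are hypothetical names, the two sample documents are made up, and sorted maps are used so the result is deterministic, whereas the real job does not guarantee the order of the entries within a line.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

final class InvertedIndexSketch {

    // docs maps a file name to its text; the result maps each word to "file:count;" entries.
    static Map<String, String> build(Map<String, String> docs) {
        // Map + Combine stage: count occurrences per "word:file" key.
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, String> doc : docs.entrySet()) {
            StringTokenizer itr = new StringTokenizer(doc.getValue());
            while (itr.hasMoreTokens()) {
                counts.merge(itr.nextToken() + ":" + doc.getKey(), 1, Integer::sum);
            }
        }
        // Reduce stage: regroup by word and append each "file:count" followed by ";".
        Map<String, StringBuilder> index = new TreeMap<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            int splitIndex = e.getKey().indexOf(":");
            String word = e.getKey().substring(0, splitIndex);
            String file = e.getKey().substring(splitIndex + 1);
            index.computeIfAbsent(word, w -> new StringBuilder())
                 .append(file).append(":").append(e.getValue()).append(";");
        }
        Map<String, String> result = new TreeMap<>();
        index.forEach((word, list) -> result.put(word, list.toString()));
        return result;
    }

    public static void main(String[] args) {
        // Made-up sample input standing in for the files under the input directory.
        Map<String, String> docs = new LinkedHashMap<>();
        docs.put("file1.txt", "MapReduce is simple");
        docs.put("file2.txt", "MapReduce is powerful is simple");
        build(docs).forEach((word, files) -> System.out.println(word + "\t" + files));
    }
}
```

With this sample input, the word `is` maps to `file1.txt:1;file2.txt:2;`, which is the same "word, then list of file:count pairs" shape that the Reduce class above produces.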

Output:

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Process finished with exit code 0
