huggingface相关diffusers等库的下载暂不提供,可以轻易找到。

直接放代码。

import torch

import datetime

import cv2

import numpy as np

from PIL import Image

import PIL.Image

import PIL.ImageOps

from controlnet_aux import OpenposeDetector

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler, \

StableDiffusionPipeline

def canny_img_process(imagepath, low_threshold, high_threshold):

img = cv2.imread(imagepath)

image = cv2.Canny(img, low_threshold, high_threshold)

# zero_start = image.shape[1] // 4

# zero_end = zero_start + image.shape[1] // 2

# image[:, zero_start:zero_end] = 0

image = image[:, :, None]

image = np.concatenate([image, image, image], axis=2)

image = Image.fromarray(image)

return image

def openpose_img_process(imagepath):

image = PIL.Image.open(imagepath)

openpose = OpenposeDetector.from_pretrained(

# 'lllyasviel/ControlNet'

'./ControlNet_Cache/',

filename="body_pose_model.pth",

hand_filename="hand_pose_model.pth",

face_filename="facenet.pth"

)

image = PIL.ImageOps.exif_transpose(image)

image = image.convert("RGB")

image = openpose(image)

return image

def multi_control_sd(prompt, control_mode=None, images_PATH=None, low_threshold=100,

high_threshold=200, negative_prompt=None, GenNum=20, num_inference_steps=50, sketch_mode=True,

controlnet_conditioning_scale=None):

"""

:param control_mode: only support canny openpose

"""

controlnets = []

inference_img = []

for mode, image_path in zip(control_mode, images_PATH):

if mode == "canny":

controlnets.append(ControlNetModel.from_pretrained("./Control/canny/", torch_dtype=torch.float16))

cur_img = canny_img_process(image_path, low_threshold, high_threshold)

inference_img.append(cur_img)

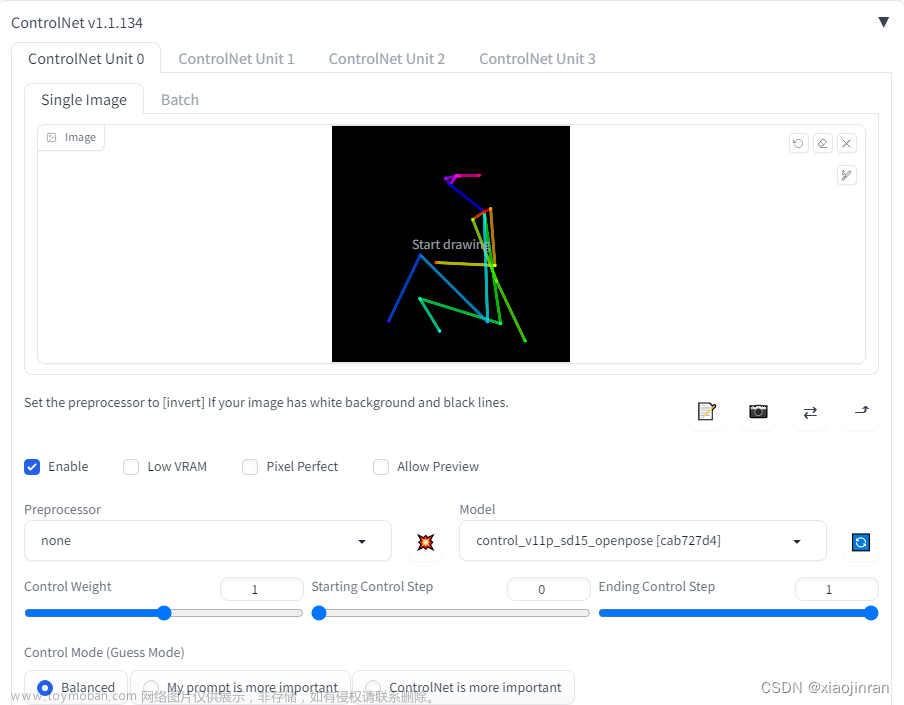

if mode == "openpose":

if sketch_mode:

controlnets.append(ControlNetModel.from_pretrained("./Control/openpose/", torch_dtype=torch.float16))

image = PIL.Image.open(image_path)

inference_img.append(image)

else:

controlnets.append(ControlNetModel.from_pretrained("./Control/openpose/", torch_dtype=torch.float16))

cur_img = openpose_img_process(image_path)

inference_img.append(cur_img)

pipe = StableDiffusionControlNetPipeline.from_pretrained("./stable-diffusion-v1-5/", controlnet=controlnets,

torch_dtype=torch.float16)

pipe = pipe.to("cuda")

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

pipe.enable_model_cpu_offload()

for index in range(GenNum):

print("第{}次生成".format(index + 1))

# random seed

seed_id = int(datetime.datetime.now().timestamp())

generator = torch.Generator("cuda").manual_seed(seed_id)

final_image = \

pipe(prompt, inference_img, num_inference_steps=num_inference_steps, negative_prompt=negative_prompt,

generator=generator, controlnet_conditioning_scale=controlnet_conditioning_scale).images[0] # PIL格式 (https://pillow.readthedocs.io/en/stable/)

time = datetime.datetime.now().strftime('%Y_%m_%d_%H_%M')

name = time + "_" + str(seed_id)

final_image.save("./control_generate_image/{}.png".format(name))

if __name__ == '__main__':

prompt = "a giant standing in a fantasy landscape, best quality"

#prompt = "a boy is watching a cute cat"

negative_prompt = "nsfw,disfigured,long neck, deformed,ugly, malformed hands,floating limbs"

control_mode = ["openpose", "canny"]

images_path = ["./person_pose.png", "./landscape.png"]

control_scale = [1.0, 0.6]

multi_control_sd(prompt, control_mode, images_path, negative_prompt=negative_prompt, num_inference_steps=399,

controlnet_conditioning_scale=control_scale)

# control_stable_diffusion(prompt, canny_mode=False, openpose_mode=True, imagepath="./ceshi.png",

# GenNum=10, num_inference_steps=50,sketch_mode=False)

# simple_stable_diffusion(prompt,negative_prompt)

以上设置都下载了相关权重文件,所以可以本地使用。在openpose处理部分需要修改源码才能实现本地部署,不然的话会连接huggingface官方,离线就不能运行了。相关操作如下:

首先进入 from_pretrained的源码,然后做如下修改:文章来源:https://www.toymoban.com/news/detail-662566.html

def from_pretrained(cls, pretrained_model_or_path, filename=None, hand_filename=None, face_filename=None, cache_dir=None):

if pretrained_model_or_path == "lllyasviel/ControlNet":

filename = filename or "annotator/ckpts/body_pose_model.pth"

hand_filename = hand_filename or "annotator/ckpts/hand_pose_model.pth"

face_filename = face_filename or "facenet.pth"

face_pretrained_model_or_path = "lllyasviel/Annotators"

else:

filename = filename or "body_pose_model.pth"

hand_filename = hand_filename or "hand_pose_model.pth"

face_filename = face_filename or "facenet.pth"

face_pretrained_model_or_path = pretrained_model_or_path

# body_model_path = hf_hub_download(pretrained_model_or_path, filename, cache_dir=cache_dir)

# hand_model_path = hf_hub_download(pretrained_model_or_path, hand_filename, cache_dir=cache_dir)

# face_model_path = hf_hub_download(face_pretrained_model_or_path, face_filename, cache_dir=cache_dir)

body_model_path = pretrained_model_or_path + filename

hand_model_path = pretrained_model_or_path + hand_filename

face_model_path = pretrained_model_or_path + face_filename

body_estimation = Body(body_model_path)

hand_estimation = Hand(hand_model_path)

face_estimation = Face(face_model_path)

return cls(body_estimation, hand_estimation, face_estimation)该部分是用来处理人体姿态的,有很多其他的方法,也可以不使用他们huggingface提供的一个方法。这样就省了这个操作。文章来源地址https://www.toymoban.com/news/detail-662566.html

到了这里,关于多重controlnet控制(使用huggingface提供的API)的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!