I. Introduction to nfs-client-provisioner

Kubernetes has no built-in provisioner for NFS storage, but you can configure an external provisioner for NFS in the cluster.

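nfs-client-provisioner is an open-source external NFS provisioner. It uses an NFS server to provide persistent storage for a Kubernetes cluster and supports dynamic provisioning of PVs. It does not provide NFS itself, so an existing NFS server must supply the storage. Directories for persistent volumes are named ${namespace}-${pvcName}-${pvName}.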
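To make the naming rule concrete, here is a minimal shell sketch. The function name `pv_dir_name` is hypothetical and only illustrates the pattern; the provisioner builds this name internally.

```shell
# Hypothetical helper: builds the directory name expected on the NFS share
# for a given namespace, PVC name, and PV name.
pv_dir_name() {
  # $1 = namespace, $2 = PVC name, $3 = PV name
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Values taken from the PVC created in section 3.4
pv_dir_name test nfs-pvc pvc-791dc175-c068-4977-a14b-02f8cb153bc3
```

The call prints test-nfs-pvc-pvc-791dc175-c068-4977-a14b-02f8cb153bc3, which is the directory name that shows up on the NFS share in section 3.4.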

External NFS provisioners for K8S fall into two categories, depending on whether they act as an NFS server or as an NFS client:

nfs-client:

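It mounts the remote NFS server to a local directory through the built-in K8S NFS driver and then registers itself as a storage provider associated with a StorageClass. When a user creates a PVC to request a PV, the provider compares the PVC's requirements with its own properties. If they match, it creates a subdirectory for the PV inside the locally mounted NFS directory, which gives Pods dynamically provisioned storage.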

nfs-server:

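Unlike nfs-client, this driver does not use the K8S NFS driver to mount a remote NFS share locally and allocate from it. Instead, it maps local files directly into the container and runs ganesha.nfsd inside the container to serve NFS. Each time a PV is created, it creates the corresponding folder in the local NFS root directory and exports that subdirectory.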

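This article shows how to use nfs-client-provisioner with an NFS server as the persistent storage backend for Kubernetes, with PVs provisioned dynamically. The prerequisites are an installed NFS server and network connectivity between that server and the Kubernetes worker nodes. The nfs-client driver is deployed into the K8S cluster as a Deployment and then provides storage to the cluster.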

II. Preparing the NFS server

2.0 Current environment

[root@master1 ~]# kubectl get nodes -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master1.k8s.test Ready <none> 5d21h v1.22.17 10.140.20.141 <none> CentOS Linux 7 (Core) 6.3.2-1.el7.elrepo.x86_64 docker://19.3.15

master2.k8s.test Ready <none> 5d21h v1.22.17 10.140.20.142 <none> CentOS Linux 7 (Core) 6.3.2-1.el7.elrepo.x86_64 docker://19.3.15

master3.k8s.test Ready <none> 5d21h v1.22.17 10.140.20.143 <none> CentOS Linux 7 (Core) 6.3.2-1.el7.elrepo.x86_64 docker://19.3.15

node1.k8s.test Ready <none> 5d21h v1.22.17 10.140.20.156 <none> CentOS Linux 7 (Core) 6.3.2-1.el7.elrepo.x86_64 docker://19.3.15

2.1 Install the NFS server with yum

[root@master1 ~]# rpm -qa|egrep "nfs|rpc"

[root@master1 ~]# yum -y install nfs-utils rpcbind

2.2 Start the services and enable them at boot

# Start nfs-server and enable it at boot

[root@master1 ~]# systemctl start rpcbind.service

[root@master1 ~]# systemctl enable rpcbind.service

[root@master1 ~]# systemctl start nfs

[root@master1 ~]# systemctl enable nfs-server --now

# Check that the NFS server started correctly

[root@master1 ~]# systemctl status nfs-server

2.3 Edit the configuration file and set the shared directory

[root@master1 ~]# mkdir -p /data/nfs_provisioner

[root@master1 ~]# cat > /etc/exports <<EOF

/data/nfs_provisioner 10.140.20.0/24(rw,no_root_squash)

EOF

# The configuration takes effect without restarting the NFS service

[root@master1 ~]# exportfs -arv

exporting 10.140.20.0/24:/data/nfs_provisioner
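Because the exported path must exist on the server, a quick check can catch a mismatch between the directory that was created and the path written to /etc/exports. The sketch below is an assumption-laden helper, not part of nfs-utils: the name `missing_export_dirs` is hypothetical, and it assumes exported paths contain no spaces.

```shell
# Hypothetical helper: prints every exported path in an exports file that
# does not exist as a directory. Comment lines and blank lines are skipped.
missing_export_dirs() {
  # $1 = path to the exports file (defaults to /etc/exports)
  awk '!/^[ \t]*(#|$)/ {print $1}' "${1:-/etc/exports}" |
  while read -r dir; do
    [ -d "$dir" ] || echo "$dir"
  done
}

# Usage on the NFS server (prints nothing when all paths exist):
#   missing_export_dirs /etc/exports
```

If the helper prints a path, create that directory (or correct the entry) and run exportfs -arv again.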

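Parameters used in the NFS export configuration file:

| Parameter | Meaning |
|---|---|
| ro | Read-only |
| rw | Read-write |
| root_squash | When the NFS client accesses the share as root, map it to the anonymous user on the NFS server |
| no_root_squash | When the NFS client accesses the share as root, map it to root on the NFS server |
| all_squash | Map every client account to the anonymous user on the NFS server, regardless of which account is used |
| sync | Write data to memory and disk at the same time, so that no data is lost |
| async | Buffer data in memory first and write it to disk later; faster, but data can be lost |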

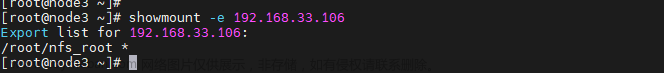

2.4 Client test

The client needs nfs-utils installed:

[root@master1 ~]# yum -y install nfs-utils

[root@master1 ~]# systemctl enable nfs --now

[root@master1 ~]# systemctl status nfs

Verify from the client:

[root@master2 ~]# showmount -e 10.140.20.141

Export list for 10.140.20.141:

/data/nfs_provisioner 10.140.20.0/24
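The same verification can be scripted, for example to run it on every node. The following is a small sketch; `export_listed` is a hypothetical name, and it assumes the output format shown above (one header line, then one export per line).

```shell
# Hypothetical helper: succeeds if the given path appears in the
# `showmount -e` style output read from standard input.
export_listed() {
  # $1 = exported path to look for; the first input line is the header
  awk -v want="$1" 'NR > 1 && $1 == want {found = 1} END {exit !found}'
}

# Usage on a client node:
#   showmount -e 10.140.20.141 | export_listed /data/nfs_provisioner && echo OK
```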

III. Deploying nfs-provisioner

3.0 Create the namespace and working directory

[root@master1 ~]# kubectl create namespace test

[root@master1 ~]# mkdir nfs-provisioner

[root@master1 ~]# cd nfs-provisioner

3.1 Create the ServiceAccount and RBAC rules

[root@master1 nfs-provisioner]# cat > nfs-sa.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: test
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: test
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: test
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: test
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: test
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
EOF

Apply the manifest:

[root@master1 nfs-provisioner]# kubectl apply -f nfs-sa.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
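One way to confirm that the bindings took effect is to ask the API server what the ServiceAccount is allowed to do. The sketch below wraps kubectl auth can-i with impersonation. The wrapper name `sa_can_i` is hypothetical, and the command needs a kubeconfig that is allowed to impersonate ServiceAccounts (a cluster admin normally is).

```shell
# Hypothetical helper: asks the API server whether the provisioner's
# ServiceAccount may perform a verb on a resource.
sa_can_i() {
  # $1 = verb, $2 = resource; namespace and account name follow nfs-sa.yaml
  kubectl auth can-i "$1" "$2" \
    --as=system:serviceaccount:test:nfs-client-provisioner
}

# Usage:
#   sa_can_i create persistentvolumes
#   sa_can_i update persistentvolumeclaims
```

Each call should answer yes for the verbs granted in the ClusterRole above; a no usually means the namespace in the binding does not match the ServiceAccount's namespace.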

3.2 Create the Deployment

[root@master1 nfs-provisioner]# cat > nfs-deployment.yaml << EOF
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-beijing.aliyuncs.com/mydlq/nfs-subdir-external-provisioner:v4.0.0
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: nfs-provisioner # must match the provisioner field of the StorageClass in section 3.3
            - name: NFS_SERVER
              value: 10.140.20.141
            - name: NFS_PATH
              value: /data/nfs_provisioner
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.140.20.141
            path: /data/nfs_provisioner
EOF

Apply the manifest:

[root@master1 nfs-provisioner]# kubectl apply -f nfs-deployment.yaml

deployment.apps/nfs-client-provisioner created
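Before creating a StorageClass it can help to confirm that the provisioner Pod actually started, since a wrong NFS server address or path typically shows up here as a Pod stuck in ContainerCreating. A minimal sketch, with the hypothetical wrapper name `check_provisioner` and an arbitrary 120-second timeout:

```shell
# Hypothetical helper: waits for the provisioner Deployment to become ready,
# then prints its most recent log lines.
check_provisioner() {
  kubectl rollout status deployment/nfs-client-provisioner -n test --timeout=120s &&
  kubectl logs deployment/nfs-client-provisioner -n test --tail=20
}

# Usage:
#   check_provisioner
```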

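3.3 Create the StorageClass

[root@master1 nfs-provisioner]# cat > nfs-sc.yaml << EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  annotations:
    # Kubernetes itself only acts on "is-default-class"; this annotation is informational
    storageclass.kubernetes.io/is-test-class: "true"
  name: nfs-storage
  namespace: test
provisioner: nfs-provisioner # must match PROVISIONER_NAME in nfs-deployment.yaml
volumeBindingMode: Immediate
reclaimPolicy: Delete
EOF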

Apply the manifest:

[root@master1 nfs-provisioner]# kubectl apply -f nfs-sc.yaml

storageclass.storage.k8s.io/nfs-storage created
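The StorageClass only works if its provisioner field equals the PROVISIONER_NAME passed to the Deployment. The sketch below compares the two manifests offline. All three function names are hypothetical, and the parsing is a simple awk match that assumes the manifest layout used in this article rather than a general YAML parser.

```shell
# Hypothetical helper: reads the top-level "provisioner:" key of a StorageClass manifest.
sc_provisioner() {
  awk '/^provisioner:/ {print $2; exit}' "$1"
}

# Hypothetical helper: reads the value that follows the PROVISIONER_NAME env entry.
deploy_provisioner() {
  awk '/name: *PROVISIONER_NAME/ {found = 1; next}
       found && /value:/ {sub(/#.*/, ""); print $2; exit}' "$1"
}

# Succeeds only if both names are present and identical.
provisioner_names_match() {
  # $1 = StorageClass manifest, $2 = Deployment manifest
  sc_name=$(sc_provisioner "$1")
  [ -n "$sc_name" ] && [ "$sc_name" = "$(deploy_provisioner "$2")" ]
}

# Usage:
#   provisioner_names_match nfs-sc.yaml nfs-deployment.yaml && echo "names match"
```

When the names differ, PVCs that reference the StorageClass stay in Pending because no provisioner claims them.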

3.4 Create the PVC

[root@master1 nfs-provisioner]# cat > nfs-pvc.yaml << EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
  namespace: test
  labels:
    app: nfs-pvc
spec:
  accessModes: # access mode
    - ReadWriteOnce
  volumeMode: Filesystem # volume mode
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs-storage # name of the StorageClass created above
EOF

# Create the PVC

[root@master1 nfs-provisioner]# kubectl apply -n test -f nfs-pvc.yaml

persistentvolumeclaim/nfs-pvc created

# List the PVCs

[root@master1 nfs-provisioner]# kubectl get pvc -n test

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nfs-pvc Bound pvc-791dc175-c068-4977-a14b-02f8cb153bc3 2Gi RWO nfs-storage 8s

www-web-0 Bound pvc-b666f81e-9723-4e88-8e81-157b9e081577 10Mi RWO nfs-storage 17m

www-web-1 Bound pvc-a900806b-47e0-432e-81fd-865c5ff6e3ba 10Mi RWO nfs-storage 16m

# List the NFS shared directory

[root@master1 nfs-provisioner]# ls /data/nfs_provisioner/

test-nfs-pvc-pvc-791dc175-c068-4977-a14b-02f8cb153bc3

test-www-web-0-pvc-b666f81e-9723-4e88-8e81-157b9e081577

test-www-web-1-pvc-a900806b-47e0-432e-81fd-865c5ff6e3ba

# Summary: when a PVC references the StorageClass, a PV is created and bound to it automatically
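Binding is not always instantaneous, so a script that creates a PVC may want to wait for it. The sketch below polls the PVC phase with kubectl get and a jsonpath expression. The function name `wait_pvc_bound` and the 60-second default timeout are assumptions for illustration.

```shell
# Hypothetical helper: polls a PVC once per second until its phase is Bound
# or the timeout expires.
wait_pvc_bound() {
  # $1 = PVC name, $2 = namespace, $3 = timeout in seconds (default 60)
  remaining=${3:-60}
  while [ "$remaining" -gt 0 ]; do
    phase=$(kubectl get pvc "$1" -n "$2" -o jsonpath='{.status.phase}')
    [ "$phase" = "Bound" ] && return 0
    sleep 1
    remaining=$((remaining - 1))
  done
  echo "PVC $2/$1 is still ${phase:-unknown} after timeout" >&2
  return 1
}

# Usage:
#   wait_pvc_bound nfs-pvc test 60 && echo "bound"
```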

IV. Creating an application to test dynamic PV provisioning

4.1 Create an nginx application

[root@master1 nfs-provisioner]# cat > nginx_sts_pvc.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: test
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
  namespace: test
spec:
  serviceName: "nginx"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "nfs-storage" # use the StorageClass created above
        resources:
          requests:
            storage: 10Mi
EOF

Apply the manifest:

[root@master1 nfs-provisioner]# kubectl apply -f nginx_sts_pvc.yaml

service/nginx created

statefulset.apps/web created
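A StatefulSet creates one PVC per replica from each volumeClaimTemplate, named after the template, the StatefulSet, and the replica ordinal. The short sketch below lists the names to expect; `sts_pvc_names` is a hypothetical helper used only to illustrate the pattern.

```shell
# Hypothetical helper: prints the PVC names a StatefulSet generates from
# one volumeClaimTemplate.
sts_pvc_names() {
  # $1 = volumeClaimTemplate name, $2 = StatefulSet name, $3 = replicas
  i=0
  while [ "$i" -lt "$3" ]; do
    printf '%s-%s-%s\n' "$1" "$2" "$i"
    i=$((i + 1))
  done
}

# Values from nginx_sts_pvc.yaml
sts_pvc_names www web 2
```

For this manifest the output is www-web-0 and www-web-1, the two claims listed by kubectl get pvc -n test.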

4.2 Check the results

Check the Deployment and StatefulSet status:

[root@master1 nfs-provisioner]# kubectl get sts,deploy -n test

NAME READY AGE

statefulset.apps/web 2/2 4m49s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nfs-client-provisioner 1/1 1 1 15m

Check the Pod status:

[root@master1 nfs-provisioner]# kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-fb55999fb-pcrqt 1/1 Running 0 9m30s

web-0 1/1 Running 0 3m40s

web-1 1/1 Running 0 3m15s

Check that the PVCs are bound and that the PVs were created:

[root@master1 nfs-provisioner]# kubectl get pvc,pv -n test

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/nfs-pvc Bound pvc-791dc175-c068-4977-a14b-02f8cb153bc3 2Gi RWO nfs-storage 2m45s

persistentvolumeclaim/www-web-0 Bound pvc-b666f81e-9723-4e88-8e81-157b9e081577 10Mi RWO nfs-storage 19m

persistentvolumeclaim/www-web-1 Bound pvc-a900806b-47e0-432e-81fd-865c5ff6e3ba 10Mi RWO nfs-storage 19m

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-791dc175-c068-4977-a14b-02f8cb153bc3 2Gi RWO Delete Bound test/nfs-pvc nfs-storage 2m45s

persistentvolume/pvc-a900806b-47e0-432e-81fd-865c5ff6e3ba 10Mi RWO Delete Bound test/www-web-1 nfs-storage 19m

persistentvolume/pvc-b666f81e-9723-4e88-8e81-157b9e081577 10Mi RWO Delete Bound test/www-web-0 nfs-storage 19m

[root@master1 nfs-provisioner]# kubectl exec -it -n test web-0 -- bash

root@web-0:/# echo 1 > /usr/share/nginx/html/1.txt

root@web-0:/# exit

[root@master1 nfs-provisioner]# ls /data/nfs_provisioner/

test-nfs-pvc-pvc-791dc175-c068-4977-a14b-02f8cb153bc3

test-www-web-0-pvc-b666f81e-9723-4e88-8e81-157b9e081577

test-www-web-1-pvc-a900806b-47e0-432e-81fd-865c5ff6e3ba

[root@master1 nfs-provisioner]# cat /data/nfs_provisioner/test-www-web-0-pvc-b666f81e-9723-4e88-8e81-157b9e081577/1.txt

1
