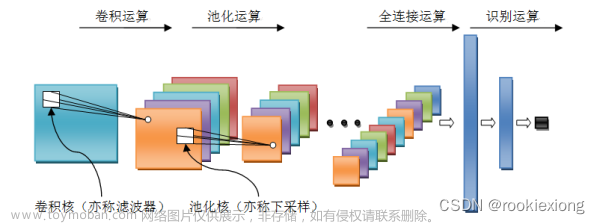

Convolution, Downsampling, and Classic Convolutional Networks

1. Applying Convolution to an Image

```python
import cv2
import numpy as np

# Convolve each of the three channels separately, then stack them back together
def conv(image, kernel):
    conv_b = convolve(image[:, :, 0], kernel)
    conv_g = convolve(image[:, :, 1], kernel)
    conv_r = convolve(image[:, :, 2], kernel)
    output = np.dstack([conv_b, conv_g, conv_r])
    return output

# Single-channel "valid" convolution (no padding, stride 1).
# Like CNN layers, this is cross-correlation (the kernel is not flipped).
def convolve(image, kernel):
    h_kernel, w_kernel = kernel.shape
    h_image, w_image = image.shape
    h_output = h_image - h_kernel + 1
    w_output = w_image - w_kernel + 1
    # Accumulate in float so negative or >255 sums are not wrapped around by uint8
    output = np.zeros((h_output, w_output), np.float64)
    for i in range(h_output):
        for j in range(w_output):
            output[i, j] = np.multiply(image[i:i + h_kernel, j:j + w_kernel], kernel).sum()
    # Clip back into the valid 8-bit pixel range
    return np.clip(output, 0, 255).astype(np.uint8)

if __name__ == '__main__':
    path = 'data/instance/p67.jpg'
    input_img = cv2.imread(path)  # BGR, uint8
    # 1. Sharpening kernel
    # kernel = np.array([[-1, -1, -1], [-1, 9, -1], [-1, -1, -1]])
    # 2. Blur kernel (weights sum to 1, so overall brightness is preserved)
    kernel = np.array([[0.1, 0.1, 0.1], [0.1, 0.2, 0.1], [0.1, 0.1, 0.1]])
    output_img = conv(input_img, kernel)
    cv2.imwrite(path.replace('.jpg', '-processed.jpg'), output_img)
    cv2.imshow('Output Image', output_img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
```
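The nested Python loops are easy to follow, but they are slow on a full-size photo. The following is a minimal vectorized sketch of the same "valid", stride-1 cross-correlation. It assumes NumPy 1.20 or later for `sliding_window_view`, and the name `convolve_fast` is hypothetical. Every kh×kw window becomes a read-only view, and one `einsum` computes all the window-kernel dot products. Unlike the loop version, this sketch rounds before converting to `uint8` instead of truncating.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # NumPy >= 1.20


def convolve_fast(image, kernel):
    """Hypothetical vectorized variant of a valid (no padding, stride 1) cross-correlation."""
    # windows[i, j] is the kh x kw patch whose top-left corner is (i, j)
    windows = sliding_window_view(image.astype(np.float64), kernel.shape)
    out = np.einsum('ijkl,kl->ij', windows, kernel)  # one dot product per window
    # Round, then clip into the 8-bit pixel range
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


img = np.arange(25, dtype=np.uint8).reshape(5, 5)
box_blur = np.full((3, 3), 1 / 9)
result = convolve_fast(img, box_blur)
print(result.shape)  # output size is (H - kh + 1, W - kw + 1)
print(result)
```

For a 3-channel image, calling this once per channel and stacking the results with `np.dstack`, as `conv` does, should produce an output of the same shape as the loop version.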

2. Pooling

```python
import cv2

img = cv2.imread('data/instance/dog.jpg')
img.shape  # (height, width, channels); OpenCV stores channels in BGR order
```

```
(4064, 3216, 3)
```

```python
import numpy as np
import cv2
import matplotlib.pyplot as plt

# Average pooling over non-overlapping windows (window size == stride)
def AVGpooling(imgData, strdW, strdH):
    rows, cols = imgData.shape  # a 2-D image array is (height, width)
    newImg = []
    for i in range(0, rows, strdW):
        line = []
        for j in range(0, cols, strdH):
            x = imgData[i:i + strdW, j:j + strdH]  # current pooling window
            avgValue = np.mean(x)                  # window mean (also correct for smaller edge windows)
            line.append(avgValue)
        newImg.append(line)
    return np.array(newImg)

# Max pooling over non-overlapping windows
def MAXpooling(imgData, strdW, strdH):
    rows, cols = imgData.shape
    newImg = []
    for i in range(0, rows, strdW):
        line = []
        for j in range(0, cols, strdH):
            x = imgData[i:i + strdW, j:j + strdH]  # current pooling window
            maxValue = np.max(x)                   # window maximum
            line.append(maxValue)
        newImg.append(line)
    return np.array(newImg)

img = cv2.imread('data/instance/dog.jpg')
imgData = img[:, :, 1]  # green channel (index 1 in both BGR and RGB)

# Original image (convert BGR -> RGB so matplotlib shows the correct colors)
plt.subplot(221)
plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
plt.axis('off')

# Original green channel
plt.subplot(222)
plt.imshow(imgData, cmap='gray')
plt.axis('off')

# Average pooling result
AVGimg = AVGpooling(imgData, 2, 2)
plt.subplot(223)
plt.imshow(AVGimg, cmap='gray')
plt.axis('off')

# Max pooling result
MAXimg = MAXpooling(imgData, 2, 2)
plt.subplot(224)
plt.imshow(MAXimg, cmap='gray')
plt.axis('off')

plt.show()
```
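With a 4064×3216 channel and 2×2 windows, the loops above run over 3 million windows per pooling call. When the stride equals the window size, the windows tile the image, so a reshape can pool them all at once. The sketch below (the helper name `pool2d` is hypothetical) crops any rows or columns that do not fill a whole window. The loop versions instead pool those partial edge windows, so the two approaches agree only when the image size is a multiple of the window size, as it is for the 4064×3216 photo.

```python
import numpy as np


def pool2d(img, size, mode="max"):
    """Hypothetical non-overlapping size x size pooling (stride == window size) via reshape."""
    h, w = img.shape
    h_c, w_c = h - h % size, w - w % size  # crop to a multiple of `size`
    # blocks[bi, i, bj, j] == img[bi * size + i, bj * size + j]
    blocks = img[:h_c, :w_c].reshape(h_c // size, size, w_c // size, size)
    if mode == "max":
        return blocks.max(axis=(1, 3))
    return blocks.mean(axis=(1, 3))


a = np.array([[1, 2, 5, 6],
              [3, 4, 7, 8],
              [9, 10, 13, 14],
              [11, 12, 15, 16]])
print(pool2d(a, 2, "max"))   # 2x2 result: 4, 8 / 12, 16
print(pool2d(a, 2, "mean"))  # 2x2 result: 2.5, 6.5 / 10.5, 14.5
```

Applied to the green channel above, `pool2d(imgData, 2)` would give a 2032×1608 array, half the size in each direction.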

3. VGGNet: Visualizing VGG16 Feature Maps

```python
import math

import numpy as np
import matplotlib.pyplot as plt
from tensorflow.keras import backend as K
from tensorflow.keras.applications import vgg16  # VGG16 is built into Keras and can be used directly
from tensorflow.keras.applications.vgg16 import preprocess_input
from tensorflow.keras.preprocessing import image

input_size = 224    # network input size (width = height)
kernel_size = 64    # display size of visualized kernels (width = height)
layer_vis = True    # visualize feature maps?
kernel_vis = True   # visualize convolution kernels?
each_layer = False  # visualize kernels for every conv layer?
which_layer = 1     # if not every layer, which conv layer to visualize

path = 'data/instance/p67.jpg'
img = image.load_img(path, target_size=(input_size, input_size))
img = image.img_to_array(img)
img = np.expand_dims(img, axis=0)  # add batch dimension: (1, 224, 224, 3)
img = preprocess_input(img)        # VGG16-specific input normalization

model = vgg16.VGG16(include_top=True, weights='imagenet')
```
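The "normalization" done by `preprocess_input` is worth spelling out. In Keras, `vgg16.preprocess_input` (like `resnet50.preprocess_input` in the next section) uses the "caffe" convention: it flips RGB to BGR and subtracts the ImageNet per-channel mean, without scaling to [0, 1]. Below is a NumPy sketch of that behavior for channels-last input. The function name `caffe_preprocess` is hypothetical and this is not the Keras source; the mean values are the ones Keras uses for this mode.

```python
import numpy as np

# ImageNet channel means in B, G, R order, as used by Keras' "caffe" preprocessing mode
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68])


def caffe_preprocess(batch_rgb):
    """Hypothetical re-implementation: RGB -> BGR, then zero-center each channel (no scaling)."""
    bgr = batch_rgb[..., ::-1].astype(np.float64)  # reverse the last (channel) axis
    return bgr - IMAGENET_BGR_MEAN


# One RGB pixel equal to the ImageNet mean, shape (1, 1, 1, 3)
pixel = np.array([[[[123.68, 116.779, 103.939]]]])
print(caffe_preprocess(pixel))  # all zeros
```

This is also why the preprocessed `img` array contains negative values and values well above 1.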

```python
import os

def network_configuration():
    # Output channels of model.layers[1..18] (the conv and pooling layers of the 5 blocks)
    all_channels = [64, 64, 64, 128, 128, 128, 256, 256, 256, 256, 512, 512, 512, 512, 512, 512, 512, 512]
    # Spatial size of each of those outputs relative to the 224x224 input
    down_sampling = [1, 1, 1 / 2, 1 / 2, 1 / 2, 1 / 4, 1 / 4, 1 / 4, 1 / 4, 1 / 8, 1 / 8, 1 / 8, 1 / 8, 1 / 16, 1 / 16, 1 / 16, 1 / 16, 1 / 32]
    # Indices and channel counts of the convolutional layers only (for kernel visualization, not shown here)
    conv_layers = [1, 2, 4, 5, 7, 8, 9, 11, 12, 13, 15, 16, 17]
    conv_channels = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
    return all_channels, down_sampling, conv_layers, conv_channels

def layer_visualization(model, img, layer_num, channel, ds):
    # Build a function mapping the network input to the output of layer `layer_num`
    layer = K.function([model.layers[0].input], [model.layers[layer_num].output])
    f = layer([img])[0]                              # shape: (1, size, size, channel)
    feature_aspect = math.ceil(math.sqrt(channel))   # side length of the subplot grid
    single_size = int(input_size * ds)
    plt.figure(figsize=(8, 8.5))
    plt.suptitle('Layer-' + str(layer_num), fontsize=22)
    plt.subplots_adjust(left=0.02, bottom=0.02, right=0.98, top=0.94, wspace=0.05, hspace=0.05)
    for i_channel in range(channel):
        print('Channel-{} in Layer-{} is running.'.format(i_channel + 1, layer_num))
        show_img = f[:, :, :, i_channel]
        show_img = np.reshape(show_img, (single_size, single_size))
        plt.subplot(feature_aspect, feature_aspect, i_channel + 1)
        plt.imshow(show_img)
        plt.axis('off')
    fig = plt.gcf()
    out_dir = 'data/instance/feature_kernel_images'
    os.makedirs(out_dir, exist_ok=True)  # savefig fails if the folder does not exist
    fig.savefig(out_dir + '/layer_' + str(layer_num).zfill(2) + '.png', format='png', dpi=300)
    plt.show()

all_channels, down_sampling, conv_layers, conv_channels = network_configuration()

if layer_vis:
    for i in range(len(all_channels)):
        # model.layers[0] is the InputLayer, so the visualized layers are 1..18
        layer_visualization(model, img, i + 1, all_channels[i], down_sampling[i])
```
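The hard-coded `all_channels` and `down_sampling` lists in `network_configuration` follow from the standard VGG16 layout. The 3×3 convolutions use "same" padding, so they keep the height and width while setting the channel count. Each 2×2, stride-2 max-pool halves the height and width. The sketch below walks through `model.layers[1]` to `model.layers[18]` under that assumed layout. It derives the output size of each layer, which should match `int(224 * ds)` and the channel list above.

```python
# Assumed layout of VGG16 layers 1..18 (layer 0 is the InputLayer)
VGG16_LAYOUT = [("conv", 64), ("conv", 64), ("pool", None),
                ("conv", 128), ("conv", 128), ("pool", None),
                ("conv", 256), ("conv", 256), ("conv", 256), ("pool", None),
                ("conv", 512), ("conv", 512), ("conv", 512), ("pool", None),
                ("conv", 512), ("conv", 512), ("conv", 512), ("pool", None)]


def feature_map_sizes(input_size=224):
    """Return (spatial size, channels) of each layer's output for a square input."""
    size, channels, rows = input_size, 3, []
    for kind, out_channels in VGG16_LAYOUT:
        if kind == "conv":
            channels = out_channels  # 'same' padding: size unchanged, channels replaced
        else:
            size //= 2               # 2x2 max-pool with stride 2 halves height and width
        rows.append((size, channels))
    return rows


for layer_num, (size, channels) in enumerate(feature_map_sizes(), start=1):
    print(f"Layer-{layer_num}: {size}x{size}x{channels}")
```

The last pooling layer leaves a 7×7×512 map, which is what the fully connected layers of VGG16 flatten.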

4. Cat and Dog Recognition with a Pretrained ResNet

```python
import numpy as np
from tensorflow.keras.applications.resnet50 import ResNet50, preprocess_input, decode_predictions
from tensorflow.keras.preprocessing import image

img_path = 'data/instance/dog.jpg'  # classify a dog
# img_path = 'cat.jpg'   # classify a cat
# img_path = 'deer.jpg'  # classify a deer
weights_path = 'resnet50_weights.h5'  # local ImageNet weights; weights='imagenet' would download them instead

img = image.load_img(img_path, target_size=(224, 224))
x = image.img_to_array(img)
x = np.expand_dims(x, axis=0)  # add batch dimension: (1, 224, 224, 3)
x = preprocess_input(x)

def get_model():
    # Build ResNet50 and load the pretrained weights
    model = ResNet50(weights=weights_path)
    model.summary()  # print an overview of the model
    return model

model = get_model()
```

```
Model: "resnet50"
__________________________________________________________________________________________________
 Layer (type)                            Output Shape            Param #    Connected to
==================================================================================================
 input_4 (InputLayer)                    [(None, 224, 224, 3)]   0          []
 conv1_pad (ZeroPadding2D)               (None, 230, 230, 3)     0          ['input_4[0][0]']
 conv1_conv (Conv2D)                     (None, 112, 112, 64)    9472       ['conv1_pad[0][0]']
 conv1_bn (BatchNormalization)           (None, 112, 112, 64)    256        ['conv1_conv[0][0]']
 conv1_relu (Activation)                 (None, 112, 112, 64)    0          ['conv1_bn[0][0]']
 pool1_pad (ZeroPadding2D)               (None, 114, 114, 64)    0          ['conv1_relu[0][0]']
 pool1_pool (MaxPooling2D)               (None, 56, 56, 64)      0          ['pool1_pad[0][0]']
 conv2_block1_1_conv (Conv2D)            (None, 56, 56, 64)      4160       ['pool1_pool[0][0]']
 conv2_block1_1_bn (BatchNormalization)  (None, 56, 56, 64)      256        ['conv2_block1_1_conv[0][0]']
 conv2_block1_1_relu (Activation)        (None, 56, 56, 64)      0          ['conv2_block1_1_bn[0][0]']
 conv2_block1_2_conv (Conv2D)            (None, 56, 56, 64)      36928      ['conv2_block1_1_relu[0][0]']
 conv2_block1_2_bn (BatchNormalization)  (None, 56, 56, 64)      256        ['conv2_block1_2_conv[0][0]']
 conv2_block1_2_relu (Activation)        (None, 56, 56, 64)      0          ['conv2_block1_2_bn[0][0]']
 conv2_block1_0_conv (Conv2D)            (None, 56, 56, 256)     16640      ['pool1_pool[0][0]']
 conv2_block1_3_conv (Conv2D)            (None, 56, 56, 256)     16640      ['conv2_block1_2_relu[0][0]']
 conv2_block1_0_bn (BatchNormalization)  (None, 56, 56, 256)     1024       ['conv2_block1_0_conv[0][0]']
 conv2_block1_3_bn (BatchNormalization)  (None, 56, 56, 256)     1024       ['conv2_block1_3_conv[0][0]']
 conv2_block1_add (Add)                  (None, 56, 56, 256)     0          ['conv2_block1_0_bn[0][0]', 'conv2_block1_3_bn[0][0]']
 conv2_block1_out (Activation)           (None, 56, 56, 256)     0          ['conv2_block1_add[0][0]']
 conv2_block2_1_conv (Conv2D)            (None, 56, 56, 64)      16448      ['conv2_block1_out[0][0]']
 conv2_block2_1_bn (BatchNormalization)  (None, 56, 56, 64)      256        ['conv2_block2_1_conv[0][0]']
 conv2_block2_1_relu (Activation)        (None, 56, 56, 64)      0          ['conv2_block2_1_bn[0][0]']
 conv2_block2_2_conv (Conv2D)            (None, 56, 56, 64)      36928      ['conv2_block2_1_relu[0][0]']
 conv2_block2_2_bn (BatchNormalization)  (None, 56, 56, 64)      256        ['conv2_block2_2_conv[0][0]']
 conv2_block2_2_relu (Activation)        (None, 56, 56, 64)      0          ['conv2_block2_2_bn[0][0]']
 conv2_block2_3_conv (Conv2D)            (None, 56, 56, 256)     16640      ['conv2_block2_2_relu[0][0]']
 conv2_block2_3_bn (BatchNormalization)  (None, 56, 56, 256)     1024       ['conv2_block2_3_conv[0][0]']
 conv2_block2_add (Add)                  (None, 56, 56, 256)     0          ['conv2_block1_out[0][0]', 'conv2_block2_3_bn[0][0]']
 conv2_block2_out (Activation)           (None, 56, 56, 256)     0          ['conv2_block2_add[0][0]']
 conv2_block3_1_conv (Conv2D)            (None, 56, 56, 64)      16448      ['conv2_block2_out[0][0]']
 conv2_block3_1_bn (BatchNormalization)  (None, 56, 56, 64)      256        ['conv2_block3_1_conv[0][0]']
 conv2_block3_1_relu (Activation)        (None, 56, 56, 64)      0          ['conv2_block3_1_bn[0][0]']
 conv2_block3_2_conv (Conv2D)            (None, 56, 56, 64)      36928      ['conv2_block3_1_relu[0][0]']
 conv2_block3_2_bn (BatchNormalization)  (None, 56, 56, 64)      256        ['conv2_block3_2_conv[0][0]']
 conv2_block3_2_relu (Activation)        (None, 56, 56, 64)      0          ['conv2_block3_2_bn[0][0]']
 conv2_block3_3_conv (Conv2D)            (None, 56, 56, 256)     16640      ['conv2_block3_2_relu[0][0]']
 conv2_block3_3_bn (BatchNormalization)  (None, 56, 56, 256)     1024       ['conv2_block3_3_conv[0][0]']
 conv2_block3_add (Add)                  (None, 56, 56, 256)     0          ['conv2_block2_out[0][0]', 'conv2_block3_3_bn[0][0]']
 conv2_block3_out (Activation)           (None, 56, 56, 256)     0          ['conv2_block3_add[0][0]']
 conv3_block1_1_conv (Conv2D)            (None, 28, 28, 128)     32896      ['conv2_block3_out[0][0]']
 conv3_block1_1_bn (BatchNormalization)  (None, 28, 28, 128)     512        ['conv3_block1_1_conv[0][0]']
 conv3_block1_1_relu (Activation)        (None, 28, 28, 128)     0          ['conv3_block1_1_bn[0][0]']
 conv3_block1_2_conv (Conv2D)            (None, 28, 28, 128)     147584     ['conv3_block1_1_relu[0][0]']
 conv3_block1_2_bn (BatchNormalization)  (None, 28, 28, 128)     512        ['conv3_block1_2_conv[0][0]']
 conv3_block1_2_relu (Activation)        (None, 28, 28, 128)     0          ['conv3_block1_2_bn[0][0]']
 conv3_block1_0_conv (Conv2D)            (None, 28, 28, 512)     131584     ['conv2_block3_out[0][0]']
 conv3_block1_3_conv (Conv2D)            (None, 28, 28, 512)     66048      ['conv3_block1_2_relu[0][0]']
 conv3_block1_0_bn (BatchNormalization)  (None, 28, 28, 512)     2048       ['conv3_block1_0_conv[0][0]']
 conv3_block1_3_bn (BatchNormalization)  (None, 28, 28, 512)     2048       ['conv3_block1_3_conv[0][0]']
 conv3_block1_add (Add)                  (None, 28, 28, 512)     0          ['conv3_block1_0_bn[0][0]', 'conv3_block1_3_bn[0][0]']
 conv3_block1_out (Activation)           (None, 28, 28, 512)     0          ['conv3_block1_add[0][0]']
 conv3_block2_1_conv (Conv2D)            (None, 28, 28, 128)     65664      ['conv3_block1_out[0][0]']
 conv3_block2_1_bn (BatchNormalization)  (None, 28, 28, 128)     512        ['conv3_block2_1_conv[0][0]']
 conv3_block2_1_relu (Activation)        (None, 28, 28, 128)     0          ['conv3_block2_1_bn[0][0]']
 conv3_block2_2_conv (Conv2D)            (None, 28, 28, 128)     147584     ['conv3_block2_1_relu[0][0]']
 conv3_block2_2_bn (BatchNormalization)  (None, 28, 28, 128)     512        ['conv3_block2_2_conv[0][0]']
 conv3_block2_2_relu (Activation)        (None, 28, 28, 128)     0          ['conv3_block2_2_bn[0][0]']
 conv3_block2_3_conv (Conv2D)            (None, 28, 28, 512)     66048      ['conv3_block2_2_relu[0][0]']
 conv3_block2_3_bn (BatchNormalization)  (None, 28, 28, 512)     2048       ['conv3_block2_3_conv[0][0]']
 conv3_block2_add (Add)                  (None, 28, 28, 512)     0          ['conv3_block1_out[0][0]', 'conv3_block2_3_bn[0][0]']
 conv3_block2_out (Activation)           (None, 28, 28, 512)     0          ['conv3_block2_add[0][0]']
 conv3_block3_1_conv (Conv2D)            (None, 28, 28, 128)     65664      ['conv3_block2_out[0][0]']
 conv3_block3_1_bn (BatchNormalization)  (None, 28, 28, 128)     512        ['conv3_block3_1_conv[0][0]']
 conv3_block3_1_relu (Activation)        (None, 28, 28, 128)     0          ['conv3_block3_1_bn[0][0]']
 conv3_block3_2_conv (Conv2D)            (None, 28, 28, 128)     147584     ['conv3_block3_1_relu[0][0]']
 conv3_block3_2_bn (BatchNormalization)  (None, 28, 28, 128)     512        ['conv3_block3_2_conv[0][0]']
 conv3_block3_2_relu (Activation)        (None, 28, 28, 128)     0          ['conv3_block3_2_bn[0][0]']
 conv3_block3_3_conv (Conv2D)            (None, 28, 28, 512)     66048      ['conv3_block3_2_relu[0][0]']
 conv3_block3_3_bn (BatchNormalization)  (None, 28, 28, 512)     2048       ['conv3_block3_3_conv[0][0]']
 conv3_block3_add (Add)                  (None, 28, 28, 512)     0          ['conv3_block2_out[0][0]', 'conv3_block3_3_bn[0][0]']
 conv3_block3_out (Activation)           (None, 28, 28, 512)     0          ['conv3_block3_add[0][0]']
 conv3_block4_1_conv (Conv2D)            (None, 28, 28, 128)     65664      ['conv3_block3_out[0][0]']
 conv3_block4_1_bn (BatchNormalization)  (None, 28, 28, 128)     512        ['conv3_block4_1_conv[0][0]']
 conv3_block4_1_relu (Activation)        (None, 28, 28, 128)     0          ['conv3_block4_1_bn[0][0]']
 conv3_block4_2_conv (Conv2D)            (None, 28, 28, 128)     147584     ['conv3_block4_1_relu[0][0]']
 conv3_block4_2_bn (BatchNormalization)  (None, 28, 28, 128)     512        ['conv3_block4_2_conv[0][0]']
 conv3_block4_2_relu (Activation)        (None, 28, 28, 128)     0          ['conv3_block4_2_bn[0][0]']
 conv3_block4_3_conv (Conv2D)            (None, 28, 28, 512)     66048      ['conv3_block4_2_relu[0][0]']
 conv3_block4_3_bn (BatchNormalization)  (None, 28, 28, 512)     2048       ['conv3_block4_3_conv[0][0]']
 conv3_block4_add (Add)                  (None, 28, 28, 512)     0          ['conv3_block3_out[0][0]', 'conv3_block4_3_bn[0][0]']
 conv3_block4_out (Activation)           (None, 28, 28, 512)     0          ['conv3_block4_add[0][0]']
 conv4_block1_1_conv (Conv2D)            (None, 14, 14, 256)     131328     ['conv3_block4_out[0][0]']
 conv4_block1_1_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block1_1_conv[0][0]']
 conv4_block1_1_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block1_1_bn[0][0]']
 conv4_block1_2_conv (Conv2D)            (None, 14, 14, 256)     590080     ['conv4_block1_1_relu[0][0]']
 conv4_block1_2_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block1_2_conv[0][0]']
 conv4_block1_2_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block1_2_bn[0][0]']
 conv4_block1_0_conv (Conv2D)            (None, 14, 14, 1024)    525312     ['conv3_block4_out[0][0]']
 conv4_block1_3_conv (Conv2D)            (None, 14, 14, 1024)    263168     ['conv4_block1_2_relu[0][0]']
 conv4_block1_0_bn (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv4_block1_0_conv[0][0]']
 conv4_block1_3_bn (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv4_block1_3_conv[0][0]']
 conv4_block1_add (Add)                  (None, 14, 14, 1024)    0          ['conv4_block1_0_bn[0][0]', 'conv4_block1_3_bn[0][0]']
 conv4_block1_out (Activation)           (None, 14, 14, 1024)    0          ['conv4_block1_add[0][0]']
 conv4_block2_1_conv (Conv2D)            (None, 14, 14, 256)     262400     ['conv4_block1_out[0][0]']
 conv4_block2_1_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block2_1_conv[0][0]']
 conv4_block2_1_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block2_1_bn[0][0]']
 conv4_block2_2_conv (Conv2D)            (None, 14, 14, 256)     590080     ['conv4_block2_1_relu[0][0]']
 conv4_block2_2_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block2_2_conv[0][0]']
 conv4_block2_2_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block2_2_bn[0][0]']
 conv4_block2_3_conv (Conv2D)            (None, 14, 14, 1024)    263168     ['conv4_block2_2_relu[0][0]']
 conv4_block2_3_bn (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv4_block2_3_conv[0][0]']
 conv4_block2_add (Add)                  (None, 14, 14, 1024)    0          ['conv4_block1_out[0][0]', 'conv4_block2_3_bn[0][0]']
 conv4_block2_out (Activation)           (None, 14, 14, 1024)    0          ['conv4_block2_add[0][0]']
 conv4_block3_1_conv (Conv2D)            (None, 14, 14, 256)     262400     ['conv4_block2_out[0][0]']
 conv4_block3_1_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block3_1_conv[0][0]']
 conv4_block3_1_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block3_1_bn[0][0]']
 conv4_block3_2_conv (Conv2D)            (None, 14, 14, 256)     590080     ['conv4_block3_1_relu[0][0]']
 conv4_block3_2_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block3_2_conv[0][0]']
 conv4_block3_2_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block3_2_bn[0][0]']
 conv4_block3_3_conv (Conv2D)            (None, 14, 14, 1024)    263168     ['conv4_block3_2_relu[0][0]']
 conv4_block3_3_bn (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv4_block3_3_conv[0][0]']
 conv4_block3_add (Add)                  (None, 14, 14, 1024)    0          ['conv4_block2_out[0][0]', 'conv4_block3_3_bn[0][0]']
 conv4_block3_out (Activation)           (None, 14, 14, 1024)    0          ['conv4_block3_add[0][0]']
 conv4_block4_1_conv (Conv2D)            (None, 14, 14, 256)     262400     ['conv4_block3_out[0][0]']
 conv4_block4_1_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block4_1_conv[0][0]']
 conv4_block4_1_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block4_1_bn[0][0]']
 conv4_block4_2_conv (Conv2D)            (None, 14, 14, 256)     590080     ['conv4_block4_1_relu[0][0]']
 conv4_block4_2_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block4_2_conv[0][0]']
 conv4_block4_2_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block4_2_bn[0][0]']
 conv4_block4_3_conv (Conv2D)            (None, 14, 14, 1024)    263168     ['conv4_block4_2_relu[0][0]']
 conv4_block4_3_bn (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv4_block4_3_conv[0][0]']
 conv4_block4_add (Add)                  (None, 14, 14, 1024)    0          ['conv4_block3_out[0][0]', 'conv4_block4_3_bn[0][0]']
 conv4_block4_out (Activation)           (None, 14, 14, 1024)    0          ['conv4_block4_add[0][0]']
 conv4_block5_1_conv (Conv2D)            (None, 14, 14, 256)     262400     ['conv4_block4_out[0][0]']
 conv4_block5_1_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block5_1_conv[0][0]']
 conv4_block5_1_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block5_1_bn[0][0]']
 conv4_block5_2_conv (Conv2D)            (None, 14, 14, 256)     590080     ['conv4_block5_1_relu[0][0]']
 conv4_block5_2_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block5_2_conv[0][0]']
 conv4_block5_2_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block5_2_bn[0][0]']
 conv4_block5_3_conv (Conv2D)            (None, 14, 14, 1024)    263168     ['conv4_block5_2_relu[0][0]']
 conv4_block5_3_bn (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv4_block5_3_conv[0][0]']
 conv4_block5_add (Add)                  (None, 14, 14, 1024)    0          ['conv4_block4_out[0][0]', 'conv4_block5_3_bn[0][0]']
 conv4_block5_out (Activation)           (None, 14, 14, 1024)    0          ['conv4_block5_add[0][0]']
 conv4_block6_1_conv (Conv2D)            (None, 14, 14, 256)     262400     ['conv4_block5_out[0][0]']
 conv4_block6_1_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block6_1_conv[0][0]']
 conv4_block6_1_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block6_1_bn[0][0]']
 conv4_block6_2_conv (Conv2D)            (None, 14, 14, 256)     590080     ['conv4_block6_1_relu[0][0]']
 conv4_block6_2_bn (BatchNormalization)  (None, 14, 14, 256)     1024       ['conv4_block6_2_conv[0][0]']
 conv4_block6_2_relu (Activation)        (None, 14, 14, 256)     0          ['conv4_block6_2_bn[0][0]']
 conv4_block6_3_conv (Conv2D)            (None, 14, 14, 1024)    263168     ['conv4_block6_2_relu[0][0]']
 conv4_block6_3_bn (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv4_block6_3_conv[0][0]']
 conv4_block6_add (Add)                  (None, 14, 14, 1024)    0          ['conv4_block5_out[0][0]', 'conv4_block6_3_bn[0][0]']
 conv4_block6_out (Activation)           (None, 14, 14, 1024)    0          ['conv4_block6_add[0][0]']
 conv5_block1_1_conv (Conv2D)            (None, 7, 7, 512)       524800     ['conv4_block6_out[0][0]']
 conv5_block1_1_bn (BatchNormalization)  (None, 7, 7, 512)       2048       ['conv5_block1_1_conv[0][0]']
 conv5_block1_1_relu (Activation)        (None, 7, 7, 512)       0          ['conv5_block1_1_bn[0][0]']
 conv5_block1_2_conv (Conv2D)            (None, 7, 7, 512)       2359808    ['conv5_block1_1_relu[0][0]']
 conv5_block1_2_bn (BatchNormalization)  (None, 7, 7, 512)       2048       ['conv5_block1_2_conv[0][0]']
 conv5_block1_2_relu (Activation)        (None, 7, 7, 512)       0          ['conv5_block1_2_bn[0][0]']
 conv5_block1_0_conv (Conv2D)            (None, 7, 7, 2048)      2099200    ['conv4_block6_out[0][0]']
 conv5_block1_3_conv (Conv2D)            (None, 7, 7, 2048)      1050624    ['conv5_block1_2_relu[0][0]']
 conv5_block1_0_bn (BatchNormalization)  (None, 7, 7, 2048)      8192       ['conv5_block1_0_conv[0][0]']
 conv5_block1_3_bn (BatchNormalization)  (None, 7, 7, 2048)      8192       ['conv5_block1_3_conv[0][0]']
 conv5_block1_add (Add)                  (None, 7, 7, 2048)      0          ['conv5_block1_0_bn[0][0]', 'conv5_block1_3_bn[0][0]']
 conv5_block1_out (Activation)           (None, 7, 7, 2048)      0          ['conv5_block1_add[0][0]']
 conv5_block2_1_conv (Conv2D)            (None, 7, 7, 512)       1049088    ['conv5_block1_out[0][0]']
 conv5_block2_1_bn (BatchNormalization)  (None, 7, 7, 512)       2048       ['conv5_block2_1_conv[0][0]']
 conv5_block2_1_relu (Activation)        (None, 7, 7, 512)       0          ['conv5_block2_1_bn[0][0]']
 conv5_block2_2_conv (Conv2D)            (None, 7, 7, 512)       2359808    ['conv5_block2_1_relu[0][0]']
 conv5_block2_2_bn (BatchNormalization)  (None, 7, 7, 512)       2048       ['conv5_block2_2_conv[0][0]']
 conv5_block2_2_relu (Activation)        (None, 7, 7, 512)       0          ['conv5_block2_2_bn[0][0]']
 conv5_block2_3_conv (Conv2D)            (None, 7, 7, 2048)      1050624    ['conv5_block2_2_relu[0][0]']
 conv5_block2_3_bn (BatchNormalization)  (None, 7, 7, 2048)      8192       ['conv5_block2_3_conv[0][0]']
 conv5_block2_add (Add)                  (None, 7, 7, 2048)      0          ['conv5_block1_out[0][0]', 'conv5_block2_3_bn[0][0]']
 conv5_block2_out (Activation)           (None, 7, 7, 2048)      0          ['conv5_block2_add[0][0]']
 conv5_block3_1_conv (Conv2D)            (None, 7, 7, 512)       1049088    ['conv5_block2_out[0][0]']
 conv5_block3_1_bn (BatchNormalization)  (None, 7, 7, 512)       2048       ['conv5_block3_1_conv[0][0]']
 conv5_block3_1_relu (Activation)        (None, 7, 7, 512)       0          ['conv5_block3_1_bn[0][0]']
 conv5_block3_2_conv (Conv2D)            (None, 7, 7, 512)       2359808    ['conv5_block3_1_relu[0][0]']
 conv5_block3_2_bn (BatchNormalization)  (None, 7, 7, 512)       2048       ['conv5_block3_2_conv[0][0]']
 conv5_block3_2_relu (Activation)        (None, 7, 7, 512)       0          ['conv5_block3_2_bn[0][0]']
 conv5_block3_3_conv (Conv2D)            (None, 7, 7, 2048)      1050624    ['conv5_block3_2_relu[0][0]']
 conv5_block3_3_bn (BatchNormalization)  (None, 7, 7, 2048)      8192       ['conv5_block3_3_conv[0][0]']
 conv5_block3_add (Add)                  (None, 7, 7, 2048)      0          ['conv5_block2_out[0][0]', 'conv5_block3_3_bn[0][0]']
 conv5_block3_out (Activation)           (None, 7, 7, 2048)      0          ['conv5_block3_add[0][0]']
 avg_pool (GlobalAveragePooling2D)       (None, 2048)            0          ['conv5_block3_out[0][0]']
 predictions (Dense)                     (None, 1000)            2049000    ['avg_pool[0][0]']
==================================================================================================
Total params: 25,636,712
Trainable params: 25,583,592
Non-trainable params: 53,120
__________________________________________________________________________________________________
```
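The "Param #" column can be checked by hand:

- A `Conv2D` layer with a k×k kernel has k·k·C_in·C_out weights plus C_out biases.
- `BatchNormalization` stores four values per channel. γ and β are trained; the moving mean and moving variance are not.
- `Dense` has n_in·n_out weights plus n_out biases.

The sketch below uses illustrative helper names. It reproduces several rows of the summary and also derives the 53,120 non-trainable parameters as two values per channel over every `BatchNormalization` layer in ResNet50.

```python
def conv2d_params(k, c_in, c_out, bias=True):
    """Weights of a k x k convolution plus one bias per output channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def batchnorm_params(c):
    """gamma, beta (trainable) + moving mean, moving variance (non-trainable)."""
    return 4 * c


def dense_params(n_in, n_out):
    return n_in * n_out + n_out


print(conv2d_params(7, 3, 64))       # conv1_conv
print(conv2d_params(3, 64, 64))      # conv2_block1_2_conv
print(conv2d_params(1, 1024, 2048))  # conv5_block1_0_conv
print(batchnorm_params(64))          # conv1_bn
print(dense_params(2048, 1000))      # predictions

# Channels of every BatchNormalization layer, stage by stage
# (block1 of each stage also has the _0 shortcut BN)
stage_bn_channels = (
    [64]                                              # conv1_bn
    + [64, 64, 256, 256] + [64, 64, 256] * 2          # conv2_x
    + [128, 128, 512, 512] + [128, 128, 512] * 3      # conv3_x
    + [256, 256, 1024, 1024] + [256, 256, 1024] * 5   # conv4_x
    + [512, 512, 2048, 2048] + [512, 512, 2048] * 2   # conv5_x
)
print(2 * sum(stage_bn_channels))  # moving mean + moving variance of every BN layer
```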

```python
preds = model.predict(x)
```

```
1/1 [==============================] - 1s 854ms/step
```

```python
print('Predicted:', decode_predictions(preds, top=5)[0])
```

```
Predicted: [('n02108422', 'bull_mastiff', 0.3921146), ('n02110958', 'pug', 0.2944119), ('n02093754', 'Border_terrier', 0.14356579), ('n02108915', 'French_bulldog', 0.057976846), ('n02099712', 'Labrador_retriever', 0.052499186)]
```
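`decode_predictions(preds, top=5)` turns the 1000-way softmax output into `(WordNet ID, class name, probability)` tuples, sorted by descending probability. The core of that step is a top-k over the probability vector. The sketch below shows just that step. The helper `top_k` and the four-entry label list are hypothetical stand-ins, not the real ImageNet class index that Keras uses.

```python
import numpy as np


def top_k(probs, labels, k=5):
    """Return (label, probability) pairs for the k largest entries of a 1-D probability vector."""
    idx = np.argsort(probs)[::-1][:k]  # indices sorted by descending probability
    return [(labels[i], float(probs[i])) for i in idx]


labels = ["cat", "pug", "bull_mastiff", "deer"]  # hypothetical toy label list
probs = np.array([0.05, 0.30, 0.60, 0.05])
print(top_k(probs, labels, k=2))
```

For the real model, `preds[0]` would play the role of `probs`, and the top-1 class above is `bull_mastiff` with about 39% probability.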

Basic Usage of TensorFlow 2.2

```python
import tensorflow as tf

x = tf.random.normal([2, 16])                                      # batch of 2 samples, 16 features
w1 = tf.Variable(tf.random.truncated_normal([16, 8], stddev=0.1))  # weights: 16 inputs -> 8 outputs
b1 = tf.Variable(tf.zeros([8]))                                    # bias
o1 = tf.matmul(x, w1) + b1                                         # linear transform
o1 = tf.nn.relu(o1)                                                # ReLU activation
o1
```

```
<tf.Tensor: id=8263, shape=(2, 8), dtype=float32, numpy=
array([[0.16938789, 0. , 0.08883161, 0.14095941, 0.34751543,
        0.353898 , 0. , 0.13356908],
       [0. , 0. , 0.48546872, 0.37623546, 0.5447475 ,
        0.21755993, 0.40121362, 0. ]], dtype=float32)>
```
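`tf.random.truncated_normal` samples from a normal distribution, but any value more than two standard deviations from the mean is dropped and re-drawn. With `stddev=0.1`, every initial weight in `w1` therefore lies in [-0.2, 0.2]. Below is a rejection-sampling sketch of that behavior in NumPy. The helper name is hypothetical, and this is not how TensorFlow implements it internally.

```python
import numpy as np


def truncated_normal(shape, mean=0.0, stddev=1.0, rng=None):
    """Sample N(mean, stddev^2), re-drawing values more than 2 stddev from the mean."""
    rng = np.random.default_rng() if rng is None else rng
    out = rng.normal(mean, stddev, size=shape)
    bad = np.abs(out - mean) > 2 * stddev
    while bad.any():  # re-draw only the out-of-range entries until none remain
        out[bad] = rng.normal(mean, stddev, size=bad.sum())
        bad = np.abs(out - mean) > 2 * stddev
    return out


w1 = truncated_normal((16, 8), stddev=0.1, rng=np.random.default_rng(0))
print(w1.shape, np.abs(w1).max())  # the maximum magnitude never exceeds 0.2
```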

```python
from tensorflow.keras import layers

x = tf.random.normal([4, 16 * 16])           # batch of 4 samples, 256 features each
fc = layers.Dense(5, activation=tf.nn.relu)  # fully connected layer with 5 output units
h1 = fc(x)                                   # the layer creates its weights on this first call
h1
```

```
<tf.Tensor: id=8296, shape=(4, 5), dtype=float32, numpy=
array([[0. , 0. , 0. , 0.14286758, 0. ],
       [0. , 2.2727172 , 0. , 0. , 0.34961763],
       [0.1311972 , 0. , 1.4005635 , 0. , 0. ],
       [0. , 1.7266206 , 0.64711714, 1.3494569 , 0. ]],
      dtype=float32)>
```
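On its first call, `layers.Dense(5, activation=tf.nn.relu)` reads the input width (256), creates a (256, 5) kernel and a (5,) bias, and then computes relu(x @ kernel + bias). The NumPy sketch below shows the same forward pass. It uses random weights as stand-ins; the scale chosen here is arbitrary and is not Keras' default initializer.

```python
import numpy as np


def dense_relu(x, kernel, bias):
    """Forward pass of a fully connected layer with ReLU: relu(x @ kernel + bias)."""
    return np.maximum(x @ kernel + bias, 0.0)


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 16 * 16))              # 4 samples, 256 features each
kernel = rng.normal(scale=0.1, size=(256, 5))  # (input features, units), like fc.kernel
bias = np.zeros(5)                             # like fc.bias
h1 = dense_relu(x, kernel, bias)
print(h1.shape)                 # (4, 5)
print(kernel.size + bias.size)  # 1285 parameters: 256 * 5 weights + 5 biases
```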

```python
# Get the weight matrix w
fc.kernel
```

```
<tf.Variable 'dense/kernel:0' shape=(256, 5) dtype=float32, numpy=
array([[-0.0339304 , 0.02273461, -0.12746884, 0.14963049, 0.00773269],
       [-0.05978647, 0.07886668, -0.09110804, 0.14902723, 0.13007113],
       [ 0.10187459, 0.13089484, 0.14367685, 0.12212327, -0.06235344],
       ...,
       [ 0.10417426, 0.05112691, 0.12206474, 0.01141772, -0.05271714],
       [ 0.03493455, -0.13473712, -0.01317982, -0.09485313, 0.04731715],
       [ 0.12421742, 0.00030141, -0.00211757, -0.04196439, -0.03638943]],
      dtype=float32)>
```

```python
fc.bias
```

```
<tf.Variable 'dense/bias:0' shape=(5,) dtype=float32, numpy=array([0., 0., 0., 0., 0.], dtype=float32)>
```

```python
fc.trainable_variables
```

```
[<tf.Variable 'dense/kernel:0' shape=(256, 5) dtype=float32, numpy=
array([[-0.0339304 , 0.02273461, -0.12746884, 0.14963049, 0.00773269],
       [-0.05978647, 0.07886668, -0.09110804, 0.14902723, 0.13007113],
       [ 0.10187459, 0.13089484, 0.14367685, 0.12212327, -0.06235344],
       ...,
       [ 0.10417426, 0.05112691, 0.12206474, 0.01141772, -0.05271714],
       [ 0.03493455, -0.13473712, -0.01317982, -0.09485313, 0.04731715],
       [ 0.12421742, 0.00030141, -0.00211757, -0.04196439, -0.03638943]],
      dtype=float32)>,
 <tf.Variable 'dense/bias:0' shape=(5,) dtype=float32, numpy=array([0., 0., 0., 0., 0.], dtype=float32)>]
```

```python
fc.variables
```

```
[<tf.Variable 'dense/kernel:0' shape=(256, 5) dtype=float32, numpy=
array([[-0.0339304 , 0.02273461, -0.12746884, 0.14963049, 0.00773269],
       [-0.05978647, 0.07886668, -0.09110804, 0.14902723, 0.13007113],
       [ 0.10187459, 0.13089484, 0.14367685, 0.12212327, -0.06235344],
       ...,
       [ 0.10417426, 0.05112691, 0.12206474, 0.01141772, -0.05271714],
       [ 0.03493455, -0.13473712, -0.01317982, -0.09485313, 0.04731715],
       [ 0.12421742, 0.00030141, -0.00211757, -0.04196439, -0.03638943]],
      dtype=float32)>,
 <tf.Variable 'dense/bias:0' shape=(5,) dtype=float32, numpy=array([0., 0., 0., 0., 0.], dtype=float32)>]
```
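All the kernel values printed above lie within roughly ±0.15, and the bias is all zeros. This matches the `Dense` defaults: `kernel_initializer='glorot_uniform'` and `bias_initializer='zeros'`. Glorot uniform samples from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)). The NumPy sketch below computes that bound for this (256, 5) layer and draws a Glorot-style sample. It illustrates the formula only and is not the Keras initializer itself.

```python
import math

import numpy as np


def glorot_uniform_limit(fan_in, fan_out):
    """Half-width of the Glorot (Xavier) uniform distribution."""
    return math.sqrt(6.0 / (fan_in + fan_out))


limit = glorot_uniform_limit(256, 5)
print(round(limit, 4))

rng = np.random.default_rng(0)
kernel = rng.uniform(-limit, limit, size=(256, 5))  # Glorot-uniform-style sample
bias = np.zeros(5)                                  # zeros bias, as in fc.bias
```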

5. Handwritten Digit Recognition with Deep Learning

```python
import tensorflow as tf

# Load the MNIST dataset
mnist = tf.keras.datasets.mnist

# Split into training and test sets
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Preprocess: scale pixel values from integers in [0, 255] to floats in [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0

# Stack the layers with tf.keras.Sequential and set their parameters
model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),   # 28x28 image -> 784-element vector
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dropout(0.2),                    # randomly drop 20% of activations during training
    tf.keras.layers.Dense(10, activation='softmax')  # one probability per digit class
])

# Set the optimizer, loss function and metric
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train, then evaluate on the test set
model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test, verbose=2)
```

```
Train on 60000 samples
Epoch 1/5
60000/60000 [==============================] - 6s 95us/sample - loss: 0.2931 - accuracy: 0.9146
Epoch 2/5
60000/60000 [==============================] - 5s 77us/sample - loss: 0.1419 - accuracy: 0.9592
Epoch 3/5
60000/60000 [==============================] - 5s 78us/sample - loss: 0.1065 - accuracy: 0.9683
Epoch 4/5
60000/60000 [==============================] - 5s 78us/sample - loss: 0.0852 - accuracy: 0.9738
Epoch 5/5
60000/60000 [==============================] - 6s 100us/sample - loss: 0.0735 - accuracy: 0.9769
10000/1 - 0s - loss: 0.0338 - accuracy: 0.9795
[0.0666636555833742, 0.9795]
```
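`loss='sparse_categorical_crossentropy'` takes integer labels (the digits 0 to 9 in `y_train`) rather than one-hot vectors. For each sample it computes -log of the softmax probability assigned to the correct class, then averages over the batch. `accuracy` is the fraction of samples whose argmax matches the label. Below is a NumPy sketch of both definitions. The function name is hypothetical, and the clipping epsilon is an assumption added to avoid log(0).

```python
import numpy as np


def sparse_categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -log(p), where p is the predicted probability of each sample's true class.

    y_true: integer labels, shape (N,); y_pred: softmax outputs, shape (N, num_classes)."""
    p = y_pred[np.arange(len(y_true)), y_true]  # probability of the true class per sample
    return float(-np.mean(np.log(np.clip(p, eps, 1.0))))


probs = np.array([[0.7, 0.2, 0.1],  # softmax outputs for 2 samples, 3 classes
                  [0.1, 0.1, 0.8]])
labels = np.array([0, 2])           # integer labels, like y_train
loss = sparse_categorical_crossentropy(labels, probs)
accuracy = np.mean(probs.argmax(axis=1) == labels)
print(round(loss, 4), accuracy)
```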

Appendix: Articles in This Series

| Lab | Topic | Link |
|---|---|---|
| 1 | Review of NumPy and visualization | https://want595.blog.csdn.net/article/details/131891689 |
| 2 | Linear regression | https://want595.blog.csdn.net/article/details/131892463 |
| 3 | Logistic regression | https://want595.blog.csdn.net/article/details/131912053 |
| 4 | Multi-class classification (based on logistic regression) | https://want595.blog.csdn.net/article/details/131913690 |
| 5 | Applied machine learning: manual hyperparameter tuning | https://want595.blog.csdn.net/article/details/131934812 |
| 6 | Bayesian inference | https://want595.blog.csdn.net/article/details/131947040 |
| 7 | KNN (k-nearest neighbors) | https://want595.blog.csdn.net/article/details/131947885 |
| 8 | K-means unsupervised clustering | https://want595.blog.csdn.net/article/details/131952371 |
| 9 | Decision trees | https://want595.blog.csdn.net/article/details/131991014 |
| 10 | Random forests and ensemble learning | https://want595.blog.csdn.net/article/details/132003451 |
| 11 | Support vector machines | https://want595.blog.csdn.net/article/details/132010861 |
| 12 | Neural networks: the perceptron | https://want595.blog.csdn.net/article/details/132014769 |
| 13 | Regression and classification with neural networks | https://want595.blog.csdn.net/article/details/132127413 |
| 14 | Convolutional neural networks for handwritten digits | https://want595.blog.csdn.net/article/details/132223494 |
| 15 | Applying Lenet5 to the Cifar10 dataset | https://want595.blog.csdn.net/article/details/132223751 |
| 16 | Convolution, downsampling, and classic convolutional networks | https://want595.blog.csdn.net/article/details/132223985 |
