Base versions and environment:

MacBook Pro Apple M2 Max

VMware Fusion Player version 13.0.2 (21581413)

ubuntu-22.04.2-live-server-arm64

k8s-v1.27.3

docker 24.0.2

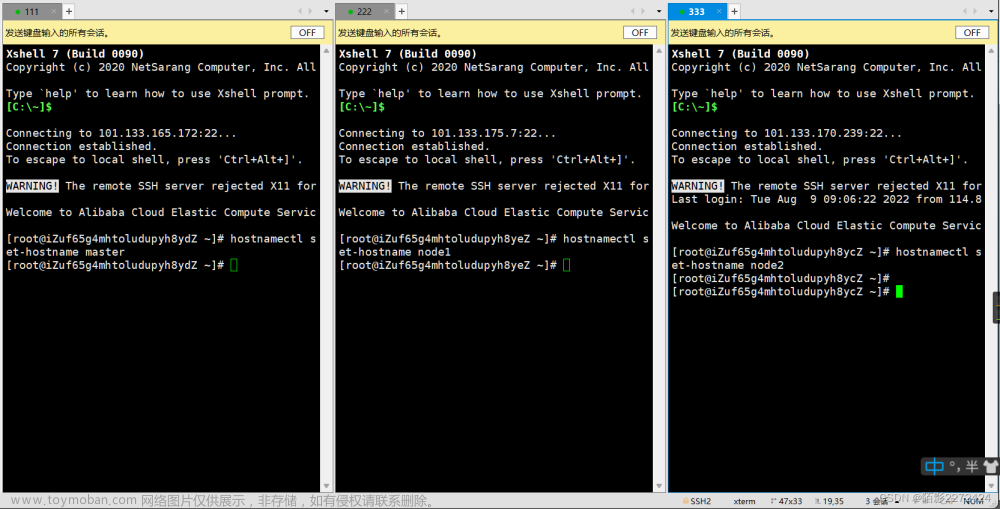

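VMware Fusion is installed on the MacBook and used to run six Ubuntu virtual machines. On these, kubeadm installs Kubernetes with containerd as the container runtime, forming a non-highly-available cluster that uses the flannel network plugin. Installing VMware and Ubuntu is not covered here; plenty of guides exist online. The lab cluster has 6 Ubuntu nodes: 1 master and 5 workers. All of the following steps are run as a non-root user; the author's Linux username is zhangzk.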

| hostname | IP address | k8s role | Spec |
|---|---|---|---|
| zzk-1 | 192.168.19.128 | worker | 2 cores, 4 GB |
| zzk-2 | 192.168.19.130 | worker | 2 cores, 4 GB |
| zzk-3 | 192.168.19.131 | worker | 2 cores, 4 GB |
| zzk-4 | 192.168.19.132 | worker | 2 cores, 4 GB |
| zzk-5 | 192.168.19.133 | worker | 2 cores, 4 GB |
| test | 192.168.19.134 | master | 2 cores, 4 GB |

1. Update the package index (all nodes)

```bash
sudo apt update
```

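2. Permanently disable swap (all nodes)

Check whether swap is enabled:

```bash
sudo swapon --show
```

If swap is enabled, this prints the path and size of each swap file or partition.

You can also check with `free`:

```bash
free -h
```

If swap is enabled, the Swap row shows its total size and current usage.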

Run the following command to disable swap:

```bash
sudo swapoff -a
```

Then delete the swap file:

```bash
sudo rm /swap.img
```

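Next, edit `/etc/fstab` so that swap is not re-enabled when the system reboots. Comment out or delete this line in `/etc/fstab`:

```text
/swap.img none swap sw 0 0
```

Run `sudo swapon --show` again to confirm that swap is disabled. If it is, the command prints nothing.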
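The manual fstab edit above can also be scripted. The following is a minimal sketch. It assumes the swap entry is an uncommented line whose third field is `swap`, such as the `/swap.img none swap sw 0 0` line on Ubuntu 22.04 server. The script comments out such lines and keeps a `.bak` copy of the file. The function name and the script file name `disable-swap.sh` are made up for this example.

```bash
#!/usr/bin/env bash
# Sketch: comment out active swap entries in an fstab-style file.
# Assumption: an entry is "active" if the line does not start with '#'
# and its third whitespace-separated field is exactly "swap".

disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # -i.bak edits in place and keeps the original as "$fstab.bak" (GNU sed).
  sed -i.bak -E \
    '/^[^#[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/ s/^/#/' \
    "$fstab"
}

# Runs only when executed directly with a path argument, e.g. (hypothetical file name):
#   sudo swapoff -a && sudo bash disable-swap.sh /etc/fstab
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
  disable_swap_in_fstab "$1"
fi
```

Afterwards, `sudo swapon --show` should still print nothing, and `grep swap /etc/fstab` should show only commented-out lines.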

3. Disable the firewall (all nodes)

Check the current firewall status: `sudo ufw status`

`inactive` means the firewall is off; `active` means it is on.

Disable the firewall: `sudo ufw disable`

4. Let iptables see bridged traffic (all nodes)

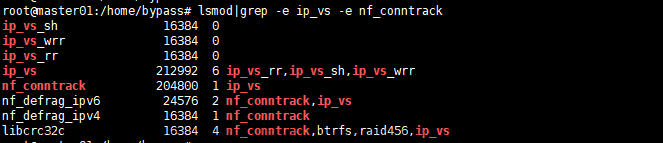

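1) Load the `overlay` and `br_netfilter` kernel modules:

```bash
sudo modprobe overlay && sudo modprobe br_netfilter
```

Make both modules load automatically so the setting survives a reboot:

```bash
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
```

Run `lsmod | grep br_netfilter` to verify that the br_netfilter module is loaded.

Run `lsmod | grep overlay` to verify that the overlay module is loaded.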
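`lsmod` reads `/proc/modules`, and the first column of that file is the module name. Both checks can therefore be folded into one function that reports any missing module. This is a minimal sketch with two caveats. First, the `MODULES_FILE` override exists only so the function can be tried on a sample file. Second, a module compiled into the kernel (rather than loaded as a module) does not appear in `/proc/modules` at all.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if every module named in the arguments is currently loaded.
# /proc/modules lists one loaded module per line, with the module name in
# column 1 (this is what lsmod formats). MODULES_FILE is an override for testing only.
modules_loaded() {
  local modules_file="${MODULES_FILE:-/proc/modules}"
  local mod missing=0
  for mod in "$@"; do
    # Exact match on the first column, so "bridge" does not match "br_netfilter".
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "missing kernel module: $mod" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example:
#   modules_loaded overlay br_netfilter && echo "overlay and br_netfilter are loaded"
```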

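2) Set kernel parameters so that traffic forwarded by the layer-2 bridge is also filtered by the iptables FORWARD rules, and enable IPv4 forwarding:

```bash
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
```

Apply the sysctl parameters without rebooting:

```bash
sudo sysctl --system
```

Further reading: "Why does K8s need the bridge-nf-call-iptables kernel parameter? Explained with an example" and "What enabling the bridge feature means (enabling bridge-nf-call-iptables when installing k8s)".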
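You can confirm that the three values are active after `sysctl --system` by checking `/proc/sys`. Each sysctl key maps to a file there, with the dots replaced by slashes: `net.ipv4.ip_forward` becomes `/proc/sys/net/ipv4/ip_forward`. The sketch below reads those files directly. Note that `SYSCTL_ROOT` is an override added only for testing, and that the `net.bridge.*` entries exist only once `br_netfilter` is loaded.

```bash
#!/usr/bin/env bash
# Sketch: check that the three sysctls from /etc/sysctl.d/k8s.conf are set to 1.
# A key maps to $SYSCTL_ROOT/<key with dots replaced by slashes>.
check_k8s_sysctls() {
  local root="${SYSCTL_ROOT:-/proc/sys}"
  local rc=0 key path value
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path="$root/${key//.//}"          # replace every '.' with '/'
    if [[ ! -r "$path" ]]; then
      echo "$key: not available (is br_netfilter loaded?)" >&2
      rc=1
      continue
    fi
    value=$(<"$path")                 # strips the trailing newline
    if [[ "$value" != "1" ]]; then
      echo "$key = $value (expected 1)" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example:
#   check_k8s_sysctls && echo "sysctl settings OK"
```

Alternatively, `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward` prints all three values for a visual check.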

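5. Install Docker (all nodes)

Install the latest Docker release. The Docker install command below also installs containerd (package `containerd.io`), which this cluster uses as its container runtime.

For the full procedure, see the official documentation:

Install Docker Engine on Ubuntu | Docker Documentation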

Uninstall old versions

Before you can install Docker Engine, you must first make sure that any conflicting packages are uninstalled.

```bash
for pkg in docker.io docker-doc docker-compose podman-docker containerd runc; do sudo apt-get remove $pkg; done
```

Install using the apt repository

Before you install Docker Engine for the first time on a new host machine, you need to set up the Docker repository. Afterward, you can install and update Docker from the repository.

Set up the repository

1) Update the `apt` package index and install packages to allow `apt` to use a repository over HTTPS:

```bash
sudo apt-get update
sudo apt-get install ca-certificates curl gnupg
```

2) Add Docker's official GPG key:

```bash
sudo install -m 0755 -d /etc/apt/keyrings
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg
sudo chmod a+r /etc/apt/keyrings/docker.gpg
```

3) Use the following command to set up the repository:

```bash
echo \
  "deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
  "$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
```

Note: If you use an Ubuntu derivative distro, such as Linux Mint, you may need to use `UBUNTU_CODENAME` instead of `VERSION_CODENAME`.
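On Ubuntu, `/etc/os-release` sets both `VERSION_CODENAME` and `UBUNTU_CODENAME` (both `jammy` on 22.04). Derivatives such as Linux Mint set `VERSION_CODENAME` to their own release name and `UBUNTU_CODENAME` to the Ubuntu base they follow. The following sketch prefers `UBUNTU_CODENAME` and falls back to `VERSION_CODENAME`. The function name is made up for this example, and the file path is a parameter only so it can be tested.

```bash
#!/usr/bin/env bash
# Sketch: choose the Ubuntu codename for the Docker apt repository line.
# Prefers UBUNTU_CODENAME, falls back to VERSION_CODENAME.
docker_repo_codename() {
  local os_release="${1:-/etc/os-release}"
  (
    # Subshell: sourcing os-release must not leak NAME, VERSION, ... into the caller,
    # and values already in the environment must not mask the file's contents.
    unset UBUNTU_CODENAME VERSION_CODENAME
    # shellcheck source=/dev/null
    . "$os_release"
    echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}"
  )
}

# Example (same repository line as above, with the codename lookup swapped in):
#   echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(docker_repo_codename) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
```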

Install Docker Engine

1) Update the `apt` package index:

```bash
sudo apt-get update
```

2) Install Docker Engine, containerd, and Docker Compose. To install the latest version, run:

```bash
sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
```

3) Verify that the Docker Engine installation is successful by running the `hello-world` image:

```bash
sudo docker run hello-world
```

This command downloads a test image and runs it in a container. When the container runs, it prints a confirmation message and exits.

6. Install Kubernetes (all nodes)

Based on the official documentation: Installing kubeadm | Kubernetes

1) Update the `apt` package index and install packages needed to use the Kubernetes `apt` repository:

```bash
sudo apt-get update
sudo apt-get install -y apt-transport-https ca-certificates curl
```

2) Download the public signing key. Run only one of the two commands, because both write the same keyring file:

```bash
# Google source (not reachable from mainland China without a proxy)
curl -fsSL https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-archive-keyring.gpg
# Aliyun mirror (recommended)
curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-archive-keyring.gpg
```

3) Add the Kubernetes `apt` repository (again, pick one):

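```bash
# Google source (not reachable from mainland China without a proxy)
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
# Aliyun mirror (recommended)
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-archive-keyring.gpg] https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
```

Note: the legacy `apt.kubernetes.io` / `packages.cloud.google.com` repositories were later deprecated and frozen (from September 2023) in favor of the community-owned `pkgs.k8s.io` repositories. If these URLs no longer work, follow the current "Installing kubeadm" page.

4) Update the `apt` package index, install kubelet, kubeadm and kubectl, and pin their version: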

```bash
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl
```

Note: to install a specific version of kubectl, kubeadm and kubelet instead of the latest, for example 1.23.6:

```bash
# Install version 1.23.6
sudo apt-get install -y kubelet=1.23.6-00
sudo apt-get install -y kubeadm=1.23.6-00
sudo apt-get install -y kubectl=1.23.6-00
```
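The `kubeadm` package typically depends on `kubelet` and `kubectl`. Installing the three pinned packages in a single `apt-get install` call therefore lets apt resolve them together, rather than possibly pulling in a newer kubectl as a dependency first. The sketch below builds the `package=version` arguments. The function name is hypothetical, and the `-00` revision simply mirrors the example above, so check the real revision on your mirror with `apt-cache madison kubeadm`.

```bash
#!/usr/bin/env bash
# Sketch: print "kubelet=V-R kubeadm=V-R kubectl=V-R" for one Kubernetes version.
#   $1: upstream version, e.g. 1.23.6
#   $2: Debian package revision; "00" follows the example above (other mirrors may differ)
k8s_pinned_packages() {
  local version="$1" revision="${2:-00}"
  if [[ -z "$version" ]]; then
    echo "usage: k8s_pinned_packages VERSION [REVISION]" >&2
    return 1
  fi
  local pkg out=()
  for pkg in kubelet kubeadm kubectl; do
    out+=("${pkg}=${version}-${revision}")
  done
  echo "${out[*]}"    # joined with single spaces
}

# Example (word splitting of the output is intended here):
#   sudo apt-get install -y $(k8s_pinned_packages 1.23.6)
#   sudo apt-mark hold kubelet kubeadm kubectl
```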

```bash
# Check versions
kubectl version --client && kubeadm version && kubelet --version
# Enable kubelet at boot
sudo systemctl enable kubelet
```

7. Configure the containerd runtime (all nodes)

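First, generate containerd's default configuration file:

```bash
containerd config default | sudo tee /etc/containerd/config.toml
```

1) The first change makes runc use the systemd cgroup driver. This matches the kubelet, which kubeadm (v1.22 and later) configures with the systemd cgroup driver by default: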

File to edit: /etc/containerd/config.toml

Find the `containerd.runtimes.runc.options` section and set `SystemdCgroup = true`:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  Root = ""
  ShimCgroup = ""
  SystemdCgroup = true
```
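Instead of editing the file by hand, you can make the same change with `sed`. This is a minimal sketch. It assumes the file was freshly generated by `containerd config default`, where `SystemdCgroup = false` appears under the runc options section shown above. The function name and the script name `enable-systemd-cgroup.sh` are hypothetical. The function returns non-zero if no `SystemdCgroup = true` line is present afterwards.

```bash
#!/usr/bin/env bash
# Sketch: flip "SystemdCgroup = false" to "SystemdCgroup = true" in a containerd
# config.toml, keeping the line's indentation, then verify the result.
enable_systemd_cgroup() {
  local config="${1:-/etc/containerd/config.toml}"
  sed -i -E 's/^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false/\1true/' "$config"
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$config"
}

# Runs only when executed directly with a path argument, e.g.:
#   sudo bash enable-systemd-cgroup.sh /etc/containerd/config.toml
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
  enable_systemd_cgroup "$1"
fi
```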

2) The second change points the sandbox (pause) image at the Aliyun mirror instead of Google's registry, and bumps the tag to 3.9:

File to edit: /etc/containerd/config.toml

Change this line: `sandbox_image = "registry.k8s.io/pause:3.6"`

to: `sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"`

```toml
[plugins."io.containerd.grpc.v1.cri"]
  restrict_oom_score_adj = false
  #sandbox_image = "registry.k8s.io/pause:3.6"
  sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
  selinux_category_range = 1024
```

Note: if the tag is not changed to `pause:3.9`, initializing the master with kubeadm prints the following warning:

```text
W0628 15:02:49.331359 21652 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.
```
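The `sandbox_image` change can be scripted the same way. The sketch below rewrites the uncommented `sandbox_image` line to whatever image you pass in, so lines starting with `#` are left alone. The tag should match the pause image that `kubeadm config images list` prints for your Kubernetes version (pause:3.9 here). The function and script names are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: set the CRI sandbox (pause) image in a containerd config.toml.
#   $1: full image reference, e.g. registry.aliyuncs.com/google_containers/pause:3.9
#   $2: config path (default /etc/containerd/config.toml)
set_sandbox_image() {
  local image="$1" config="${2:-/etc/containerd/config.toml}"
  # Only uncommented lines match, because '#' is not whitespace.
  sed -i -E "s|^([[:space:]]*)sandbox_image[[:space:]]*=.*|\1sandbox_image = \"${image}\"|" "$config"
  grep -Fq "sandbox_image = \"${image}\"" "$config"
}

# Runs only when executed directly with arguments, e.g.:
#   kubeadm config images list --image-repository registry.aliyuncs.com/google_containers | grep pause
#   sudo bash set-sandbox-image.sh registry.aliyuncs.com/google_containers/pause:3.9
#   sudo systemctl restart containerd
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
  set_sandbox_image "$@"
fi
```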

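Restart containerd and enable it at boot:

```bash
sudo systemctl restart containerd
sudo systemctl enable containerd
```

List the image versions kubeadm will use:

```bash
kubeadm config images list
```

8. Initialize the master node (master only)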

Generate the default init configuration:

```bash
kubeadm config print init-defaults > kubeadm.conf
```

Edit kubeadm.conf as follows:

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.19.134 # change to the master's IP address
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: test # change to the master node's hostname
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # changed to the Aliyun mirror
kind: ClusterConfiguration
kubernetesVersion: 1.27.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16 # added
  serviceSubnet: 10.96.0.0/12
scheduler: {}
```
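You can apply the four edits above to the generated file with `sed`. This is a sketch under two assumptions. First, the file is the unmodified output of `kubeadm config print init-defaults` for v1.27, with two-space indentation as shown above. Second, the values default to this guide's master (`test`, 192.168.19.134). The environment variable names `MASTER_NAME`, `MASTER_IP`, `IMAGE_REPO` and `POD_SUBNET` are made up for this example.

```bash
#!/usr/bin/env bash
# Sketch: patch kubeadm init-defaults output with this guide's values.
# Safe to run twice: podSubnet is only inserted if it is not already present.
patch_kubeadm_conf() {
  local conf="${1:-kubeadm.conf}"
  local name="${MASTER_NAME:-test}"
  local ip="${MASTER_IP:-192.168.19.134}"
  local repo="${IMAGE_REPO:-registry.aliyuncs.com/google_containers}"
  local subnet="${POD_SUBNET:-10.244.0.0/16}"
  # Replace the values of three existing keys, keeping their indentation.
  sed -i -E \
    -e "s|^(  advertiseAddress:).*|\1 ${ip}|" \
    -e "s|^(  name:).*|\1 ${name}|" \
    -e "s|^(imageRepository:).*|\1 ${repo}|" \
    "$conf"
  # podSubnet is not in the defaults: add it once, just above serviceSubnet (GNU sed \n).
  if ! grep -q '^  podSubnet:' "$conf"; then
    sed -i -E "s|^(  serviceSubnet:.*)|  podSubnet: ${subnet}\n\1|" "$conf"
  fi
}

# Example:
#   kubeadm config print init-defaults > kubeadm.conf
#   patch_kubeadm_conf kubeadm.conf
```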

Initialize the master (control-plane) node:

```bash
sudo kubeadm init --config=kubeadm.conf
```

The output looks like this:

```text
[init] Using Kubernetes version: v1.27.0
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local test] and IPs [10.96.0.1 192.168.19.134]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [localhost test] and IPs [192.168.19.134 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [localhost test] and IPs [192.168.19.134 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 3.501515 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node test as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node test as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
[bootstrap-token] Using token: abcdef.0123456789abcdef
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.19.134:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:f8139e292a34f8371b4b541a86d8f360f166363886348a596e31f2ebd5c1cdbf
```

Because we are working as a non-root user, run the following on the master to configure kubectl:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

10. Configure the pod network (master only)

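On the master node, install the flannel network plugin:

```bash
kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
```

If this times out, retry a few times. If it keeps failing, you will need a proxy to reach GitHub.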

Alternatively, download kube-flannel.yml first and apply the local copy:

```bash
wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
kubectl apply -f kube-flannel.yml
```
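The `podSubnet: 10.244.0.0/16` added to kubeadm.conf earlier matters here. The stock kube-flannel.yml sets `"Network": "10.244.0.0/16"` in its `net-conf.json`, and flannel's network needs to match the cluster's pod CIDR. The sketch below is a quick pre-apply check on the two local files. The function name is hypothetical, and it compares only the first `Network` value and the first `podSubnet` value it finds.

```bash
#!/usr/bin/env bash
# Sketch: compare flannel's net-conf.json "Network" with kubeadm's networking.podSubnet.
#   $1: path to kube-flannel.yml  $2: path to kubeadm.conf
check_flannel_subnet() {
  local flannel_yml="${1:-kube-flannel.yml}" kubeadm_conf="${2:-kubeadm.conf}"
  local flannel_net pod_subnet
  flannel_net=$(sed -n -E 's/.*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$flannel_yml" | head -n 1)
  # Stop at whitespace or '#', so a trailing YAML comment is ignored.
  pod_subnet=$(sed -n -E 's/^[[:space:]]*podSubnet:[[:space:]]*([^[:space:]#]+).*/\1/p' "$kubeadm_conf" | head -n 1)
  if [[ -n "$flannel_net" && "$flannel_net" == "$pod_subnet" ]]; then
    echo "ok: flannel Network and podSubnet are both $pod_subnet"
  else
    echo "mismatch: flannel Network='$flannel_net' podSubnet='$pod_subnet'" >&2
    return 1
  fi
}

# Example (run before kubectl apply):
#   check_flannel_subnet kube-flannel.yml kubeadm.conf
```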

11. Join the worker nodes (run on the non-master nodes)

On the master node, print the join command:

```bash
kubeadm token create --print-join-command
```

Then run the printed join command on each worker node that should join the cluster:

```bash
sudo kubeadm join 192.168.19.134:6443 --token lloamy.3g9y3tx0bjnsdhqk --discovery-token-ca-cert-hash sha256:f8139e292a34f8371b4b541a86d8f360f166363886348a596e31f2ebd5c1cdbf
```

Configure kubectl on the worker nodes

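Copy `/etc/kubernetes/admin.conf` from the master node to the same directory on each worker, then run the following on the worker as the non-root user:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Check the cluster nodes: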

```text
zhangzk@test:~$ kubectl get nodes
NAME    STATUS   ROLES           AGE     VERSION
test    Ready    control-plane   13h     v1.27.3
zzk-1   Ready    <none>          2m21s   v1.27.3
zzk-2   Ready    <none>          89s     v1.27.3
zzk-3   Ready    <none>          75s     v1.27.3
zzk-4   Ready    <none>          68s     v1.27.3
zzk-5   Ready    <none>          42s     v1.27.3
```
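Newly joined workers usually show `NotReady` for a short while until the flannel pod on them is running. The built-in way to script an "all nodes Ready" check is `kubectl wait --for=condition=Ready node --all --timeout=300s`. For ad-hoc scripts, the sketch below parses `kubectl get nodes --no-headers` output and prints the nodes whose STATUS is not Ready. A cordoned node shows `Ready,SchedulingDisabled` and is still counted as Ready. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS is not Ready. "Ready,SchedulingDisabled"
# (a cordoned node) still counts as Ready. Exit status is 1 if any node is not Ready.
not_ready_nodes() {
  awk '{
    split($2, status, ",")
    if (status[1] != "Ready") { print $1; bad = 1 }
  }
  END { exit (bad ? 1 : 0) }'
}

# Example:
#   kubectl get nodes --no-headers | not_ready_nodes && echo "all nodes Ready"
```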

12. Common commands

List all pods in all namespaces: `kubectl get po --all-namespaces`

List all pods in all namespaces together with their labels: `kubectl get po --all-namespaces --show-labels`

List all namespaces in the cluster: `kubectl get namespace`

List the nodes: `kubectl get nodes`

Source: https://www.toymoban.com/news/detail-666422.html

With that, you are ready to take off into the cloud-native world.
