//mycamera.h
#ifndef MYCAMERA_H
#define MYCAMERA_H

#include <QObject>
#include <QAbstractVideoSurface>
#include <QVideoFrame>
#include <QVideoSurfaceFormat>
#include <QTimer>
#include <QDebug>

// Exposes a videoSurface property so that a QML VideoOutput can use this object as its source.
class MyCamera : public QObject
{
    Q_OBJECT
    Q_PROPERTY(QAbstractVideoSurface *videoSurface READ videoSurface WRITE setVideoSurface)

public:
    explicit MyCamera(QObject *parent = nullptr);

    QAbstractVideoSurface *videoSurface() const;

    /*!
     * \brief Sets an external, custom QAbstractVideoSurface.
     * \param surface
     */
    void setVideoSurface(QAbstractVideoSurface *surface);

    /*!
     * \brief Sets the video format.
     * \param width  video width
     * \param height video height
     * \param format enum QVideoFrame::PixelFormat
     */
    void setFormat(int width, int height, QVideoFrame::PixelFormat format);

signals:

public slots:
    /*!
     * \brief Receives video frames from an external data source.
     * \param frame
     */
    void onNewVideoContentReceived(const QVideoFrame &frame);

private:
    QAbstractVideoSurface *m_surface = nullptr;
    QVideoSurfaceFormat m_format;
};

#endif // MYCAMERA_H

//mycamera.cpp
#include "mycamera.h"

MyCamera::MyCamera(QObject *parent) : QObject(parent)
{
}

QAbstractVideoSurface *MyCamera::videoSurface() const
{
    return m_surface;
}

void MyCamera::setVideoSurface(QAbstractVideoSurface *surface)
{
    qDebug() << "setVideoSurface";

    // Stop the previous surface before switching to a different one.
    if (m_surface && m_surface != surface && m_surface->isActive()) {
        m_surface->stop();
    }

    m_surface = surface;

    // If a format is already known, start the new surface right away.
    if (m_surface && m_format.isValid()) {
        m_format = m_surface->nearestFormat(m_format);
        m_surface->start(m_format);
    }
}

// Sets the video format for the frames that follow and (re)starts the surface with it.
void MyCamera::setFormat(int width, int height, QVideoFrame::PixelFormat format)
{
    QSize size(width, height);
    QVideoSurfaceFormat vsformat(size, format);
    m_format = vsformat;

    if (m_surface) {
        if (m_surface->isActive()) {
            m_surface->stop();
        }
        m_format = m_surface->nearestFormat(m_format);
        m_surface->start(m_format);
    }
}

// Receives an already-wrapped video frame and presents it.
void MyCamera::onNewVideoContentReceived(const QVideoFrame &frame)
{
    // Configure the format from the frame, but only when the surface is not running
    // or the frame size changed; restarting the surface for every frame is wasteful.
    if (!m_surface || !m_surface->isActive() || m_format.frameSize() != frame.size()) {
        setFormat(frame.width(), frame.height(), QVideoFrame::Format_RGB32); // or frame.pixelFormat()
    }

    if (m_surface) {
        m_surface->present(frame);
    }
}
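
Stopping and restarting the surface is only necessary when the format of the incoming frames actually changes. The Qt-free sketch below illustrates that check in isolation. `FrameFormat` and `FormatTracker` are hypothetical names introduced for this illustration; they are not part of Qt or of the code in this article.

```cpp
#include <cassert>

// Hypothetical stand-in for the parts of a video format that matter here.
struct FrameFormat {
    int width;
    int height;
    int pixelFormat;   // e.g. an enum value such as QVideoFrame::Format_RGB32

    bool operator==(const FrameFormat &other) const {
        return width == other.width && height == other.height
            && pixelFormat == other.pixelFormat;
    }
};

// Remembers the active format and reports whether a restart is required.
class FormatTracker {
public:
    // Returns true when the caller should stop and restart its surface,
    // i.e. on the first frame or whenever the format differs from the active one.
    bool needsRestart(const FrameFormat &incoming) {
        assert(incoming.width > 0 && incoming.height > 0);
        if (m_started && incoming == m_active)
            return false;
        m_active = incoming;
        m_started = true;
        ++m_restarts;
        return true;
    }

    // Number of times a restart was requested so far.
    int restartCount() const { return m_restarts; }

private:
    FrameFormat m_active{};
    bool m_started = false;
    int m_restarts = 0;
};
```

Applied to `onNewVideoContentReceived`, the same idea would mean calling `setFormat` only when such a check reports a change, and simply presenting the frame otherwise.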

//myvideosurface.h
#ifndef MYVIDEOSURFACE_H
#define MYVIDEOSURFACE_H

#include <QObject>
#include <QAbstractVideoSurface>
#include <QVideoFrame>
#include <QDebug>

// Video surface that receives the camera frames and forwards them via frameChanged().
class MyVideoSurface : public QAbstractVideoSurface
{
    Q_OBJECT

public:
    explicit MyVideoSurface(QObject *parent = nullptr);

    bool present(const QVideoFrame &frame) override;
    QList<QVideoFrame::PixelFormat> supportedPixelFormats(
        QAbstractVideoBuffer::HandleType type = QAbstractVideoBuffer::NoHandle) const override;

signals:
    void frameChanged(const QVideoFrame &frame);
};

#endif // MYVIDEOSURFACE_H

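//myvideosurface.cpp
#include "myvideosurface.h"
#include <QImage>

MyVideoSurface::MyVideoSurface(QObject *parent) : QAbstractVideoSurface(parent)
{
}

bool MyVideoSurface::present(const QVideoFrame &frame)
{
    // Called automatically for every frame the camera captures.
    // The disabled block below shows how to convert a QVideoFrame into a QImage,
    // a class that is easily converted to a QPixmap.
#if 0
    // The copy constructor is a shallow copy: the frame data still lives in memory
    // that cannot be read directly, so it has to be mapped first.
    QVideoFrame fm = frame;
    // Map the frame contents to an accessible address; after this the data can be read.
    fm.map(QAbstractVideoBuffer::ReadOnly);

    /*
     * Converting QVideoFrame to QPixmap. There are two usual places to look for a conversion:
     *   - in QVideoFrame: a member such as toPixmap(), or a static method that takes a
     *     QVideoFrame and returns a QPixmap;
     *   - in QPixmap: a constructor or static method that takes a QVideoFrame.
     * Neither exists, so there is no direct QVideoFrame -> QPixmap conversion.
     * Qt does have a general-purpose image class, QImage. Its constructor only needs the
     * address of the first pixel, the width, the height, the bytes per line and the
     * format, all of which any image type can provide. For QVideoFrame:
     *   first pixel address: bits()
     *   width:               width()
     *   height:              height()
     *   bytes per line:      bytesPerLine()
     *   format:              QImage::Format_RGB32 is what we need
     * QImage::QImage(const uchar *data, int width, int height, int bytesPerLine, Format format,
     *                QImageCleanupFunction cleanupFunction = Q_NULLPTR, void *cleanupInfo = Q_NULLPTR)
     */
    // The following forms differ only in how the image format is obtained; the result is the same:
    //QImage image(fm.bits(), fm.width(), fm.height(), fm.bytesPerLine(), QImage::Format_RGB32);
    //QImage image(fm.bits(), fm.width(), fm.height(), fm.bytesPerLine(), QVideoFrame::imageFormatFromPixelFormat(QVideoFrame::Format_RGB32));
    QImage image(fm.bits(), fm.width(), fm.height(), fm.bytesPerLine(),
                 QVideoFrame::imageFormatFromPixelFormat(fm.pixelFormat()));

    // The captured image is flipped both vertically and horizontally, so mirror it.
    // mirrored(true) requests horizontal mirroring; vertical mirroring is the default.
    // mirrored() returns a new image, so the frame can be unmapped afterwards.
    image = image.mirrored(true);
    fm.unmap();
#endif

    emit frameChanged(frame);
    return true;
}

QList<QVideoFrame::PixelFormat> MyVideoSurface::supportedPixelFormats(QAbstractVideoBuffer::HandleType type) const
{
    Q_UNUSED(type);
    // Called by the video producer (here QCamera) when it negotiates a format with the
    // surface, to find out which pixel formats the surface supports.
    // The frames captured by the camera use the RGB32 pixel format, so RGB32 has to be
    // in the list of supported formats that this function returns.
    return QList<QVideoFrame::PixelFormat>() << QVideoFrame::Format_RGB32; // operator<< here is equivalent to QList::append
}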
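
The conversion described in `present()` depends on `bytesPerLine`: a row in the mapped buffer may be longer than `width * 4` bytes because of padding. As a Qt-free illustration, the sketch below copies a padded 32-bit buffer into a tightly packed one while mirroring it in both directions, comparable to what `mirrored()` does with both flags set. `mirrorRgb32` and its parameter names are hypothetical and chosen only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copies a 32-bit-per-pixel image out of a (possibly padded) source buffer and
// mirrors it horizontally and vertically. Returns tightly packed pixels.
std::vector<std::uint32_t> mirrorRgb32(const unsigned char *bits, int width,
                                       int height, int bytesPerLine)
{
    assert(bits != nullptr && width > 0 && height > 0);
    assert(bytesPerLine >= width * 4);   // a row holds at least width pixels

    std::vector<std::uint32_t> out(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y) {
        // Rows are bytesPerLine apart in the source, not width * 4.
        const unsigned char *srcRow = bits + static_cast<std::size_t>(y) * bytesPerLine;
        // Vertical mirror: source row y goes to destination row height - 1 - y.
        std::uint32_t *dstRow = out.data() + static_cast<std::size_t>(height - 1 - y) * width;
        for (int x = 0; x < width; ++x) {
            std::uint32_t pixel;
            std::memcpy(&pixel, srcRow + static_cast<std::size_t>(x) * 4, sizeof(pixel));
            // Horizontal mirror: source column x goes to column width - 1 - x.
            dstRow[width - 1 - x] = pixel;
        }
    }
    return out;
}
```

Indexing rows by `width * 4` instead of `bytesPerLine` would read the padding bytes as pixels and skew every row after the first, which is why the `QImage` constructor overload that takes `bytesPerLine` is the safer choice.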

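//main.cpp
#include <QGuiApplication>
#include <QQmlApplicationEngine>
#include <QQmlContext>
#include <QCamera>
#include <QCameraInfo>
#include "mycamera.h"
#include "myvideosurface.h"

int main(int argc, char *argv[])
{
    // Create the application object before any other Qt objects.
    QGuiApplication app(argc, argv);

    QCameraInfo info = QCameraInfo::defaultCamera();
    QCamera *camera = new QCamera(info);

    // Frame-processing surface: receives every frame from the camera.
    MyVideoSurface *surface = new MyVideoSurface();
    camera->setViewfinder(surface);

    // Forward each frame to MyCamera, which presents it on the VideoOutput's surface.
    MyCamera *myCamera = new MyCamera;
    QObject::connect(surface, &MyVideoSurface::frameChanged, myCamera, &MyCamera::onNewVideoContentReceived);

    QQmlApplicationEngine engine;
    // QmlLanguage is a class from the original project that is not shown in this article.
    //QmlLanguage qmlLanguage(app, engine);
    engine.rootContext()->setContextProperty("camera", myCamera);
    engine.load(QUrl(QStringLiteral("qrc:/main.qml")));

    camera->start();
    return app.exec();
}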

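//main.qml
import QtQuick 2.0
import QtQuick.Window 2.0
import QtMultimedia 5.0

Window {
    id: root
    visible: true
    width: 640
    height: 480
    title: qsTr("cpp+camera")

    VideoOutput {
        id: videoOutput
        anchors.fill: parent
        // "camera" is the MyCamera object set as a context property in main.cpp
        source: camera
    }
}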

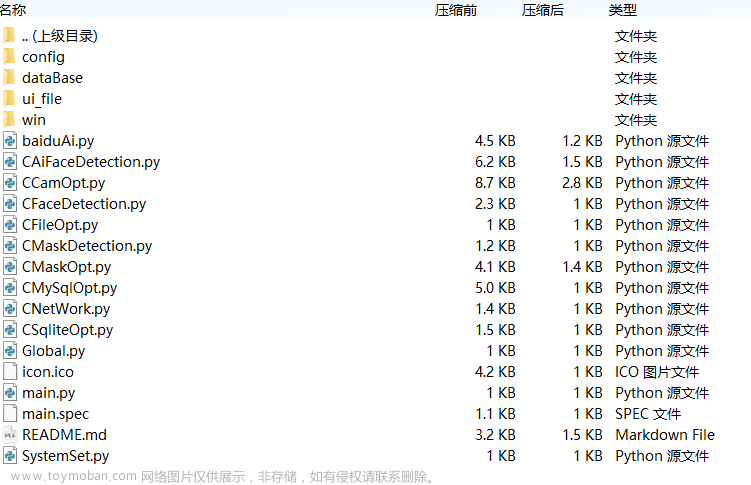

运行效果如下: 文章来源地址https://www.toymoban.com/news/detail-669231.html
