System environment:

Red Hat Enterprise Linux 9.1 (Plow)

Kernel: Linux 5.14.0-162.6.1.el9_1.x86_64

| Hostname | Address |
| --- | --- |
| master | 192.168.19.128 |
| node01 | 192.168.19.129 |
| node02 | 192.168.19.130 |

Contents

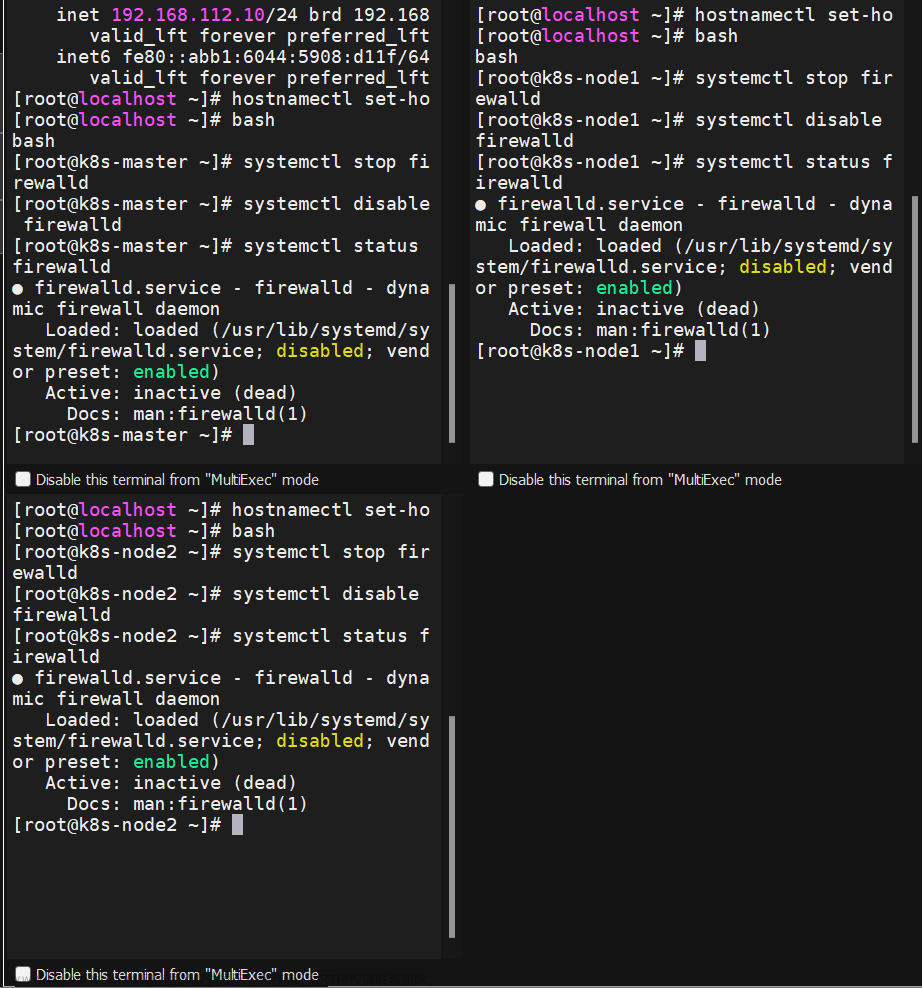

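1. Disable the firewall, disable SELinux, and enable time synchronization

2. Disable swap

3. Adjust network parameters

4. Set up the repositories

5. Add the Docker repository and mount the installation media

6. Install the required packages

7. Start the services

8. Pull the images

9. Shut down the virtual machine and clone it

Initialize k8s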

1. Disable the firewall, disable SELinux, and enable time synchronization

[root@Vivyan ~]# systemctl stop firewalld

[root@Vivyan ~]# systemctl disable firewalld

[root@Vivyan ~]# vim /etc/sysconfig/selinux # set SELINUX=permissive

[root@Vivyan ~]# setenforce 0

[root@Vivyan ~]# systemctl restart chronyd

[root@Vivyan ~]# systemctl enable chronyd
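
The vim edit above can also be done non-interactively, which helps when the same step has to be repeated on several machines. The following is only a minimal sketch: the function name `set_selinux_mode` is hypothetical, it assumes GNU sed and a config file with the usual `SELINUX=<mode>` line, and the path is passed in so that it can be tried on a copy first.

```shell
# Hypothetical helper: rewrite the SELINUX= line in an SELinux config file.
# Usage: set_selinux_mode <file> <enforcing|permissive|disabled>
set_selinux_mode() {
  local file=$1 mode=$2
  case $mode in
    enforcing|permissive|disabled) ;;
    *) echo "invalid mode: $mode" >&2; return 1 ;;
  esac
  # Only the uncommented SELINUX= line is replaced; SELINUXTYPE= is left alone.
  # --follow-symlinks (GNU sed) edits the link target instead of replacing
  # the symlink itself with a regular file.
  sed -i --follow-symlinks "s/^SELINUX=.*/SELINUX=$mode/" "$file"
}
```

On a real host the call would be `set_selinux_mode /etc/sysconfig/selinux permissive`, run as root. The change in the file takes effect at the next boot, which is why `setenforce 0` is still used for the running system.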

2. Disable swap

[root@master ~]# tail -n 2 /etc/fstab

#/dev/mapper/rhel-swap none swap defaults 0 0

[root@Vivyan ~]# swapon -s

Filename Type Size Used Priority

/dev/dm-1 partition 2097148 157696 -2

[root@Vivyan ~]# swapoff /dev/dm-1

[root@Vivyan ~]# free -m

total used free shared buff/cache available

Mem: 1743 1479 61 26 380 264

Swap: 0 0 0
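
The `/etc/fstab` output above shows the swap entry already commented out, which is what keeps swap disabled after a reboot. A minimal sketch of how that edit could be scripted follows; the function name `comment_out_swap` is hypothetical, and it assumes GNU sed and a standard fstab layout in which the third field is the filesystem type.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# Usage: comment_out_swap <file>
comment_out_swap() {
  # Skip lines that are already comments; on the rest, prefix '#' when the
  # third whitespace-separated field is "swap".
  sed -i -E \
    '/^[[:space:]]*#/! s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]])/#\1/' \
    "$1"
}
```

Running it twice is harmless, because a line that is already commented is skipped. On a real host this would be `comment_out_swap /etc/fstab` followed by `swapoff -a`, which turns off all active swap devices without naming them one by one.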

3. Adjust network parameters

# Configure the iptables parameters so that traffic crossing the bridge also passes through the iptables/netfilter firewall

[root@Vivyan ~]# cat /etc/sysctl.d/kubernetes.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

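# Load the br_netfilter module first; the net.bridge.* keys exist only once it is loaded

[root@Vivyan ~]# modprobe br_netfilter

# Apply the settings from the file

[root@Vivyan ~]# sysctl -p /etc/sysctl.d/kubernetes.conf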

[root@Vivyan ~]# lsmod | grep br_netfilter

br_netfilter 32768 0

bridge 315392 1 br_netfilter
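
The three kernel parameters can be written to the drop-in file from a script instead of an editor. This is a sketch under stated assumptions: the function name `write_k8s_sysctl` and the file name `k8s.conf` under `/etc/modules-load.d/` are illustrative choices, not something the steps above prescribe.

```shell
# Hypothetical helper: write the three kernel parameters to a sysctl drop-in file.
# Usage: write_k8s_sysctl <path>
write_k8s_sysctl() {
  cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# On a real node (as root) the sequence might look like this:
#   echo br_netfilter > /etc/modules-load.d/k8s.conf   # load the module at every boot
#   modprobe br_netfilter                              # load it now
#   write_k8s_sysctl /etc/sysctl.d/kubernetes.conf
#   sysctl --system                                    # re-read all sysctl configuration files
```

The module is loaded before the settings are applied because the `net.bridge.*` keys are only present while `br_netfilter` is loaded.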

4. Set up the repositories

[root@Vivyan yum.repos.d]# cat k8s.repo

[k8s]

name=k8s

baseurl=https://<mirror-host>/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

[root@Vivyan yum.repos.d]# cat xixi.repo

[baseos]

name=baseos

baseurl=file:///mnt/BaseOS

gpgcheck=0

[AppStream]

name=AppStream

baseurl=file:///mnt/AppStream

gpgcheck=0

5. Add the Docker repository and mount the installation media

[root@Vivyan yum.repos.d]# wget -O /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo

[root@Vivyan yum.repos.d]# sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo

[root@Vivyan yum.repos.d]# mount /dev/sr0 /mnt

mount: /mnt: /dev/sr0 already mounted on /mnt/cdrom.

6. Install the required packages

[root@Vivyan yum.repos.d]# dnf install -y iproute-tc yum-utils device-mapper-persistent-data lvm2 kubelet-1.21.3 kubeadm-1.21.3 kubectl-1.21.3 docker-ce

If installing docker-ce fails, try the following:

[root@Vivyan yum.repos.d]# dnf remove podman -y

[root@Vivyan yum.repos.d]# dnf install -y docker-ce [--allowerasing]

7. Start the services

[root@Vivyan yum.repos.d]# systemctl enable kubelet

[root@Vivyan yum.repos.d]# systemctl enable --now docker

Edit the Docker daemon configuration (daemon.json)

[root@Vivyan yum.repos.d]# vim /etc/docker/daemon.json

{ "exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://8zs3633v.mirror.aliyuncs.com"]

}

[root@Vivyan yum.repos.d]# systemctl restart docker # restart Docker

[root@Vivyan yum.repos.d]# systemctl status docker # check its status
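
The `daemon.json` shown above sets Docker's cgroup driver to systemd, so that Docker and the kubelet use the same driver, and adds a registry mirror. A minimal sketch of generating the same file from a script follows; the function name `write_daemon_json` is hypothetical and the mirror URL is passed as a parameter rather than hard-coded.

```shell
# Hypothetical helper: write a daemon.json that selects the systemd cgroup
# driver and configures one registry mirror.
# Usage: write_daemon_json <path> <mirror-url>
write_daemon_json() {
  cat > "$1" <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["$2"]
}
EOF
}
```

After `write_daemon_json /etc/docker/daemon.json <mirror-url>` and a restart of Docker, `docker info --format '{{.CgroupDriver}}'` should report `systemd` if the file was picked up.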

8. Pull the images

List the images that kubeadm requires

[root@Vivyan ~]# kubeadm config images list

I0528 15:01:02.677618 6941 version.go:254] remote version is much newer: v1.27.2; falling back to: stable-1.21

k8s.gcr.io/kube-apiserver:v1.21.14

k8s.gcr.io/kube-controller-manager:v1.21.14

k8s.gcr.io/kube-scheduler:v1.21.14

k8s.gcr.io/kube-proxy:v1.21.14

k8s.gcr.io/pause:3.4.1

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns/coredns:v1.8.0

Pull the images from a mirror

docker pull kittod/kube-apiserver:v1.21.3

docker pull kittod/kube-controller-manager:v1.21.3

docker pull kittod/kube-scheduler:v1.21.3

docker pull kittod/kube-proxy:v1.21.3

docker pull kittod/pause:3.4.1

docker pull kittod/etcd:3.4.13-0

docker pull kittod/coredns:v1.8.0

docker pull kittod/flannel:v0.14.0

Retag them with the names kubeadm expects

docker tag kittod/kube-apiserver:v1.21.3 k8s.gcr.io/kube-apiserver:v1.21.3

docker tag kittod/kube-controller-manager:v1.21.3 k8s.gcr.io/kube-controller-manager:v1.21.3

docker tag kittod/kube-scheduler:v1.21.3 k8s.gcr.io/kube-scheduler:v1.21.3

docker tag kittod/kube-proxy:v1.21.3 k8s.gcr.io/kube-proxy:v1.21.3

docker tag kittod/pause:3.4.1 k8s.gcr.io/pause:3.4.1

docker tag kittod/etcd:3.4.13-0 k8s.gcr.io/etcd:3.4.13-0

docker tag kittod/coredns:v1.8.0 k8s.gcr.io/coredns/coredns:v1.8.0

docker tag kittod/flannel:v0.14.0 quay.io/coreos/flannel:v0.14.0

List the images

[root@Vivyan ~]# docker images

Remove the mirror tags

docker rmi kittod/kube-apiserver:v1.21.3

docker rmi kittod/kube-controller-manager:v1.21.3

docker rmi kittod/kube-scheduler:v1.21.3

docker rmi kittod/kube-proxy:v1.21.3

docker rmi kittod/pause:3.4.1

docker rmi kittod/etcd:3.4.13-0

docker rmi kittod/coredns:v1.8.0

docker rmi kittod/flannel:v0.14.0
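
The pull, tag, and remove commands above follow the same pattern for every image, so they can be generated from a list. The sketch below only prints the commands and executes nothing; the function names `image_pairs` and `print_mirror_commands` are hypothetical, while the image names are the ones used in this section.

```shell
# The image list from this section as data: "<mirror-image> <target-image>".
image_pairs() {
  cat <<'EOF'
kittod/kube-apiserver:v1.21.3 k8s.gcr.io/kube-apiserver:v1.21.3
kittod/kube-controller-manager:v1.21.3 k8s.gcr.io/kube-controller-manager:v1.21.3
kittod/kube-scheduler:v1.21.3 k8s.gcr.io/kube-scheduler:v1.21.3
kittod/kube-proxy:v1.21.3 k8s.gcr.io/kube-proxy:v1.21.3
kittod/pause:3.4.1 k8s.gcr.io/pause:3.4.1
kittod/etcd:3.4.13-0 k8s.gcr.io/etcd:3.4.13-0
kittod/coredns:v1.8.0 k8s.gcr.io/coredns/coredns:v1.8.0
kittod/flannel:v0.14.0 quay.io/coreos/flannel:v0.14.0
EOF
}

# Read "<src> <dst>" pairs on stdin and print the pull / tag / rmi commands.
print_mirror_commands() {
  while read -r src dst; do
    [ -n "$src" ] || continue   # skip blank lines
    echo "docker pull $src"
    echo "docker tag $src $dst"
    echo "docker rmi $src"
  done
}
```

`image_pairs | print_mirror_commands` shows what would run, and appending `| sh` runs it. Unlike the listing above, each image is pulled, retagged, and untagged before the next one starts; the end result is the same set of images.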

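9. Shut down the virtual machine and clone it

Shut down: init 0 or poweroff

Number of clones: 2 (on each machine, set up local name resolution, change the IP address, and configure passwordless login)

ping master

ping node01

ping node02

Local name resolution (/etc/hosts)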

192.168.19.128 master

192.168.19.129 node01

192.168.19.130 node02

Copy the file to the other two hosts

scp /etc/hosts root@192.168.19.129:/etc/

scp /etc/hosts root@192.168.19.130:/etc/

Change the hostname

hostnamectl set-hostname master # use node01 or node02 on the respective clone

Change the IP address

nmcli connection modify ens160 ipv4.addresses 192.168.19.130/24

nmcli connection modify ens160 ipv4.gateway 192.168.19.2

nmcli connection modify ens160 ipv4.dns 114.114.114.114

nmcli connection modify ens160 ipv4.method manual

nmcli connection modify ens160 connection.autoconnect yes

nmcli connection up ens160

Passwordless login (once this is done on each of the three hosts, SSH between them no longer asks for a password)

ssh-keygen

ssh-copy-id root@master

ssh-copy-id root@node01

ssh-copy-id root@node02
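
Because the clones start out as identical copies, the hosts file is a natural place for a small idempotent helper. This is a minimal sketch: the function name `add_host_entry` is hypothetical, and it treats a name as present if it appears as an alias on any uncommented line.

```shell
# Hypothetical helper: append "<ip> <name>" to a hosts file unless the name
# is already defined on an uncommented line.
# Usage: add_host_entry <file> <ip> <name>
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Exit status 0 from awk means the name was found in field 2 or later.
  if ! awk -v n="$name" '
         !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
         END { exit !found }
       ' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For the three machines here the calls would be `add_host_entry /etc/hosts 192.168.19.128 master` and likewise for node01 and node02. Repeating a call does not create duplicate lines, so the script can be rerun safely on each clone.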

Initialize k8s

kubeadm init \

--kubernetes-version=v1.21.3 \

--pod-network-cidr=10.244.0.0/16 \

--service-cidr=10.96.0.0/12 \

--apiserver-advertise-address=192.168.19.128

If initialization fails:

systemctl stop kubelet

rm -rf /etc/kubernetes/*

systemctl stop docker

If stopping fails:

reboot

docker container prune

docker ps -a

After a successful initialization, save the following output somewhere for later use:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

# Join a node to the cluster

kubeadm join 192.168.19.128:6443 --token hbax17.wm0rhemz2pm2h9ai \

--discovery-token-ca-cert-hash sha256:38171a1e6706a749bdf7812277272bbfd23a479c604194e643cfcd4c8213f68e
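
If the `kubeadm init` output was saved to a file as suggested, the join command can be pulled back out of it as a single line. The sketch below assumes the saved text contains a line starting with `kubeadm join` that continues with a trailing backslash, as in the output above; the function name `extract_join_command` is hypothetical.

```shell
# Hypothetical helper: print the "kubeadm join ..." command from saved
# `kubeadm init` output as one line, joining backslash-continued lines.
# Usage: extract_join_command <saved-output-file>
extract_join_command() {
  awk '
    /^kubeadm join/ { capture = 1 }
    !capture || NF == 0 { next }          # skip text before the command and blank lines
    {
      continued = sub(/[ \t]*\\$/, "")    # strip a trailing backslash and note that it was there
      sub(/^[ \t]+/, "")                  # strip leading indentation
      cmd = (cmd == "" ? $0 : cmd " " $0)
      if (!continued) { print cmd; exit }
    }
  ' "$1"
}
```

Bootstrap tokens expire (after 24 hours by default), so a command recovered this way may no longer work on an older cluster; in that case a new one has to be generated on the master with `kubeadm token create --print-join-command`.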

Apply the flannel network manifest

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

……

daemonset.apps/kube-flannel-ds created

Then join node01 and node02 to the cluster. Once they have joined, the node list and the pod list show their status.

Check node status

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 4m59s v1.21.3

node01 Ready <none> 107s v1.21.3

node02 Ready <none> 104s v1.21.3
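
When scripting the setup it is handy to check the node list without reading it by eye. A minimal sketch, assuming the column layout shown above (name first, status second); the function name `not_ready_nodes` is hypothetical.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the names of
# nodes whose STATUS column is anything other than exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is therefore reported as well.
not_ready_nodes() {
  awk 'NF >= 2 && $2 != "Ready" { print $1 }'
}
```

Usage on the master would be `kubectl get nodes --no-headers | not_ready_nodes`. Empty output means every node reports Ready.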

If you have lost the join command that was printed when the master finished initializing, run the following on the master: kubeadm token create --print-join-command

If a node fails to join:

1. kubeadm reset -f

2. rm -rf /etc/kubernetes/kubelet.conf

rm -rf /etc/kubernetes/pki/ca.crt

systemctl restart kubelet

Check the status of the cluster pods

[root@master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-558bd4d5db-597rj 1/1 Running 0 4m5s

coredns-558bd4d5db-qj9n4 1/1 Running 0 4m6s

etcd-master 1/1 Running 0 4m14s

kube-apiserver-master 1/1 Running 0 4m14s

kube-controller-manager-master 1/1 Running 0 4m14s

kube-proxy-4qkht 1/1 Running 0 72s

kube-proxy-bgmv5 1/1 Running 0 70s

kube-proxy-zjd2z 1/1 Running 0 4m6s

kube-scheduler-master 1/1 Running 0 4m14s

View the logs on a node

journalctl -f -u kubelet

If a node's status is NotReady, check the logs on that node. The most common cause is a failed image pull.

********************************************************

docker pull kittod/pause:3.4.1

docker tag kittod/pause:3.4.1 k8s.gcr.io/pause:3.4.1

docker pull kittod/kube-proxy:v1.21.3

docker tag kittod/kube-proxy:v1.21.3 k8s.gcr.io/kube-proxy:v1.21.3

reboot

********************************************************

Shell auto-completion

echo "source <(kubectl completion bash)" >> /root/.bashrc

source /root/.bashrc

Pull the image: [root@master ~]# docker pull nginx

Retag it: docker tag nginx:latest kittod/nginx:1.21.5

Create the deployment: kubectl create deployment nginx --image=kittod/nginx:1.21.5

Expose the port: kubectl expose deployment nginx --port=80 --type=NodePort

List the pods and services: kubectl get pods,service

Check the randomly assigned node port: netstat -lntup | grep 30684

Test the nginx service: curl localhost:30684
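
The node port is assigned at random, so the number differs on every run and has to be read from the PORT(S) column of `kubectl get service` (for example `80:30684/TCP`). A minimal sketch of extracting it with plain parameter expansion; the function name `nodeport_from_ports` is hypothetical.

```shell
# Extract the node port from a PORT(S) value such as "80:30684/TCP".
# Usage: nodeport_from_ports <ports-column-value>
nodeport_from_ports() {
  local ports=$1
  ports=${ports#*:}      # drop the service port and the colon
  echo "${ports%%/*}"    # drop the protocol suffix
}
```

With kubectl available, the same value can be read directly with `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'`, which avoids parsing the table at all.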

Detailed steps

[root@master ~]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

a2abf6c4d29d: Pull complete

a9edb18cadd1: Pull complete

589b7251471a: Pull complete

186b1aaa4aa6: Pull complete

b4df32aa5a72: Pull complete

a0bcbecc962e: Pull complete

Digest: sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

[root@master ~]# docker tag nginx:latest kittod/nginx:1.21.5

[root@master ~]# kubectl create deployment nginx --image=kittod/nginx:1.21.5

deployment.apps/nginx created

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@master ~]# kubectl get pods,service

NAME READY STATUS RESTARTS AGE

pod/nginx-8675954f95-b84t7 0/1 Pending 0 2m48s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 177m

service/nginx NodePort 10.107.157.167 <none> 80:30684/TCP 2m28s

[root@master ~]# netstat -lntup | grep 30684

tcp 0 0 0.0.0.0:30684 0.0.0.0:* LISTEN 5255/kube-proxy

[root@master ~]# curl localhost:30684

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>