
1. Project Overview

1.1. Project Background

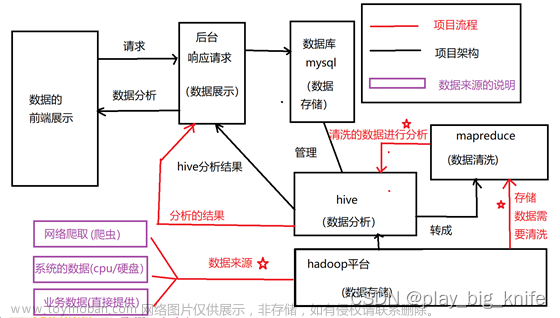

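With the steady advance of science and technology in China and the widespread adoption of computer networks, the country has entered the era of big data. Data of every kind now accumulates faster than its basic characteristics and regularities can be understood, which makes analyzing business operations data considerably harder. As a result, more and more enterprises are building operations data analysis platforms on big data technologies, and these platforms play an increasingly important role in day-to-day analysis work. A well-designed back-end management system for operations data makes it possible to monitor and control user access, business operations, and the running state of the analysis and presentation system.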

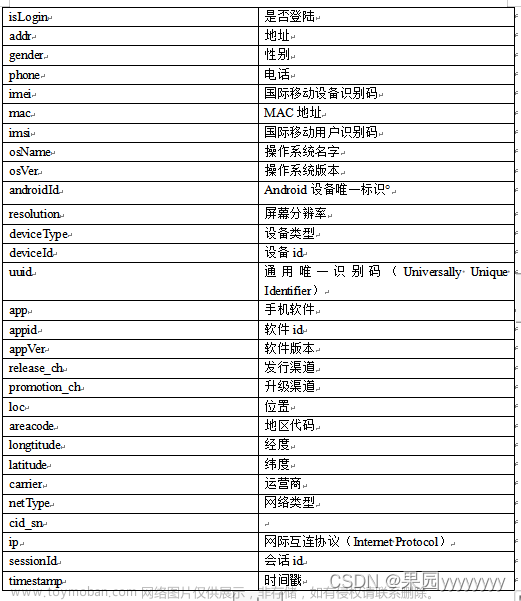

1.2. Project Workflow

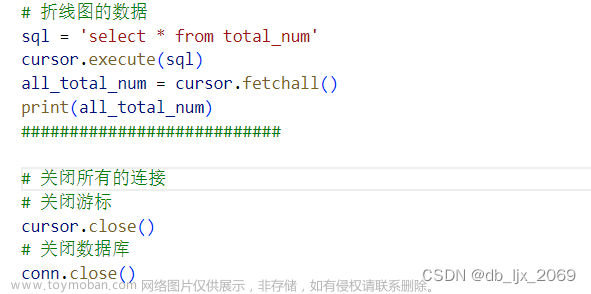

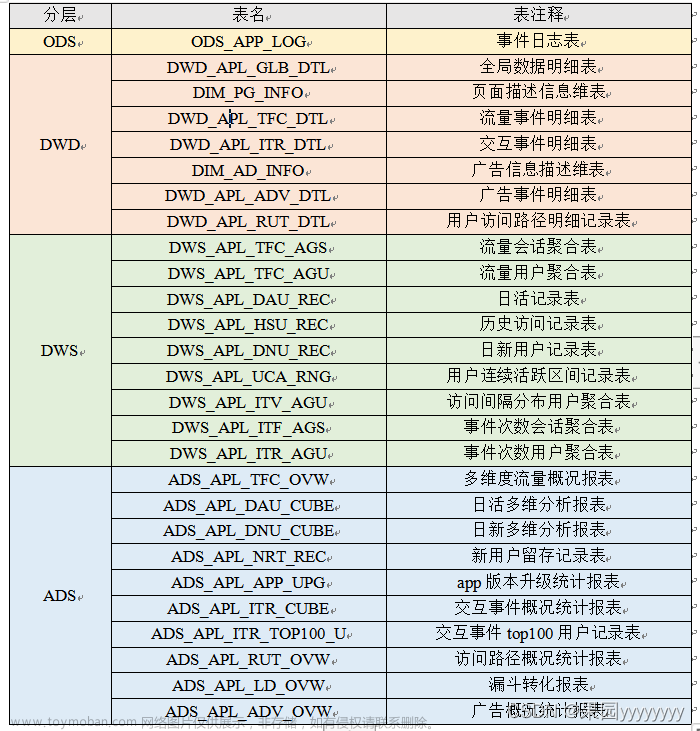

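Log data is simulated and then passed through dictionary construction and data preprocessing. The preprocessed data serves as the warehouse's data source and is loaded into Hive, where the warehouse is built in four layers (ODS, DWD, DWS, and ADS) comprising 27 tables in total. Presto is then integrated with Hive, and the SpringBoot project connects to the Presto data source for front-end and back-end development and integration testing. The results are finally rendered as Echarts charts in the web interface. The figures in this section show the detailed flow.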

2. Functional Requirements

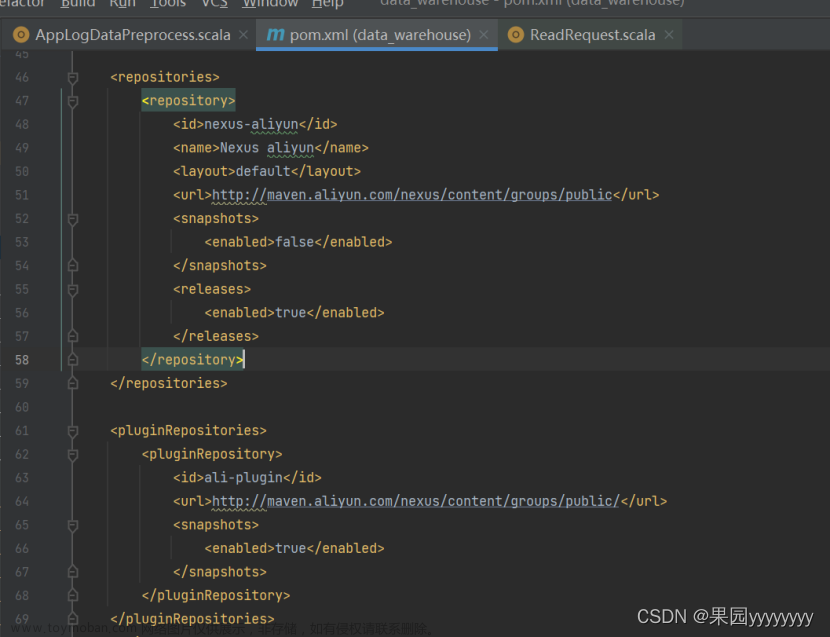

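2.1. System Function Composition

Main functional modules:

(1) Traffic overview analysis

(2) Daily new and daily active user analysis

(3) Interaction event analysis

(4) Advertising event analysis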

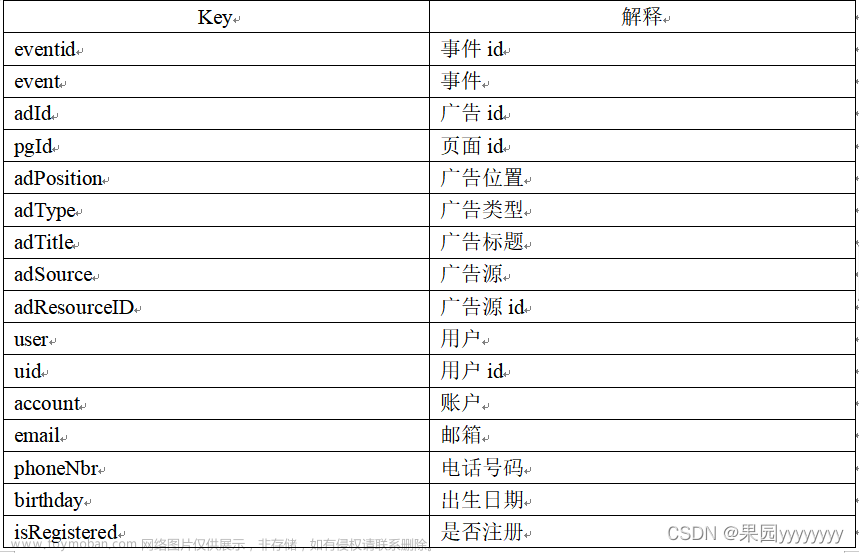

2.2. Data Description


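2.3. Function Description

2.3.1. Traffic Overview Analysis

Traffic overview under each dimension, such as PV count, UV count, and visit count, shown as line charts and detail reports. The dimensions are: province, city, district, device type, operating system name, operating system version, release channel, and upgrade channel.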

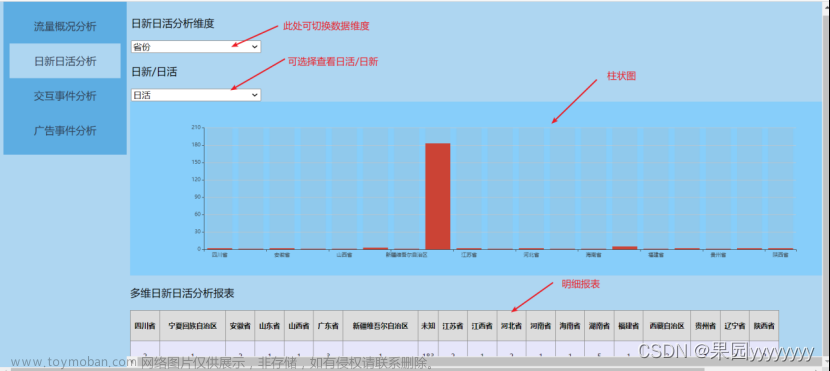

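2.3.2. Daily New and Daily Active User Analysis

Daily new or daily active user counts under each dimension, shown as bar charts and detail reports. The dimensions are: province, city, district, device type, operating system name, operating system version, release channel, and upgrade channel.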

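2.3.3. Interaction Event Analysis

1. Interaction event overview

Total occurrences and total number of users for each event, shown as line charts and detail reports.

2. Interaction event top 10 users

For each event type, the 10 users who triggered the event most often, shown as bar charts and detail reports. The event types are: complaint, click, open, view, channel click, comment, enter option, like, get code, download, login, search, share/forward, sign-up, view content details, and page stay.

2.3.4. Advertising Event Analysis

Impression count, number of users reached, click count, and number of clicking users for each advertisement, shown as line charts and detail reports.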

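3. Architecture Design

3.1. Glossary

**ODS layer:** Operational Data Store. It holds the raw data unchanged and mainly serves as a backup.

**DWD layer:** Data Warehouse Detail. It cleans the ODS data and produces detail tables with the same structure and granularity as the cleaned data.

**DWS layer:** Data Warehouse Service. Built on the DWD layer, it holds lightly aggregated tables.

**ADS layer:** Application Data Store. Built on the DWS layer (or on a DWT layer, which this project does not use), it supplies data for the statistical reports, that is, the tables that are ultimately displayed.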

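3.2. System Environment

3.2.1. Software

Hadoop, Spark, Hive, Presto, Echarts, SpringBoot.

3.2.2. Hardware

The cluster has three nodes, each with 3 GB of memory and a 2-core CPU; their disks are 50 GB, 20 GB, and 20 GB respectively.

3.3. System Design

Data collection: generated by simulation

Data storage: HDFS, Hive

Data computation: Spark, Hive

Data visualization: Echarts, SpringBoot

3.4. System Architecture Diagram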

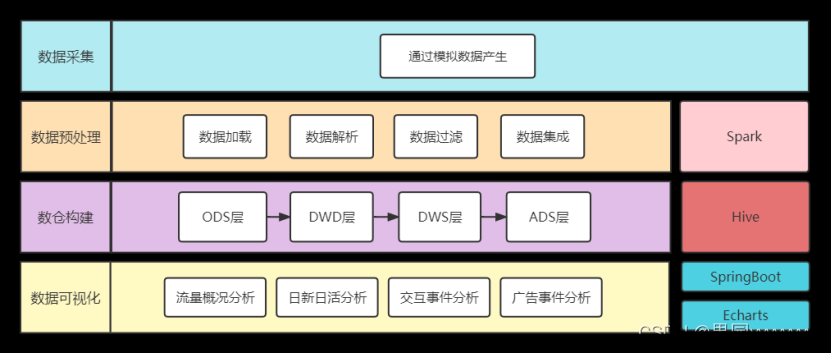

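4. Web Prototype Design

4.1. Traffic Overview Analysis Module

4.2. Daily New and Daily Active User Analysis Module

4.3. Interaction Event Analysis Module

4.4. Advertising Event Analysis Module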

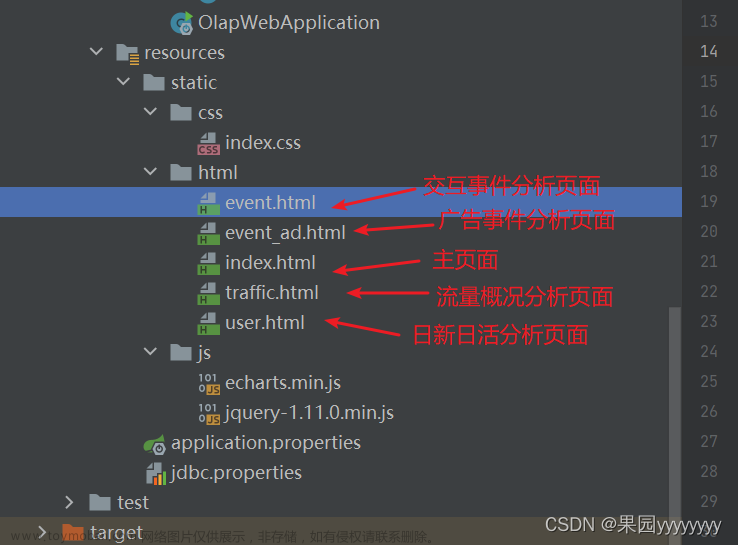

5. Web Design

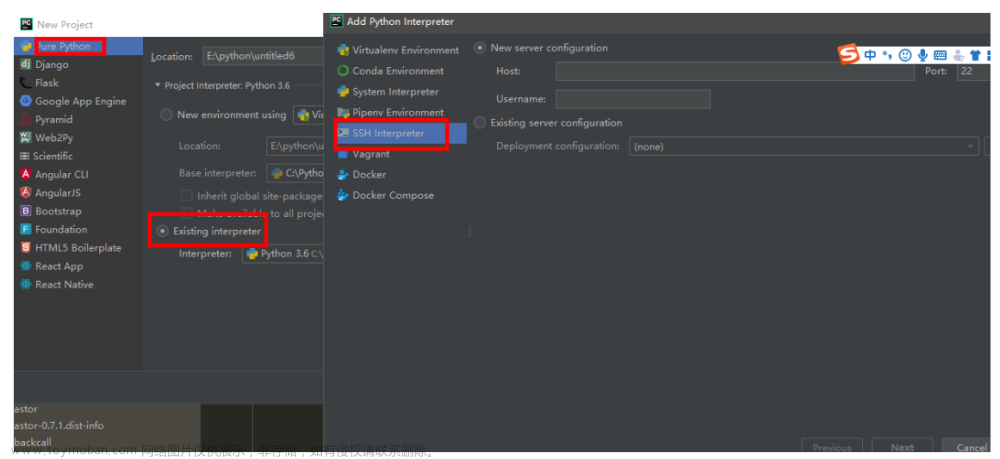

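- Technology stack: SpringBoot

- Front end: Echarts

- Database: Hive

- Database access: JDBC

5.1. Conceptual Overview

5.1.1. Layering: Four-Layer Structure

**(1) View layer:** renders the final page from the data it receives for the user to browse.

**(2) Web controller layer:** responds to user requests.

**(3) Business logic layer:** implements the business logic.

**(4) Data access layer:** accesses the database.

5.1.2. Packaging: Front End and Back End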

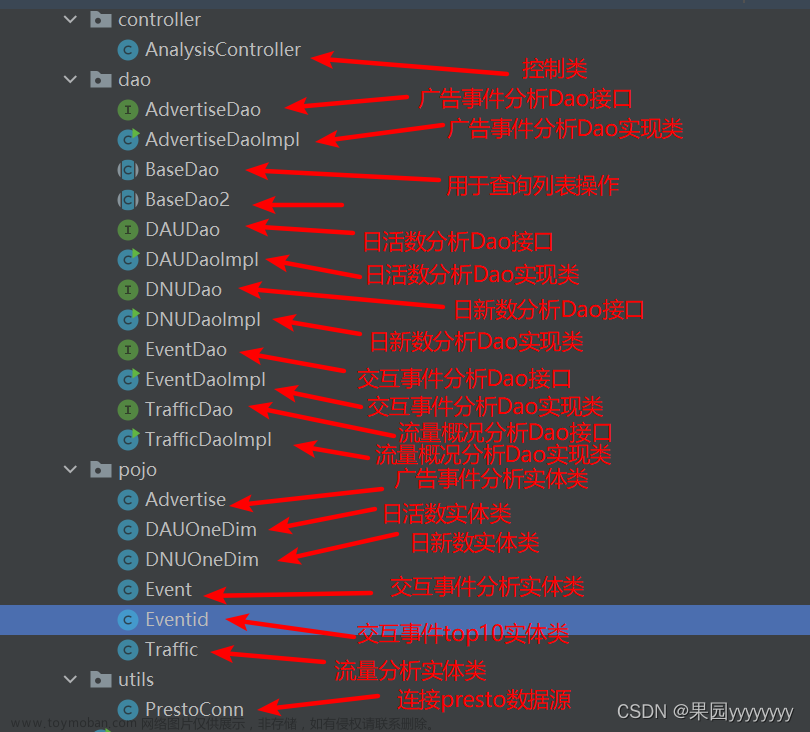

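(1) Back-end packages

(2) Front-end packages

6. Data Warehouse Table Structure Design

7. Project Setup

7.1. Project Structure

7.2. Modify the Parent Project's pom File

8. Dictionary Construction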

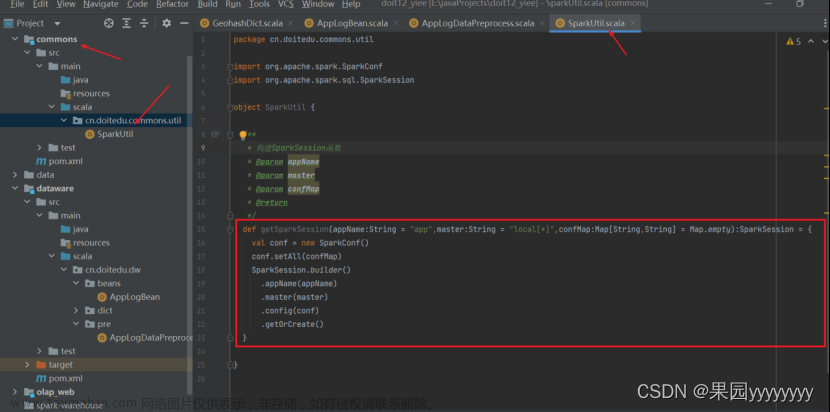

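8.1. Write a Function to Build the SparkSession

A function that builds the SparkSession is written in the commons subproject so that later code can call it directly.

8.2. Geographic Location Dictionary Construction

8.2.1. Extract the Latitude and Longitude of Locations from the Database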

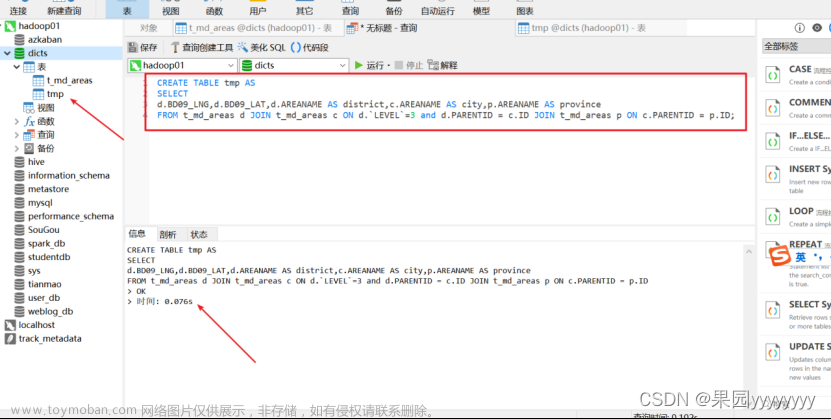

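The t_md_areas.sql file contains coordinate data for the Tencent, Baidu, and Gaode (AMap) map services.

Because the collected log data contains latitude and longitude, the location dictionary t_md_areas.sql is imported into the dicts database, and a SELECT statement stores the province, city, and district corresponding to each coordinate pair in the tmp table.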

CREATE TABLE tmp AS

SELECT

d.BD09_LNG,d.BD09_LAT,d.AREANAME AS district,c.AREANAME AS city,p.AREANAME AS province

FROM t_md_areas d JOIN t_md_areas c ON d.`LEVEL`=3 and d.PARENTID = c.ID JOIN t_md_areas p ON c.PARENTID = p.ID;

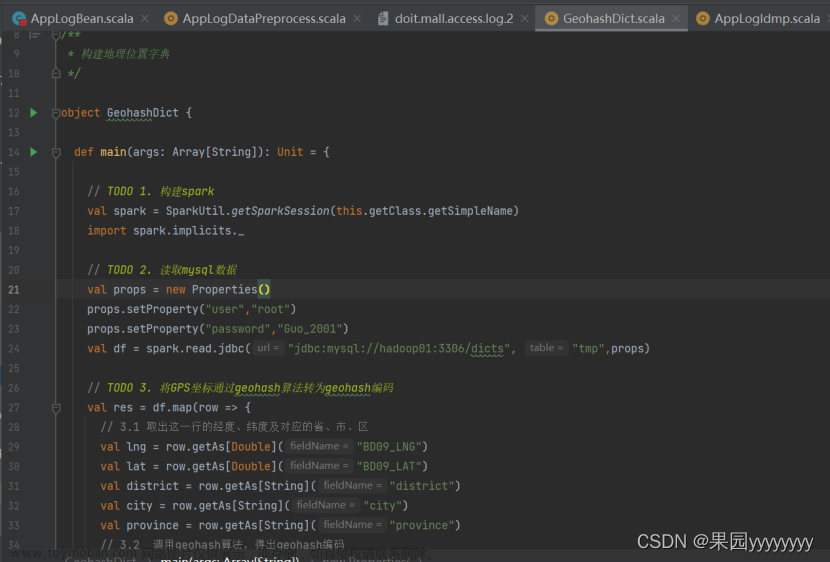

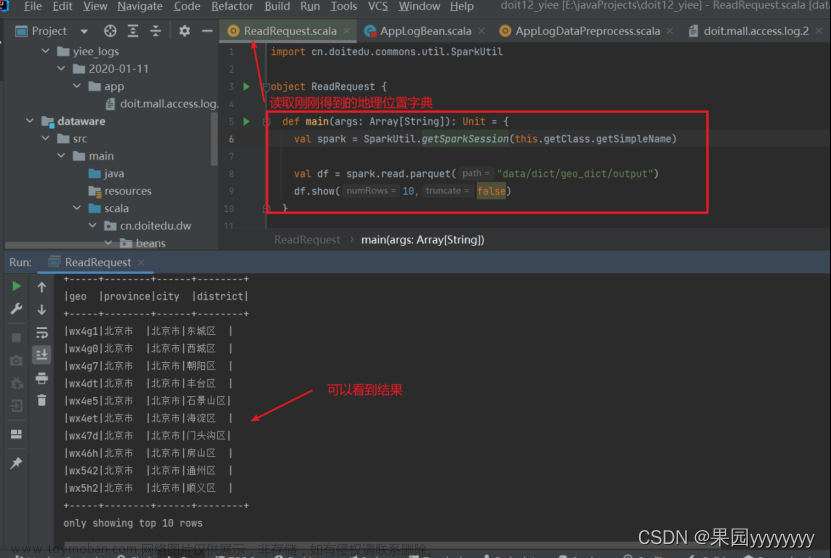

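8.2.2. Convert Geographic Coordinates to GeoHash Codes

1. Add the geohash dependency, the MySQL dependency, and the commons subproject dependency to the dataware subproject

2. Write the code

3. Run the test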

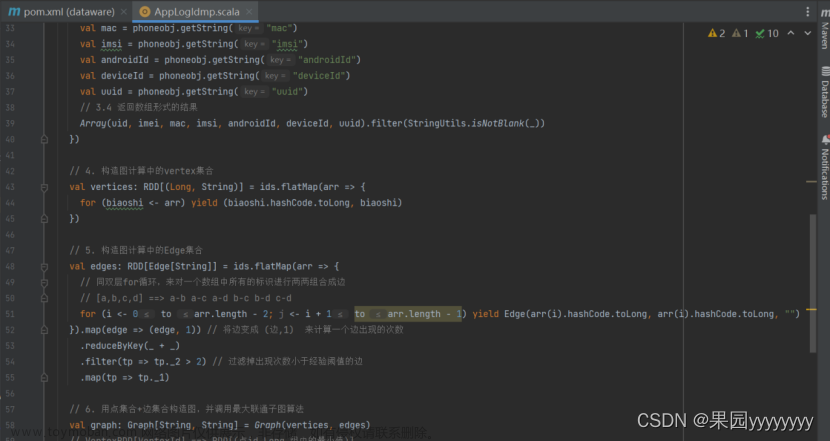

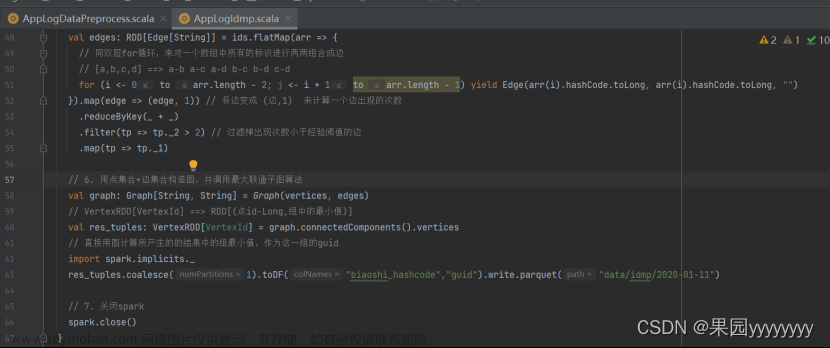
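The coordinate-to-GeoHash conversion can be illustrated with a small self-contained sketch. The project itself relies on a geohash library inside a Spark job; the pure-Python function below is only an illustration of the encoding, and the name `geohash_encode` and the default precision of 5 characters are assumptions, not taken from the project code.

```python
BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # standard GeoHash alphabet

def geohash_encode(lat: float, lng: float, precision: int = 5) -> str:
    """Encode a latitude/longitude pair as a GeoHash string."""
    lat_range = [-90.0, 90.0]
    lng_range = [-180.0, 180.0]
    chars = []
    bits, bit_count = 0, 0
    use_lng = True  # bits alternate, starting with longitude
    while len(chars) < precision:
        rng, value = (lng_range, lng) if use_lng else (lat_range, lat)
        mid = (rng[0] + rng[1]) / 2
        if value >= mid:
            bits = (bits << 1) | 1   # upper half: emit 1, raise lower bound
            rng[0] = mid
        else:
            bits <<= 1               # lower half: emit 0, lower upper bound
            rng[1] = mid
        use_lng = not use_lng
        bit_count += 1
        if bit_count == 5:           # every 5 bits become one base-32 character
            chars.append(BASE32[bits])
            bits, bit_count = 0, 0
    return "".join(chars)

print(geohash_encode(42.6, -5.6))
```

Points that fall in the same cell share the same code, so the code can serve as the dictionary key that maps a coordinate to its province, city, and district. A longer precision gives smaller cells.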

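8.3. ID Mapping Dictionary Construction

8.3.1. Add the fastjson Dependency to the Parent pom

8.3.2. Add the graphx Dependency to the dataware Subproject

8.3.3. Write the Code

8.3.4. Run the Test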

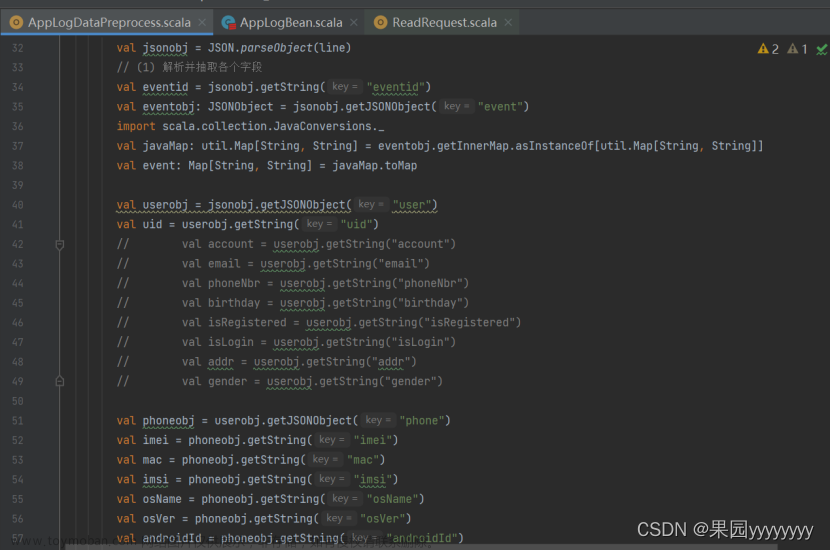
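ID mapping is commonly framed as a connected-components problem: identifiers (uid, imei, mac, and so on) that appear in the same log record are linked, and every identifier in one component is mapped to the same guid. The project presumably does this with Spark GraphX; the sketch below swaps in a plain-Python union-find instead. The function name and the choice of the smallest identifier as the component's representative are assumptions made for illustration.

```python
def build_id_mapping(records):
    """Map every identifier to the representative of its connected component."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            if rb < ra:                    # keep the smaller root: deterministic result
                ra, rb = rb, ra
            parent[rb] = ra

    for ids in records:
        ids = [i for i in ids if i]        # skip empty identifiers
        if ids:
            find(ids[0])                   # register single-identifier records too
        for other in ids[1:]:
            union(ids[0], other)
    return {i: find(i) for i in parent}

# Hypothetical records: each list holds the identifiers seen in one log line
records = [
    ["uid_1", "imei_a", "mac_x"],
    ["imei_a", "mac_y"],
    ["uid_2", "imei_b"],
    ["", "mac_y", "androidId_9"],
]
mapping = build_id_mapping(records)
print(mapping["uid_1"] == mapping["androidId_9"])
```

Here the first, second, and fourth records end up in one component because they are chained through `imei_a` and `mac_y`, while `uid_2` and `imei_b` form a separate one.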

9. Data Preprocessing

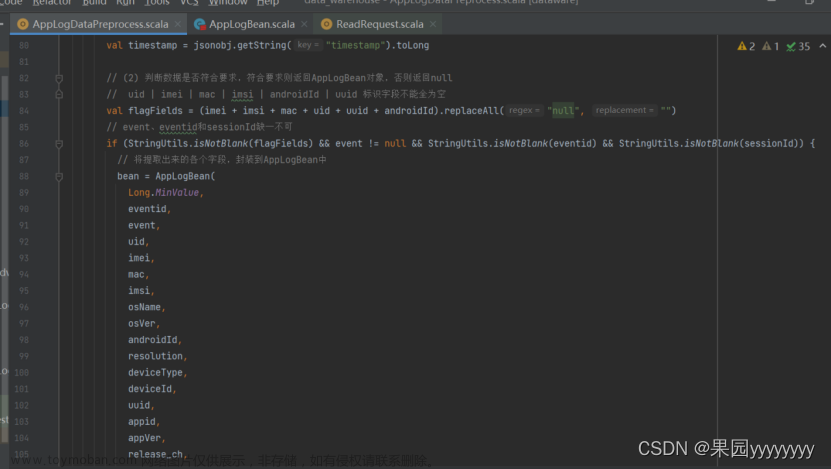

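(1) Data loading: load the current day's app event-tracking log data

(2) Data parsing: parse the JSON; a successful parse returns a LogBean object and a failed parse returns null

(3) Data filtering: filter the parsed results, discarding dirty records with incomplete JSON and records that violate the rules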

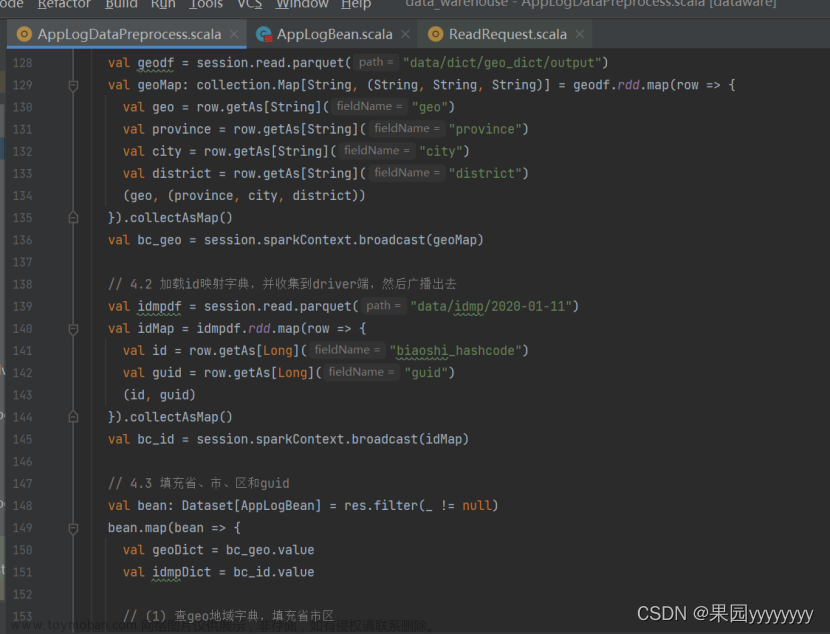

(4) Data integration: enrich the data with dictionary knowledge, filling in province, city, district, and guid
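The parsing and filtering steps above can be sketched as follows. This is a minimal illustration in plain Python rather than the project's Spark code. The field names come from the ODS table definition, but the concrete filtering rules (`REQUIRED_FIELDS` and "at least one identifier") are assumptions, since the rules themselves are not spelled out here.

```python
import json

ID_FIELDS = ("uid", "imei", "mac", "imsi", "androidId", "deviceId", "uuid")
REQUIRED_FIELDS = ("eventid", "sessionId", "timestamp")  # assumed rule set

def parse_line(line):
    """Return the parsed record as a dict, or None when the JSON is broken."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return None
    return record if isinstance(record, dict) else None

def is_valid(record):
    """Assumed rules: required fields present and at least one identifier."""
    if record is None:
        return False
    if any(not record.get(f) for f in REQUIRED_FIELDS):
        return False
    return any(record.get(f) for f in ID_FIELDS)

def preprocess(lines):
    parsed = (parse_line(line) for line in lines)
    return [r for r in parsed if is_valid(r)]

lines = [
    '{"eventid": "pgviewEvent", "sessionId": "s1", "timestamp": 1655251200000, "imei": "i1"}',
    '{"eventid": "pgviewEvent", "sessionId": "s1"',  # truncated JSON
    '{"eventid": "adShowEvent", "sessionId": "s2", "timestamp": 1655251201000}',  # no identifier
]
clean = preprocess(lines)
print(len(clean))
```

Returning `None` for unparseable lines keeps parsing and filtering separate, which mirrors the "parse, then filter" order of the steps.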

9.1. Write the Code

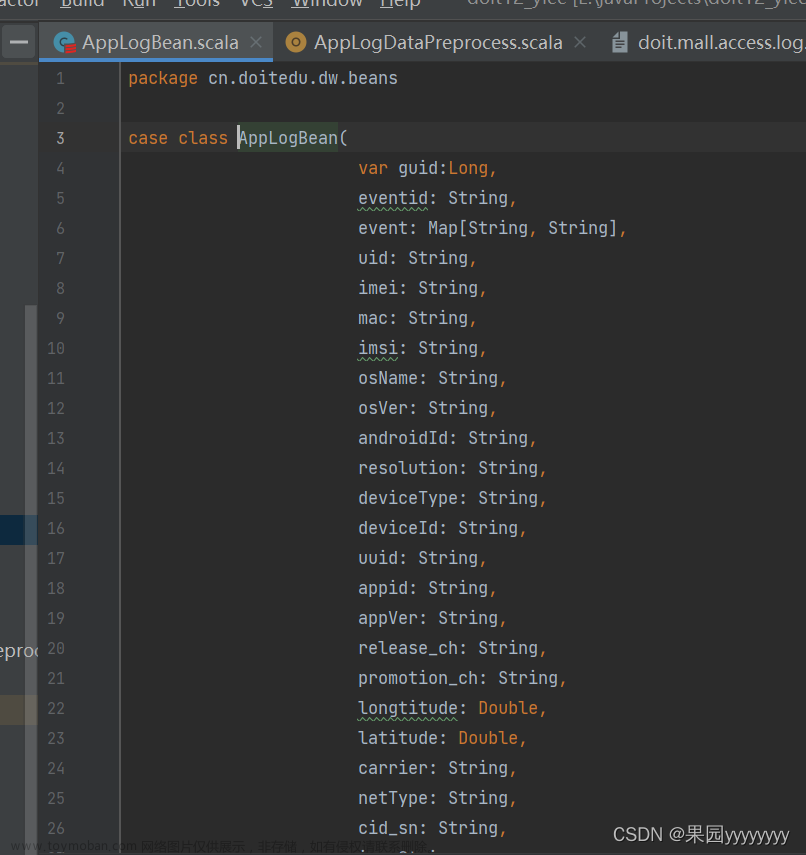

9.1.1. Write the AppLogBean Entity Class

9.1.2. Write AppLogDataPreprocess

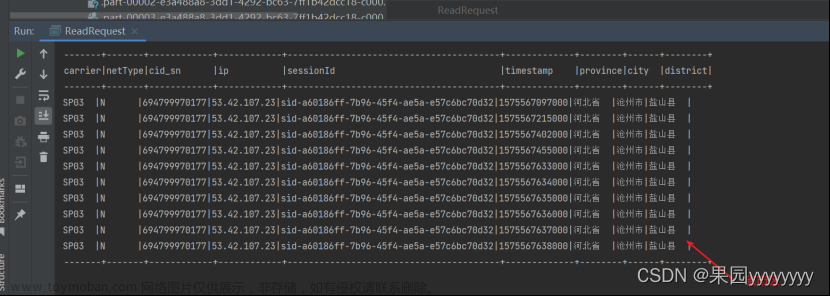

9.2. Run the Test

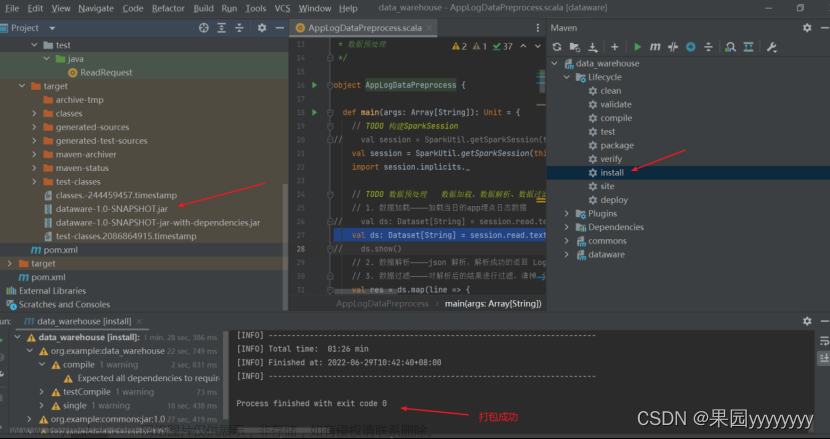

9.3. Package and Submit for Online Execution

9.3.1. Replace Hard-Coded Paths in the Code with Parameters

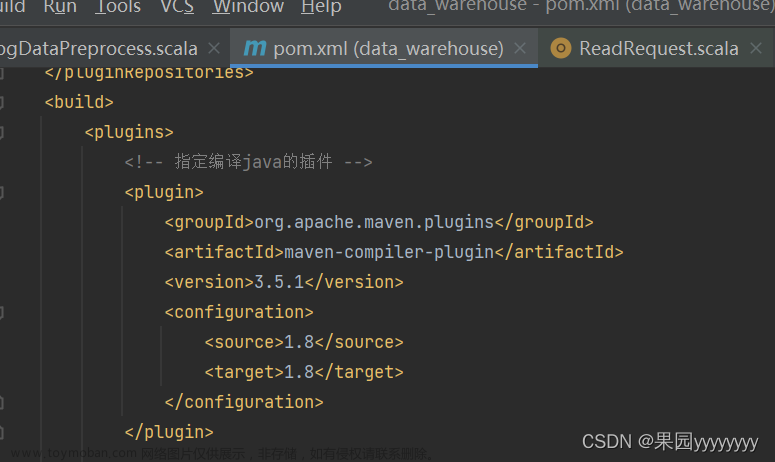

9.3.2. Add the Java and Scala Compiler Plugins to the Parent Project

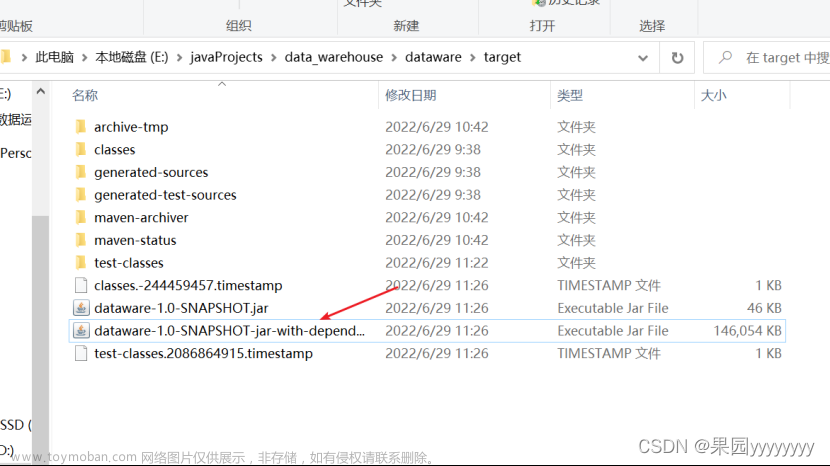

9.3.3. Package the Project and Upload It

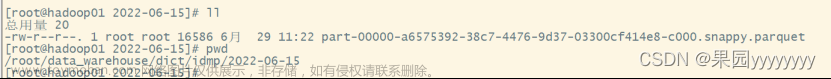

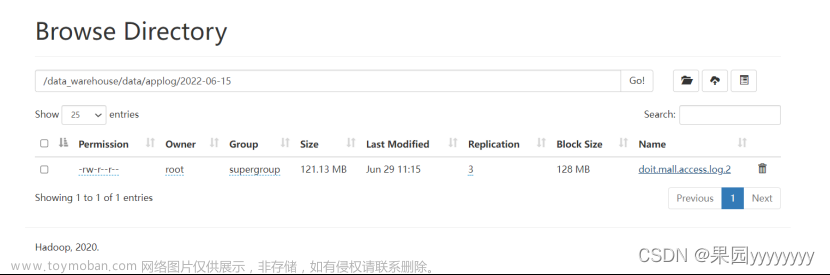

9.3.4. Upload the Data Files to HDFS

9.3.5. Submit the Project jar

bin/spark-submit \

--master spark://hadoop01:7077 \

--class cn.doitedu.dw.pre.AppLogDataPreprocess \

/root/dataware-1.0-SNAPSHOT-jar-with-dependencies.jar \

/data_warehouse/data/applog/2022-06-15 \

/data_warehouse/data/dict/area \

/data_warehouse/data/dict/idmp/2022-06-15 \

/data_warehouse/data/applog-output/2022-06-15 \

local

10. Data Warehouse Construction

10.1. ODS Layer Data Loading

10.1.1. Start Hive

10.1.2. Create the data_warehouse Database

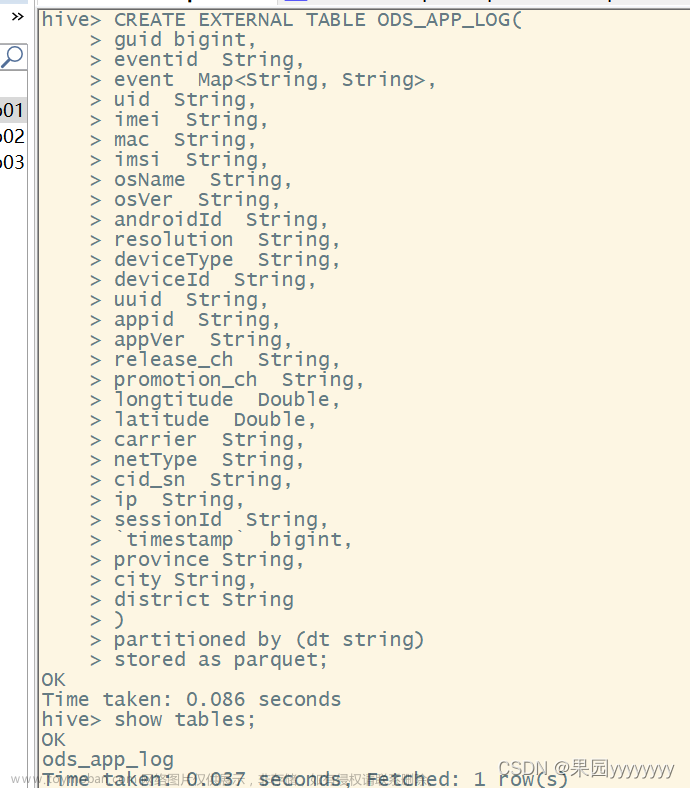

10.1.3. Create the External Partitioned Table ODS_APP_LOG

CREATE EXTERNAL TABLE ODS_APP_LOG(

guid bigint,

eventid String,

event Map<String, String>,

uid String,

imei String,

mac String,

imsi String,

osName String,

osVer String,

androidId String,

resolution String,

deviceType String,

deviceId String,

uuid String,

appid String,

appVer String,

release_ch String,

promotion_ch String,

longtitude Double,

latitude Double,

carrier String,

netType String,

cid_sn String,

ip String,

sessionId String,

`timestamp` bigint,

province String,

city String,

district String

)

partitioned by (dt string)

stored as parquet;

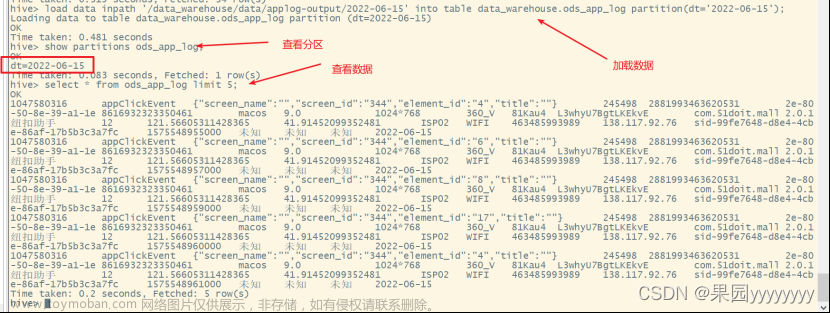

10.1.4. Load the Data

load data inpath '/data_warehouse/data/applog-output/2022-06-15' into table data_warehouse.ods_app_log partition(dt='2022-06-15');

show partitions ods_app_log;

select * from ods_app_log limit 5;

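10.2. DWD Layer Modeling and Development

- Global data detail table: DWD_APL_GLB_DTL

- Page description dimension table: DIM_PG_INFO

- Traffic event detail table: DWD_APL_TFC_DTL

- Interaction event detail table: DWD_APL_ITR_DTL

- Advertisement description dimension table: DIM_AD_INFO

- Advertising event detail table: DWD_APL_ADV_DTL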

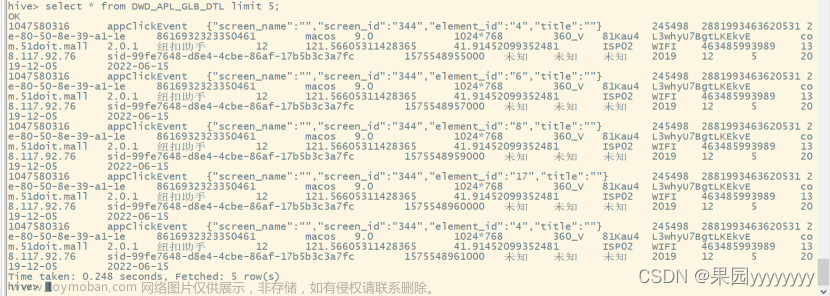

10.2.1. Global Data Detail Table: DWD_APL_GLB_DTL

1. Create the DWD_APL_GLB_DTL table

CREATE TABLE DWD_APL_GLB_DTL(

guid bigint COMMENT '用户全局统一标识',

eventid String COMMENT '事件ID',

event Map<String, String> COMMENT '事件详情信息',

uid String COMMENT '用户登录账号的id',

imei String,

mac String,

imsi String,

osName String,

osVer String,

androidId String,

resolution String,

deviceType String,

deviceId String,

uuid String,

appid String,

appVer String,

release_ch String,

promotion_ch String,

longtitude Double,

latitude Double,

carrier String,

netType String,

cid_sn String,

ip String,

sessionId String,

`timestamp` bigint,

province String,

city String,

district String,

year string,

month string,

day string,

datestr string

)

COMMENT '全局数据明细表'

partitioned by (dt string)

stored as parquet;

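2. Extend the data

Split the event timestamp into year, month, and day, and add these, together with a date string, as new fields of the global table.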

INSERT INTO TABLE DWD_APL_GLB_DTL PARTITION(dt='2022-06-15')

SELECT

guid,

eventid,

event,

uid,

imei,

mac,

imsi,

osName,

osVer,

androidId,

resolution,

deviceType,

deviceId,

uuid,

appid,

appVer,

release_ch,

promotion_ch,

longtitude,

latitude,

carrier,

netType,

cid_sn,

ip,

sessionId,

`timestamp`,

province,

city,

district,

year(from_unixtime(cast(`timestamp`/1000 as bigint))) as year,

month(from_unixtime(cast(`timestamp`/1000 as bigint))) as month,

day(from_unixtime(cast(`timestamp`/1000 as bigint))) as day,

from_unixtime(cast(`timestamp`/1000 as bigint),'yyyy-MM-dd') AS datestr

FROM ODS_APP_LOG WHERE dt='2022-06-15';
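The derivation of the four date columns can be checked outside Hive with a short sketch. It mirrors the query's arithmetic (milliseconds divided by 1000, then formatted) in Python. Hive's `from_unixtime` formats in the session's time zone, so the sketch fixes one explicitly; the UTC+8 offset and the function name `expand_date` are assumptions.

```python
from datetime import datetime, timedelta, timezone

TZ = timezone(timedelta(hours=8))  # assumption: the cluster runs in UTC+8

def expand_date(ts_millis: int):
    """Mirror the year/month/day/datestr columns derived from `timestamp`."""
    dt = datetime.fromtimestamp(ts_millis // 1000, tz=TZ)
    return dt.year, dt.month, dt.day, dt.strftime("%Y-%m-%d")

print(expand_date(1655251200000))
```

Events close to midnight land in a different `datestr` depending on the time zone, which is worth keeping in mind when `datestr` is compared with the `dt` partition value.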

3. View the results

10.2.2. Page Description Dimension Table: DIM_PG_INFO

1. Create the DIM_PG_INFO table

CREATE TABLE DIM_PG_INFO(

pgid STRING,

channel STRING,

category STRING,

url STRING

)

COMMENT '页面描述信息维表'

ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

2. Upload the page description file to the virtual machine

3. Load the data and view the results

load data local inpath '/root/data_warehouse/dim/dim_pg.txt' into table DIM_PG_INFO;

10.2.3. Traffic Event Detail Table: DWD_APL_TFC_DTL

1. Create the DWD_APL_TFC_DTL table

CREATE TABLE DWD_APL_TFC_DTL(

guid bigint COMMENT '用户全局统一标识',

eventid String COMMENT '事件ID',

event Map<String, String> COMMENT '事件详情信息',

uid String COMMENT '用户登录账号的id',

imei String,

mac String,

imsi String,

osName String,

osVer String,

androidId String,

resolution String,

deviceType String,

deviceId String,

uuid String,

appid String,

appVer String,

release_ch String,

promotion_ch String,

longtitude Double,

latitude Double,

carrier String,

netType String,

cid_sn String,

ip String,

sessionId String,

`timestamp` bigint,

province String,

city String,

district String,

year string,

month string,

day string,

datestr string,

channel STRING , -- channel

category STRING, -- page category (content detail page, channel page, home page, campaign page)

url STRING -- page URL

)

COMMENT '流量事件明细表'

partitioned by (dt string)

stored as parquet;

2. Add the channel, page category, and page URL for page view events (pgviewEvent)

INSERT INTO TABLE DWD_APL_TFC_DTL PARTITION(dt='2022-06-15')

SELECT

a.guid,

a.eventid,

a.event,

a.uid,

a.imei,

a.mac,

a.imsi,

a.osName,

a.osVer,

a.androidId,

a.resolution,

a.deviceType,

a.deviceId,

a.uuid,

a.appid,

a.appVer,

a.release_ch,

a.promotion_ch,

a.longtitude,

a.latitude,

a.carrier,

a.netType,

a.cid_sn,

a.ip,

a.sessionId,

a.`timestamp`,

a.province,

a.city,

a.district,

a.year,

a.month,

a.day,

a.datestr,

b.channel,

b.category,

b.url

FROM

(SELECT * FROM DWD_APL_GLB_DTL WHERE dt='2022-06-15' and eventid='pgviewEvent') a

JOIN DIM_PG_INFO b ON a.event['pgId'] = b.pgid;

3. View the results

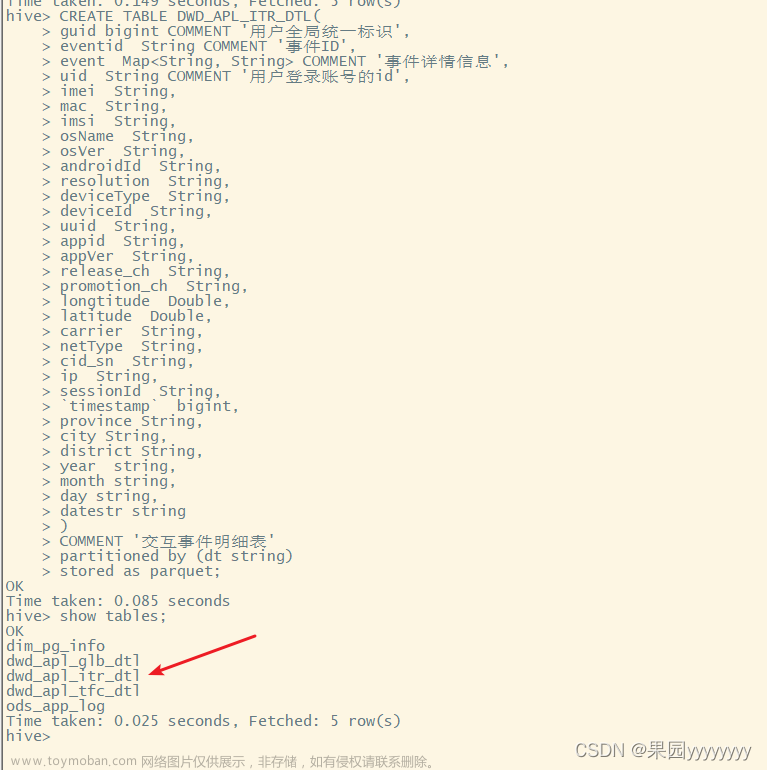

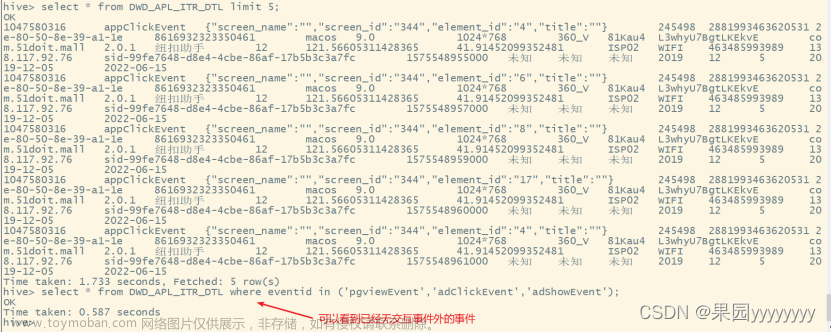

10.2.4. Interaction Event Detail Table: DWD_APL_ITR_DTL

1. Create the DWD_APL_ITR_DTL table

CREATE TABLE DWD_APL_ITR_DTL(

guid bigint COMMENT '用户全局统一标识',

eventid String COMMENT '事件ID',

event Map<String, String> COMMENT '事件详情信息',

uid String COMMENT '用户登录账号的id',

imei String,

mac String,

imsi String,

osName String,

osVer String,

androidId String,

resolution String,

deviceType String,

deviceId String,

uuid String,

appid String,

appVer String,

release_ch String,

promotion_ch String,

longtitude Double,

latitude Double,

carrier String,

netType String,

cid_sn String,

ip String,

sessionId String,

`timestamp` bigint,

province String,

city String,

district String,

year string,

month string,

day string,

datestr string

)

COMMENT '交互事件明细表'

partitioned by (dt string)

stored as parquet;

2. Enable dynamic partitioning

set hive.exec.dynamic.partition.mode=nonstrict;

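3. Load the interaction-event log data into the DWD_APL_ITR_DTL table

Every event other than the page view event (pgviewEvent) and the advertising events (adClickEvent, adShowEvent) is an interaction event.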

INSERT INTO TABLE DWD_APL_ITR_DTL PARTITION(dt)

SELECT * FROM DWD_APL_GLB_DTL

WHERE dt='2022-06-15' AND eventid not in ('pgviewEvent','adClickEvent','adShowEvent');

4. View the results

10.2.5. Advertisement Description Dimension Table: DIM_AD_INFO

1. Create the DIM_AD_INFO table

CREATE TABLE DIM_AD_INFO(

adid STRING, -- advertisement ID

medium STRING, -- advertisement media format

campaign STRING -- advertisement campaign name

)

COMMENT '广告信息描述维表'

ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

2. Upload the advertisement description file to the virtual machine

3. Load the data and view the results

load data local inpath '/root/data_warehouse/dim/dim_ad.txt' into table DIM_AD_INFO;

10.2.6. Advertising Event Detail Table: DWD_APL_ADV_DTL

1. Create the DWD_APL_ADV_DTL table

CREATE TABLE DWD_APL_ADV_DTL(

guid bigint COMMENT '用户全局统一标识',

eventid String COMMENT '事件ID',

event Map<String, String> COMMENT '事件详情信息',

uid String COMMENT '用户登录账号的id',

imei String,

mac String,

imsi String,

osName String,

osVer String,

androidId String,

resolution String,

deviceType String,

deviceId String,

uuid String,

appid String,

appVer String,

release_ch String,

promotion_ch String,

longtitude Double,

latitude Double,

carrier String,

netType String,

cid_sn String,

ip String,

sessionId String,

`timestamp` bigint,

province String,

city String,

district String,

year string,

month string,

day string,

datestr string,

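channel STRING , -- channel

category STRING, -- page category (content detail page, channel page, home page, campaign page)

url STRING, -- page URL

medium STRING, -- advertisement media format

campaign STRING -- advertisement campaign name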

)

COMMENT '广告事件明细表'

partitioned by (dt string)

stored as parquet;

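2. Join the global detail table DWD_APL_GLB_DTL with the page description dimension table DIM_PG_INFO and the advertisement description dimension table DIM_AD_INFO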

with a as

(

SELECT * FROM DWD_APL_GLB_DTL

WHERE dt='2022-06-15' and (eventid='adShowEvent' or eventid='adClickEvent')

)

INSERT INTO TABLE DWD_APL_ADV_DTL PARTITION(dt='2022-06-15')

SELECT

a.guid ,

a.eventid ,

a.event ,

a.uid ,

a.imei ,

a.mac ,

a.imsi ,

a.osName ,

a.osVer ,

a.androidId ,

a.resolution ,

a.deviceType ,

a.deviceId ,

a.uuid ,

a.appid ,

a.appVer ,

a.release_ch ,

a.promotion_ch ,

a.longtitude ,

a.latitude ,

a.carrier ,

a.netType ,

a.cid_sn ,

a.ip ,

a.sessionId ,

a.`timestamp` ,

a.province ,

a.city ,

a.district ,

a.year ,

a.month ,

a.day ,

a.datestr ,

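b.channel , -- channel

b.category , -- page category (content detail page, channel page, home page, campaign page)

b.url , -- page URL

c.medium , -- advertisement media format

c.campaign -- advertisement campaign name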

FROM a JOIN dim_pg_info b ON a.event['pgId']=b.pgid JOIN dim_ad_info c ON a.event['adId']=c.adid;

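3. View the results

10.3. Traffic Overview Analysis

- Traffic session aggregation table: DWS_APL_TFC_AGS

- Traffic user aggregation table: DWS_APL_TFC_AGU

- Multidimensional traffic overview report: ADS_APL_TFC_OVW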

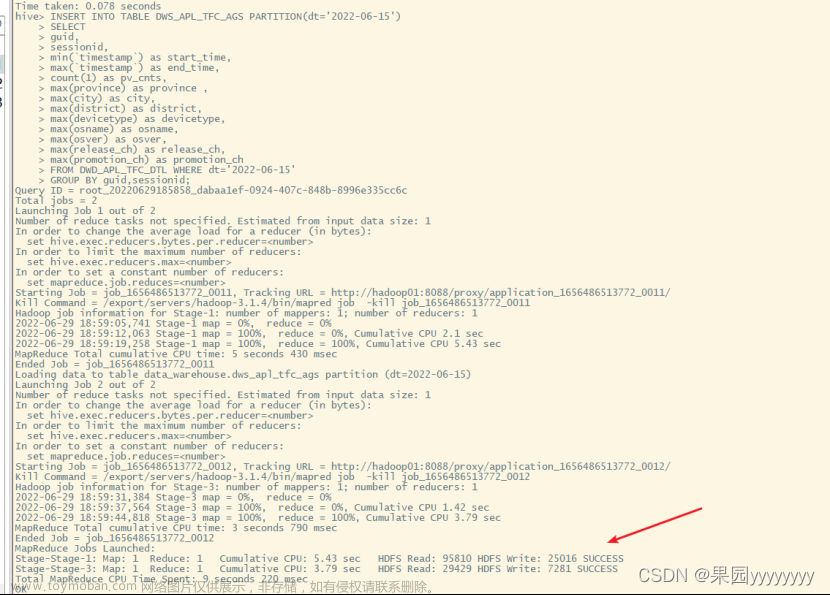

10.3.1. DWS Layer Modeling and Development

1. Traffic session aggregation table: DWS_APL_TFC_AGS

(1) Create the DWS_APL_TFC_AGS table

CREATE TABLE DWS_APL_TFC_AGS(

guid bigint,

sessionid string,

start_time bigint,

end_time bigint,

pv_cnts int,

province string,

city string,

district string,

devicetype string,

osname string,

osver string,

release_ch string,

promotion_ch string

)

COMMENT '流量会话聚合表'

PARTITIONED BY (dt string)

STORED AS PARQUET;

(2) Compute each session's start time, end time, and page view count

INSERT INTO TABLE DWS_APL_TFC_AGS PARTITION(dt='2022-06-15')

SELECT

guid,

sessionid,

min(`timestamp`) as start_time,

max(`timestamp`) as end_time,

count(1) as pv_cnts,

max(province) as province ,

max(city) as city,

max(district) as district,

max(devicetype) as devicetype,

max(osname) as osname,

max(osver) as osver,

max(release_ch) as release_ch,

max(promotion_ch) as promotion_ch

FROM DWD_APL_TFC_DTL WHERE dt='2022-06-15'

GROUP BY guid,sessionid;

(3) View the results

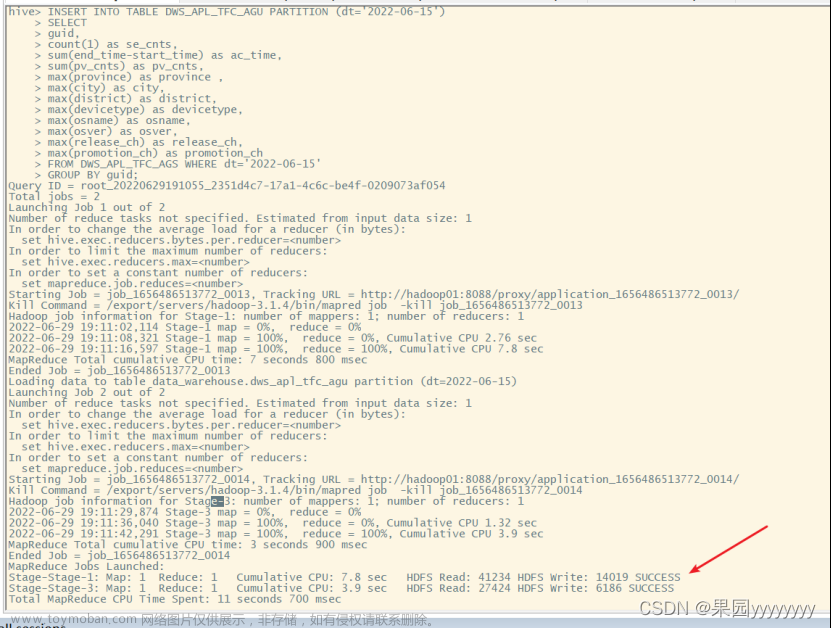

2. Traffic user aggregation table: DWS_APL_TFC_AGU

(1) Create the DWS_APL_TFC_AGU table

CREATE TABLE DWS_APL_TFC_AGU(

guid bigint,

se_cnts int,

ac_time bigint,

pv_cnts int,

province string,

city string,

district string,

devicetype string,

osname string,

osver string,

release_ch string,

promotion_ch string

)

COMMENT '流量用户聚合表'

PARTITIONED BY (dt string)

stored as parquet;

(2) Compute each user's visit count, total visit duration, and total page views

INSERT INTO TABLE DWS_APL_TFC_AGU PARTITION (dt='2022-06-15')

SELECT

guid,

count(1) as se_cnts,

sum(end_time-start_time) as ac_time,

sum(pv_cnts) as pv_cnts,

max(province) as province ,

max(city) as city,

max(district) as district,

max(devicetype) as devicetype,

max(osname) as osname,

max(osver) as osver,

max(release_ch) as release_ch,

max(promotion_ch) as promotion_ch

FROM DWS_APL_TFC_AGS WHERE dt='2022-06-15'

GROUP BY guid;

(3) View the results

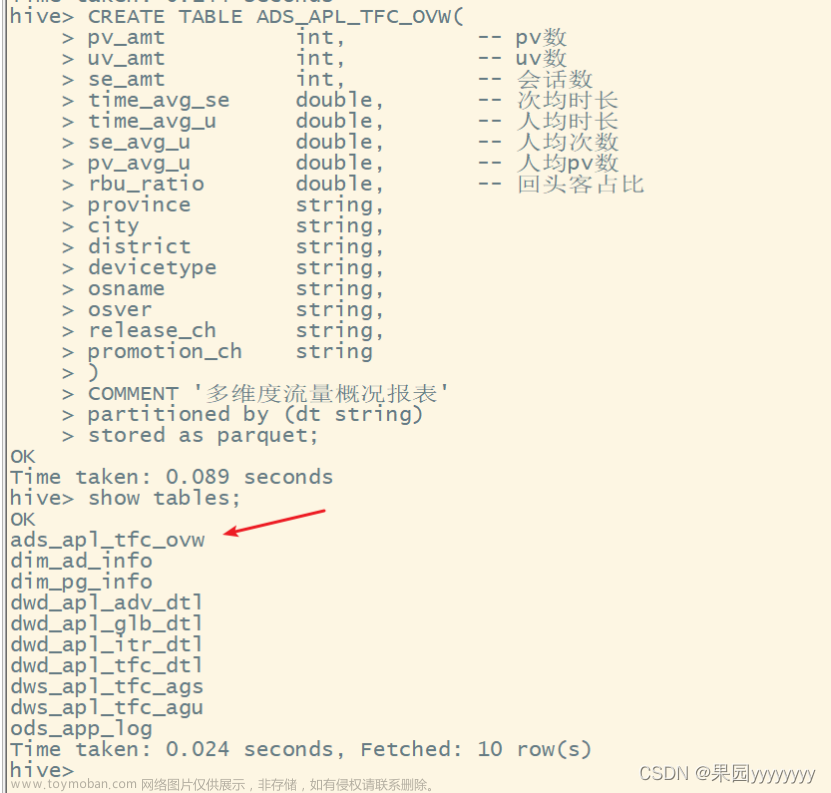

10.3.2. ADS Layer Report Development

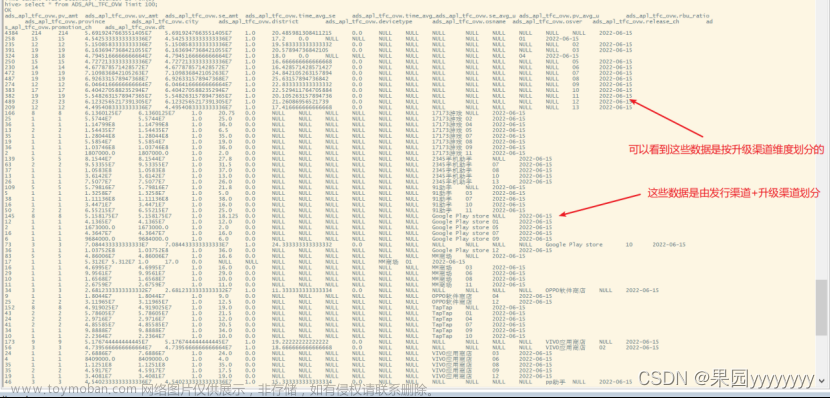

1. Multidimensional traffic overview report: ADS_APL_TFC_OVW

(1) Create the ADS_APL_TFC_OVW table

CREATE TABLE ADS_APL_TFC_OVW(

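pv_amt int, -- PV count

uv_amt int, -- UV count

se_amt int, -- session count

time_avg_se double, -- average duration per session

time_avg_u double, -- average duration per user

se_avg_u double, -- average sessions per user

pv_avg_u double, -- average PV per user

rbu_ratio double, -- share of returning visitors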

province string,

city string,

district string,

devicetype string,

osname string,

osver string,

release_ch string,

promotion_ch string

)

COMMENT '多维度流量概况报表'

partitioned by (dt string)

stored as parquet;

(2) Set the grouping-set parameter

set hive.new.job.grouping.set.cardinality=1024;

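(3) Across all dimension combinations, compute total PV, total UV, total visits, average duration per visit, average visits per user, average visit depth per user, average duration per user, and the share of returning visitors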

INSERT INTO TABLE ADS_APL_TFC_OVW PARTITION(dt='2022-06-15')

SELECT

sum(pv_cnts) as pv_amt,

count(distinct guid) as uv_amt,

sum(se_cnts) as se_amt,

sum(ac_time)/sum(se_cnts) as time_avg_se,

sum(ac_time)/count(distinct guid) as time_avg_u,

sum(se_cnts)/count(distinct guid) as se_avg_u,

sum(pv_cnts)/count(distinct guid) as pv_avg_u,

count(if(se_cnts>=2,"ok",null))/count(distinct guid) as rbu_ratio,

province,

city,

district,

devicetype,

osname,

osver,

release_ch,

promotion_ch

FROM DWS_APL_TFC_AGU WHERE dt='2022-06-15'

GROUP BY province,city,district,devicetype,osname,osver,release_ch,promotion_ch

WITH CUBE;
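To see what `WITH CUBE` expands to, the sketch below enumerates the grouping sets for the eight dimension columns of this query. It is plain Python used only for counting and listing; the helper name `cube_grouping_sets` is hypothetical.

```python
from itertools import combinations

DIMENSIONS = ["province", "city", "district", "devicetype",
              "osname", "osver", "release_ch", "promotion_ch"]

def cube_grouping_sets(dims):
    """All subsets of dims, from the full set down to the grand total ()."""
    return [combo
            for size in range(len(dims), -1, -1)
            for combo in combinations(dims, size)]

grouping_sets = cube_grouping_sets(DIMENSIONS)
print(len(grouping_sets))   # 2 ** 8 = 256 grouping sets
print(grouping_sets[-1])    # () is the grand total row
```

Each grouping set contributes its own rows to the report, with NULL in every dimension column that is not part of the set. The front end can therefore read a single dimension, such as province, by selecting rows where only that column is non-NULL.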

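(4) View the results

10.4. Daily New and Daily Active User Analysis

10.4.1. DWS Layer Modeling and Development

1. Daily active user record table: DWS_APL_DAU_REC

(1) Create the DWS_APL_DAU_REC table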

CREATE TABLE DWS_APL_DAU_REC(

guid bigint,

province string,

city string,

district string,

appver string,

devicetype string

)

COMMENT '日活记录表'

PARTITIONED BY (dt string)

stored as parquet;

(2) Extract the daily active user records

INSERT INTO TABLE DWS_APL_DAU_REC PARTITION(dt='2022-06-15')

SELECT

guid,province,city,district,appver,devicetype

FROM DWD_APL_GLB_DTL WHERE dt='2022-06-15'

GROUP BY guid,province,city,district,appver,devicetype;

(3) View the results

2. Historical visit record table: DWS_APL_HSU_REC

(1) Create the DWS_APL_HSU_REC table

CREATE TABLE DWS_APL_HSU_REC(

guid bigint,

first_dt string,

last_dt string

)

COMMENT '历史访问记录表'

PARTITIONED BY (dt string)

stored as parquet;

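(2) Update the historical visit record table

First take all guids from the current day's daily active records (tmp), then FULL JOIN tmp with the history table.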

WITH tmp AS (

SELECT guid

FROM DWS_APL_DAU_REC WHERE dt='2022-06-15'

GROUP BY guid

)

INSERT INTO TABLE DWS_APL_HSU_REC PARTITION(dt='2022-06-15')

SELECT

if(tmp.guid is not null,tmp.guid,his.guid) as guid,

if(his.first_dt is not null,his.first_dt,'2022-06-15') as first_dt,

if(tmp.guid is not null,'2022-06-15',his.last_dt) as last_dt

FROM tmp FULL JOIN (SELECT guid,first_dt,last_dt FROM DWS_APL_HSU_REC WHERE dt='2022-06-14') his ON tmp.guid=his.guid;
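The FULL JOIN update above follows a simple rule per user, which the following sketch restates in plain Python. The dictionary layout and the function name `update_history` are assumptions made for illustration; the three cases are the ones encoded by the `if(...)` expressions in the query.

```python
def update_history(history, active_today, today):
    """history: {guid: (first_dt, last_dt)}; active_today: iterable of guids."""
    updated = dict(history)              # users not seen today keep their row
    for guid in set(active_today):
        # known user: keep first_dt; new user: first_dt is today
        first_dt = history[guid][0] if guid in history else today
        updated[guid] = (first_dt, today)
    return updated

history = {1: ("2022-06-10", "2022-06-14"), 2: ("2022-06-12", "2022-06-12")}
updated = update_history(history, [1, 3, 3], "2022-06-15")
print(updated)
```

User 1 was already known and is active today, so only `last_dt` moves. User 2 is absent and stays unchanged, and user 3 is new, so both dates are today.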

(3) View the results

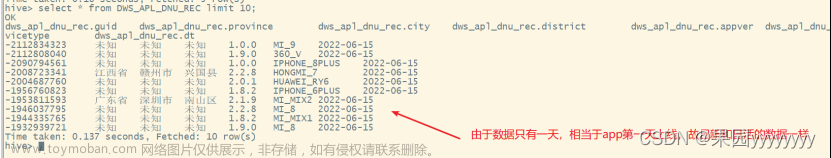

3. Daily new user record table: DWS_APL_DNU_REC

(1) Create the DWS_APL_DNU_REC table

CREATE TABLE DWS_APL_DNU_REC(

guid bigint,

province string,

city string,

district string,

appver string,

devicetype string

)

COMMENT '日新用户记录表'

PARTITIONED BY (dt string)

stored as parquet;

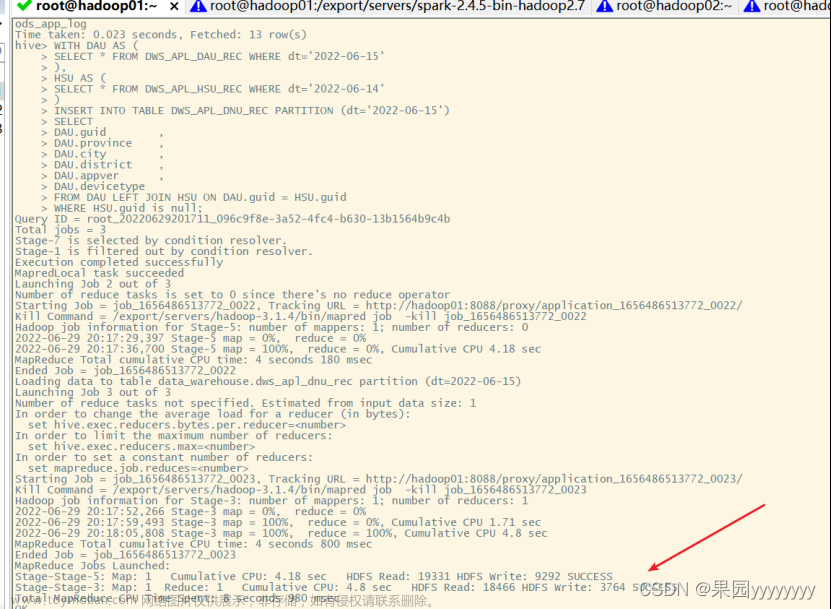

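(2) LEFT JOIN the current day's daily active records with the previous day's historical visit records and keep the users that have no match in the history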

WITH DAU AS (

SELECT * FROM DWS_APL_DAU_REC WHERE dt='2022-06-15'

),

HSU AS (

SELECT * FROM DWS_APL_HSU_REC WHERE dt='2022-06-14'

)

INSERT INTO TABLE DWS_APL_DNU_REC PARTITION (dt='2022-06-15')

SELECT

DAU.guid ,

DAU.province ,

DAU.city ,

DAU.district ,

DAU.appver ,

DAU.devicetype

FROM DAU LEFT JOIN HSU ON DAU.guid = HSU.guid

WHERE HSU.guid is null;

(3) View the results

10.4.2. ADS Layer Report Development

1. Daily active multidimensional analysis report: ADS_APL_DAU_CUBE

(1) Create the ADS_APL_DAU_CUBE table

CREATE TABLE ADS_APL_DAU_CUBE(

dau_cnts int,

province string,

city string,

district string,

appver string,

devicetype string

)

COMMENT '日活多维分析报表'

PARTITIONED BY (dt string)

stored as parquet;

(2) Set the grouping-set parameter

set hive.new.job.grouping.set.cardinality=1024;

(3) Multidimensional grouped aggregation

INSERT INTO TABLE ADS_APL_DAU_CUBE PARTITION(dt='2022-06-15')

SELECT

count(distinct guid) as dau_cnts,

province,

city,

district,

appver,

devicetype

FROM DWS_APL_DAU_REC WHERE dt='2022-06-15'

GROUP BY province,city,district,appver,devicetype

WITH CUBE;

(4) View the results

2. Daily new multidimensional analysis report: ADS_APL_DNU_CUBE

(1) Create the ADS_APL_DNU_CUBE table

CREATE TABLE ADS_APL_DNU_CUBE(

dnu_cnts int,

province string,

city string,

district string,

appver string,

devicetype string

)

COMMENT '日新多维分析报表'

PARTITIONED BY (dt string)

stored as parquet;

(2) Multidimensional grouped aggregation

INSERT INTO TABLE ADS_APL_DNU_CUBE PARTITION(dt='2022-06-15')

SELECT

count(distinct guid) as dnu_cnts,

province,

city,

district,

appver,

devicetype

FROM DWS_APL_DNU_REC WHERE dt='2022-06-15'

GROUP BY province,city,district,appver,devicetype

WITH CUBE;

(3) View the results

10.5. New User Retention Analysis

10.5.1. New User Retention Record Table: ADS_APL_NRT_REC

1. Create the ADS_APL_NRT_REC table

CREATE TABLE ADS_APL_NRT_REC(

dt string, -- date

dnu_cnts int, -- total daily new users

nrt_days int, -- retention days

nrt_cnts int -- retained users

)

COMMENT '新用户留存记录表'

STORED AS PARQUET;

2. Compute the total daily new users, retention days, and retained users

INSERT INTO TABLE ADS_APL_NRT_REC

SELECT

first_dt as dt,

count(1) as dnu_cnts,

datediff('2022-06-15',first_dt) as nrt_days,

count(if(last_dt='2022-06-15',1,null)) as nrt_cnts

FROM DWS_APL_HSU_REC WHERE dt='2022-06-15' AND datediff('2022-06-15',first_dt) BETWEEN 1 and 30

GROUP BY first_dt;
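A small worked example may make the retention columns easier to read. The sketch below applies the same grouping to an in-memory history table in plain Python; the sample users and the function name `retention` are invented for illustration.

```python
from collections import defaultdict
from datetime import date

def retention(history, today, max_days=30):
    """history: {guid: (first_dt, last_dt)} with ISO date strings.

    Returns rows of (dt, dnu_cnts, nrt_days, nrt_cnts), one per first_dt.
    """
    today_d = date.fromisoformat(today)
    stats = defaultdict(lambda: [0, 0])  # first_dt -> [new users, retained users]
    for first_dt, last_dt in history.values():
        days = (today_d - date.fromisoformat(first_dt)).days
        if 1 <= days <= max_days:
            stats[first_dt][0] += 1
            if last_dt == today:         # the user came back on the report date
                stats[first_dt][1] += 1
    return sorted(
        (first_dt, new, (today_d - date.fromisoformat(first_dt)).days, kept)
        for first_dt, (new, kept) in stats.items()
    )

history = {
    1: ("2022-06-14", "2022-06-15"),
    2: ("2022-06-14", "2022-06-14"),
    3: ("2022-06-10", "2022-06-15"),
    4: ("2022-06-15", "2022-06-15"),  # new today: outside the 1..30 day window
}
rows = retention(history, "2022-06-15")
print(rows)
```

Of the two users who first appeared on 2022-06-14, one returned on 2022-06-15, so the 1-day retention for that cohort is 1 out of 2.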

3. View the results

10.6. User Activity Composition Analysis

10.6.1. User Continuous Activity Range Record Table: DWS_APL_UCA_RNG

1. Create the DWS_APL_UCA_RNG table

CREATE TABLE DWS_APL_UCA_RNG(

guid bigint, -- user identifier

first_dt string, -- date the user was first seen

rng_start string, -- range start

rng_end string -- range end

)

COMMENT '用户连续活跃区间记录表'

PARTITIONED BY (dt string)

STORED AS ORC;

2. Compute the first-seen date and the start and end of each range

WITH rng as(

-- restrict to the previous day's range records

SELECT

guid,first_dt,rng_start,rng_end

FROM DWS_APL_UCA_RNG

WHERE dt=date_sub('2022-06-15',1)

),

-- restrict to the current day's active users, with guid deduplicated

dau as

(

select distinct(guid)

from dws_apl_dau_rec

where dt='2022-06-15'

)

INSERT INTO TABLE DWS_APL_UCA_RNG PARTITION (dt='2022-06-15')

SELECT

if(rng.guid is not null,rng.guid,dau.guid) as guid,

if(rng.guid is not null,rng.first_dt,'2022-06-15') as first_dt,

if(rng.guid is not null,rng.rng_start,'2022-06-15') as rng_start,

case

when rng.guid is not null and rng.rng_end != '9999-12-31' then rng.rng_end

when rng.guid is not null and rng.rng_end = '9999-12-31' and dau.guid is null then date_sub('2022-06-15',1)

else '9999-12-31'

end as rng_end

FROM rng FULL JOIN dau ON rng.guid=dau.guid

UNION ALL

-- Step 2: for users who had been inactive but came back today, generate a new range record

SELECT

a.guid,

a.first_dt,

'2022-06-15' as rng_start,

'9999-12-31' as rng_end

FROM

(

SELECT

guid,

max(first_dt) as first_dt

FROM rng

GROUP BY guid

HAVING max(rng_end) != '9999-12-31'

) a

JOIN dau

ON a.guid = dau.guid;
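The range update has four cases that are easy to lose in the FULL JOIN and UNION ALL. The sketch below restates them in plain Python over in-memory rows. It is an illustration of the same rules, not the project's code; the function name `update_ranges` and the sample data are assumptions.

```python
OPEN_END = "9999-12-31"  # marker for a range that is still running

def update_ranges(ranges, active_today, today, yesterday):
    """ranges: list of (guid, first_dt, rng_start, rng_end) from yesterday."""
    active = set(active_today)
    result = []
    known = {}        # guid -> first_dt
    has_open = set()  # guids whose latest range is still open
    for guid, first_dt, start, end in ranges:
        known[guid] = first_dt
        if end == OPEN_END:
            has_open.add(guid)
            if guid not in active:
                end = yesterday          # streak broken: close the range
        result.append((guid, first_dt, start, end))
    for guid in sorted(active):
        if guid not in known:            # brand-new user: first range starts today
            result.append((guid, today, today, OPEN_END))
        elif guid not in has_open:       # returning user: open a new range
            result.append((guid, known[guid], today, OPEN_END))
    return result

ranges = [
    (1, "2022-06-01", "2022-06-01", "2022-06-05"),
    (1, "2022-06-01", "2022-06-10", OPEN_END),
    (2, "2022-06-03", "2022-06-03", OPEN_END),
    (3, "2022-06-02", "2022-06-02", "2022-06-08"),
]
result = update_ranges(ranges, [1, 3, 4], "2022-06-15", "2022-06-14")
for row in result:
    print(row)
```

User 1 stays in an open range, user 2's range is closed at yesterday's date, user 3 returns and gets a new open range, and user 4 is new. Closed ranges from the past are carried over unchanged.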

3. View the results

10.6.2. Visit Interval Distribution User Aggregation Table: DWS_APL_ITV_AGU

1. Create the DWS_APL_ITV_AGU table

create table dws_apl_itv_agu(

guid bigint,

itv_days int,

itv_cnts int

)

COMMENT '访问间隔分布用户聚合表'

partitioned by (dt string)

stored as orc;

2. Compute the visit intervals

with tmp as(

select

guid,

if(datediff('2022-06-15',rng_start)>30,date_sub('2022-06-15',30),rng_start) as rng_start,

rng_end

from

dws_apl_uca_rng -- continuous activity range record table

where datediff('2022-06-15',if(rng_end='9999-12-31','2022-06-15',rng_end))<=30

AND dt='2022-06-15'

)

insert into dws_apl_itv_agu partition(dt='2022-06-15')

select

guid,

0,

datediff(if(rng_end='9999-12-31','2022-06-15',rng_end),rng_start)

from tmp

UNION ALL

select * from

(

select

guid,

datediff(lead(rng_start,1,null) over(partition by guid order by rng_start),rng_end) as itv_days,

1 as itv_cnts

from tmp

) o

where itv_days is not null;

3. View the results

10.7. App Version Upgrade Analysis

10.7.1. App Version Upgrade Statistics Report: ADS_APL_APP_UPG

1. Create the ADS_APL_APP_UPG table

CREATE TABLE ADS_APL_APP_UPG(

guid bigint,

pre_ver string, -- version before the upgrade

post_ver string -- version after the upgrade

)

COMMENT 'app版本升级统计报表'

partitioned by (dt string)

stored as orc;

2. Compute the app version upgrade statistics

INSERT INTO TABLE ADS_APL_APP_UPG PARTITION(dt='2022-06-15')

SELECT

guid,appver,post_ver

FROM

(

SELECT

guid,

appver,

lead(appver,1,null) over(partition by guid order by appver) as post_ver

FROM dwd_apl_tfc_dtl WHERE dt='2022-06-15'

) o

WHERE post_ver>appver;

3. View the results

10.8. Interaction Event Analysis

10.8.1. Event Count Session Aggregation Table: DWS_APL_ITR_AGS

1. Create the DWS_APL_ITR_AGS table

CREATE TABLE DWS_APL_ITR_AGS(

guid bigint , -- user identifier

sessionid string , -- session identifier

eventid string , -- event type

cnt int , -- number of occurrences

province string ,

city string ,

district string ,

osname string ,

osver string ,

appver string ,

release_ch string

)

COMMENT '事件次数会话聚合表'

partitioned by (dt string)

stored as orc;

2. Compute event counts aggregated by session

INSERT INTO TABLE DWS_APL_ITR_AGS PARTITION (dt='2022-06-15')

SELECT

guid ,

sessionid ,

eventid ,

count(1) as cnt ,

max(province ) as province ,

max(city ) as city ,

max(district ) as district ,

max(osname ) as osname ,

max(osver ) as osver ,

max(appver ) as appver ,

max(release_ch ) as release_ch

FROM DWD_APL_ITR_DTL

WHERE dt='2022-06-15'

GROUP BY guid,sessionid,eventid;

3. View the results

10.8.2. Event Count User Aggregation Table: DWS_APL_ITR_AGU

1. Create the DWS_APL_ITR_AGU table

CREATE TABLE DWS_APL_ITR_AGU(

guid bigint , -- user identifier

eventid string , -- event type

cnt int , -- number of occurrences

province string ,

city string ,

district string ,

osname string ,

osver string ,

appver string ,

release_ch string

)

COMMENT '事件次数用户聚合表'

partitioned by (dt string)

stored as orc;

2. Compute event counts aggregated by user

INSERT INTO TABLE DWS_APL_ITR_AGU PARTITION(dt='2022-06-15')

SELECT

guid ,

eventid ,

sum(cnt) as cnt ,

max(province ) as province ,

max(city ) as city ,

max(district ) as district ,

max(osname ) as osname ,

max(osver ) as osver ,

max(appver ) as appver ,

max(release_ch ) as release_ch

FROM DWS_APL_ITR_AGS

WHERE dt='2022-06-15'

GROUP BY guid,eventid;

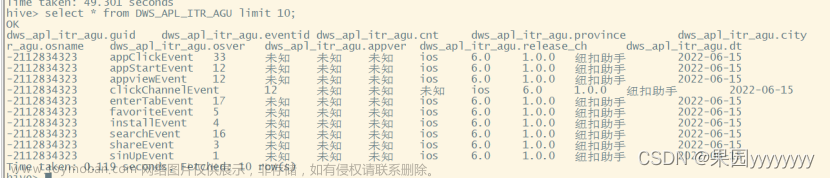

3. View the results

10.8.3. Interaction Event Overview Statistics Report: ADS_APL_ITR_CUBE

1. Create the ADS_APL_ITR_CUBE table

CREATE TABLE ADS_APL_ITR_CUBE(

eventid string , -- event type

province string ,

city string ,

district string ,

osname string ,

osver string ,

appver string ,

release_ch string ,

cnt int , -- number of occurrences

users int -- number of users

)

COMMENT '交互事件概况统计报表'

PARTITIONED BY (dt string)

stored as orc;

2. Compute the interaction event overview statistics

INSERT INTO TABLE ADS_APL_ITR_CUBE PARTITION(dt='2022-06-15')

SELECT

eventid,

province,

city,

district,

osname,

osver,

appver,

release_ch,

sum(cnt) as cnt,

count(1) as users

FROM DWS_APL_ITR_AGU WHERE dt='2022-06-15'

GROUP BY eventid,province,city,district,osname,osver,appver,release_ch

GROUPING SETS(

(eventid),

(eventid,province),

(eventid,province,city),

(eventid,province,city,district),

(eventid,osname),

(eventid,osname,osver),

(eventid,appver),

(eventid,release_ch)

);

3. View the results

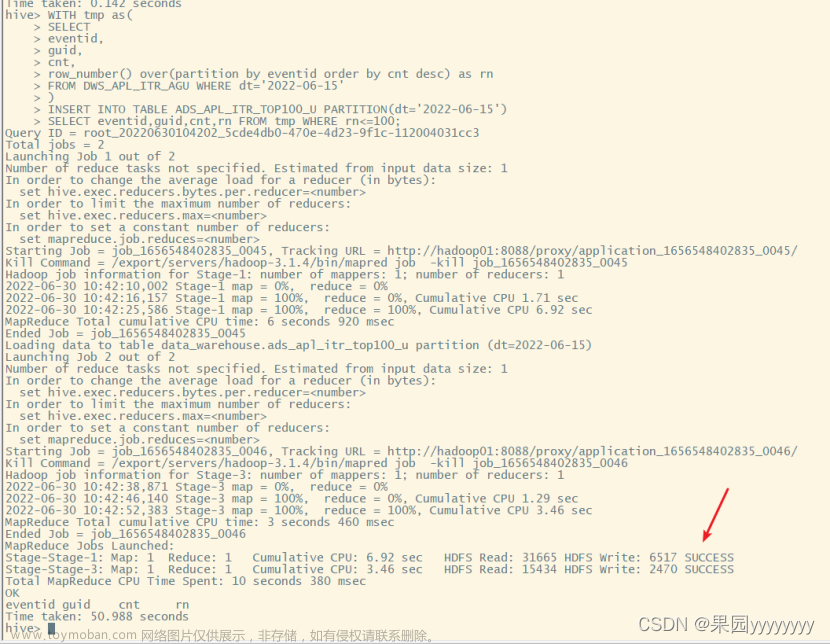

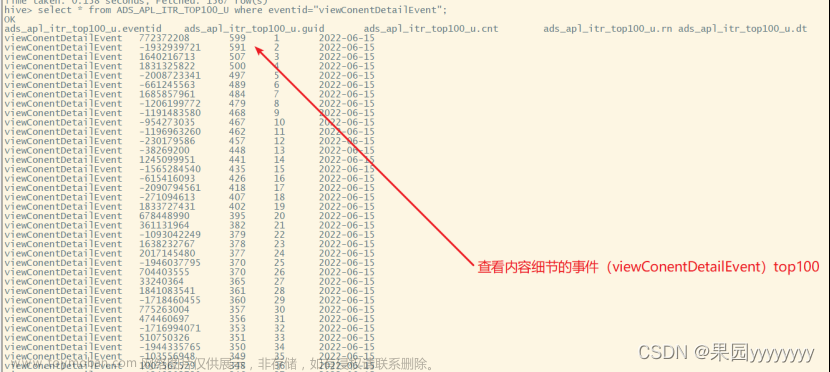

10.8.4. Interaction Event Top 100 User Record Table: ADS_APL_ITR_TOP100_U

1. Create the ADS_APL_ITR_TOP100_U table

CREATE TABLE ADS_APL_ITR_TOP100_U(

eventid string,

guid bigint,

cnt int,

rn int

)

COMMENT '交互事件top100用户记录表'

PARTITIONED BY (dt string)

stored as orc;

2. Compute the top 100 users per interaction event

WITH tmp as(

SELECT

eventid,

guid,

cnt,

row_number() over(partition by eventid order by cnt desc) as rn

FROM DWS_APL_ITR_AGU WHERE dt='2022-06-15'

)

INSERT INTO TABLE ADS_APL_ITR_TOP100_U PARTITION(dt='2022-06-15')

SELECT eventid,guid,cnt,rn FROM tmp WHERE rn<=100;

3. View the results

10.9. Visit Path Analysis

10.9.1. User Visit Path Detail Record Table: DWD_APL_RUT_DTL

1. Create the DWD_APL_RUT_DTL table

CREATE TABLE DWD_APL_RUT_DTL(

guid bigint , -- user identifier

sessionid string , -- session id

page string , -- page (id or url)

ts bigint , -- time the page was opened

stepno int , -- visit step number

stay_time bigint -- time spent on the page

)

COMMENT '用户访问路径明细记录表'

PARTITIONED BY (dt string)

STORED AS ORC;

2. Compute the visit step number and the time spent on each page

WITH tmp as (

SELECT

guid,

sessionid,

event['url'] as page,

`timestamp` as ts,

row_number() over(partition by guid,sessionid order by `timestamp`) as stepno,

lead(`timestamp`,1,null) over(partition by guid,sessionid order by `timestamp`) - `timestamp` as stay_time

FROM DWD_APL_TFC_DTL WHERE dt='2022-06-15'

)

INSERT INTO TABLE DWD_APL_RUT_DTL PARTITION(dt='2022-06-15')

SELECT

guid,

sessionid,

page,

ts,

stepno,

if(stay_time is null,30000,stay_time) as stay_time

FROM tmp;
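The two window functions in this query, `row_number` for the step number and `lead` for the next page's timestamp, can be restated as a short loop. The sketch below is plain Python over in-memory page views with invented sample data; only the 30000 ms fallback for the last page of a session is taken from the query.

```python
from collections import defaultdict

DEFAULT_STAY_MS = 30000  # same fallback the query uses for a session's last page

def route_details(page_views):
    """page_views: iterable of (guid, sessionid, page, ts_millis)."""
    sessions = defaultdict(list)
    for guid, sessionid, page, ts in page_views:
        sessions[(guid, sessionid)].append((ts, page))
    rows = []
    for (guid, sessionid), views in sorted(sessions.items()):
        views.sort()  # order pages by open time within the session
        for i, (ts, page) in enumerate(views):
            # stay time = next page's open time minus this page's open time
            stay = views[i + 1][0] - ts if i + 1 < len(views) else DEFAULT_STAY_MS
            rows.append((guid, sessionid, page, ts, i + 1, stay))
    return rows

views = [
    (1, "s1", "/b", 4000),
    (1, "s1", "/a", 1000),
    (1, "s1", "/c", 9000),
    (2, "s2", "/a", 500),
]
rows = route_details(views)
for row in rows:
    print(row)
```

The last page of every session has no following page view, so its real stay time is unknown; the fixed default is a modelling choice rather than a measurement.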

3. View the results

10.9.2. Visit Path Overview Statistics Report: ADS_APL_RUT_OVW

1. Create the ADS_APL_RUT_OVW table

CREATE TABLE ADS_APL_RUT_OVW(

stepno int ,

page string,

rut_s_cnt int, -- path session count (page + step + ref)

step_s_cnt int, -- step session count (page + step)

page_s_cnt int -- page session count (page)

)

COMMENT '访问路径概况统计报表'

PARTITIONED BY (dt string)

STORED AS ORC;

2. Compute the session count for each visit path combination

WITH tmp as (

SELECT

page,stepno,count(sessionid) as rut_s_cnt

FROM DWD_APL_RUT_DTL WHERE dt='2022-06-15'

GROUP BY page,stepno

)

INSERT INTO TABLE ADS_APL_RUT_OVW PARTITION(dt='2022-06-15')

SELECT

stepno,

page,

rut_s_cnt,

sum(rut_s_cnt) over(partition by page,stepno order by page rows between unbounded preceding and unbounded following) as step_s_cnt, -- session count for page + step

sum(rut_s_cnt) over(partition by page order by page rows between unbounded preceding and unbounded following) as page_s_cnt -- session count for the page

FROM tmp;

3. View the results

10.10. Conversion Funnel Analysis

10.10.1. Funnel Conversion Report: ADS_APL_LD_OVW

1. Create the ADS_APL_LD_OVW table

CREATE TABLE ADS_APL_LD_OVW(

ld_name string, -- funnel name

stepno int, -- funnel step

user_cnt int, -- number of users at the step

rel_ratio double,

abs_ratio double

)

COMMENT '漏斗转化报表'

PARTITIONED BY (dt string)

stored as orc;

2. Compute the funnel conversion report

with tmp as (

SELECT

'多易寻宝漏斗' as ld_name,

1 as stepno ,

count(1) as user_cnt

FROM (

SELECT

guid

FROM DWD_APL_GLB_DTL

WHERE dt='2022-06-15' and eventid='adShowEvent' and event['adId']='5'

GROUP BY guid

) o1

UNION ALL

SELECT

'多易寻宝漏斗' as ld_name,

2 as stepno,

count(1) as user_cnt

FROM (

SELECT

guid

FROM DWD_APL_GLB_DTL

WHERE dt='2022-06-15' and eventid='adClickEvent' and event['adId']='5'

GROUP BY guid

) o2

UNION ALL

SELECT

'多易寻宝漏斗' as ld_name,

3 as stepno,

count(1) as user_cnt

FROM (

SELECT

guid

FROM DWD_APL_GLB_DTL

WHERE dt='2022-06-15' and eventid='appClickEvent' and event['element_id']='14'

GROUP BY guid

) o3

UNION ALL

SELECT

'多易寻宝漏斗' as ld_name,

4 as stepno,

count(1) as user_cnt

FROM (

SELECT

guid

FROM DWD_APL_GLB_DTL

WHERE dt='2022-06-15' and eventid='appviewEvent' and event['screen_id']='665'

GROUP BY guid

) o4

UNION ALL

SELECT

'多易寻宝漏斗' as ld_name,

5 as stepno,

count(1) as user_cnt

FROM (

SELECT

guid

FROM DWD_APL_GLB_DTL

WHERE dt='2022-06-15' and eventid='appClickEvent' and event['element_id']='26'

GROUP BY guid

) o5

)

INSERT INTO TABLE ADS_APL_LD_OVW PARTITION (dt='2022-06-15')

select

ld_name,

stepno,

user_cnt,

if(stepno=1,1,user_cnt/lag(user_cnt,1,null) over(partition by ld_name order by stepno) ) as rel_ratio, -- conversion rate relative to the previous step

user_cnt/first_value(user_cnt) over(partition by ld_name order by stepno) as abs_ratio -- overall conversion rate relative to the first step

from tmp;
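The two ratios can be checked by hand with a small sketch. The step counts below are invented, and the function name `funnel_ratios` is hypothetical; the arithmetic matches the `lag` and `first_value` expressions in the query, assuming no step has a count of zero.

```python
def funnel_ratios(step_counts):
    """step_counts: user counts per funnel step, in step order (all non-zero)."""
    rows = []
    first = step_counts[0]
    for i, count in enumerate(step_counts):
        # relative ratio: this step divided by the previous step
        rel = 1.0 if i == 0 else count / step_counts[i - 1]
        # absolute ratio: this step divided by the first step
        rows.append((i + 1, count, rel, count / first))
    return rows

rows = funnel_ratios([1000, 400, 200, 100, 50])
for stepno, user_cnt, rel_ratio, abs_ratio in rows:
    print(stepno, user_cnt, rel_ratio, abs_ratio)
```

In the query, each step counts its users independently of the earlier steps, so a user who triggered step 3 without step 2 is still counted at step 3. With real data this means a ratio can exceed 1.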

3. View the results

10.11. Advertising Event Analysis

10.11.1. Advertising Overview Statistics Report: ADS_APL_ADV_OVW

1. Create the ADS_APL_ADV_OVW table

CREATE TABLE ADS_APL_ADV_OVW(

dt string,

adid string,

show_ct int,

show_us int,

show_max_pg string,

click_ct int,

click_us int,

click_max_pg string

)

COMMENT '广告概况统计报表'

STORED AS ORC;

2. Compute each advertisement's impression count, number of users reached, click count, and number of clicking users

WITH tmp1 as (

SELECT

'2022-06-15' as dt ,

event['adId'] as adid ,

count(if(eventid='adShowEvent',1,null)) as show_ct ,

count(distinct if(eventid='adShowEvent',guid,null)) as show_us ,

count(if(eventid='adClickEvent',1,null)) as click_ct ,

count(distinct if(eventid='adClickEvent',guid,null)) as click_us

FROM DWD_APL_ADV_DTL WHERE dt='2022-06-15'

GROUP BY event['adId']

),

tmp2 as (

SELECT

adid, -- advertisement id

eventid, -- event type

pgid -- page with the most users

FROM

(

SELECT

adid,

pgid,

eventid,

us,

row_number() over(partition by adid,eventid order by us desc) as rn

FROM

(

SELECT

event['adId'] as adid,

event['pgId'] as pgid,

eventid as eventid,

count(distinct guid) as us

FROM DWD_APL_ADV_DTL WHERE dt='2022-06-15'

GROUP BY event['adId'],event['pgId'],eventid

) o1

)o2

WHERE rn=1)

INSERT INTO TABLE ADS_APL_ADV_OVW

SELECT

tmp1.dt ,

tmp1.adid ,

tmp1.show_ct ,

tmp1.show_us ,

tmp3.pgid as show_max_pg ,

tmp1.click_ct ,

tmp1.click_us ,

tmp4.pgid as click_max_pg

FROM tmp1

LEFT JOIN (select * from tmp2 where eventid='adShowEvent') tmp3 ON tmp1.adid=tmp3.adid

LEFT JOIN (select * from tmp2 where eventid='adClickEvent') tmp4 ON tmp1.adid=tmp4.adid;

3.查看结果

11.olap平台开发(项目具体代码点击获取)

11.1.presto安装与配置

11.2.后端开发

11.2.1.流量概况分析

1.省份维度流量分析

(1)接口规范

(2)代码实现

(3)查看结果

2.城市维度流量分析

(1)接口规范

(2)代码实现

(3)查看结果

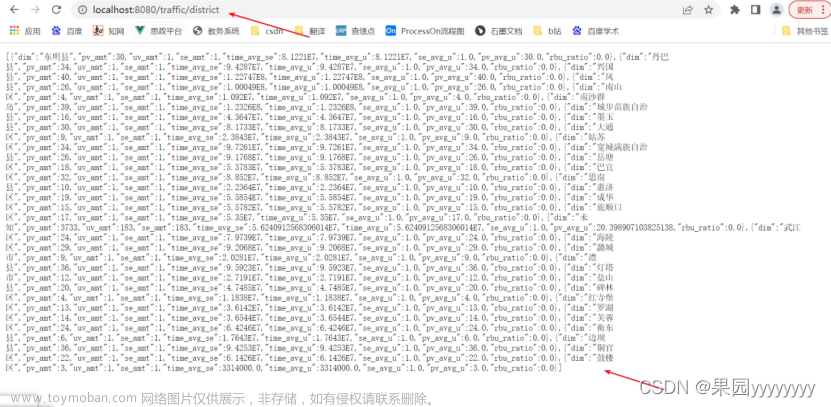

3.区县维度流量分析

(1)接口规范

(2)代码实现

(3)查看结果

4.设备类型维度流量分析

(1)接口规范

(2)代码实现

(3)查看结果

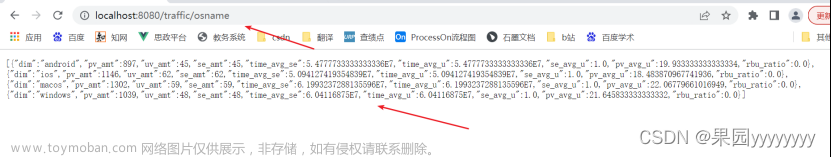

5.操作系统名称维度流量分析

(1)接口规范

(2)代码实现

(3)查看结果

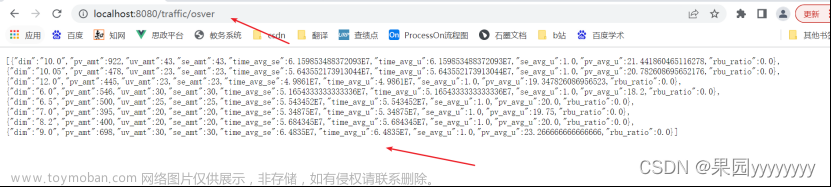

6.操作系统版本维度流量分析

(1)接口规范

(2)代码实现

(3)查看结果

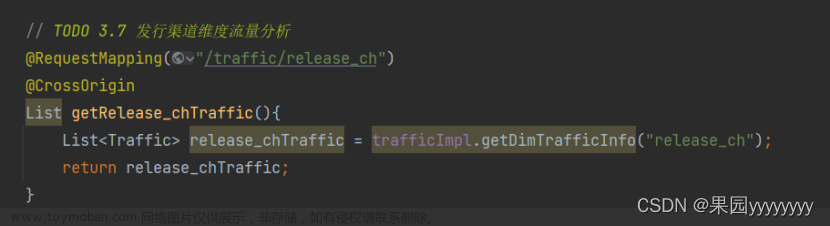

7.发行渠道维度流量分析

(1)接口规范

(2)代码实现

(3)查看结果

8.升级渠道维度流量分析

(1)接口规范

(2)代码实现

(3)查看结果

11.2.2.日新日活分析

1.省份维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

2.城市维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

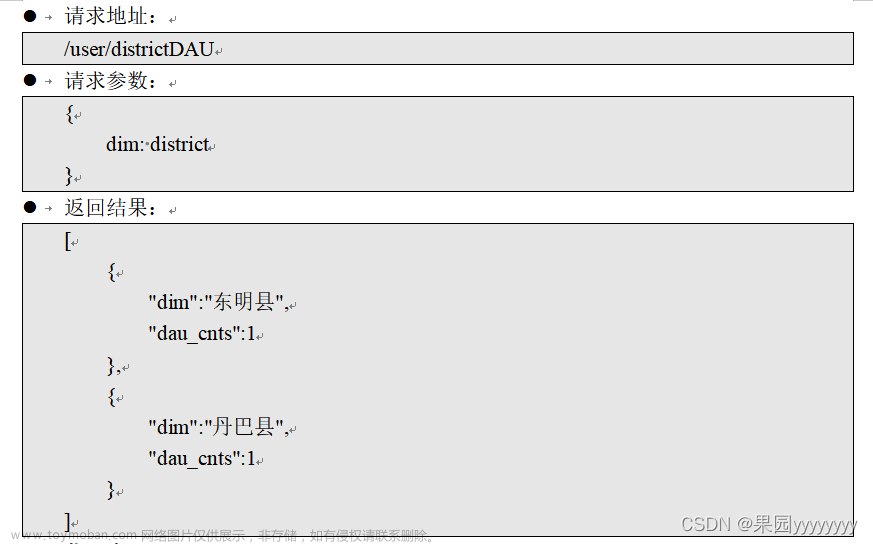

3.区县维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

4.软件版本维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

5.设备类型维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

6.省份维度日新数

(1)接口规范

(2)代码实现

(3)查看结果

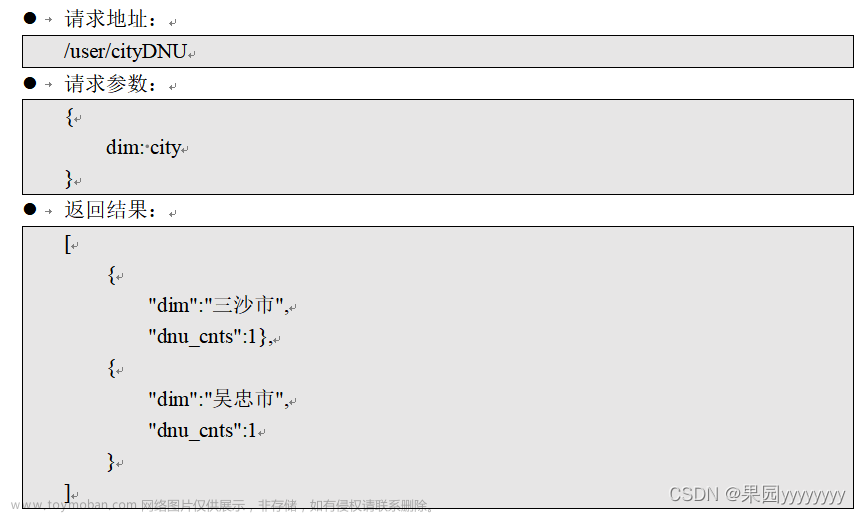

7.城市维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

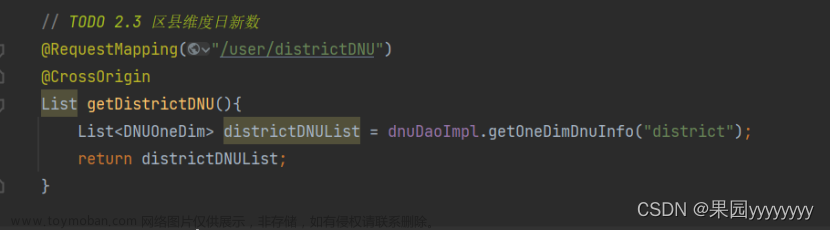

8.区县维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

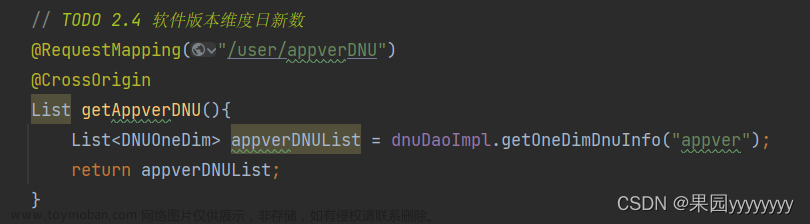

9.软件版本维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

10.设备类型维度日活数

(1)接口规范

(2)代码实现

(3)查看结果

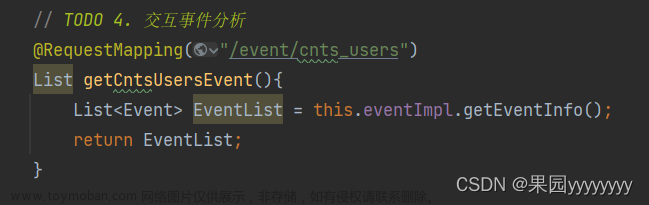

11.2.3.交互事件分析

1.交互事件概况分析

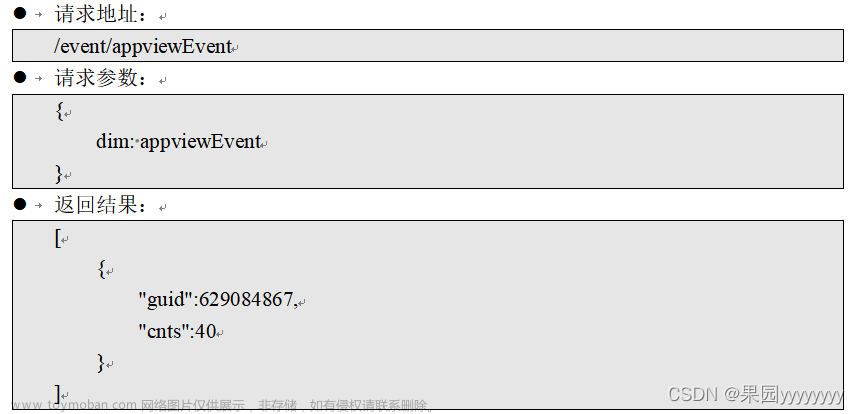

(1)接口规范

(2)代码实现

(3)查看结果

2.交互事件用户Top10分析

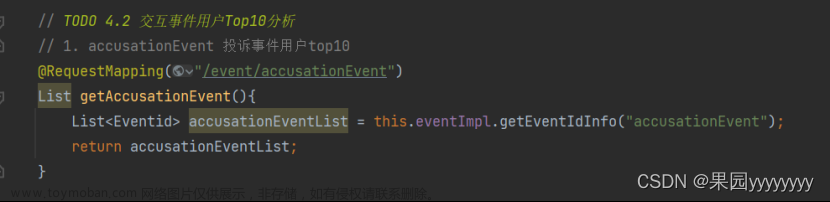

(1)投诉事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

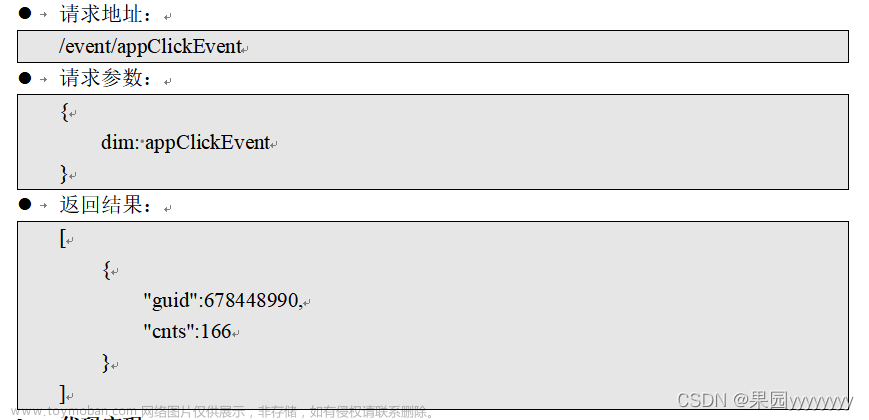

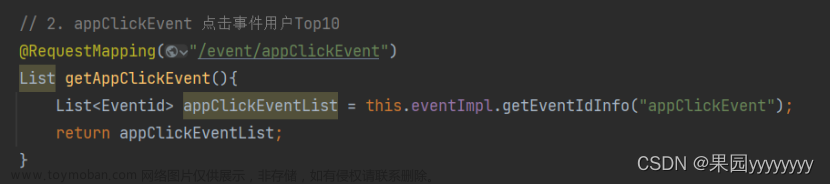

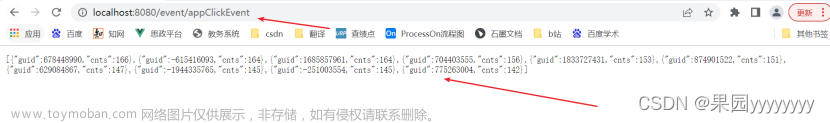

(2)点击事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(3)打开事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(4)浏览事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

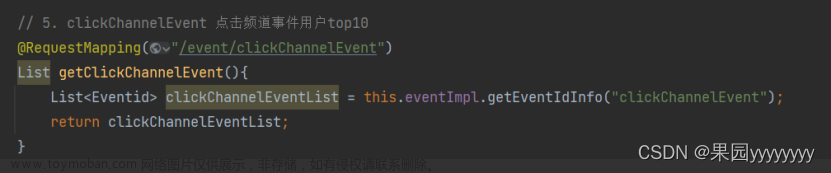

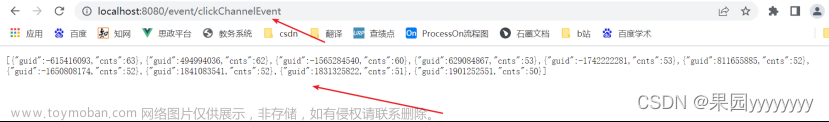

(5)点击频道事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

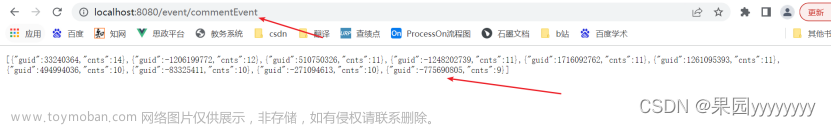

(6)评论事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

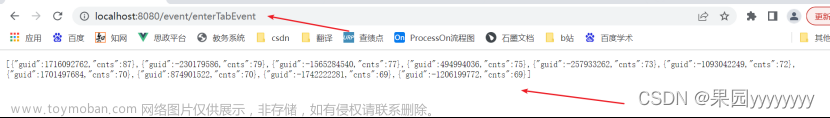

(7)进入选项事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(8)点赞事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(9)获取代码事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(10)下载事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(11)登录事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(12)搜素事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(13)分享转发事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(14)报名事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

(15)查看内容详细信息事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

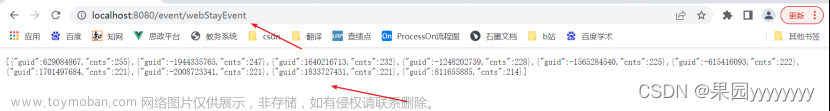

(16)网页停留事件用户top10

(1)接口规范

(2)代码实现

(3)查看结果

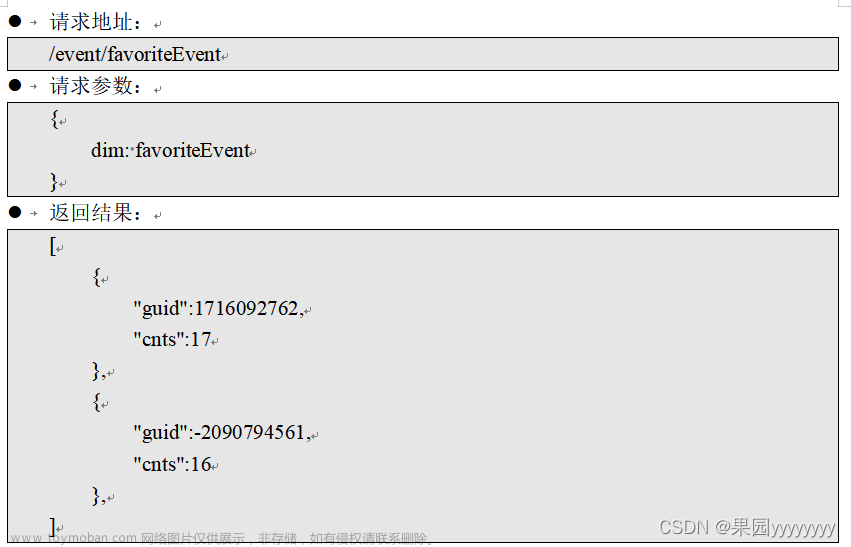

11.2.4.广告事件分析

(1)接口规范

(2)代码实现

(3)查看结果

11.3.前端开发

11.4.前后端联调

以“广告事件分析”为例,其余见项目代码压缩包。

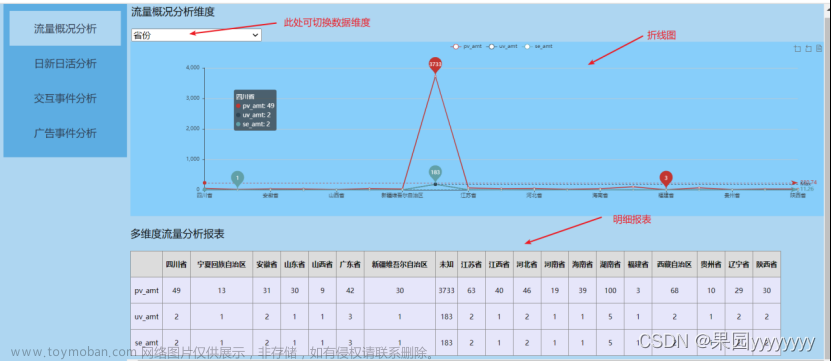

11.5.效果展示

11.5.1.流量概况分析

11.5.2.日新日活分析

11.5.3.交互事件分析


11.5.4. Advertising Event Analysis
