ELK Log Collection System Cluster Lab

Lab Environment

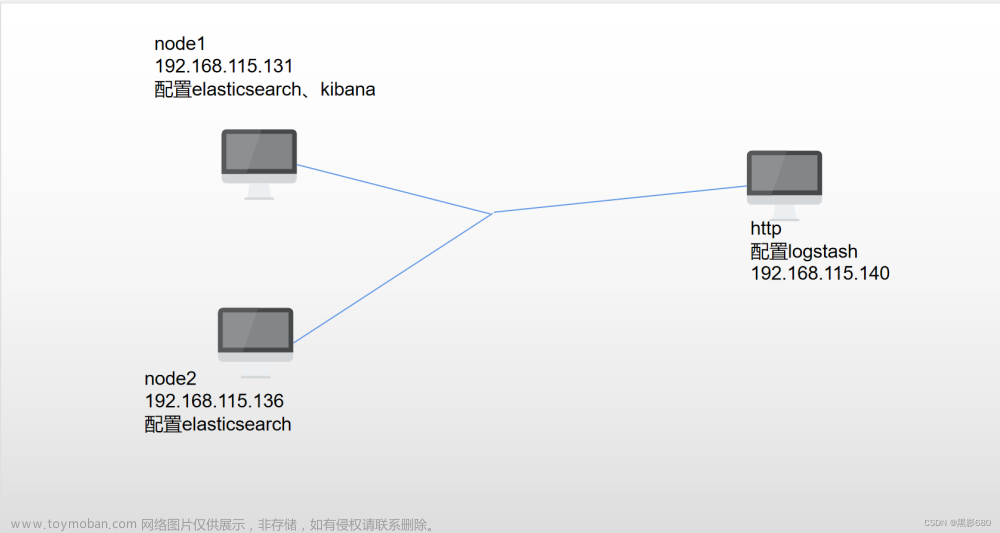

| Role / Hostname | IP | Interface |
| --- | --- | --- |
| httpd | 192.168.31.50 | ens33 |
| node1 | 192.168.31.51 | ens33 |
| node2 | 192.168.31.53 | ens33 |

Environment Configuration

Set each host's IP address to the static IP shown in the topology, then change its hostname.

#httpd

[root@localhost ~]# hostnamectl set-hostname httpd

[root@localhost ~]# bash

[root@httpd ~]#

#node1

[root@localhost ~]# hostnamectl set-hostname node1

[root@localhost ~]# bash

[root@node1 ~]# vim /etc/hosts

192.168.31.51 node1

192.168.31.53 node2

#node2

[root@localhost ~]# hostnamectl set-hostname node2

[root@localhost ~]# bash

[root@node2 ~]# vim /etc/hosts

192.168.31.51 node1

192.168.31.53 node2
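The name-resolution step above can also be scripted. The following is a minimal sketch, assuming the two node addresses from the environment table. The function name `add_host_entry` and the `HOSTS_FILE` variable are illustrative and not part of the original procedure; by default the script writes to a scratch file rather than `/etc/hosts`.

```shell
#!/usr/bin/env bash
# Sketch: add name-resolution entries idempotently.
# HOSTS_FILE defaults to a scratch file so the sketch can be tried safely;
# set HOSTS_FILE=/etc/hosts (as root) to apply it for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# add_host_entry IP NAME: append "IP NAME" unless that exact line already exists.
add_host_entry() {
    local ip="$1" name="$2"
    grep -qxF "$ip $name" "$HOSTS_FILE" 2>/dev/null || echo "$ip $name" >> "$HOSTS_FILE"
}

add_host_entry 192.168.31.51 node1
add_host_entry 192.168.31.53 node2
add_host_entry 192.168.31.51 node1   # repeated call is a no-op

cat "$HOSTS_FILE"
```

Because each entry is checked before it is appended, the script can be re-run on either node without producing duplicate lines.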

Install Elasticsearch

#node1

[root@node1 ~]# ls

elk软件包 公共 模板 视频 图片 文档 下载 音乐 桌面

[root@node1 ~]# mv elk软件包 elk

[root@node1 ~]# ls

elk 公共 模板 视频 图片 文档 下载 音乐 桌面

[root@node1 ~]# cd elk

[root@node1 elk]# ls

elasticsearch-5.5.0.rpm kibana-5.5.1-x86_64.rpm node-v8.2.1.tar.gz

elasticsearch-head.tar.gz logstash-5.5.1.rpm phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 elk]# rpm -ivh elasticsearch-5.5.0.rpm

warning: elasticsearch-5.5.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Updating / installing...

1:elasticsearch-0:5.5.0-1 ################################# [100%]

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executing

sudo systemctl start elasticsearch.service

#node2: repeat the same installation steps as on node1

Configuration

node1

vim /etc/elasticsearch/elasticsearch.yml

cluster.name: my-elk-cluster  # cluster name

node.name: node1  # node name

path.data: /var/lib/elasticsearch  # data directory

path.logs: /var/log/elasticsearch/  # log directory

bootstrap.memory_lock: false  # do not lock memory at startup

network.host: 0.0.0.0  # IP address the service binds to; 0.0.0.0 means all addresses

http.port: 9200  # listen on port 9200

discovery.zen.ping.unicast.hosts: ["node1", "node2"]  # cluster discovery via unicast

node2

cluster.name: my-elk-cluster  # cluster name

node.name: node2  # node name

path.data: /var/lib/elasticsearch  # data directory

path.logs: /var/log/elasticsearch/  # log directory

bootstrap.memory_lock: false  # do not lock memory at startup

network.host: 0.0.0.0  # IP address the service binds to; 0.0.0.0 means all addresses

http.port: 9200  # listen on port 9200

discovery.zen.ping.unicast.hosts: ["node1", "node2"]  # cluster discovery via unicast
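The two nodes share every setting except `node.name`. As a minimal sketch of that idea, the function below prints the settings from this section for a given node name. `render_es_config` is a hypothetical helper, not something shipped with Elasticsearch, and redirecting its output into `/etc/elasticsearch/elasticsearch.yml` is left to the reader.

```shell
#!/usr/bin/env bash
# Sketch: print the elasticsearch.yml settings used in this lab for one node.
# Only node.name changes between node1 and node2.
render_es_config() {
    local node_name="$1"
    cat <<EOF
cluster.name: my-elk-cluster
node.name: ${node_name}
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch/
bootstrap.memory_lock: false
network.host: 0.0.0.0
http.port: 9200
discovery.zen.ping.unicast.hosts: ["node1", "node2"]
EOF
}

render_es_config node2
```

Generating both files from one template avoids the common copy-paste slip of leaving the same `node.name` on both nodes, which would stop the second node from joining under its own name.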

Install the elasticsearch-head plugin on node1

Change to the elk directory

#Compiling and installing the dependencies takes a long time

[root@node1 ~]# cd elk/

[root@node1 elk]# ls

elasticsearch-5.5.0.rpm kibana-5.5.1-x86_64.rpm phantomjs-2.1.1-linux-x86_64.tar.bz2

elasticsearch-head.tar.gz logstash-5.5.1.rpm node-v8.2.1.tar.gz

[root@node1 elk]# tar xf node-v8.2.1.tar.gz

[root@node1 elk]# cd node-v8.2.1/

[root@node1 node-v8.2.1]# ./configure && make && make install

[root@node1 node-v8.2.1]# cd ~/elk

[root@node1 elk]# tar xf phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 elk]# cd phantomjs-2.1.1-linux-x86_64/bin/

[root@node1 bin]# ls

phantomjs

[root@node1 bin]# cp phantomjs /usr/local/bin/

[root@node1 bin]# cd ~/elk/

[root@node1 elk]# tar xf elasticsearch-head.tar.gz

[root@node1 elk]# cd elasticsearch-head/

[root@node1 elasticsearch-head]# npm install

npm WARN deprecated fsevents@1.2.13: The v1 package contains DANGEROUS / INSECURE binaries. Upgrade to safe fsevents v2

npm WARN optional SKIPPING OPTIONAL DEPENDENCY: fsevents@^1.0.0 (node_modules/karma/node_modules/chokidar/node_modules/fsevents):

npm WARN notsup SKIPPING OPTIONAL DEPENDENCY: Unsupported platform for fsevents@1.2.13: wanted {"os":"darwin","arch":"any"} (current: {"os":"linux","arch":"x64"})

npm WARN elasticsearch-head@0.0.0 license should be a valid SPDX license expression

up to date in 3.536s

Modify the Elasticsearch configuration file

[root@node1 ~]# vim /etc/elasticsearch/elasticsearch.yml

# ---------------------------------- Various -----------------------------------

#

# Require explicit names when deleting indices:

#

#action.destructive_requires_name: true

http.cors.enabled: true  # enable cross-origin access support (default: false)

http.cors.allow-origin: "*"  # origins allowed for cross-origin access

[root@node1 ~]# systemctl restart elasticsearch.service

#Start elasticsearch-head

cd /root/elk/elasticsearch-head

npm run start &

#Check the listening port

netstat -anput | grep :9100

#Access in a browser

http://192.168.31.51:9100
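Besides the elasticsearch-head page, cluster state can be checked from the command line through Elasticsearch's `_cluster/health` endpoint. The following is a minimal sketch: `health_status` is a hypothetical helper that pulls the `status` field out of the JSON response, and the sample response is abridged and illustrative, not captured from this lab.

```shell
#!/usr/bin/env bash
# Sketch: extract the "status" field from a _cluster/health JSON response.
# health_status reads JSON on stdin and prints the status value (green, yellow, or red).
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Against the live cluster in this lab one would run (requires node1 to be up):
#   curl -s http://192.168.31.51:9200/_cluster/health | health_status

# Abridged, illustrative sample response (not captured from this lab):
sample='{"cluster_name":"my-elk-cluster","status":"green","number_of_nodes":2}'
printf '%s\n' "$sample" | health_status
```

A `green` status means all primary and replica shards are allocated; with only one node running, a cluster that has replicas configured typically reports `yellow`.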

Install Logstash on node1

[root@node1 elk]# rpm -ivh logstash-5.5.1.rpm

warning: logstash-5.5.1.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

package logstash-1:5.5.1-1.noarch is already installed

#Start the service and create a symbolic link

[root@node1 elk]# systemctl start logstash.service

[root@node1 elk]# ln -s /usr/share/logstash/bin/logstash /usr/local/bin/

#Test 1

[root@node1 elk]# logstash -e 'input{ stdin{} }output { stdout{} }'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

16:03:50.250 [main] INFO logstash.setting.writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}

16:03:50.256 [main] INFO logstash.setting.writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}

16:03:50.330 [LogStash::Runner] INFO logstash.agent - No persistent UUID file found. Generating new UUID {:uuid=>"9ba08544-a7a7-4706-a3cd-2e2ca163548d", :path=>"/usr/share/logstash/data/uuid"}

16:03:50.584 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

16:03:50.739 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

16:03:50.893 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

^C16:04:32.838 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

16:04:32.855 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

#Test 2

[root@node1 elk]# logstash -e 'input { stdin{} } output { stdout{ codec=>rubydebug }}'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

16:46:23.975 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

The stdin plugin is now waiting for input:

16:46:24.014 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

16:46:24.081 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

^C16:46:29.970 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

16:46:29.975 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

#Test 3


[root@node1 elk]# logstash -e 'input { stdin{} } output { elasticsearch{ hosts=>["192.168.31.51:9200"]} }'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

16:46:55.951 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.31.51:9200/]}}

16:46:55.955 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://192.168.31.51:9200/, :path=>"/"}

16:46:56.049 [[main]-pipeline-manager] WARN logstash.outputs.elasticsearch - Restored connection to ES instance {:url=>#<Java::JavaNet::URI:0x3a106333>}

16:46:56.068 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Using mapping template from {:path=>nil}

16:46:56.204 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

16:46:56.233 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Installing elasticsearch template to _template/logstash

16:46:56.429 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>[#<Java::JavaNet::URI:0x19aeba5c>]}

16:46:56.432 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

16:46:56.461 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

16:46:56.561 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

^C16:46:57.638 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

16:46:57.658 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

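Logstash log collection file format (stored in /etc/logstash/conf.d by default)

A Logstash configuration file consists of three parts: input, output, and, where needed, filter. The standard layout is as follows:

input {...}  # input

filter {...}  # filtering

output {...}  # output

Each part can list more than one source or destination. For example, to read from two log files, the format is as follows: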

input {

file{path =>"/var/log/messages" type =>"syslog"}

file { path =>"/var/log/apache/access.log" type =>"apache"}

}
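Putting the three sections together, the following is a minimal sketch that writes a complete pipeline file and shows how it could be syntax-checked. The scratch path held in `CONF` and the `stdout` output are choices made for illustration, not part of the original setup; the `filter` section is left empty on purpose.

```shell
#!/usr/bin/env bash
# Sketch: write a three-section pipeline file and show how to syntax-check it.
# CONF is a scratch path chosen for illustration, not a path from the original text.
CONF="${CONF:-$(mktemp)}"

cat > "$CONF" <<'EOF'
input {
  file { path => "/var/log/messages" type => "syslog" }
  file { path => "/var/log/apache/access.log" type => "apache" }
}
filter {
  # no filters in this sketch
}
output {
  stdout { codec => rubydebug }
}
EOF

echo "wrote $CONF"
# With Logstash installed, the file can be validated without starting a pipeline:
#   logstash -f "$CONF" --config.test_and_exit
```

Checking a file with `--config.test_and_exit` before restarting the service catches brace and syntax mistakes early.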

Example: collecting system logs with Logstash

[root@node1 conf.d]# chmod o+r /var/log/messages

[root@node1 conf.d]# vim /etc/logstash/conf.d/system.conf

input {

file{

path => "/var/log/messages"

type => "system"

start_position => "beginning"

}

}

output {

elasticsearch{

hosts =>["192.168.31.51:9200"]

index => "system-%{+YYYY.MM.dd}"

}

}

[root@node1 conf.d]# systemctl restart logstash.service
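The `index => "system-%{+YYYY.MM.dd}"` setting produces one index per day, named after each event's timestamp. As a minimal sketch, the hypothetical helper `daily_index` below computes the name that events timestamped today would land in; it uses `date -u` because Logstash formats `@timestamp` in UTC.

```shell
#!/usr/bin/env bash
# Sketch: compute the index name that "system-%{+YYYY.MM.dd}" yields for events
# timestamped today. Logstash formats the event's @timestamp in UTC, hence date -u.
daily_index() {
    local prefix="$1"
    date -u "+${prefix}-%Y.%m.%d"
}

daily_index system
# Once events have been shipped, the index can be looked up on node1 with:
#   curl -s "http://192.168.31.51:9200/_cat/indices/$(daily_index system)?v"
```

Since the name follows UTC, the index for late-evening or early-morning local events may carry a different date than the local calendar day.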

Install Kibana on node1

cd ~/elk

[root@node1 elk]# rpm -ivh kibana-5.5.1-x86_64.rpm

warning: kibana-5.5.1-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:kibana-5.5.1-1 ################################# [100%]

[root@node1 elk]# vim /etc/kibana/kibana.yml

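server.port: 5601  # port Kibana listens on

server.host: "0.0.0.0"  # address Kibana listens on

elasticsearch.url: "http://192.168.31.51:9200"  # Elasticsearch instance to connect to

kibana.index: ".kibana"  # Kibana stores its own data in the .kibana index in Elasticsearch

[root@node1 elk]# systemctl start kibana.service

Access Kibana at http://192.168.31.51:5601

On first access Kibana asks for an index pattern. Enter the pattern that matches the index created earlier: system-*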
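To see which indices a pattern such as `system-*` would cover, the following minimal sketch filters a list of index names by a wildcard. `match_indices` is a hypothetical helper, shell globbing only approximates Kibana's `*` wildcard, and the index names are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: filter index names (one per line on stdin) by a wildcard pattern.
# Shell globbing stands in for Kibana's "*" wildcard here; this is an approximation.
match_indices() {
    local pattern="$1" name
    while IFS= read -r name; do
        # $pattern is intentionally unquoted so that "*" acts as a wildcard.
        case "$name" in
            $pattern) printf '%s\n' "$name" ;;
        esac
    done
}

# Hypothetical index names for illustration:
printf '%s\n' system-2023.08.30 system-2023.08.31 httpd-2023.08.31 .kibana \
    | match_indices 'system-*'

# Against the live cluster, the names could come from the _cat API instead:
#   curl -s 'http://192.168.31.51:9200/_cat/indices?h=index' | match_indices 'system-*'
```

The daily indices all match the single pattern, which is why one index pattern in Kibana is enough for every day's logs.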

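Enterprise Case

Collecting httpd (Apache) access logs

Install Logstash on the httpd server, following the installation steps above

On the httpd server Logstash acts as an agent, so it does not need to be started as a service

Write the httpd log collection configuration file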

[root@httpd ]# yum install -y httpd

[root@httpd ]# systemctl start httpd

[root@httpd ]# systemctl start logstash

[root@httpd ]# vim /etc/logstash/conf.d/httpd.conf

input {

file {

path => "/var/log/httpd/access_log"

type => "access"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["192.168.31.51:9200"]

index => "httpd-%{+YYYY.MM.dd}"

}

}

[root@httpd ]# logstash -f /etc/logstash/conf.d/httpd.conf

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

21:29:34.272 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.31.51:9200/]}}

21:29:34.275 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://192.168.31.51:9200/, :path=>"/"}

21:29:34.400 [[main]-pipeline-manager] WARN logstash.outputs.elasticsearch - Restored connection to ES instance {:url=>#<Java::JavaNet::URI:0x1c254b0a>}

21:29:34.423 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Using mapping template from {:path=>nil}

21:29:34.579 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

21:29:34.585 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>[#<Java::JavaNet::URI:0x3b483278>]}

21:29:34.588 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>125}

21:29:34.845 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

21:29:34.921 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}
