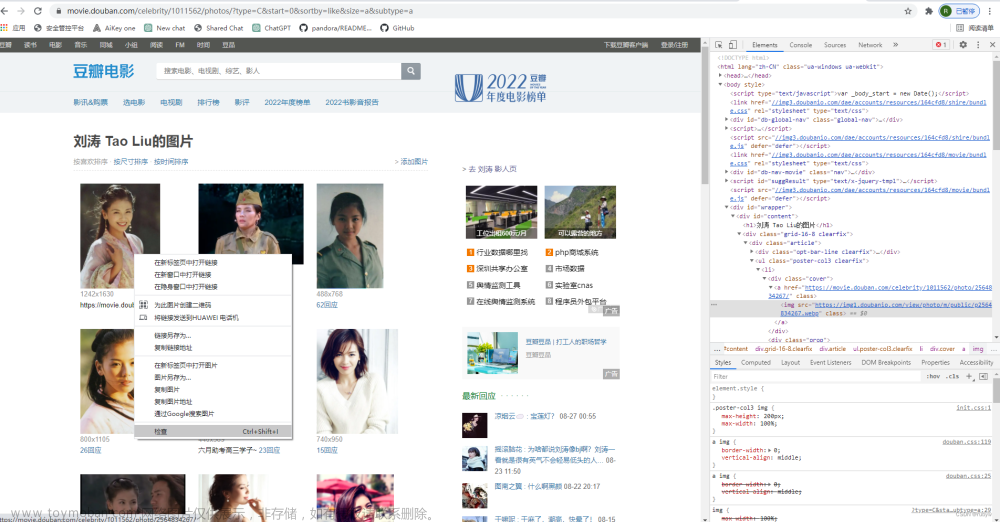

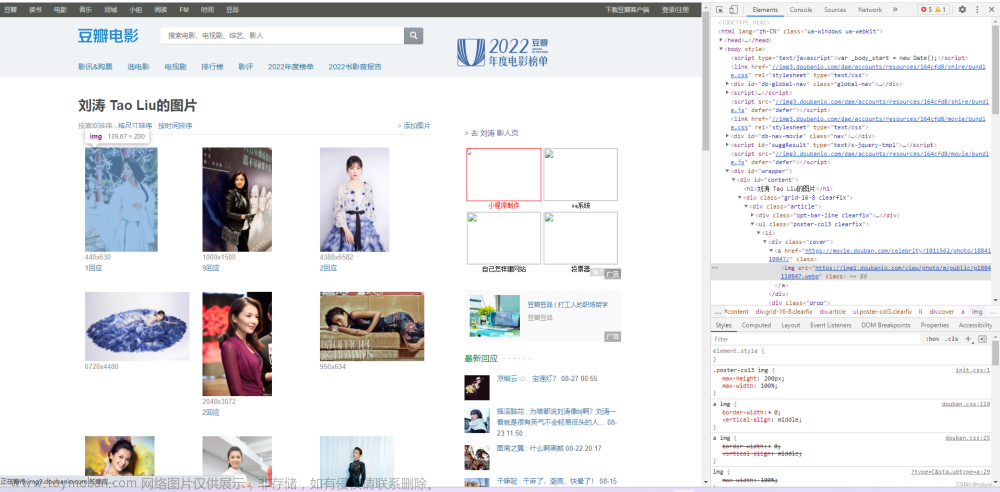

从谷歌浏览器的开发工具进入

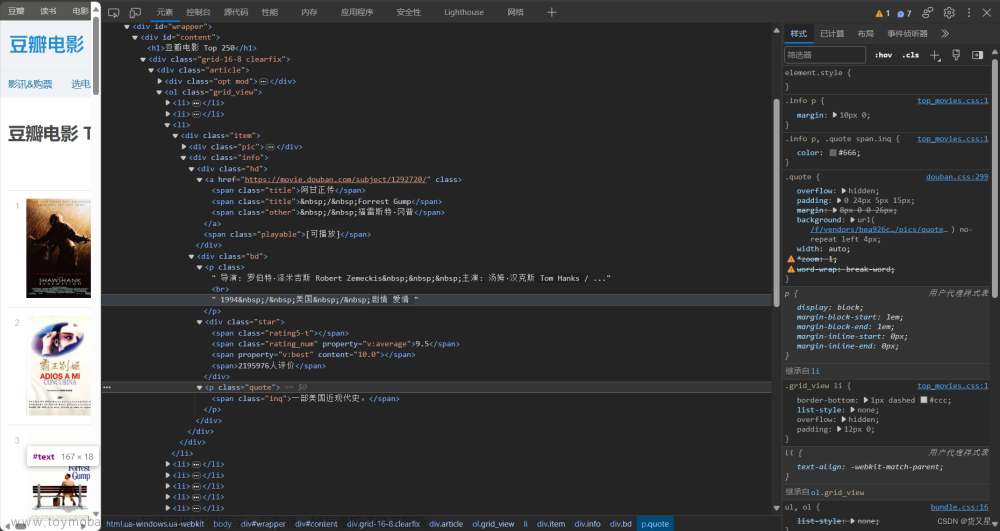

选择图片右键点击检查

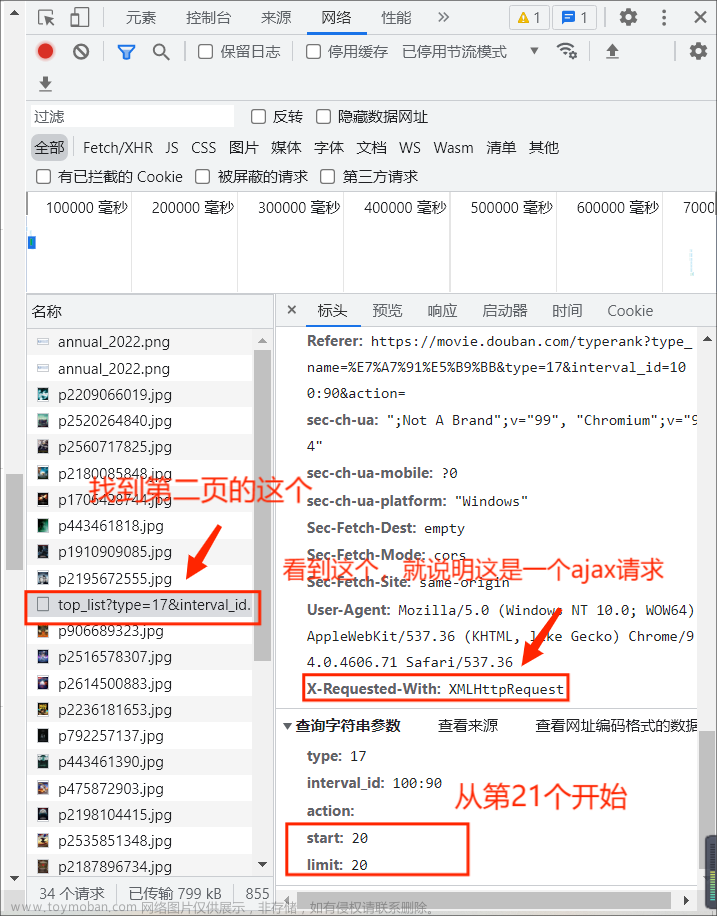

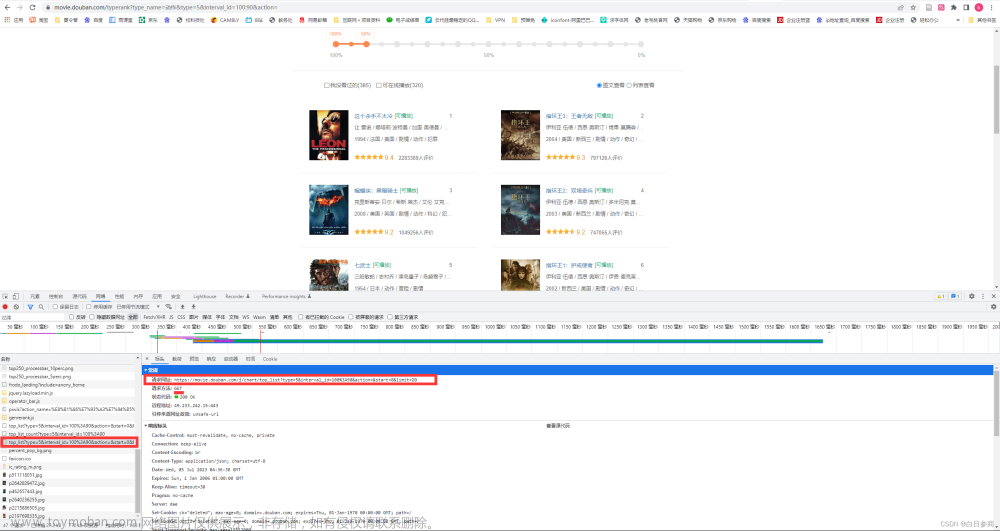

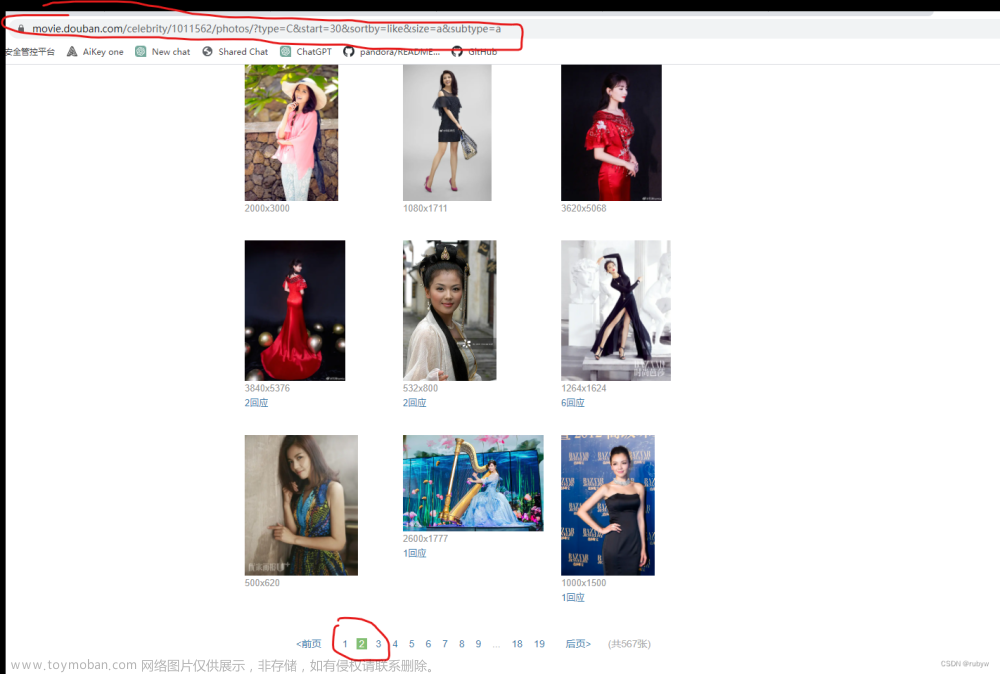

翻页之后发现网址变化的只有start数值,每次变化值为30

Python代码

import requests

from bs4 import BeautifulSoup

import time

import os

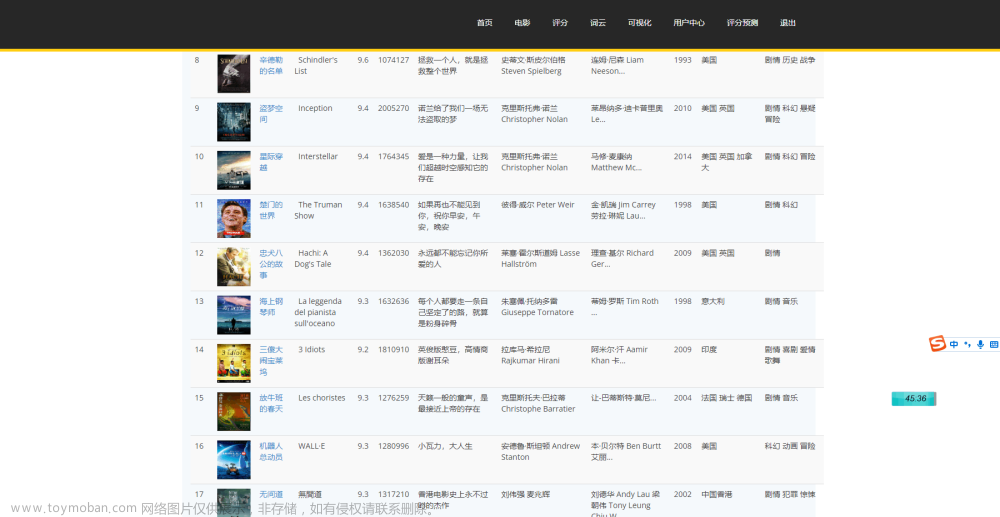

# 豆瓣影人图片

url = 'https://movie.douban.com/celebrity/1011562/photos/'

res = requests.get(url=url, headers="").text

content = BeautifulSoup(res, "html.parser")

data = content.find_all('div', attrs={'class': 'cover'})

picture_list = []

for d in data:

plist = d.find('img')['src']

picture_list.append(plist)

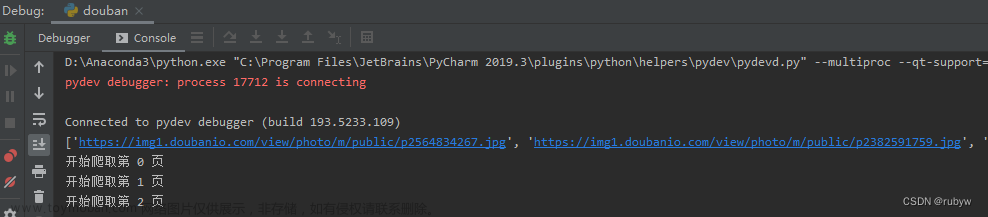

print(picture_list)

# https://movie.douban.com/celebrity/1011562/photos/?type=C&start=30&sortby=like&size=a&subtype=a

def get_poster_url(res):

content = BeautifulSoup(res, "html.parser")

data = content.find_all('div', attrs={'class': 'cover'})

picture_list = []

for d in data:

plist = d.find('img')['src']

picture_list.append(plist)

return picture_list

# XPath://*[@id="content"]/div/div[1]/ul/li[1]/div[1]/a/img

def download_picture(pic_l):

if not os.path.exists(r'picture'):

os.mkdir(r'picture')

for i in pic_l:

pic = requests.get(i)

p_name = i.split('/')[7]

with open('picture\\' + p_name, 'wb') as f:

f.write(pic.content)

def fire():

page = 0

for i in range(0, 450, 30):

print("开始爬取第 %s 页" % page)

url = 'https://movie.douban.com/celebrity/1011562/photos/?type=C&start={}&sortby=like&size=a&subtype=a'.format(i)

res = requests.get(url=url, headers="").text

data = get_poster_url(res)

download_picture(data)

page += 1

time.sleep(1)

fire()

文章来源:https://www.toymoban.com/news/detail-686353.html

文章来源:https://www.toymoban.com/news/detail-686353.html

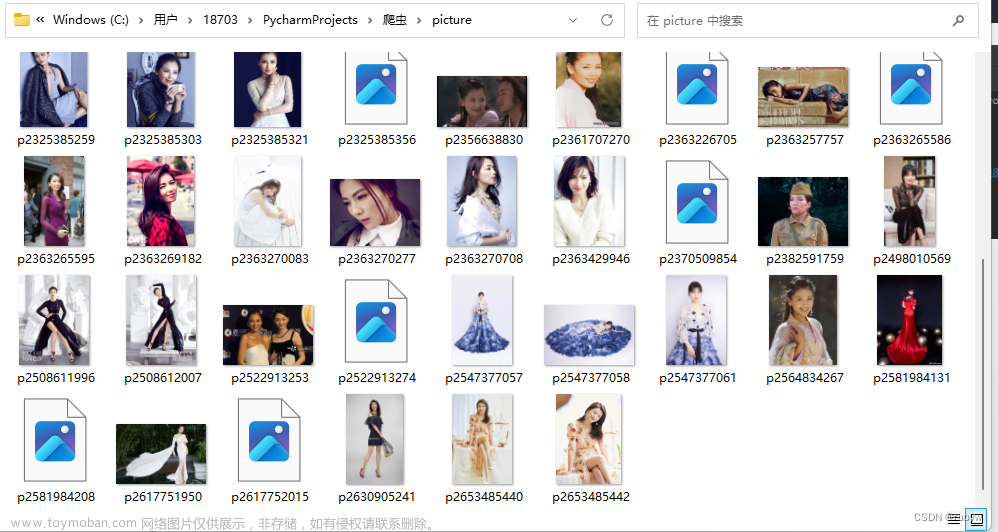

把爬取的图片全部放到新建的文件夹中存放 文章来源地址https://www.toymoban.com/news/detail-686353.html

文章来源地址https://www.toymoban.com/news/detail-686353.html

到了这里,关于Python爬虫:一个爬取豆瓣电影人像的小案例的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!

![python爬取豆瓣电影排行前250获取电影名称和网络链接[静态网页]————爬虫实例(1)](https://imgs.yssmx.com/Uploads/2024/01/415693-1.png)

![[Python练习]使用Python爬虫爬取豆瓣top250的电影的页面源码](https://imgs.yssmx.com/Uploads/2024/02/797225-1.png)