Some of the simpler exercises and the lab-type ones are not included here. Source: https://www.toymoban.com/news/detail-689186.html

Solving the TSP with a genetic algorithm
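The C program in this section is built from three ingredients: tour length as the basis of fitness, roulette-wheel selection, and a crossover that keeps each child a valid permutation of the cities. The following is a minimal Python sketch of these pieces. It assumes the same 10-city distance matrix as the C code (`distance[i][j] = |i - j|`) and takes a `random.Random` instance for reproducibility. `pmx` is the textbook partially mapped crossover, which repairs duplicates differently from the conflict-swap repair in `cross()`. All function names here are illustrative.

```python
import random

CITY_NUM = 10
# Same matrix as the C code: distance[i][j] = |i - j|
DISTANCE = [[abs(i - j) for j in range(CITY_NUM)] for i in range(CITY_NUM)]


def tour_length(tour):
    """Length of a closed tour, including the edge back to the first city."""
    total = sum(DISTANCE[tour[k - 1]][tour[k]] for k in range(1, len(tour)))
    return total + DISTANCE[tour[-1]][tour[0]]


def roulette_select(population, rng):
    """Pick one tour with probability proportional to fitness 1 / length."""
    fitness = [1.0 / tour_length(t) for t in population]
    pick = rng.random() * sum(fitness)
    cumulative = 0.0
    for tour, fit in zip(population, fitness):
        cumulative += fit
        if pick <= cumulative:
            return tour
    return population[-1]  # guard against floating-point round-off


def pmx(p1, p2, a, b):
    """Partially mapped crossover: the child keeps p1[a..b], the rest comes from p2."""
    n = len(p1)
    child = [None] * n
    child[a:b + 1] = p1[a:b + 1]
    segment = set(p1[a:b + 1])
    for i in range(a, b + 1):
        gene = p2[i]
        if gene in segment:
            continue
        # follow the p1 -> p2 mapping until the position falls outside the segment
        pos = i
        while a <= pos <= b:
            pos = p2.index(p1[pos])
        child[pos] = gene
    # every still-empty position takes p2's city at that position
    for i in range(n):
        if child[i] is None:
            child[i] = p2[i]
    return child


if __name__ == "__main__":
    print(tour_length(list(range(CITY_NUM))))  # 18: nine unit steps plus the 9-unit return edge
    print(pmx([1, 2, 3, 4, 5, 6, 7, 8, 9], [9, 3, 7, 8, 2, 6, 5, 1, 4], 3, 6))  # [9, 3, 2, 4, 5, 6, 7, 1, 8]
```

Like `select()` in the C code, `roulette_select` falls back to the last individual when round-off leaves `pick` just above the final cumulative sum.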

```c
#include <stdio.h>
#include <stdlib.h>
#include <time.h>

#define cityNum 10
#define popSize 10
#define croRate 0.85
#define mutRate 0.1
#define MAX 999

// Chromosome: one candidate tour
struct Chrom
{
    int cityArr[cityNum]; // visiting order (city indices)
    char name;            // chromosome label
    float adapt;          // fitness = 1 / tour length
    int dis;              // tour length
};

struct Chrom genes[popSize];
struct Chrom genesNew[popSize];
struct Chrom temp;

char names[cityNum] = {'A','B','C','D','E','F','G','H','I','J'};

int distance[cityNum][cityNum] = {
    { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9 },
    { 1, 0, 1, 2, 3, 4, 5, 6, 7, 8 },
    { 2, 1, 0, 1, 2, 3, 4, 5, 6, 7 },
    { 3, 2, 1, 0, 1, 2, 3, 4, 5, 6 },
    { 4, 3, 2, 1, 0, 1, 2, 3, 4, 5 },
    { 5, 4, 3, 2, 1, 0, 1, 2, 3, 4 },
    { 6, 5, 4, 3, 2, 1, 0, 1, 2, 3 },
    { 7, 6, 5, 4, 3, 2, 1, 0, 1, 2 },
    { 8, 7, 6, 5, 4, 3, 2, 1, 0, 1 },
    { 9, 8, 7, 6, 5, 4, 3, 2, 1, 0 }};

// Build popSize random permutations of the cities
void initGroup()
{
    int i, j, k;
    int t = 0;
    int flag = 0;
    srand((unsigned)time(NULL));
    for (i = 0; i < popSize; i++)
    {
        temp.name = names[i];
        temp.adapt = 0.0f;
        temp.dis = 0;
        for (j = 0; j < cityNum;)
        {
            t = rand() % cityNum;
            flag = 1;
            // reject t if it already appears earlier in this chromosome
            for (k = 0; k < j; k++)
            {
                if (genes[i].cityArr[k] == t)
                {
                    flag = 0;
                    break;
                }
            }
            if (flag)
            {
                temp.cityArr[j] = t;
                genes[i] = temp;
                j++;
            }
        }
    }
}

// Tour length (including the edge back to the start) and fitness of every chromosome
void popFitness()
{
    int i, j, n1, n2;
    for (i = 0; i < popSize; i++)
    {
        genes[i].dis = 0;
        for (j = 1; j < cityNum; j++)
        {
            n1 = genes[i].cityArr[j - 1];
            n2 = genes[i].cityArr[j];
            genes[i].dis += distance[n1][n2];
        }
        genes[i].dis += distance[genes[i].cityArr[0]][genes[i].cityArr[cityNum - 1]];
        genes[i].adapt = 1.0f / genes[i].dis;
    }
}

// Index of the fittest chromosome (largest adapt, i.e. shortest tour)
int chooseBest()
{
    int choose = 0;
    float best = genes[0].adapt;
    for (int i = 1; i < popSize; i++)
    {
        if (genes[i].adapt > best)
        {
            best = genes[i].adapt;
            choose = i;
        }
    }
    return choose;
}

// Roulette-wheel selection: pick chromosomes with probability proportional to fitness
void select()
{
    float biggestSum = 0.0f;
    float adapt_pro[popSize];
    float pick = 0.0f;
    int i, j;
    for (i = 0; i < popSize; i++)
        biggestSum += genes[i].adapt;
    for (i = 0; i < popSize; i++)
        adapt_pro[i] = genes[i].adapt / biggestSum;
    for (i = 0; i < popSize; i++)
    {
        pick = (float)rand() / RAND_MAX;
        /********** Begin **********/
        float sum = 0.0f;
        genesNew[i] = genes[popSize - 1]; // fallback if round-off leaves pick above the last cumulative sum
        for (j = 0; j < popSize; j++)
        {
            sum += adapt_pro[j];
            if (pick <= sum)
            {
                genesNew[i] = genes[j];
                break;
            }
        }
        /********** End **********/
    }
    for (i = 0; i < popSize; i++)
        genes[i] = genesNew[i];
}

// Two-point crossover on consecutive pairs; cities duplicated outside the swapped
// segment are repaired by exchanging them between the two children
void cross()
{
    float pick;
    int choice1, choice2;
    int pos1, pos2;
    int temp;
    int conflict1[cityNum]; // positions of duplicated cities in child 1
    int conflict2[cityNum]; // positions of duplicated cities in child 2
    int num1, num2;
    int index1, index2;
    int move = 0;
    while (move < popSize - 1)
    {
        pick = (float)rand() / RAND_MAX;
        if (pick > croRate)
        {
            move += 2;
            continue;
        }
        choice1 = move;
        choice2 = move + 1;
        // crossover points in [1, cityNum - 2]
        pos1 = rand() % (cityNum - 2) + 1;
        pos2 = rand() % (cityNum - 2) + 1;
        if (pos1 > pos2)
        {
            temp = pos1;
            pos1 = pos2;
            pos2 = temp;
        }
        // swap the segment [pos1, pos2]
        for (int j = pos1; j <= pos2; j++)
        {
            temp = genes[choice1].cityArr[j];
            genes[choice1].cityArr[j] = genes[choice2].cityArr[j];
            genes[choice2].cityArr[j] = temp;
        }
        num1 = 0;
        num2 = 0;
        if (pos1 > 0 && pos2 < cityNum - 1)
        {
            /********** Begin **********/
            // duplicates to the left of the segment
            for (int j = 0; j < pos1; j++)
            {
                for (int k = pos1; k <= pos2; k++)
                {
                    if (genes[choice1].cityArr[j] == genes[choice1].cityArr[k])
                        conflict1[num1++] = j;
                    if (genes[choice2].cityArr[j] == genes[choice2].cityArr[k])
                        conflict2[num2++] = j;
                }
            }
            /********** End **********/
            // duplicates to the right of the segment
            for (int j = pos2 + 1; j < cityNum; j++)
            {
                for (int k = pos1; k <= pos2; k++)
                {
                    /********** Begin **********/
                    if (genes[choice1].cityArr[j] == genes[choice1].cityArr[k])
                        conflict1[num1++] = j;
                    if (genes[choice2].cityArr[j] == genes[choice2].cityArr[k])
                        conflict2[num2++] = j;
                    /********** End **********/
                }
            }
        }
        // exchange the duplicated cities so both children are valid permutations again
        if (num1 == num2 && num1 > 0)
        {
            for (int j = 0; j < num1; j++)
            {
                index1 = conflict1[j];
                index2 = conflict2[j];
                temp = genes[choice1].cityArr[index1];
                genes[choice1].cityArr[index1] = genes[choice2].cityArr[index2];
                genes[choice2].cityArr[index2] = temp;
            }
        }
        move += 2;
    }
}

// Swap mutation: exchange two random cities and keep the change only if the tour does not get longer
void mutation()
{
    double pick;
    int pos1, pos2, temp;
    for (int i = 0; i < popSize; i++)
    {
        pick = (double)rand() / RAND_MAX;
        if (pick > mutRate)
            continue;
        pos1 = rand() % cityNum;
        pos2 = rand() % cityNum;
        int a = genes[i].dis; // tour length before the mutation
        temp = genes[i].cityArr[pos1];
        genes[i].cityArr[pos1] = genes[i].cityArr[pos2];
        genes[i].cityArr[pos2] = temp;
        popFitness();
        if (genes[i].dis > a)
        {
            // the swap made the tour longer: undo it
            temp = genes[i].cityArr[pos1];
            genes[i].cityArr[pos1] = genes[i].cityArr[pos2];
            genes[i].cityArr[pos2] = temp;
            popFitness(); // refresh dis/adapt for the restored tour
        }
    }
}
```

Solving the Romania problem with a search algorithm

```cpp
#include <iostream>
#include <vector>
#include <cstring>
#include <stack>
#include <algorithm>
#include <cmath>

// City indices on the Romania map
#define A 0
#define B 1
#define C 2
#define D 3
#define E 4
#define F 5
#define G 6
#define H 7
#define I 8
#define L 9
#define M 10
#define N 11
#define O 12
#define P 13
#define R 14
#define S 15
#define T 16
#define U 17
#define V 18
#define Z 19

using namespace std;

// Heuristic h(n): straight-line distance from each city to the goal B (Bucharest), so h[B] == 0
int h[20] =
{ 366,   0, 160, 242, 161,
  178,  77, 151, 226, 244,
  241, 234, 380,  98, 193,
  253, 329,  80, 199, 374 };

struct node
{
    int g;    // path cost from the start
    int h;    // heuristic estimate to the goal
    int f;    // f = g + h
    int name; // city index

    node(int name, int g, int h)
    {
        this->name = name;
        this->g = g;
        this->h = h;
        this->f = g + h;
    }

    // order by f so that sort() puts the most promising node first
    bool operator<(const node &a) const
    {
        return f < a.f;
    }
};

class Graph
{
public:
    Graph()
    {
        memset(graph, -1, sizeof(graph)); // -1 means "no road"
    }

    int getEdge(int from, int to)
    {
        return graph[from][to];
    }

    void addEdge(int from, int to, int cost)
    {
        if (from >= 20 || from < 0 || to >= 20 || to < 0)
            return;
        graph[from][to] = cost;
    }

    // Undirected road map: every road is added in both directions
    void init()
    {
        addEdge(O, Z, 71);  addEdge(Z, O, 71);
        addEdge(O, S, 151); addEdge(S, O, 151);
        addEdge(Z, A, 75);  addEdge(A, Z, 75);
        addEdge(A, S, 140); addEdge(S, A, 140);
        addEdge(A, T, 118); addEdge(T, A, 118);
        addEdge(T, L, 111); addEdge(L, T, 111);
        addEdge(L, M, 70);  addEdge(M, L, 70);
        addEdge(M, D, 75);  addEdge(D, M, 75);
        addEdge(D, C, 120); addEdge(C, D, 120);
        addEdge(C, R, 146); addEdge(R, C, 146);
        addEdge(S, R, 80);  addEdge(R, S, 80);
        addEdge(S, F, 99);  addEdge(F, S, 99);
        addEdge(F, B, 211); addEdge(B, F, 211);
        addEdge(P, C, 138); addEdge(C, P, 138);
        addEdge(R, P, 97);  addEdge(P, R, 97);
        addEdge(P, B, 101); addEdge(B, P, 101);
        addEdge(B, G, 90);  addEdge(G, B, 90);
        addEdge(B, U, 85);  addEdge(U, B, 85);
        addEdge(U, H, 98);  addEdge(H, U, 98);
        addEdge(H, E, 86);  addEdge(E, H, 86);
        addEdge(U, V, 142); addEdge(V, U, 142);
        addEdge(I, V, 92);  addEdge(V, I, 92);
        addEdge(I, N, 87);  addEdge(N, I, 87);
    }

private:
    int graph[20][20];
};

bool list[20];         // list[i]: city i is in the open list
vector<node> openList; // open list, kept sorted by f
bool closeList[20];    // closeList[i]: city i has been expanded
stack<int> road;       // used to print the path
int parent[20];        // parent[i]: predecessor of city i on the best known path

void A_star(int goal, node &src, Graph &graph)
{
    openList.push_back(src);
    sort(openList.begin(), openList.end());
    while (!openList.empty())
    {
        /********** Begin **********/
        node cur = openList[0]; // node with the smallest f
        if (cur.name == goal)
            return; // the goal stays at openList[0] for print_result
        openList.erase(openList.begin());
        closeList[cur.name] = true;
        list[cur.name] = false;
        for (int i = 0; i < 20; i++)
        {
            if (graph.getEdge(cur.name, i) != -1 && !closeList[i])
            {
                int cost = cur.g + graph.getEdge(cur.name, i);
                if (list[i])
                {
                    // already in the open list: keep the cheaper route
                    for (size_t j = 0; j < openList.size(); j++)
                    {
                        if (openList[j].name == i)
                        {
                            if (openList[j].g > cost)
                            {
                                openList[j].g = cost;
                                openList[j].f = openList[j].h + cost;
                                parent[i] = cur.name;
                            }
                            break;
                        }
                    }
                }
                else
                {
                    node newNode(i, cost, h[i]);
                    openList.push_back(newNode);
                    list[i] = true;
                    parent[i] = cur.name;
                }
            }
        }
        sort(openList.begin(), openList.end());
        /********** End **********/
    }
}

// Print the path from the start to the goal and its total cost
void print_result(Graph &graph)
{
    int p = openList[0].name; // the goal
    int lastNodeNum;
    road.push(p);
    while (parent[p] != -1) // parent[start] is expected to be -1
    {
        road.push(parent[p]);
        p = parent[p];
    }
    lastNodeNum = road.top();
    int cost = 0;
    cout << "solution: ";
    while (!road.empty())
    {
        cout << road.top() << "-> ";
        if (road.top() != lastNodeNum)
        {
            cost += graph.getEdge(lastNodeNum, road.top());
            lastNodeNum = road.top();
        }
        road.pop();
    }
    cout << "end" << endl;
    cout << "cost:" << cost;
}
```
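The same search can also be written with a binary heap, which avoids re-sorting a vector after every expansion. The following is a minimal Python sketch. It assumes the road costs from `Graph::init()` and the `h` values from the C++ code, and it keeps the single-letter city names used there. `build_graph` and `a_star` are illustrative names.

```python
import heapq

# Heuristic values from the C++ array h[], keyed by the letters of the #defines (A = 0 ... Z = 19)
H = dict(zip("ABCDEFGHILMNOPRSTUVZ",
             [366, 0, 160, 242, 161, 178, 77, 151, 226, 244,
              241, 234, 380, 98, 193, 253, 329, 80, 199, 374]))

# Roads from Graph::init(), each listed once (the graph is undirected)
EDGES = [("O", "Z", 71), ("O", "S", 151), ("Z", "A", 75), ("A", "S", 140),
         ("A", "T", 118), ("T", "L", 111), ("L", "M", 70), ("M", "D", 75),
         ("D", "C", 120), ("C", "R", 146), ("S", "R", 80), ("S", "F", 99),
         ("F", "B", 211), ("P", "C", 138), ("R", "P", 97), ("P", "B", 101),
         ("B", "G", 90), ("B", "U", 85), ("U", "H", 98), ("H", "E", 86),
         ("U", "V", 142), ("I", "V", 92), ("I", "N", 87)]


def build_graph(edges):
    """Adjacency lists with both directions of every road."""
    graph = {}
    for u, v, cost in edges:
        graph.setdefault(u, []).append((v, cost))
        graph.setdefault(v, []).append((u, cost))
    return graph


def a_star(graph, h, start, goal):
    """Return (path, cost) of a cheapest path, or (None, inf) if the goal is unreachable."""
    open_heap = [(h[start], 0, start)]  # entries are (f, g, city)
    best_g = {start: 0}
    parent = {start: None}
    while open_heap:
        f, g, city = heapq.heappop(open_heap)
        if city == goal:
            path = []
            while city is not None:
                path.append(city)
                city = parent[city]
            return path[::-1], g
        if g > best_g[city]:
            continue  # stale entry: a cheaper route to this city was pushed later
        for nxt, cost in graph.get(city, []):
            new_g = g + cost
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                parent[nxt] = city
                heapq.heappush(open_heap, (new_g + h[nxt], new_g, nxt))
    return None, float("inf")


if __name__ == "__main__":
    print(a_star(build_graph(EDGES), H, "A", "B"))  # (['A', 'S', 'R', 'P', 'B'], 418)
```

Pushing a city again and skipping stale heap entries does the same job as the linear search through `openList` that the C++ version uses to lower a node's `g`.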

Finding the optimal move in a game tree with alpha-beta pruning

```python
# -*- coding:utf-8 -*-
import copy  # mind the difference between deep and shallow copies of objects!


class GameNode:
    '''Game-tree node

    Attributes:
        name - string node name
        val - int node value
        children - list[GameNode] child nodes
    '''
    def __init__(self, name='', val=0):
        self.name = name  # char
        self.val = val  # int
        self.children = []  # list of nodes


class GameTree:
    '''Game tree

    Attributes:
        root - GameNode root of the game tree

    Methods:
        buildTree - build the game tree
    '''
    def __init__(self):
        self.root = None  # GameNode: root of the game tree

    def buildTree(self, data_list, root):
        '''Build the game tree recursively

        Args:
            data_list - list[] like this ['A', ['B', ('E', 3), ('F', 12)], ['C', ('H', 2)], ['D', ('K', 14)]]
            root - GameNode
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        if self.root is None:
            self.root = root
        for item in data_list[1:]:
            if isinstance(item, list):
                # internal node: ['name', child, child, ...]
                child = GameNode(item[0])
                root.children.append(child)
                self.buildTree(item, child)
            else:
                # leaf: ('name', value)
                root.children.append(GameNode(item[0], item[1]))
        #********** End **********#


class AlphaBeta:
    '''Minimax search with alpha-beta pruning

    Attributes:
        game_tree - GameTree the game tree

    Methods:
        minmax_with_alphabeta - minimax with alpha-beta pruning; computes the best move
        max_value - value of a MAX node
        min_value - value of a MIN node
        get_value - return a node's value
        isTerminal - whether a node is terminal
    '''
    def __init__(self, game_tree):
        self.game_tree = game_tree  # GameTree: the game tree

    def minmax_with_alphabeta(self, node):
        '''Minimax with alpha-beta pruning; computes the best move

        Args:
            node - GameNode game-tree node
        Returns:
            clf - GameNode the child node of the best move
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        clf = self.max_value(node, -float('inf'), float('inf'))
        # the best move is the child whose (MIN-node) value equals the root value
        for child in node.children:
            if clf == child.val:
                return child
        #********** End **********#

    def max_value(self, node, alpha, beta):
        '''Value of a MAX node

        Args:
            node - GameNode game-tree node
            alpha - int lower bound of the pruning window
            beta - int upper bound of the pruning window
        Returns:
            clf - int the largest value among the children
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        # a MAX node raises the lower bound alpha
        if self.isTerminal(node):
            return self.get_value(node)
        clf = -float('inf')
        for child in node.children:
            clf = max(self.min_value(child, alpha, beta), clf)
            alpha = max(clf, alpha)
            if alpha >= beta:
                return alpha  # cut-off: MIN will never let play reach this node
        node.val = alpha
        return alpha
        #********** End **********#

    def min_value(self, node, alpha, beta):
        '''Value of a MIN node

        Args:
            node - GameNode game-tree node
            alpha - int lower bound of the pruning window
            beta - int upper bound of the pruning window
        Returns:
            clf - int the smallest value among the children
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        # a MIN node lowers the upper bound beta
        if self.isTerminal(node):
            return self.get_value(node)
        clf = float('inf')
        for child in node.children:
            clf = min(self.max_value(child, alpha, beta), clf)
            beta = min(beta, clf)
            if alpha >= beta:
                return beta  # cut-off: MAX already has a better alternative
        node.val = beta
        return beta
        #********** End **********#

    def get_value(self, node):
        '''Return the node's value

        Args:
            node - GameNode game-tree node
        Returns:
            clf - int the node's value, i.e. node.val
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        return node.val
        #********** End **********#

    def isTerminal(self, node):
        '''Whether the node is terminal (has no children)

        Args:
            node - GameNode game-tree node
        Returns:
            clf - bool True for a terminal node, otherwise False
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        return len(node.children) == 0
        #********** End **********#
```
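Running the same search directly on the nested-list format that `buildTree` accepts shows which leaves alpha-beta actually skips. The following is a minimal sketch. The three-branch example tree is hypothetical, and `alphabeta` and `best_move` are illustrative names, not part of the exercise template.

```python
import math

# Hypothetical tree in the buildTree format: MAX at the root, MIN at depth 1
TREE = ['A', ['B', ('E', 3), ('F', 12), ('G', 8)],
             ['C', ('H', 2), ('I', 4), ('J', 6)],
             ['D', ('K', 14), ('L', 5), ('M', 2)]]


def alphabeta(node, alpha, beta, maximizing, visited):
    """Minimax value of node; visited collects the names of evaluated leaves."""
    if isinstance(node, tuple):  # leaf: (name, value)
        visited.append(node[0])
        return node[1]
    children = node[1:]
    if maximizing:
        value = -math.inf
        for child in children:
            value = max(value, alphabeta(child, alpha, beta, False, visited))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # remaining children cannot change the result
        return value
    value = math.inf
    for child in children:
        value = min(value, alphabeta(child, alpha, beta, True, visited))
        beta = min(beta, value)
        if alpha >= beta:
            break
    return value


def best_move(tree):
    """Return (best child name, root value, leaves evaluated) for a MAX root."""
    alpha, best_name, visited = -math.inf, None, []
    for child in tree[1:]:
        v = alphabeta(child, alpha, math.inf, False, visited)
        if v > alpha:
            alpha, best_name = v, child[0]
    return best_name, alpha, visited


print(best_move(TREE))  # ('B', 3, ['E', 'F', 'G', 'H', 'K', 'L', 'M'])
```

Leaves I and J are never evaluated. Once H = 2 is seen, C is worth at most 2, and MAX already has 3 from B.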

Naive Bayes classification
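Training a naive Bayes classifier boils down to counting. The following is a minimal Python sketch of the same estimates using `collections.Counter`, run on the five-sample example from the `condition_prob` docstring in the code of this section. Unlike the exercise solution, it does not assume the labels are exactly 0 and 1. `fit_naive_bayes` and `predict_naive_bayes` are illustrative names.

```python
from collections import Counter, defaultdict


def fit_naive_bayes(features, labels):
    """Return (priors, cond) with cond[c][j][v] = P(feature j = v | class c)."""
    n = len(labels)
    class_counts = Counter(labels)
    priors = {c: k / n for c, k in class_counts.items()}
    counts = defaultdict(Counter)  # (class, column) -> Counter of values
    for row, c in zip(features, labels):
        for j, v in enumerate(row):
            counts[(c, j)][v] += 1
    # every value observed in a column gets an entry for every class (possibly 0)
    cond = {c: {j: {v: counts[(c, j)][v] / class_counts[c]
                    for v in {row[j] for row in features}}
                for j in range(len(features[0]))}
            for c in class_counts}
    return priors, cond


def predict_naive_bayes(priors, cond, x):
    """Class with the largest P(c) * prod_j P(x_j | c)."""
    def score(c):
        p = priors[c]
        for j, v in enumerate(x):
            p *= cond[c][j].get(v, 0.0)
        return p
    return max(priors, key=score)


X = [[2, 1, 1], [1, 2, 2], [2, 2, 2], [2, 1, 2], [1, 2, 3]]
y = [1, 0, 1, 0, 1]
priors, cond = fit_naive_bayes(X, y)
print(predict_naive_bayes(priors, cond, [2, 1, 2]))  # 0: 0.4*0.5*0.5*1 = 0.1 beats 0.6*(2/3)*(1/3)*(1/3)
```

A single zero conditional probability, such as P(column 2 = 3 | class 0) = 0 here, wipes out the whole product for that class. Laplace smoothing addresses this.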

```python
import numpy as np


class NaiveBayesClassifier(object):
    def __init__(self):
        '''
        self.label_prob holds the probability of each class in the data,
        e.g. {0: 0.333, 1: 0.667} means class 0 occurs with probability 0.333 and class 1 with probability 0.667
        '''
        self.label_prob = {}
        '''
        self.condition_prob holds, for each class, the probability of each value of each feature.
        For example, if the training features are
            [[2, 1, 1],
             [1, 2, 2],
             [2, 2, 2],
             [2, 1, 2],
             [1, 2, 3]]
        and the labels are [1, 0, 1, 0, 1], then
            given label 0, column 0 is 1 with probability 0.5 and 2 with probability 0.5;
            given label 0, column 1 is 1 with probability 0.5 and 2 with probability 0.5;
            given label 0, column 2 is 1 with probability 0, 2 with probability 1 and 3 with probability 0;
            given label 1, column 0 is 1 with probability 0.333 and 2 with probability 0.666;
            given label 1, column 1 is 1 with probability 0.333 and 2 with probability 0.666;
            given label 1, column 2 is 1, 2 or 3, each with probability 0.333.
        So self.condition_prob is:
            {
                0: {0: {1: 0.5, 2: 0.5},
                    1: {1: 0.5, 2: 0.5},
                    2: {1: 0, 2: 1, 3: 0}},
                1: {0: {1: 0.333, 2: 0.666},
                    1: {1: 0.333, 2: 0.666},
                    2: {1: 0.333, 2: 0.333, 3: 0.333}}
            }
        '''
        self.condition_prob = {}

    def fit(self, feature, label):
        '''
        Train the model, storing the probabilities in self.label_prob and self.condition_prob
        :param feature: ndarray of all training features
        :param label: ndarray of all training labels
        :return: None
        '''
        #********* Begin *********#
        # label_prob (this solution assumes binary labels 0 and 1)
        num = len(label)
        cnt = sum(1 for item in label if item == 1)  # number of samples with label 1
        self.label_prob[0] = (num - cnt) / num
        self.label_prob[1] = cnt / num
        # condition_prob: one {value: count} dict per (class, feature column)
        self.condition_prob[0] = {}
        self.condition_prob[1] = {}
        for item in self.condition_prob:
            for feat in range(len(feature[0])):
                self.condition_prob[item][feat] = {}
        # count feature values per class; every observed value is registered under
        # both classes so that a value never seen with one class gets probability 0
        for i, data in enumerate(feature):  # i: sample index
            for j, feat in enumerate(data):  # j: feature index
                self.condition_prob[0][j].setdefault(feat, 0)
                self.condition_prob[1][j].setdefault(feat, 0)
                if label[i] == 0:
                    self.condition_prob[0][j][feat] += 1
                else:
                    self.condition_prob[1][j][feat] += 1
        # conditional probabilities: divide each count by the number of samples in its class
        for feat in range(len(feature[0])):
            for item in self.condition_prob[0][feat]:
                self.condition_prob[0][feat][item] /= (num - cnt)
            for item in self.condition_prob[1][feat]:
                self.condition_prob[1][feat][item] /= cnt
        #********* End *********#

    def predict(self, feature):
        '''
        Predict labels for the given data
        :param feature: ndarray of all test features
        :return: list of predicted labels
        '''
        # ********* Begin *********#
        res = []
        for item in feature:
            P_good = self.label_prob[1]
            P_bad = self.label_prob[0]
            for feat_idx, feat in enumerate(item):
                P_good *= self.condition_prob[1][feat_idx][feat]
                P_bad *= self.condition_prob[0][feat_idx][feat]
            res.append(1 if P_good > P_bad else 0)
        return res
        #********* End *********#
```

Laplace smoothing

```python
import numpy as np


class NaiveBayesClassifier(object):
    def __init__(self):
        '''
        self.label_prob holds the probability of each class in the data,
        e.g. {0: 0.333, 1: 0.667} means class 0 occurs with probability 0.333 and class 1 with probability 0.667
        '''
        self.label_prob = {}
        '''
        self.condition_prob holds, for each class, the probability of each value of each feature.
        For example, if the training features are
            [[2, 1, 1],
             [1, 2, 2],
             [2, 2, 2],
             [2, 1, 2],
             [1, 2, 3]]
        and the labels are [1, 0, 1, 0, 1], then
            given label 0, column 0 is 1 with probability 0.5 and 2 with probability 0.5;
            given label 0, column 1 is 1 with probability 0.5 and 2 with probability 0.5;
            given label 0, column 2 is 1 with probability 0, 2 with probability 1 and 3 with probability 0;
            given label 1, column 0 is 1 with probability 0.333 and 2 with probability 0.666;
            given label 1, column 1 is 1 with probability 0.333 and 2 with probability 0.666;
            given label 1, column 2 is 1, 2 or 3, each with probability 0.333.
        So self.condition_prob is:
            {
                0: {0: {1: 0.5, 2: 0.5},
                    1: {1: 0.5, 2: 0.5},
                    2: {1: 0, 2: 1, 3: 0}},
                1: {0: {1: 0.333, 2: 0.666},
                    1: {1: 0.333, 2: 0.666},
                    2: {1: 0.333, 2: 0.333, 3: 0.333}}
            }
        '''
        self.condition_prob = {}

    def fit(self, feature, label):
        '''
        Train the model, storing the probabilities in self.label_prob and self.condition_prob
        :param feature: ndarray of all training features
        :param label: ndarray of all training labels
        :return: None
        '''
        #********* Begin *********#
        # label_prob with Laplace smoothing: (class count + 1) / (N + number of classes)
        num = len(label)
        cnt = sum(1 for item in label if item == 1)  # number of samples with label 1
        types = 2  # number of classes
        self.label_prob[0] = ((num - cnt) + 1) / (num + types)
        self.label_prob[1] = (cnt + 1) / (num + types)
        # condition_prob: one {value: count} dict per (class, feature column)
        self.condition_prob[0] = {}
        self.condition_prob[1] = {}
        for item in self.condition_prob:
            for feat in range(len(feature[0])):
                self.condition_prob[item][feat] = {}
        # count feature values per class
        for i, data in enumerate(feature):  # i: sample index
            for j, feat in enumerate(data):  # j: feature index
                # add one to the numerator: every observed value starts at 1 in both classes
                self.condition_prob[0][j].setdefault(feat, 1)
                self.condition_prob[1][j].setdefault(feat, 1)
                if label[i] == 0:
                    self.condition_prob[0][j][feat] += 1
                else:
                    self.condition_prob[1][j][feat] += 1
        # conditional probabilities: divide by (class count + number of distinct values of the feature)
        for feat in range(len(feature[0])):
            for item in self.condition_prob[0][feat]:
                self.condition_prob[0][feat][item] /= (num - cnt) + len(self.condition_prob[0][feat])
            for item in self.condition_prob[1][feat]:
                self.condition_prob[1][feat][item] /= cnt + len(self.condition_prob[1][feat])
        #********* End *********#

    def predict(self, feature):
        '''
        Predict labels for the given data
        :param feature: ndarray of all test features
        :return: ndarray of predicted labels
        '''
        result = []
        # predict every test sample
        for i, f in enumerate(feature):
            # probability of each possible class
            prob = np.zeros(len(self.label_prob.keys()))
            ii = 0
            for label, label_prob in self.label_prob.items():
                # P(label) * prod_j P(f[j] | label)
                prob[ii] = label_prob
                for j in range(len(feature[0])):
                    prob[ii] *= self.condition_prob[label][j][f[j]]
                ii += 1
            # the class with the largest probability is the prediction
            result.append(list(self.label_prob.keys())[np.argmax(prob)])
        return np.array(result)
```
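With the add-one rule, each prior becomes (class count + 1) / (N + number of classes). Each conditional becomes (count of the value within the class + 1) / (class count + number of distinct values of that feature). The following is a minimal sketch that computes these as exact fractions on the same five-sample docstring example, so the numbers can be checked by hand. `laplace_estimates` is an illustrative name.

```python
from collections import Counter
from fractions import Fraction


def laplace_estimates(features, labels):
    """Add-one smoothed priors and conditionals, returned as exact fractions."""
    n = len(labels)
    class_counts = Counter(labels)
    k = len(class_counts)  # number of classes
    priors = {c: Fraction(cnt + 1, n + k) for c, cnt in class_counts.items()}
    cond = {}
    for c, cnt in class_counts.items():
        cond[c] = {}
        for j in range(len(features[0])):
            values = sorted({row[j] for row in features})  # distinct values of feature j
            in_class = Counter(row[j] for row, lab in zip(features, labels) if lab == c)
            cond[c][j] = {v: Fraction(in_class[v] + 1, cnt + len(values)) for v in values}
    return priors, cond


X = [[2, 1, 1], [1, 2, 2], [2, 2, 2], [2, 1, 2], [1, 2, 3]]
y = [1, 0, 1, 0, 1]
priors, cond = laplace_estimates(X, y)
print(priors[0], priors[1])  # 3/7 4/7
print(cond[0][2][1])         # 1/5, where the unsmoothed estimate was 0
```

The smoothed priors still sum to 1 (3/7 + 4/7), and so does each smoothed conditional distribution. This only holds when the numerator and denominator are grouped as ((count + 1) / (N + classes)).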

Solving a classification problem with the decision tree algorithm

```python
# -*- coding: UTF-8 -*-
import math


class TreeNode:
    '''Decision-tree node

    Attributes:
        row - int number of rows in the data (13 initially)
        col - int number of columns in the data (12 initially)
        data - list[[]] 2-D list; the initial data is defined in testDecisionTree.py
            row 0: [column 0: example (sample name), columns 1-10: attribute names, column 11: WillW ait (target class)]
            rows 1-12: [sample name, attribute values, target class]
            data = [
                ['example', 'Alt', 'Bar', 'Fri', 'Hun', 'Pat', 'Price', 'Rain', 'Res', 'Type', 'Est', 'WillW ait'],
                ['x1', 'Yes', 'No', 'No', 'Yes', 'Some', '$$$', 'No', 'Yes', 'French', '0-10', 'y1=Yes' ],
                ['x2', 'Yes', 'No', 'No', 'Yes', 'Full', '$', 'No', 'No', 'Thai', '30-60', 'y2=No' ],
                ...
                ['x12', 'Yes', 'Yes', 'Yes', 'Yes', 'Full', '$', 'No', 'No', 'Burger', '30-60', 'y12=Yes' ] ]
        targ - string classification result: Yes or No
        name - string node name: the attribute tested at this node
        attr - list[string] the values of that attribute
        child - list[TreeNode] child nodes, one per entry of attr
    '''
    def __init__(self, row, col, data):
        self.row = row
        self.col = col
        self.data = data
        self.targ = ''  # target result
        self.name = ''  # attribute name
        self.attr = []  # attribute value list
        self.child = []  # attribute - TreeNode list


class DecisionTree:
    '''Decision tree

    Attributes:
        root - TreeNode root of the decision tree

    Methods:
        build - build the decision tree
        predict - predict the class label of a sample
        _parse_data_ - find the attribute with the largest information gain
        _calc_all_gain_ - entropy of the whole sample
        _calc_attr_gain_ - conditional entropy of the target given one attribute
        _calc_bool_gain_ - helper: entropy of a binary random variable
        _get_targ_ - class label of a leaf node
        _is_leaf_ - whether a node is a leaf
    '''
    def __init__(self, row, col, data):
        self.root = TreeNode(row, col, data)

    def build(self, root):
        '''Build the decision tree recursively

        Args:
            root - TreeNode, initially the root of the decision tree
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        if self._is_leaf_(root):
            root.targ = self._get_targ_(root)
            return
        root.name, root.attr = self._parse_data_(root.row, root.col, root.data)
        x = root.data[0].index(root.name)  # column of the chosen attribute
        for attr in root.attr:
            # subset: header row + samples with this attribute value, without column x
            data = []
            for i in range(root.row):
                if i != 0 and root.data[i][x] != attr:
                    continue
                data.append([root.data[i][j] for j in range(root.col) if j != x])
            root.child.append(TreeNode(len(data), root.col - 1, data))
        for node in root.child:
            self.build(node)
        #********** End **********#

    def predict(self, root, x):
        '''Predict the class of a sample

        Args:
            root - TreeNode root of the decision tree
            x - [[]] test data, e.g.
                [ ['example', 'Alt', 'Bar', 'Fri', 'Hun', 'Pat', 'Price', 'Rain', 'Res', 'Type', 'Est'],
                  ['x1', 'Yes', 'No', 'No', 'Yes', 'Some', '$$$', 'No', 'Yes', 'French','0-10'] ]
        Returns:
            clf - string class label: Yes or No
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        if self._is_leaf_(root):
            return root.targ
        idx = x[0].index(root.name)
        for idattr, attr in enumerate(root.attr):
            if attr == x[1][idx]:
                return self.predict(root.child[idattr], x)
        #********** End **********#

    def _parse_data_(self, row, col, data):
        '''Find the attribute with the largest information gain

        Args:
            row - int number of rows in the data
            col - int number of columns in the data
            data - list[[]] 2-D list in the format described in TreeNode:
                row 0 holds the column names, rows 1.. hold [sample name, attribute values, target class]
        Returns:
            clf - string, list[] name of the attribute with the largest information gain, and its values
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        maxGain = -float('inf')
        maxName = ''
        maxAttr = []
        maxIdx = -1
        gains = self._calc_all_gain_(row - 1, [r[-1] for r in data[1:]])  # H(Y)
        for i in range(1, col - 1):
            tmp = [[data[j][i], data[j][-1]] for j in range(1, row)]
            # information gain = H(Y) - H(Y | attribute i)
            tmpGain = self._calc_attr_gain_(row - 1, tmp)
            if gains - tmpGain > maxGain:
                maxGain = gains - tmpGain
                maxName = data[0][i]
                maxIdx = i
        for i in range(1, row):
            if data[i][maxIdx] not in maxAttr:
                maxAttr.append(data[i][maxIdx])
        return maxName, maxAttr
        #********** End **********#

    def _calc_all_gain_(self, row, data):
        '''Entropy of the whole sample

        Args:
            row - int number of samples
            data - list[] 1-D list of target classes, e.g. ['y1=Yes', 'y2=No', ..., 'y12=Yes']
        Returns:
            clf - float entropy
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        dict_ = {'yes': 0.0, 'no': 0.0}
        for i in range(row):
            # targets look like 'y1=Yes' / 'y2=No': the last character tells them apart
            if data[i][-1] == 's':
                dict_['yes'] += 1.0
            else:
                dict_['no'] += 1.0
        entropy = 0.0
        for _key in dict_:
            p = dict_[_key] / float(row)
            if p > 0:  # 0 * log(0) is taken as 0
                entropy += p * math.log(p, 2)
        return -entropy
        #********** End **********#

    def _calc_attr_gain_(self, row, data):
        '''Conditional entropy of the target given one attribute

        Args:
            row - int number of samples
            data - list[[]] 2-column list [[attribute value, target class]], e.g.
                [ ['0-10', 'y1=Yes' ],
                  ['30-60', 'y2=No' ],
                  ...
                  ['30-60', 'y12=Yes' ] ]
        Returns:
            clf - float conditional entropy
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        dict_ = {}  # attribute value -> [number of Yes, number of No]
        for i in range(row):
            if data[i][0] not in dict_:
                dict_[data[i][0]] = [0.0, 0.0]
            if data[i][1][-1] == 's':
                dict_[data[i][0]][0] += 1.0
            else:
                dict_[data[i][0]][1] += 1.0
        entropy = 0.0
        for _key in dict_:
            total = dict_[_key][0] + dict_[_key][1]
            p = dict_[_key][0] / total
            # weight each value's entropy by its share of the samples
            entropy += (total / float(row)) * self._calc_bool_gain_(p)
        return entropy
        #********** End **********#

    def _calc_bool_gain_(self, p):
        '''Helper: entropy of a binary random variable

        Args:
            p - float probability of one outcome, in [0, 1]
        Returns:
            clf - float entropy
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        if p == 1 or p == 0:
            return 0.0
        return -(p * math.log(p, 2) + (1 - p) * math.log(1 - p, 2))
        #********** End **********#

    def _get_targ_(self, node):
        '''Class label of a leaf node (majority vote)

        Args:
            node - TreeNode decision-tree node
        Returns:
            clf - string class label: Yes or No
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        yes = 0
        no = 0
        for i in range(1, node.row):
            if node.data[i][-1][-1] == 's':
                yes += 1
            else:
                no += 1
        return 'Yes' if yes > no else 'No'
        #********** End **********#

    def _is_leaf_(self, node):
        '''Whether the node is a leaf

        Args:
            node - TreeNode decision-tree node
        Returns:
            clf - bool True for a leaf, False otherwise
        '''
        # Fill in the code here to complete this task
        #********** Begin **********#
        # only the 'example' and target columns are left: nothing more to split on
        if node.col == 2:
            return True
        # otherwise a leaf is a node whose samples all share the same target
        targ = node.data[-1][-1][-1]
        for i in range(1, node.row):
            if node.data[i][-1][-1] != targ:
                return False
        return True
        #********** End **********#
```
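`_calc_all_gain_`, `_calc_attr_gain_` and `_calc_bool_gain_` compute three quantities. These are the sample entropy H(Y), the conditional entropy H(Y | A), and (through their difference) the information gain. The following is a minimal sketch using `collections.Counter`. The 12-sample split is a hypothetical example: 6 Yes and 6 No overall, with one attribute splitting them into groups of (0 Yes, 2 No), (4 Yes, 0 No) and (2 Yes, 4 No). `entropy` and `information_gain` are illustrative names.

```python
import math
from collections import Counter


def entropy(labels):
    """H(Y) in bits; only classes that actually occur contribute."""
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())


def information_gain(values, labels):
    """H(Y) - H(Y | A) for one attribute A given as a list of values."""
    n = len(labels)
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


values = ["a"] * 2 + ["b"] * 4 + ["c"] * 6
labels = ["No"] * 2 + ["Yes"] * 4 + ["Yes"] * 2 + ["No"] * 4
print(round(entropy(labels), 3))                   # 1.0
print(round(information_gain(values, labels), 3))  # 0.541
```

Only the mixed group "c" contributes to H(Y | A): 6/12 × H(1/3) ≈ 0.459. The gain is therefore about 1 − 0.459 = 0.541 bits.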

Neurons and the perceptron
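The update rule in the perceptron's `fit` is the classic primal perceptron rule: whenever y(w·x + b) ≤ 0, set w ← w + ηyx and b ← b + ηy. The following is a minimal NumPy sketch of that loop on a hypothetical linearly separable toy set (logical AND with labels ±1). It starts from zero weights so the run is deterministic. `train_perceptron` is an illustrative name.

```python
import numpy as np


def train_perceptron(X, y, lr=1.0, max_iter=100):
    """Primal perceptron; returns (w, b, epochs_used)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for epoch in range(1, max_iter + 1):
        errors = 0
        for x_i, y_i in zip(X, y):
            if y_i * (np.dot(w, x_i) + b) <= 0:  # misclassified or on the boundary
                w = w + lr * y_i * x_i
                b = b + lr * y_i
                errors += 1
        if errors == 0:  # a full pass without mistakes: converged
            return w, b, epoch
    return w, b, max_iter


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([-1, -1, -1, 1])
w, b, epochs = train_perceptron(X, y)
pred = np.where(X @ w + b >= 0, 1, -1)
print(pred.tolist())  # [-1, -1, -1, 1]
```

Because the data are linearly separable, the perceptron convergence theorem guarantees the loop stops after finitely many updates. On non-separable data, only `max_iter` ends it.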

#encoding=utf8

import numpy as np

#构建感知机算法

class Perceptron(object):

def __init__(self, learning_rate = 0.01, max_iter = 200):

self.lr = learning_rate

self.max_iter = max_iter

def fit(self, data, label):

'''

input:data(ndarray):训练数据特征

label(ndarray):训练数据标签

output:w(ndarray):训练好的权重

b(ndarry):训练好的偏置

'''

#编写感知机训练方法,w为权重,b为偏置

self.w = np.random.randn(data.shape[1])

self.b = np.random.rand(1)

#********* Begin *********#

for i in range(len(label)):

while label[i]*(np.matmul(self.w,data[i])+self.b)<=0:

self.w = self.w+self.lr*(label[i]*data[i])

self.b = self.b + self.lr*label[i]

#********* End *********#

return None

def predict(self, data):

'''

input:data(ndarray):测试数据特征

'''

#编写感知机预测方法,若是正类返回1,负类返回-1

#********* Begin *********#

predicted = np.matmul(data,self.w)+self.b

for i in range(len(predicted)):

if predicted[i]>=0:

predicted[i] = 1

else:

predicted[i] = -1

return predicted

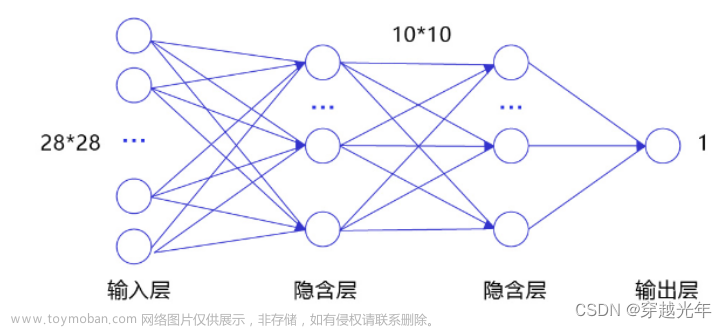

#********* End *********#反向传播算法

```python
#encoding=utf8
import numpy as np
from math import sqrt


# Train a BP neural network with one hidden layer
def bp_train(feature, label, n_hidden, maxcycle, alpha, n_output):
    '''
    Train the network with batch gradient descent
    input: feature(mat): features
           label(mat): labels
           n_hidden(int): number of hidden-layer nodes
           maxcycle(int): maximum number of iterations
           alpha(float): learning rate
           n_output(int): number of output-layer nodes
    output: w0(mat): weights between the input and hidden layers
            b0(mat): biases between the input and hidden layers
            w1(mat): weights between the hidden and output layers
            b1(mat): biases between the hidden and output layers
    '''
    m, n = np.shape(feature)
    # Initialization: uniform in [-r, r] with r = 4*sqrt(6)/sqrt(fan_in + fan_out)
    # (np.asmatrix instead of np.mat, which was removed in NumPy 2.0)
    r0 = 4.0 * sqrt(6) / sqrt(n + n_hidden)
    w0 = np.asmatrix(np.random.rand(n, n_hidden)) * 2 * r0 - r0
    b0 = np.asmatrix(np.random.rand(1, n_hidden)) * 2 * r0 - r0
    r1 = 4.0 * sqrt(6) / sqrt(n_hidden + n_output)
    w1 = np.asmatrix(np.random.rand(n_hidden, n_output)) * 2 * r1 - r1
    b1 = np.asmatrix(np.random.rand(1, n_output)) * 2 * r1 - r1

    # Training
    i = 0
    while i <= maxcycle:
        #********* Begin *********#
        # forward pass
        hidden_input = hidden_in(feature, w0, b0)       # hidden-layer input
        hidden_output = hidden_out(hidden_input)        # hidden-layer output
        output_in = predict_in(hidden_output, w1, b1)   # output-layer input
        output_out = predict_out(output_in)             # output-layer output
        # backward pass
        # error term (residual) of the output layer
        delta_output = -np.multiply((label - output_out), partial_sig(output_in))
        # error term (residual) of the hidden layer
        delta_hidden = np.multiply((delta_output * w1.T), partial_sig(hidden_input))
        # update weights and biases
        w1 = w1 - alpha * (hidden_output.T * delta_output)
        b1 = b1 - alpha * np.sum(delta_output, axis=0) * (1.0 / m)
        w0 = w0 - alpha * (feature.T * delta_hidden)
        b0 = b0 - alpha * np.sum(delta_hidden, axis=0) * (1.0 / m)
        #********* End *********#
        i += 1
    return w0, w1, b0, b1


# Input of the hidden layer: feature * w0 + b0 (b0 is broadcast over the rows)
def hidden_in(feature, w0, b0):
    return feature * w0 + b0


# Output of the hidden layer
def hidden_out(hidden_in):
    return sig(hidden_in)


# Input of the output layer: hidden_out * w1 + b1 (b1 is broadcast over the rows)
def predict_in(hidden_out, w1, b1):
    return hidden_out * w1 + b1


# Output of the output layer
def predict_out(predict_in):
    return sig(predict_in)


# Sigmoid function
def sig(x):
    return 1.0 / (1 + np.exp(-x))


# Derivative of the sigmoid: sig(x) * (1 - sig(x)), element-wise
def partial_sig(x):
    s = sig(x)
    return np.multiply(s, 1 - s)
```
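The two `delta` lines in `bp_train` are the gradients of the squared error 0.5 * sum((label - output)^2) with respect to the layer pre-activations. As a result, `hidden_output.T*delta_output` and `feature.T*delta_hidden` should match finite-difference estimates of the gradients with respect to w1 and w0. The following is a minimal NumPy sketch of that check. It uses plain ndarrays with the `@` operator instead of `np.matrix`, and the layer sizes and random data are arbitrary choices.

```python
import numpy as np


def sig(x):
    return 1.0 / (1.0 + np.exp(-x))


def forward(X, w0, b0, w1, b1):
    hidden_input = X @ w0 + b0
    hidden_output = sig(hidden_input)
    output_in = hidden_output @ w1 + b1
    return hidden_input, hidden_output, output_in, sig(output_in)


def loss(X, Y, w0, b0, w1, b1):
    """Squared error 0.5 * sum((Y - output)^2) over all samples."""
    return 0.5 * np.sum((Y - forward(X, w0, b0, w1, b1)[3]) ** 2)


def backprop_weight_grads(X, Y, w0, b0, w1, b1):
    """Analytic gradients with the same delta formulas as bp_train."""
    hidden_input, hidden_output, output_in, out = forward(X, w0, b0, w1, b1)
    delta_output = -(Y - out) * sig(output_in) * (1 - sig(output_in))
    delta_hidden = (delta_output @ w1.T) * sig(hidden_input) * (1 - sig(hidden_input))
    return X.T @ delta_hidden, hidden_output.T @ delta_output  # dL/dw0, dL/dw1


def numeric_grad(f, w, eps=1e-5):
    """Central differences; w is perturbed in place and restored."""
    grad = np.zeros_like(w)
    for idx in np.ndindex(w.shape):
        old = w[idx]
        w[idx] = old + eps
        f_plus = f()
        w[idx] = old - eps
        f_minus = f()
        w[idx] = old
        grad[idx] = (f_plus - f_minus) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
X, Y = rng.random((5, 3)), rng.random((5, 2))
w0, b0 = rng.normal(size=(3, 4)), rng.normal(size=(1, 4))
w1, b1 = rng.normal(size=(4, 2)), rng.normal(size=(1, 2))
g_w0, g_w1 = backprop_weight_grads(X, Y, w0, b0, w1, b1)


def f():
    return loss(X, Y, w0, b0, w1, b1)


print(np.max(np.abs(g_w0 - numeric_grad(f, w0))))  # close to 0 (finite-difference error only)
print(np.max(np.abs(g_w1 - numeric_grad(f, w1))))
```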

Perceptron in practice
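The following is a minimal, self-contained sketch of the same `Perceptron(eta0=0.8, max_iter=1000)` call. It runs on a small hypothetical in-memory dataset instead of the platform's CSV files, which makes it handy for checking the public `sklearn.linear_model` import locally. `random_state=0` is added only to make the shuffling repeatable.

```python
import numpy as np
from sklearn.linear_model import Perceptron

# Two well-separated clusters, labels 0 and 1
X = np.array([[-2.0, -1.0], [-1.0, -2.0], [-1.5, -1.5],
              [2.0, 1.0], [1.0, 2.0], [1.5, 1.5]])
y = np.array([0, 0, 0, 1, 1, 1])

clf = Perceptron(eta0=0.8, max_iter=1000, random_state=0)
clf.fit(X, y)
print(clf.predict(X))
print(clf.score(X, y))  # training accuracy
```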

```python
#encoding=utf8
import os
from sklearn.linear_model import Perceptron
import pandas as pd

if os.path.exists('./step2/result.csv'):
    os.remove('./step2/result.csv')

#********* Begin *********#
train_data = pd.read_csv('./step2/train_data.csv')
train_label = pd.read_csv('./step2/train_label.csv')['target']
test_data = pd.read_csv('./step2/test_data.csv')

clf = Perceptron(eta0=0.8, max_iter=1000)
clf.fit(train_data, train_label)
result = clf.predict(test_data)

write_res = pd.DataFrame({'result': result})
write_res.to_csv('./step2/result.csv', index=False)
#********* End *********#
```

Cats vs. Dogs

It is normal if this one fails: it is a problem with the platform, and submitting several times may get it to pass.

```python
from keras.layers import Dense, Activation, Flatten, Dropout, Conv2D, MaxPooling2D
import keras
import os
import numpy as np
import cv2

# Fix the random seed
np.random.seed(1447)

IMAGE_HEIGHT = 128
IMAGE_WIDTH = 128


def get_train_data(data_path):
    '''
    Read and preprocess the data
    :return: the processed images and their one-hot labels
    '''
    images = []
    onehot = np.zeros((500, 2))  # sized for the 500 training images
    #********* Begin *********#
    for i, img_name in enumerate(os.listdir(data_path)):
        # the label comes from the file name: column 0 = cat, otherwise column 1 = dog
        if 'cat' in img_name:
            onehot[i, 0] = 1
        else:
            onehot[i, 1] = 1
        img = cv2.imread(os.path.join(data_path, img_name))
        # cv2.resize takes dsize as (width, height)
        img = cv2.resize(img, (IMAGE_WIDTH, IMAGE_HEIGHT))
        img = img / 255.0  # scale pixels to [0, 1]
        images.append(img)
    #********* End *********#
    return np.array(images), onehot


def build_model():
    '''
    Build the model
    :return: the constructed model
    '''
    model = keras.Sequential()
    #********* Begin *********#
    # two convolution + max-pooling stages
    model.add(Conv2D(32, kernel_size=3, activation='relu', input_shape=[IMAGE_HEIGHT, IMAGE_WIDTH, 3]))
    model.add(MaxPooling2D(pool_size=2))
    model.add(Conv2D(32, kernel_size=3, activation='relu'))
    model.add(MaxPooling2D(pool_size=2))
    # classifier head: one hidden dense layer, then a 2-way softmax (cat / dog)
    model.add(Flatten())
    model.add(Dense(96, activation='relu'))
    model.add(Dense(2, activation='softmax'))
    #********* End *********#
    return model


def fit_and_predict(model, train_images, onehot, test_images):
    '''
    Train the model and predict the test images
    :param model: the model to train
    :param train_images: training images
    :param onehot: one-hot labels of the training set
    :param test_images: test images
    :return: predicted class indices (0 = cat, 1 = dog)
    '''
    #********* Begin *********#
    # compile the model
    model.compile(loss='categorical_crossentropy', optimizer=keras.optimizers.Adam(lr=0.0001), metrics=['accuracy'])
    #********* End *********#
    model.fit(train_images, onehot, epochs=20, batch_size=32, verbose=0)
    result = model.predict(test_images, batch_size=10)
    predict_idx = np.argmax(result, axis=1)
    return predict_idx
```
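As a sanity check on `build_model`, the feature-map sizes and parameter counts can be worked out by hand. A 3×3 convolution with the default `'valid'` padding shrinks each side by 2, and a 2×2 max-pool halves it, rounding down. The following pure-Python sketch does that arithmetic for the 128×128×3 input. The totals should agree with what `model.summary()` reports for this architecture, and the helper names are illustrative.

```python
def conv_valid(size, kernel=3, stride=1):
    """Output side length of a convolution with 'valid' padding."""
    return (size - kernel) // stride + 1


def max_pool(size, pool=2):
    """Output side length of non-overlapping max pooling (stride = pool size)."""
    return (size - pool) // pool + 1


def cnn_summary(height=128, width=128, channels=3, filters=32, dense=96, classes=2):
    params = []
    h, w, c = height, width, channels
    for _ in range(2):  # two Conv2D(3x3) + MaxPooling2D(2) stages
        params.append(3 * 3 * c * filters + filters)  # kernel weights + biases
        h, w, c = conv_valid(h), conv_valid(w), filters
        h, w = max_pool(h), max_pool(w)
    flat = h * w * c
    params.append(flat * dense + dense)       # Dense(96)
    params.append(dense * classes + classes)  # Dense(2, softmax)
    return (h, w, c), flat, params


shape, flat, params = cnn_summary()
print(shape, flat)          # (30, 30, 32) 28800
print(params, sum(params))  # [896, 9248, 2764896, 194] 2775234
```

About 2.76 million of the roughly 2.78 million parameters sit in the first `Dense` layer. This is why the 128×128 input makes this small network comparatively heavy.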

This concludes the article on the Artificial Intelligence training platform (人工智能-实训平台) exercises.