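Contents

1. Environment overview

2. Configuring keepalived

3. Testing

1. Testing load balancing

2. Testing RS high availability

3. Testing LVS high availability

3.1 Testing failure of the LVS master

3.2 Testing recovery of the LVS master

4. Errors I ran into during the experiment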

1. Environment overview

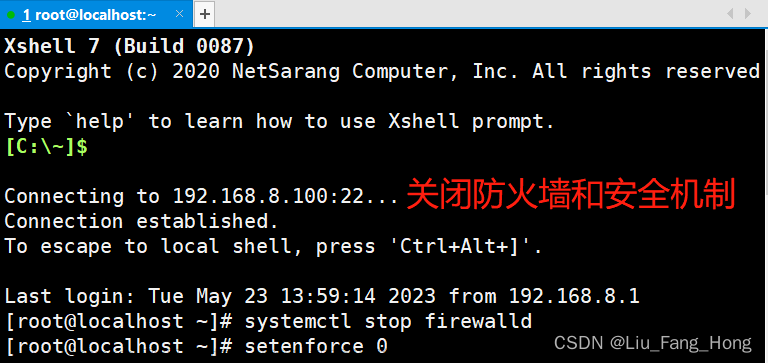

Environment: CentOS 7

RS1 --- RIP1: 192.168.163.145, VIP: 192.168.163.200

RS2 --- RIP2: 192.168.163.146, VIP: 192.168.163.200

LVS_MASTER --- DIP: 192.168.163.144, VIP: 192.168.163.200

LVS_BACKUP --- DIP: 192.168.163.150, VIP: 192.168.163.200

CLIENT --- 192.168.163.151
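In DR mode the director forwards a request by rewriting only the destination MAC address, so the LVS servers and the real servers have to sit on the same layer-2 segment. A quick sanity check on the address plan above is that every director, RS and VIP address falls in one subnet. The sketch below assumes a /24 (matching the 255.255.255.0 netmask in the ifconfig output later on); sharing an IP subnet is only a proxy for sharing a layer-2 segment, and `HOSTS` and `outside_subnet` are hypothetical names.

```python
import ipaddress

# Addresses taken from the environment list above (the client is not required to share the subnet).
HOSTS = {
    "LVS_MASTER": "192.168.163.144",
    "LVS_BACKUP": "192.168.163.150",
    "RS1": "192.168.163.145",
    "RS2": "192.168.163.146",
    "VIP": "192.168.163.200",
}

# Assumption: a /24, matching "netmask 255.255.255.0" in the ifconfig output.
SUBNET = ipaddress.ip_network("192.168.163.0/24")


def outside_subnet(hosts, subnet):
    """Return the names of hosts whose address is not inside subnet."""
    return [name for name, addr in hosts.items()
            if ipaddress.ip_address(addr) not in subnet]


if __name__ == "__main__":
    problems = outside_subnet(HOSTS, SUBNET)
    print(problems or f"all addresses are in {SUBNET}")
```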

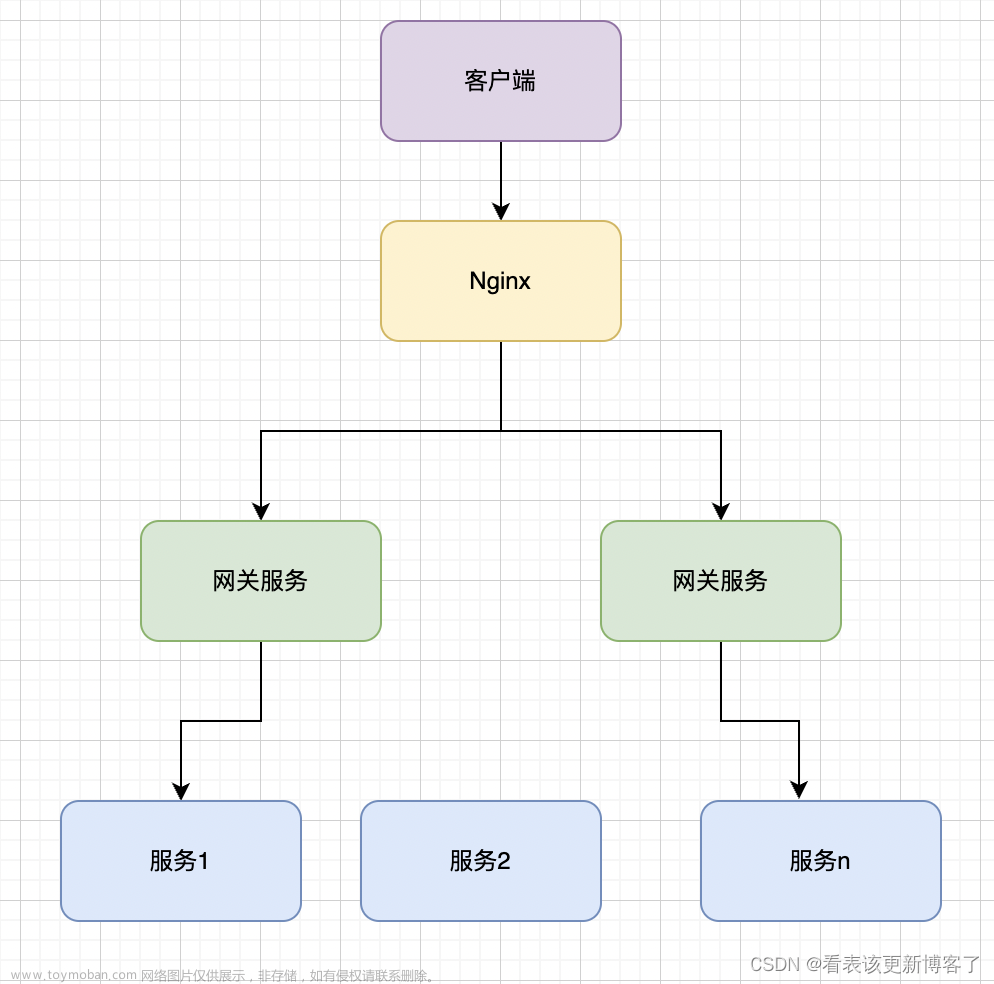

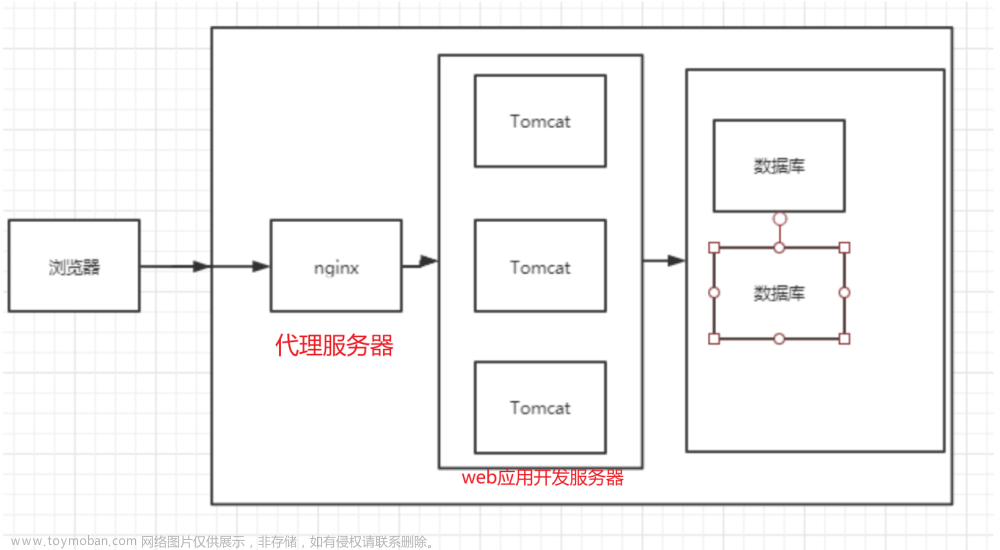

I use LVS in DR mode for load balancing; for details, see http://t.csdn.cn/iiU4s

ipvsadm was already installed and configured in that article.

2. Configuring keepalived

Now install keepalived on both LVS servers:

yum install keepalived -y

After the installation finishes, you will find a configuration file in the /etc/keepalived directory:

[root@lvs-backup ~]# cd /etc/keepalived/

[root@lvs-backup keepalived]# ll

total 4

-rw-r--r--. 1 root root 1376 Aug 31 12:12 keepalived.conf

Its contents are as follows (annotated):

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

# The settings above can be left alone; focus on and modify the settings below

vrrp_instance VI_1 {

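state MASTER # marks this LVS server as the master; set it according to your LVS server plan. Valid values: MASTER and BACKUP

interface eth0 # the NIC on which this LVS server provides service; change it to match the actual NIC

virtual_router_id 51 # ID of the VRRP instance this LVS service belongs to; no need to change it for now, but it must be the same on master and backup

priority 100 # priority of this LVS server; the master has the highest, and the backup must be lower than the master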

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

# virtual_ipaddress binds the VIP to a NIC on the LVS server; this usually needs to be modified

# Example: 192.168.116.134/24 dev ens33 label ens33:9

virtual_ipaddress {

192.168.200.16

192.168.200.17

192.168.200.18

}

}

# Defines the LVS service, equivalent to ipvsadm -A -t 192.168.116.134:80 -s rr; this usually needs to be modified

virtual_server 192.168.200.100 443 {

delay_loop 6

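lb_algo rr # LVS scheduling algorithm; round robin by default

lb_kind NAT # LVS forwarding mode; can be changed to DR

persistence_timeout 50 # how long the same client keeps being sent to the RS that served its first request; set it to 0 here so the round robin is visible

protocol TCP # TCP protocol

# Defines a real server (RS), equivalent to ipvsadm -a -t 192.168.116.134:80 -r 192.168.116.131 -g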

real_server 192.168.201.100 443 {

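weight 1 # weight of this RS

SSL_GET { # SSL_GET health check; usually changed to HTTP_GET

# One of the two url blocks can be deleted; in the remaining one, use path / and status_code 200, and delete digest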

url {

path /

digest ff20ad2481f97b1754ef3e12ecd3a9cc

}

url {

path /mrtg/

digest 9b3a0c85a887a256d6939da88aabd8cd

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# The configuration below defines two more LVS services, with the same meaning as the one above. If you don't need them, you can delete all of it

virtual_server 10.10.10.2 1358 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

sorry_server 192.168.200.200 1358

real_server 192.168.200.2 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.200.3 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334c

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334c

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

virtual_server 10.10.10.3 1358 {

delay_loop 3

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.200.4 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.200.5 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

On both machines, change the settings we need:

LVS_MASTER

[root@lvs ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 200

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.163.200/24 brd 192.168.163.255 dev ens33 label ens33:200

}

}

virtual_server 192.168.163.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 0

protocol TCP

real_server 192.168.163.145 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.163.146 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

LVS_BACKUP

[root@lvs-backup keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 180

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.163.200/24 brd 192.168.163.255 dev ens33 label ens33:200

}

}

virtual_server 192.168.163.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 0

protocol TCP

real_server 192.168.163.145 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.163.146 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
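The comments in the stock file describe `virtual_server` as the equivalent of `ipvsadm -A` and `real_server` as the equivalent of `ipvsadm -a`. To make that mapping concrete, the sketch below renders the ipvsadm commands that correspond to the `virtual_server 192.168.163.200 80` block above. The `VirtualServer`/`RealServer` classes and `to_ipvsadm` are hypothetical names; only TCP (`-t`) and persistence_timeout 0 (no `-p`) are handled. keepalived programs the IPVS table itself, so the output is meant for comparison with `ipvsadm -ln`, not for running alongside keepalived.

```python
from dataclasses import dataclass, field
from typing import List

# keepalived lb_kind -> ipvsadm packet-forwarding flag
FORWARD_FLAG = {"DR": "-g", "NAT": "-m", "TUN": "-i"}


@dataclass
class RealServer:
    ip: str
    port: int
    weight: int = 1


@dataclass
class VirtualServer:
    vip: str
    port: int
    lb_algo: str = "rr"
    lb_kind: str = "DR"
    real_servers: List[RealServer] = field(default_factory=list)


def to_ipvsadm(vs):
    """Return ipvsadm commands that build the same table as this virtual_server block."""
    service = f"{vs.vip}:{vs.port}"
    cmds = [f"ipvsadm -A -t {service} -s {vs.lb_algo}"]
    flag = FORWARD_FLAG[vs.lb_kind]
    for rs in vs.real_servers:
        cmds.append(f"ipvsadm -a -t {service} -r {rs.ip}:{rs.port} {flag} -w {rs.weight}")
    return cmds


if __name__ == "__main__":
    vs = VirtualServer("192.168.163.200", 80, real_servers=[
        RealServer("192.168.163.145", 80),
        RealServer("192.168.163.146", 80),
    ])
    for cmd in to_ipvsadm(vs):
        print(cmd)
```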

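Note: the master's priority must be higher than the backup's.

Start the keepalived service on both servers:

[root@lvs ~]# systemctl restart keepalived

After these steps, run ifconfig on both the LVS master and the backup server; you can see that the VIP is bound to the master: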
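Which node actually holds the VIP is decided by a VRRP election; keepalived treats the `state` line only as the initial state. Among the routers still sending advertisements, the one with the highest priority becomes master, and on equal priority the one with the higher primary IP address wins. A minimal sketch of that rule, using a hypothetical `elect_master` function and (name, priority, primary IP) tuples taken from the two configs above:

```python
import ipaddress


def elect_master(routers):
    """Pick the VRRP master among live routers given as (name, priority, primary_ip).

    Highest priority wins; a tie is broken by the higher primary IPv4 address.
    Returns None when no router is alive.
    """
    if not routers:
        return None
    winner = max(routers, key=lambda r: (r[1], ipaddress.ip_address(r[2])))
    return winner[0]


if __name__ == "__main__":
    both = [("lvs", 200, "192.168.163.144"), ("lvs-backup", 180, "192.168.163.150")]
    print(elect_master(both))      # master is up: it wins on priority
    print(elect_master(both[1:]))  # master is down: the backup takes over
```

With keepalived's default preemption, the same rule explains why the master (priority 200) takes the VIP back once it returns.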

[root@lvs ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.163.144 netmask 255.255.255.0 broadcast 192.168.163.255

inet6 fe80::491f:4a6e:f34:a1b9 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:a3:4f:a2 txqueuelen 1000 (Ethernet)

RX packets 156094 bytes 70487425 (67.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 195001 bytes 16040484 (15.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:200: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.163.200 netmask 255.255.255.0 broadcast 192.168.163.255

ether 00:0c:29:a3:4f:a2 txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 331 bytes 28808 (28.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 331 bytes 28808 (28.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@lvs ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.163.200:80 rr

-> 192.168.163.145:80 Route 1 0 0

-> 192.168.163.146:80 Route 1 0 0
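When repeating the tests below, it is handy to compare `ipvsadm -ln` output before and after a failure instead of reading it by eye. The sketch below parses that text format into a dict of virtual service to real servers; `parse_ipvsadm` is a hypothetical helper, and the regular expressions only cover IPv4 TCP/UDP entries like the ones shown above.

```python
import re

SERVICE_RE = re.compile(r"^(TCP|UDP)\s+(\S+)\s+(\S+)")
REAL_RE = re.compile(r"^\s*->\s+(\d+\.\d+\.\d+\.\d+:\d+)\s+(\S+)\s+(\d+)\s+(\d+)\s+(\d+)")


def parse_ipvsadm(text):
    """Map 'PROTO VIP:port' -> [(rip:port, forward, weight), ...] from `ipvsadm -ln` output."""
    table = {}
    current = None
    for line in text.splitlines():
        m = SERVICE_RE.match(line)
        if m:
            current = f"{m.group(1)} {m.group(2)}"
            table[current] = []
            continue
        m = REAL_RE.match(line)  # the "-> RemoteAddress:Port" header has no IP, so it is skipped
        if m and current is not None:
            table[current].append((m.group(1), m.group(2), int(m.group(3))))
    return table


SAMPLE = """IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.163.200:80 rr
  -> 192.168.163.145:80           Route   1      0          0
  -> 192.168.163.146:80           Route   1      0          0
"""

if __name__ == "__main__":
    print(parse_ipvsadm(SAMPLE))
```

After a real server fails its health check, keepalived by default deletes its entry, so the corresponding tuple disappears from the parsed result.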

3. Testing

1. Testing load balancing

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.145 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.145 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .
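The alternating responses come from `lb_algo rr` combined with `persistence_timeout 0`: each new connection goes to the next real server in turn. A toy model of the ipvs `rr` scheduler is sketched below; the `RoundRobin` class is hypothetical, it treats every listed server equally (the real scheduler skips servers with weight 0, and weighted rotation is what `wrr` is for), and `remove`/`add` stand in for what the health checker does in the next test.

```python
class RoundRobin:
    """Toy model of the ipvs 'rr' scheduler: hand out servers in turn."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._next = 0

    def pick(self):
        if not self.servers:
            raise RuntimeError("no real servers available")
        server = self.servers[self._next % len(self.servers)]
        self._next = (self._next + 1) % len(self.servers)
        return server

    def remove(self, server):
        # Roughly what happens when a health check fails: the RS leaves the rotation.
        self.servers.remove(server)
        self._next = self._next % len(self.servers) if self.servers else 0

    def add(self, server):
        # Roughly what happens when the RS passes its health check again.
        if server not in self.servers:
            self.servers.append(server)


if __name__ == "__main__":
    rr = RoundRobin(["192.168.163.145", "192.168.163.146"])
    print([rr.pick() for _ in range(4)])
```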

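2. Testing RS high availability

Shut down one RS (you can temporarily take its NIC down with ifconfig <NIC name> down), then keep sending requests from the client and check whether the service is still reachable: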

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .

[root@client ~]# curl 192.168.163.200

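web1 test, ip is 192.168.163.146 .

The client can still access the service, but only RS2 is responding. This shows that keepalived detected that RS1 was unhealthy and removed it.

Now start RS1 again and keep sending requests from the client. As the responses below show, keepalived detected that RS1 had recovered and added it back to the server list.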
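This behavior relies on the HTTP_GET checker configured earlier: keepalived requests /index.html on each real server and expects status 200, with connect_timeout, nb_get_retry and delay_before_retry controlling how quickly a dead server is dropped. The sketch below models that retry loop in plain Python; it is not keepalived's code, `make_probe` and `http_check` are hypothetical names, and treating `retries` as the total number of attempts is an assumption about how nb_get_retry is counted.

```python
import time
import urllib.error
import urllib.request


def make_probe(url, timeout=3.0):
    """Build a probe that GETs url and returns the HTTP status code.

    Connection failures and timeouts surface as OSError (URLError subclasses it).
    """
    def probe():
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                return resp.status
        except urllib.error.HTTPError as err:
            # Non-2xx answers raise HTTPError; report the code instead of failing.
            return err.code
    return probe


def http_check(probe, expected_status=200, retries=3,
               delay_before_retry=3.0, sleep=time.sleep):
    """Return True once probe() yields expected_status, False after `retries` failed attempts."""
    for attempt in range(retries):
        try:
            if probe() == expected_status:
                return True
        except OSError:
            pass  # connection refused, host unreachable, or timeout
        if attempt < retries - 1:
            sleep(delay_before_retry)
    return False
```

For example, `http_check(make_probe("http://192.168.163.145/index.html"))` would return False within a few retries once RS1's NIC is down, which corresponds to the point where keepalived drops RS1 from the IPVS table.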

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.145 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.145 .

[root@client ~]# curl 192.168.163.200

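web1 test, ip is 192.168.163.146 .

3. Testing LVS high availability

Two tests are carried out here.

3.1 Testing failure of the LVS master

Use ifconfig <NIC name> down to take the master's NIC down, so that the master can no longer serve traffic. Check whether the backup binds the VIP to itself and whether the client can still access the service normally:

Take down the master's NIC:

[root@lvs ~]# ifconfig ens33 down

On the backup, you will see that the VIP has moved over. This is keepalived detecting the master's failure and performing an automatic failover.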

[root@lvs-backup keepalived]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.163.150 netmask 255.255.255.0 broadcast 192.168.163.255

inet6 fe80::94e3:7456:5dc9:ce5d prefixlen 64 scopeid 0x20<link>

inet6 fe80::9aec:8c8f:ee55:a8eb prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:c0:57:db txqueuelen 1000 (Ethernet)

RX packets 43484 bytes 5026535 (4.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 12787 bytes 1188939 (1.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:200: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.163.200 netmask 255.255.255.0 broadcast 192.168.163.255

ether 00:0c:29:c0:57:db txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Test from the client:

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.145 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.145 .

[root@client ~]# curl 192.168.163.200

web1 test, ip is 192.168.163.146 .
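When the master's NIC goes down, its VRRP advertisements stop, and the backup takes over once its master-down timer expires. Assuming VRRP version 2 (keepalived's default), RFC 3768 defines that timer as 3 × advert_int plus a skew of (256 − priority)/256 seconds, so a lower-priority backup waits slightly longer. A small calculation with the backup's values from above (advert_int 1, priority 180); the function name is hypothetical:

```python
def master_down_interval(advert_int, backup_priority):
    """VRRPv2 (RFC 3768): seconds a backup waits without advertisements before becoming master."""
    skew_time = (256 - backup_priority) / 256
    return 3 * advert_int + skew_time


if __name__ == "__main__":
    # LVS_BACKUP: advert_int 1, priority 180
    print(master_down_interval(1, 180))  # 3.296875
```

So in this lab the VIP should move to the backup roughly 3.3 seconds after the master stops advertising.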

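3.2 Testing recovery of the LVS master

Once the test above passes, bring the master's NIC back up so that it can serve again, and check whether the VIP moves back to the master.

Bring the master's NIC back up: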

[root@lvs ~]# ifconfig ens33 up

After the master's NIC comes back up, the VIP is bound to the master again, and the backup no longer carries it:

Article source: https://www.toymoban.com/news/detail-689941.html

[root@lvs ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.163.144 netmask 255.255.255.0 broadcast 192.168.163.255

inet6 fe80::491f:4a6e:f34:a1b9 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:a3:4f:a2 txqueuelen 1000 (Ethernet)

RX packets 157697 bytes 70649781 (67.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 200310 bytes 16401598 (15.6 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:200: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.163.200 netmask 255.255.255.0 broadcast 192.168.163.255

ether 00:0c:29:a3:4f:a2 txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 331 bytes 28808 (28.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 331 bytes 28808 (28.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@lvs-backup keepalived]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.163.150 netmask 255.255.255.0 broadcast 192.168.163.255

inet6 fe80::94e3:7456:5dc9:ce5d prefixlen 64 scopeid 0x20<link>

inet6 fe80::9aec:8c8f:ee55:a8eb prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:c0:57:db txqueuelen 1000 (Ethernet)

RX packets 43995 bytes 5081851 (4.8 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 13240 bytes 1226592 (1.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
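Checking both outputs by hand works, but the real question is which hosts carry the VIP: exactly one is healthy, while two would indicate split brain (both nodes believe they are master). A minimal sketch that answers this from saved ifconfig output; `ipv4_addresses` and `vip_holders` are hypothetical helpers, and the regex only reads IPv4 `inet` lines.

```python
import re

# Matches "inet 1.2.3.4" but not "inet6 ..." lines.
INET_RE = re.compile(r"^[ \t]*inet[ \t]+(\d+\.\d+\.\d+\.\d+)", re.MULTILINE)


def ipv4_addresses(ifconfig_text):
    """Return every IPv4 address listed on an 'inet' line of ifconfig output."""
    return set(INET_RE.findall(ifconfig_text))


def vip_holders(outputs, vip):
    """outputs: {hostname: ifconfig text}. Return the sorted hostnames carrying the VIP."""
    return sorted(host for host, text in outputs.items()
                  if vip in ipv4_addresses(text))
```

Applied to the two outputs above, it would report only `lvs` as holding 192.168.163.200.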

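4. Errors I ran into during the experiment

During testing, I found that even though the VIP was bound to the server and the ipvs module listed connection entries, I still could not connect through the VIP. The cause was vrrp_strict in the global_defs section of keepalived.conf, which is enabled in the default file; after I commented it out, connections worked. vrrp_strict enforces strict VRRP protocol compliance, and with it enabled keepalived commonly also installs firewall rules that drop traffic addressed to the VIP, which would explain this behavior.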
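Since the same change has to be made on both LVS servers, a small idempotent edit helps avoid missing one. The sketch below comments out any active `vrrp_strict` line in the text of keepalived.conf and leaves everything else untouched; `disable_vrrp_strict` is a hypothetical helper, and after writing the result back you would still restart keepalived on each node as shown earlier.

```python
import re

# An active vrrp_strict line: optional indentation, the keyword, nothing else.
STRICT_RE = re.compile(r"^(\s*)vrrp_strict\s*$")


def disable_vrrp_strict(conf_text):
    """Return conf_text with every active 'vrrp_strict' line commented out."""
    out = []
    for line in conf_text.splitlines(keepends=True):
        body = line.rstrip("\r\n")
        m = STRICT_RE.match(body)
        if m:
            # Keep the indentation and the original line ending.
            line = f"{m.group(1)}#vrrp_strict{line[len(body):]}"
        out.append(line)
    return "".join(out)


if __name__ == "__main__":
    sample = "global_defs {\n    vrrp_skip_check_adv_addr\n    vrrp_strict\n}\n"
    print(disable_vrrp_strict(sample))
```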

That concludes this article on high-availability, high-performance load balancing with LVS + Keepalived.