点云配准

将点云数据统一到一个世界坐标系的过程称之为点云配准或者点云拼接。(registration/align)

点云配准的过程其实就是找到同名点对;即找到在点云中处在真实世界同一位置的点。

常见的点云配准算法:

ICP、Color ICP、Trimed-ICP 算法流程:

- 选点:

确定参与到配准过程中的点集。 - 匹配确定同名点对:

ICP以两片点云中欧式空间距离最小的点对为同名点 - 非线性优化求解:

采用SVD或者四元数求解变换 - 变换:

将求解的变换参数应用到待配准点云上 - 迭代:

计算此时的状态参数判断配准是否完成。以前后两次参数迭代变

化的差或者RMSE值是否小于给定阈值为迭代终止条件。否则返回(1)

ICP 算法以两片点云中欧式空间距离最小的点对为同名点,如果初始点选择不合适,可能会陷入局部最优配准失败。

基于点特征的配准方法

两种方式:

一种通过特征描述,先分割出场景里的点线等特征,利用这些点进行同名点的匹配,如基于几何空间一致性筛选同名点对。

一种通过点特征描述符确定同名点,如基于FPFH双向最近邻确定同名点对,FPFH描述向量最大的特性是对于点云的同名点的特征向量表现出相似性,即该点云的同名点之间的FPFH特征描述子的二范数趋于零。

测试用例

基于icp的点云匹配(参考)

- 点到点的配准

import open3d as o3d

import numpy as np

# 获取示例数据

source_cloud = o3d.io.read_point_cloud("./data/cloud_bin_0.pcd")

target_cloud = o3d.io.read_point_cloud("./data/cloud_bin_1.pcd")

source_cloud.paint_uniform_color([1, 0.706, 0])

target_cloud.paint_uniform_color([0, 0.651, 0.929])

threshold = 0.02# RMSE残差阈值,小于该残差阈值,迭代终止

#初始位姿

trans_init = np.asarray([[0.862, 0.011, -0.507, 0.5],

[-0.139, 0.967, -0.215, 0.7],

[0.487, 0.255, 0.835, -1.4], [0.0, 0.0, 0.0, 1.0]])

# 显示未配准点云

o3d.visualization.draw_geometries([source_cloud, target_cloud],

zoom=0.4459,

front=[0.9288, -0.2951, -0.2242],

lookat=[1.6784, 2.0612, 1.4451],

up=[-0.3402, -0.9189, -0.1996])

# 点到点的ICP

result = o3d.pipelines.registration.registration_icp(

source_cloud, target_cloud, threshold,trans_init,

o3d.pipelines.registration.TransformationEstimationPointToPoint())

print(result)

print("Transformation is:")

print(result.transformation)

# 显示点到点的配准结果

source_cloud.transform(result.transformation)

o3d.visualization.draw_geometries([source_cloud, target_cloud],

zoom=0.4459,

front=[0.9288, -0.2951, -0.2242],

lookat=[1.6784, 2.0612, 1.4451],

up=[-0.3402, -0.9189, -0.1996])

配准前:

配准结果:

- 点到面的配准

import open3d as o3d

import numpy as np

# 获取示例数据

source_cloud = o3d.io.read_point_cloud("./data/cloud_bin_0.pcd")

target_cloud = o3d.io.read_point_cloud("./data/cloud_bin_1.pcd")

source_cloud.paint_uniform_color([1, 0.706, 0])

target_cloud.paint_uniform_color([0, 0.651, 0.929])

threshold = 0.02# RMSE残差阈值,小于该残差阈值,迭代终止

#初始位姿

trans_init = np.asarray([[0.862, 0.011, -0.507, 0.5],

[-0.139, 0.967, -0.215, 0.7],

[0.487, 0.255, 0.835, -1.4], [0.0, 0.0, 0.0, 1.0]])

source_cloud = o3d.io.read_point_cloud("./data/cloud_bin_0.pcd")

target_cloud = o3d.io.read_point_cloud("./data/cloud_bin_1.pcd")

source_cloud.paint_uniform_color([1, 0.706, 0])

target_cloud.paint_uniform_color([0, 0.651, 0.929])

# 显示未配准点云

o3d.visualization.draw_geometries([source_cloud, target_cloud],

zoom=0.4459,

front=[0.9288, -0.2951, -0.2242],

lookat=[1.6784, 2.0612, 1.4451],

up=[-0.3402, -0.9189, -0.1996])

# 点到面的ICP

result = o3d.pipelines.registration.registration_icp(

source_cloud, target_cloud, threshold,trans_init,

o3d.pipelines.registration.TransformationEstimationPointToPlane())

print(result)

print("Transformation is:")

print(result.transformation, "\n")

# 显示点到面的配准结果

source_cloud.transform(result.transformation)

o3d.visualization.draw_geometries([source_cloud, target_cloud],

zoom=0.4459,

front=[0.9288, -0.2951, -0.2242],

lookat=[1.6784, 2.0612, 1.4451],

up=[-0.3402, -0.9189, -0.1996])

配准结果如图:

基于Color ICP的点云匹配 参考

import open3d as o3d

import numpy as np

import copy

def draw_registration_result_original_color(source, target, transformation):

source_temp = copy.deepcopy(source)

source_temp.transform(transformation)

o3d.visualization.draw_geometries([source_temp, target],

zoom=0.5,

front=[-0.2458, -0.8088, 0.5342],

lookat=[1.7745, 2.2305, 0.9787],

up=[0.3109, -0.5878, -0.7468])

print("1. Load two point clouds and show initial pose")

demo_colored_icp_pcds = o3d.data.DemoColoredICPPointClouds()

source = o3d.io.read_point_cloud(demo_colored_icp_pcds.paths[0])

target = o3d.io.read_point_cloud(demo_colored_icp_pcds.paths[1])

# draw initial alignment

current_transformation = np.identity(4)

draw_registration_result_original_color(source, target, current_transformation)

# point to plane ICP

current_transformation = np.identity(4)

print("2. Point-to-plane ICP registration is applied on original point")

print(" clouds to refine the alignment. Distance threshold 0.02.")

result_icp = o3d.pipelines.registration.registration_icp(

source, target, 0.02, current_transformation,

o3d.pipelines.registration.TransformationEstimationPointToPlane())

print(result_icp)

draw_registration_result_original_color(source, target,

result_icp.transformation)

# colored pointcloud registration

# This is implementation of following paper

# J. Park, Q.-Y. Zhou, V. Koltun,

# Colored Point Cloud Registration Revisited, ICCV 2017

voxel_radius = [0.04, 0.02, 0.01]

max_iter = [50, 30, 14]

current_transformation = np.identity(4)

print("3. Colored point cloud registration")

for scale in range(3):

iter = max_iter[scale]

radius = voxel_radius[scale]

print([iter, radius, scale])

print("3-1. Downsample with a voxel size %.2f" % radius)

source_down = source.voxel_down_sample(radius)

target_down = target.voxel_down_sample(radius)

print("3-2. Estimate normal.")

source_down.estimate_normals(

o3d.geometry.KDTreeSearchParamHybrid(radius=radius * 2, max_nn=30))

target_down.estimate_normals(

o3d.geometry.KDTreeSearchParamHybrid(radius=radius * 2, max_nn=30))

print("3-3. Applying colored point cloud registration")

result_icp = o3d.pipelines.registration.registration_colored_icp(

source_down, target_down, radius, current_transformation,

o3d.pipelines.registration.TransformationEstimationForColoredICP(),

o3d.pipelines.registration.ICPConvergenceCriteria(relative_fitness=1e-6,

relative_rmse=1e-6,

max_iteration=iter))

current_transformation = result_icp.transformation

print(result_icp)

draw_registration_result_original_color(source, target,

result_icp.transformation)

配准前:

基于点到平面icp的配准:

基于color icp的配准结果: 文章来源:https://www.toymoban.com/news/detail-690676.html

文章来源:https://www.toymoban.com/news/detail-690676.html

基于fpfh特征的点云匹配

import numpy as np

import copy

import open3d as o3d

def draw_registration_result(source, target, transformation):

source_temp = copy.deepcopy(source)

target_temp = copy.deepcopy(target)

source_temp.paint_uniform_color([1, 0.706, 0])

target_temp.paint_uniform_color([0, 0.651, 0.929])

source_temp.transform(transformation)

o3d.visualization.draw_geometries([source_temp, target_temp],

zoom=0.4559,

front=[0.6452, -0.3036, -0.7011],

lookat=[1.9892, 2.0208, 1.8945],

up=[-0.2779, -0.9482, 0.1556])

def preprocess_point_cloud(pcd, voxel_size):

print(":: Downsample with a voxel size %.3f." % voxel_size)

pcd_down = pcd.voxel_down_sample(voxel_size)

radius_normal = voxel_size * 2

print(":: Estimate normal with search radius %.3f." % radius_normal)

pcd_down.estimate_normals(

o3d.geometry.KDTreeSearchParamHybrid(radius=radius_normal, max_nn=30))

radius_feature = voxel_size * 5

print(":: Compute FPFH feature with search radius %.3f." % radius_feature)

pcd_fpfh = o3d.pipelines.registration.compute_fpfh_feature(

pcd_down,

o3d.geometry.KDTreeSearchParamHybrid(radius=radius_feature, max_nn=100))

return pcd_down, pcd_fpfh

def prepare_dataset(voxel_size):

print(":: Load two point clouds and disturb initial pose.")

demo_icp_pcds = o3d.data.DemoICPPointClouds()

source = o3d.io.read_point_cloud(demo_icp_pcds.paths[0])

target = o3d.io.read_point_cloud(demo_icp_pcds.paths[1])

trans_init = np.asarray([[0.0, 0.0, 1.0, 0.0], [1.0, 0.0, 0.0, 0.0],

[0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]])

source.transform(trans_init)

draw_registration_result(source, target, np.identity(4))

source_down, source_fpfh = preprocess_point_cloud(source, voxel_size)

target_down, target_fpfh = preprocess_point_cloud(target, voxel_size)

return source, target, source_down, target_down, source_fpfh, target_fpfh

def execute_global_registration(source_down, target_down, source_fpfh,

target_fpfh, voxel_size):

distance_threshold = voxel_size * 1.5

print(":: RANSAC registration on downsampled point clouds.")

print(" Since the downsampling voxel size is %.3f," % voxel_size)

print(" we use a liberal distance threshold %.3f." % distance_threshold)

result = o3d.pipelines.registration.registration_ransac_based_on_feature_matching(

source_down, target_down, source_fpfh, target_fpfh, True,

distance_threshold,

o3d.pipelines.registration.TransformationEstimationPointToPoint(False),

3, [

o3d.pipelines.registration.CorrespondenceCheckerBasedOnEdgeLength(

0.9),

o3d.pipelines.registration.CorrespondenceCheckerBasedOnDistance(

distance_threshold)

], o3d.pipelines.registration.RANSACConvergenceCriteria(100000, 0.999))

return result

voxel_size = 0.05 # means 5cm for this dataset

source, target, source_down, target_down, source_fpfh, target_fpfh = prepare_dataset(

voxel_size)

result_ransac = execute_global_registration(source_down, target_down,

source_fpfh, target_fpfh,

voxel_size)

print(result_ransac)

draw_registration_result(source_down, target_down, result_ransac.transformation)

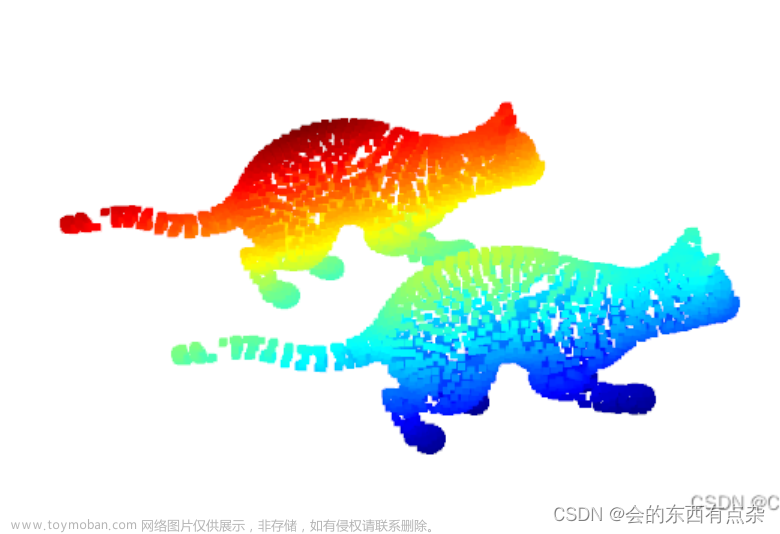

匹配前:

匹配结果: 文章来源地址https://www.toymoban.com/news/detail-690676.html

文章来源地址https://www.toymoban.com/news/detail-690676.html

到了这里,关于基于Open3D的点云处理16-特征点匹配的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!