JavaScript动态渲染界面爬取-Selenium实战

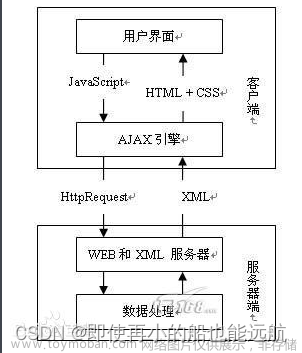

爬取的网页为:https://spa2.scrape.center,里面的内容都是通过Ajax渲染出来的,在分析xhr时候发现url里面有token参数,所有我们使用selenium自动化工具来爬取JavaScript渲染的界面。文章来源地址https://www.toymoban.com/news/detail-691614.html

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.common.exceptions import TimeoutException, NoSuchElementException

from selenium.webdriver.support.ui import WebDriverWait

import logging

from selenium.webdriver.support import expected_conditions

import re

import json

from os import makedirs

from os.path import exists

# 配置日志

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s: %(message)s')

# 基本url

url = "https://spa2.scrape.center/page/{page}"

# selenium初始化

browser = webdriver.Chrome()

# 显式等待初始化

wait = WebDriverWait(browser, 10)

book_url = list()

# 目录设置

RESULTS_DIR = 'results'

exists(RESULTS_DIR) or makedirs(RESULTS_DIR)

# 任意异常

class ScraperError(Exception):

pass

# 获取书本URL

def PageDetail(URL):

browser.get(URL)

try:

all_element = wait.until(expected_conditions.presence_of_all_elements_located((By.CSS_SELECTOR, ".el-card .name")))

return all_element

except TimeoutException:

logging.info("Time error happen in %s while finding the href", URL)

# 获取书本信息

def GetDetail(book_list):

try:

for book in book_list:

browser.get(book)

URL = browser.current_url

book_name = wait.until(expected_conditions.presence_of_element_located((By.CLASS_NAME, "m-b-sm"))).text

categories = [elements.text for elements in wait.until(expected_conditions.presence_of_all_elements_located((By.CSS_SELECTOR, ".categories button span")))]

content = wait.until(expected_conditions.presence_of_element_located((By.CSS_SELECTOR, ".item .drama p[data-v-f7128f80]"))).text

detail = {

"URL": URL,

"book_name": book_name,

"categories": categories,

"content": content

}

SaveDetail(detail)

except TimeoutException:

logging.info("Time error happen in %s while finding the book detail", browser.current_url)

# JSON文件保存

def SaveDetail(detail):

cleaned_name = re.sub(r'[\/:*?"<>|]', '_', detail.get("book_name"))

detail["book_name"] = cleaned_name

data_path = f'{RESULTS_DIR}/{cleaned_name}.json'

logging.info("Saving Book %s...", cleaned_name)

try:

json.dump(detail, open(data_path, 'w', encoding='utf-8'),

ensure_ascii=False, indent=2)

logging.info("Saving Book %s over", cleaned_name)

except ScraperError as e:

logging.info("Some error happen in %s while saving the book detail", cleaned_name)

# 主函数

def main():

try:

for page in range(1, 11):

for each_page in PageDetail(url.format(page= page)):

book_url.append(each_page.get_attribute("href"))

GetDetail(book_url)

except ScraperError as e:

logging.info("An abnormal position has occurred")

finally:

browser.close()

if __name__ == "__main__":

main()

文章来源:https://www.toymoban.com/news/detail-691614.html

到了这里,关于【爬虫】7.2. JavaScript动态渲染界面爬取-Selenium实战的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!