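Preface:

This article gives a brief walkthrough of scraping web page data with a crawler, using the listings on a cat trading website as the target.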

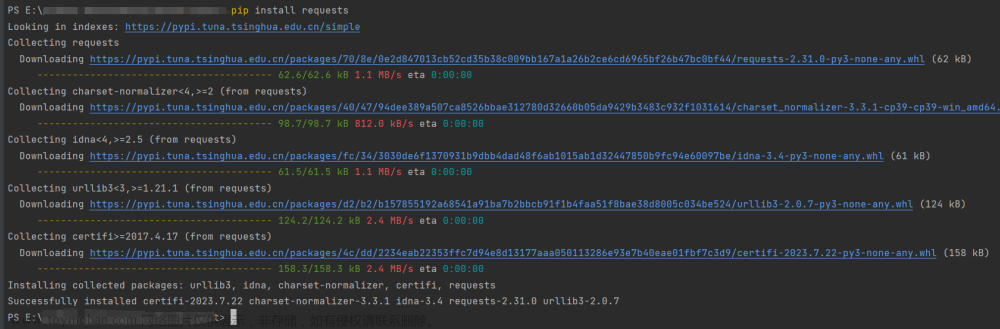

1: Environment Setup

Python version: 3.7.3

IDE: PyCharm

Required libraries: requests, parsel
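Before running the script, it can help to confirm that both third-party libraries are importable. The following is a minimal sketch using only the standard library; the helper name `missing_packages` is hypothetical and not part of the original script.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level package names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# The two third-party libraries this article relies on.
missing = missing_packages(['requests', 'parsel'])
if missing:
    print('Missing libraries, install with: pip install ' + ' '.join(missing))
else:
    print('All required libraries are available.')
```

If anything is reported missing, installing it with pip inside the PyCharm project interpreter should be enough.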

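2: The Web Page

We need to collect the following fields:

'地区' (region), '店名' (shop name), '标题' (title), '价格' (price), '浏览次数' (view count), '卖家承诺' (seller's promise), '在售只数' (number for sale),

'年龄' (age), '品种' (breed), '预防' (vaccination), '联系人' (contact person), '联系方式' (contact details), '异地运费' (out-of-town shipping fee), '是否纯种' (purebred or not),

'猫咪性别' (cat's sex), '驱虫情况' (deworming status), '能否视频' (video call available), '详情页' (detail page)

Article source: https://www.toymoban.com/news/detail-694633.html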
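One way to keep these column names consistent across a script is to hold them in a single list and pair each scraped value with its name in order. This is a sketch only; `FIELDS` and `make_record` are hypothetical names that do not appear in the original code.

```python
# Column names, in the order listed in this section.
FIELDS = [
    '地区', '店名', '标题', '价格', '浏览次数', '卖家承诺', '在售只数',
    '年龄', '品种', '预防', '联系人', '联系方式', '异地运费', '是否纯种',
    '猫咪性别', '驱虫情况', '能否视频', '详情页',
]


def make_record(values):
    """Pair one value with each field name, in order.

    Raises ValueError if the number of values does not match the number
    of fields, so a missing selector result is noticed early.
    """
    values = list(values)
    if len(values) != len(FIELDS):
        raise ValueError(
            'expected %d values, got %d' % (len(FIELDS), len(values))
        )
    return dict(zip(FIELDS, values))
```

Keeping the names in one place means the same list can later serve as a CSV header row.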

3: Implementation

# -*- coding: utf-8 -*-
# @Time : 2023/9/3 23:03
# @Author : HYT
# @File : 猫
# @Project : 爬虫教程
import requests
import parsel
import csv  # imported by the original script; not used in this listing

# Listing page to crawl
url = 'http://www.maomijiaoyi.com/index.php?/list_0_78_0_0_0_0.html'
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.90 Safari/537.36'
}
response = requests.get(url=url, headers=headers)
selector = parsel.Selector(response.text)
# Relative detail-page links and the region text shown for each listing
href = selector.css('div.content:nth-child(1) a::attr(href)').getall()
areas = selector.css('div.content:nth-child(1) a .area span.color_333::text').getall()
areas = [i.strip() for i in areas]
zip_data = zip(href, areas)
for index in zip_data:
    # e.g. http://www.maomijiaoyi.com/index.php?/chanpinxiangqing_546549.html
    index_url = 'http://www.maomijiaoyi.com' + index[0]
    response_1 = requests.get(url=index_url, headers=headers)
    selector_1 = parsel.Selector(response_1.text)
    # get(default='') avoids an AttributeError from .strip()/.replace()
    # when a selector matches nothing on the page.
    area = index[1]  # region
    shop = selector_1.css('.dinming::text').get(default='').strip()  # shop name
    title = selector_1.css('.detail_text .title::text').get(default='').strip()  # title
    price = selector_1.css('span.red.size_24::text').get()  # price
    views = selector_1.css('.info1 span:nth-child(4)::text').get()  # view count
    promise = selector_1.css('.info1 div:nth-child(2) span::text').get(default='').replace('卖家承诺: ', '')  # seller's promise
    sale = selector_1.css('.info2 div:nth-child(1) div.red::text').get()  # number for sale
    age = selector_1.css('.info2 div:nth-child(2) div.red::text').get()  # age
    breed = selector_1.css('.info2 div:nth-child(3) div.red::text').get()  # breed
    safe = selector_1.css('.info2 div:nth-child(4) div.red::text').get()  # vaccination
    people = selector_1.css('div.detail_text .user_info div:nth-child(1) .c333::text').get()  # contact person
    phone = selector_1.css('div.detail_text .user_info div:nth-child(2) .c333::text').get()  # contact details
    fare = selector_1.css('div.detail_text .user_info div:nth-child(3) .c333::text').get(default='').strip()  # out-of-town shipping fee
    purebred = selector_1.css(
        '.xinxi_neirong div:nth-child(1) .item_neirong div:nth-child(1) .c333::text').get(default='').strip()  # purebred or not
    sex = selector_1.css(
        '.xinxi_neirong div:nth-child(1) .item_neirong div:nth-child(4) .c333::text').get(default='').strip()  # cat's sex
    worming = selector_1.css(
        '.xinxi_neirong div:nth-child(2) .item_neirong div:nth-child(2) .c333::text').get(default='').strip()  # deworming status
    video = selector_1.css(
        '.xinxi_neirong div:nth-child(2) .item_neirong div:nth-child(4) .c333::text').get(default='').strip()  # video call available
    # One record per listing, keyed by the column names from section 2
    dit = {
        '地区': area,
        '店名': shop,
        '标题': title,
        '价格': price,
        '浏览次数': views,
        '卖家承诺': promise,
        '在售只数': sale,
        '年龄': age,
        '品种': breed,
        '预防': safe,
        '联系人': people,
        '联系方式': phone,
        '异地运费': fare,
        '是否纯种': purebred,
        '猫咪性别': sex,
        '驱虫情况': worming,
        '能否视频': video,
        '详情页': index_url,
    }
    print(area, shop, title, price, views, promise, sale, age, breed,
          safe, people, phone, fare, purebred, sex, worming, video, index_url, sep=' | ')

4: Results
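The script imports `csv` and builds one dictionary per listing, but as shown it only prints each row. A minimal sketch of how such dictionaries could be written out with `csv.DictWriter` follows. The function name `write_rows` and the sample rows are hypothetical placeholders, not data from the site, and an in-memory buffer stands in for a real file.

```python
import csv
import io


def write_rows(rows, fileobj):
    """Write dicts that share the same keys as CSV, with a header row.

    Returns the number of data rows written.
    """
    rows = list(rows)
    if not rows:
        return 0
    # Column order follows the key order of the first dictionary.
    writer = csv.DictWriter(fileobj, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return len(rows)


# Made-up rows using three of the column names, for illustration only.
sample_rows = [
    {'地区': 'A', '店名': 'shop-1', '价格': '100'},
    {'地区': 'B', '店名': 'shop-2', '价格': '200'},
]
buffer = io.StringIO()
count = write_rows(sample_rows, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

To write to disk instead, the buffer could be replaced by a file opened with `open(path, 'w', newline='', encoding='utf-8-sig')`, where `path` is whatever file name you choose; the `utf-8-sig` encoding is a common choice so that spreadsheet programs display the Chinese headers correctly.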
