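Overview: KubeKey is an efficient, open-source cluster deployment tool from the KubeSphere community. It uses Docker as the container runtime by default and can also work with other CRI runtimes such as containerd, CRI-O, and iSula. The etcd cluster runs independently and can be deployed separately from Kubernetes, which makes environment deployment more flexible. Source: https://www.toymoban.com/news/detail-695313.html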

I. Prepare a KubeKey virtual machine

CPU: 4 cores; memory: 8 GB; system disk: 100 GB

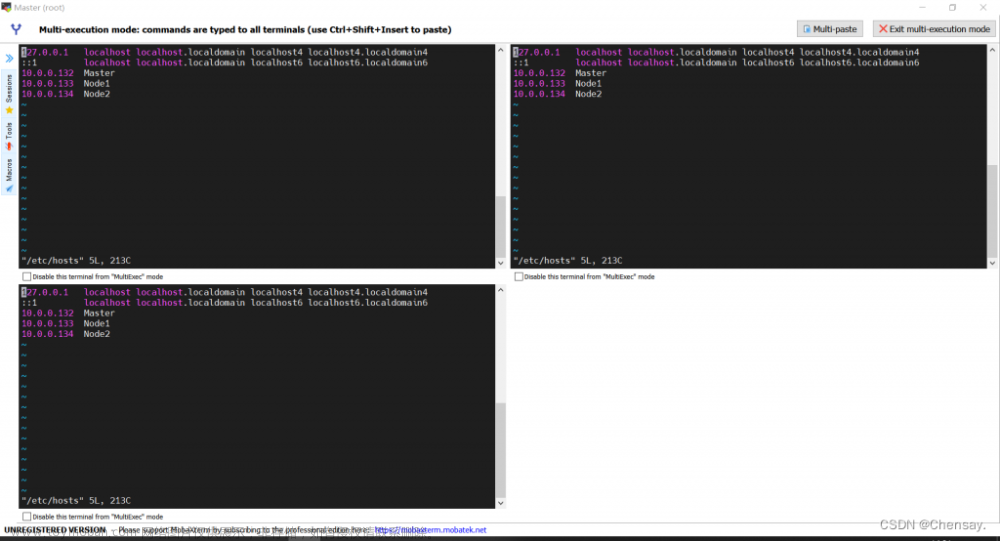

Three Kubernetes hosts:

- 192.168.5.240 master
- 192.168.5.227 node1
- 192.168.5.126 node2

II. Preparation

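1. Disable SELinux and firewalld.
2. Install socat and conntrack.
3. Set the environment variable `export KKZONE=cn`, which makes KubeKey download its components from mirrors in China.
4. Download KubeKey: https://github.com/kubesphere/kubekey/releases/download/v3.0.2/kubekey-v3.0.2-linux-amd64.tar.gz
5. Add /etc/hosts entries for the hosts, and set up passwordless SSH login, including to the KubeKey machine itself.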
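Steps 1 and 2 can be scripted. The following is a minimal sketch that assumes CentOS/RHEL-style nodes with `yum` and systemd. The package name `conntrack-tools` (which provides the `conntrack` binary there) is an assumption. The function names are hypothetical and are not part of KubeKey.

```bash
# Hypothetical helpers for the node preparation steps (not part of KubeKey).

# Set SELINUX=disabled in an SELinux config file (default: /etc/selinux/config).
# The change applies after a reboot; `setenforce 0` switches to permissive right away.
disable_selinux_in_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Print each named command that is not on PATH; return 1 if any is missing.
missing_deps() {
  local cmd rc=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      rc=1
    fi
  done
  return "$rc"
}

# Run the preparation steps on one node (assumes yum + systemd; run as root).
prepare_node() {
  setenforce 0 2>/dev/null || true
  disable_selinux_in_config /etc/selinux/config
  systemctl disable --now firewalld
  yum install -y socat conntrack-tools   # assumed package names on CentOS/RHEL
  missing_deps socat conntrack
}
```

Run `prepare_node` on every host, then `export KKZONE=cn` in the shell that will run `kk`.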

III. Deploy KubeKey

Extract KubeKey (the file name must match the v3.0.2 release downloaded above):

```shell
tar -zxvf kubekey-v3.0.2-linux-amd64.tar.gz
```
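You can avoid a mismatch between the file you download and the file you extract by deriving both from one version string. This sketch assumes that release assets keep the `kubekey-<version>-linux-<arch>.tar.gz` naming used by the v3.0.2 link above. `kk_tarball`, `kk_release_url`, and `fetch_kk` are hypothetical helper names.

```bash
# Release tarball name for a KubeKey version and CPU architecture (default amd64).
kk_tarball() {
  local version="$1" arch="${2:-amd64}"
  echo "kubekey-${version}-linux-${arch}.tar.gz"
}

# Download URL on GitHub, assuming the naming pattern of the v3.0.2 release.
kk_release_url() {
  local version="$1" arch="${2:-amd64}"
  echo "https://github.com/kubesphere/kubekey/releases/download/${version}/$(kk_tarball "$version" "$arch")"
}

# Download and unpack one version (requires curl and tar).
fetch_kk() {
  local version="$1" arch="${2:-amd64}"
  curl -fL -o "$(kk_tarball "$version" "$arch")" "$(kk_release_url "$version" "$arch")"
  tar -zxvf "$(kk_tarball "$version" "$arch")"
}
```

For example, `fetch_kk v3.0.2` fetches and extracts the same release that is used in the rest of this article.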

- Check the KubeKey version:

```
[root@kubekey ~]# ./kk version
kk version: &version.Info{Major:"3", Minor:"0", GitVersion:"v3.0.2", GitCommit:"1c395d22e75528d0a7d07c40e1af4830de265a23", GitTreeState:"clean", BuildDate:"2022-11-22T02:04:26Z", GoVersion:"go1.19.2", Compiler:"gc", Platform:"linux/amd64"}
```

- List the Kubernetes versions supported by this KubeKey release:

```
[root@kubekey ~]# ./kk version --show-supported-k8s
v1.19.0
v1.19.8
v1.19.9
v1.19.15
v1.20.4
v1.20.6
v1.20.10
v1.21.0
v1.21.1
v1.21.2
v1.21.3
v1.21.4
v1.21.5
v1.21.6
v1.21.7
v1.21.8
v1.21.9
v1.21.10
v1.21.11
v1.21.12
v1.21.13
v1.21.14
v1.22.0
v1.22.1
v1.22.2
v1.22.3
v1.22.4
v1.22.5
v1.22.6
v1.22.7
v1.22.8
v1.22.9
v1.22.10
v1.22.11
v1.22.12
v1.22.13
v1.22.14
v1.22.15
v1.22.16
v1.23.0
v1.23.1
v1.23.2
v1.23.3
v1.23.4
v1.23.5
v1.23.6
v1.23.7
v1.23.8
v1.23.9
v1.23.10
v1.23.11
v1.23.12
v1.23.13
v1.23.14
v1.24.0
v1.24.1
v1.24.2
v1.24.3
v1.24.4
v1.24.5
v1.24.6
v1.24.7
v1.24.8
v1.25.0
v1.25.1
v1.25.2
v1.25.3
v1.25.4
```
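Before you create a cluster, you can check that the target version appears in this list. Here is a minimal sketch with hypothetical helpers that read the list from stdin, one version per line, as printed above:

```bash
# Succeed if VERSION appears as a whole line in the list read from stdin.
is_supported_k8s() {
  grep -qxF -- "$1"
}

# Print the highest patch release of a minor line (e.g. v1.25) found on stdin.
# Relies on GNU `sort -V` for version ordering.
latest_patch() {
  awk -v p="$1." 'index($0, p) == 1' | sort -V | tail -n 1
}
```

Usage: `./kk version --show-supported-k8s | is_supported_k8s v1.25.3 && echo supported`.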

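- Create a cluster with KubeKey

For this first cluster we deploy v1.25.3 on the KubeKey machine itself, as an all-in-one cluster. Starting with v1.24, Kubernetes no longer ships dockershim, so Docker Engine cannot be used as the runtime without an extra adapter such as cri-dockerd. We therefore choose containerd:

```
[root@kubekey ~]# ./kk create cluster --with-kubernetes v1.25.3 --container-manager containerd  # sets the runtime; the default is docker
```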
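The runtime choice above can be written as a simple rule: use containerd for v1.24 and later, because dockershim is gone. This sketch covers only that policy, and the helper name is hypothetical. containerd also works with older versions; `docker` is returned for them only because it is KubeKey's default.

```bash
# Choose a --container-manager value for kk from a version like v1.25.3.
pick_container_manager() {
  local version="${1#v}"          # 1.25.3
  local major="${version%%.*}"    # 1
  local rest="${version#*.}"      # 25.3
  local minor="${rest%%.*}"       # 25
  if [ "$major" -gt 1 ] || [ "$minor" -ge 24 ]; then
    echo containerd               # dockershim was removed in v1.24
  else
    echo docker                   # KubeKey's default runtime
  fi
}
```

Usage: `./kk create cluster --with-kubernetes v1.25.3 --container-manager "$(pick_container_manager v1.25.3)"`.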

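Wait for the cluster creation to finish. The log below contains a `[WARN]` about the missing `node-role.kubernetes.io/master` taint, and it is harmless. On v1.25, kubeadm adds only the `node-role.kubernetes.io/control-plane` taint, and KubeKey removes that one on its next attempt (`node/kubekey untainted`).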


```
17:36:51 CST [GreetingsModule] Greetings
17:36:51 CST message: [kubekey]
Greetings, KubeKey!
17:36:51 CST success: [kubekey]
17:36:51 CST [NodePreCheckModule] A pre-check on nodes
17:36:52 CST success: [kubekey]
17:36:52 CST [ConfirmModule] Display confirmation form
+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+
| name    | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time         |
+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+
| kubekey | y    | y    | y       | y        | y     |       |         | y         | y      |        | 1.6.10     | y          |             |                  | CST 17:36:52 |
+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+
This is a simple check of your environment.
Before installation, ensure that your machines meet all requirements specified at
https://github.com/kubesphere/kubekey#requirements-and-recommendations
Continue this installation? [yes/no]: yes
17:36:54 CST success: [LocalHost]
17:36:54 CST [NodeBinariesModule] Download installation binaries
17:36:54 CST message: [localhost]
downloading amd64 kubeadm v1.25.3 ...
17:36:54 CST message: [localhost]
kubeadm is existed
17:36:54 CST message: [localhost]
downloading amd64 kubelet v1.25.3 ...
17:36:55 CST message: [localhost]
kubelet is existed
17:36:55 CST message: [localhost]
downloading amd64 kubectl v1.25.3 ...
17:36:55 CST message: [localhost]
kubectl is existed
17:36:55 CST message: [localhost]
downloading amd64 helm v3.9.0 ...
17:36:56 CST message: [localhost]
helm is existed
17:36:56 CST message: [localhost]
downloading amd64 kubecni v0.9.1 ...
17:36:56 CST message: [localhost]
kubecni is existed
17:36:56 CST message: [localhost]
downloading amd64 crictl v1.24.0 ...
17:36:57 CST message: [localhost]
crictl is existed
17:36:57 CST message: [localhost]
downloading amd64 etcd v3.4.13 ...
17:36:57 CST message: [localhost]
etcd is existed
17:36:57 CST message: [localhost]
downloading amd64 containerd 1.6.4 ...
17:36:58 CST message: [localhost]
containerd is existed
17:36:58 CST message: [localhost]
downloading amd64 runc v1.1.1 ...
17:36:58 CST message: [localhost]
runc is existed
17:36:58 CST success: [LocalHost]
17:36:58 CST [ConfigureOSModule] Get OS release
17:36:58 CST success: [kubekey]
17:36:58 CST [ConfigureOSModule] Prepare to init OS
17:37:00 CST success: [kubekey]
17:37:00 CST [ConfigureOSModule] Generate init os script
17:37:00 CST success: [kubekey]
17:37:00 CST [ConfigureOSModule] Exec init os script
17:37:01 CST stdout: [kubekey]
setenforce: SELinux is disabled
Disabled
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-arptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_local_reserved_ports = 30000-32767
vm.max_map_count = 262144
vm.swappiness = 1
fs.inotify.max_user_instances = 524288
kernel.pid_max = 65535
17:37:01 CST success: [kubekey]
17:37:01 CST [ConfigureOSModule] configure the ntp server for each node
17:37:01 CST skipped: [kubekey]
17:37:01 CST [KubernetesStatusModule] Get kubernetes cluster status
17:37:02 CST success: [kubekey]
17:37:02 CST [InstallContainerModule] Sync containerd binaries
17:37:02 CST skipped: [kubekey]
17:37:02 CST [InstallContainerModule] Sync crictl binaries
17:37:02 CST skipped: [kubekey]
17:37:02 CST [InstallContainerModule] Generate containerd service
17:37:02 CST skipped: [kubekey]
17:37:02 CST [InstallContainerModule] Generate containerd config
17:37:02 CST skipped: [kubekey]
17:37:02 CST [InstallContainerModule] Generate crictl config
17:37:02 CST skipped: [kubekey]
17:37:02 CST [InstallContainerModule] Enable containerd
17:37:02 CST skipped: [kubekey]
17:37:02 CST [PullModule] Start to pull images on all nodes
17:37:02 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.8
17:37:04 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.25.3
17:37:05 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.25.3
17:37:06 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.25.3
17:37:07 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.25.3
17:37:07 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.9.3
17:37:08 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12
17:37:08 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2
17:37:09 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2
17:37:09 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2
17:37:10 CST message: [kubekey]
downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2
17:37:11 CST success: [kubekey]
17:37:11 CST [ETCDPreCheckModule] Get etcd status
17:37:11 CST stdout: [kubekey]
ETCD_NAME=etcd-kubekey
17:37:11 CST success: [kubekey]
17:37:11 CST [CertsModule] Fetch etcd certs
17:37:11 CST success: [kubekey]
17:37:11 CST [CertsModule] Generate etcd Certs
[certs] Using existing ca certificate authority
[certs] Using existing admin-kubekey certificate and key on disk
[certs] Using existing member-kubekey certificate and key on disk
[certs] Using existing node-kubekey certificate and key on disk
17:37:12 CST success: [LocalHost]
17:37:12 CST [CertsModule] Synchronize certs file
17:37:15 CST success: [kubekey]
17:37:15 CST [CertsModule] Synchronize certs file to master
17:37:15 CST skipped: [kubekey]
17:37:15 CST [InstallETCDBinaryModule] Install etcd using binary
17:37:17 CST success: [kubekey]
17:37:17 CST [InstallETCDBinaryModule] Generate etcd service
17:37:17 CST success: [kubekey]
17:37:17 CST [InstallETCDBinaryModule] Generate access address
17:37:17 CST success: [kubekey]
17:37:17 CST [ETCDConfigureModule] Health check on exist etcd
17:37:17 CST success: [kubekey]
17:37:17 CST [ETCDConfigureModule] Generate etcd.env config on new etcd
17:37:17 CST skipped: [kubekey]
17:37:17 CST [ETCDConfigureModule] Join etcd member
17:37:17 CST skipped: [kubekey]
17:37:17 CST [ETCDConfigureModule] Restart etcd
17:37:17 CST skipped: [kubekey]
17:37:17 CST [ETCDConfigureModule] Health check on new etcd
17:37:17 CST skipped: [kubekey]
17:37:17 CST [ETCDConfigureModule] Check etcd member
17:37:17 CST skipped: [kubekey]
17:37:17 CST [ETCDConfigureModule] Refresh etcd.env config on all etcd
17:37:18 CST success: [kubekey]
17:37:18 CST [ETCDConfigureModule] Health check on all etcd
17:37:18 CST success: [kubekey]
17:37:18 CST [ETCDBackupModule] Backup etcd data regularly
17:37:18 CST success: [kubekey]
17:37:18 CST [ETCDBackupModule] Generate backup ETCD service
17:37:18 CST success: [kubekey]
17:37:18 CST [ETCDBackupModule] Generate backup ETCD timer
17:37:19 CST success: [kubekey]
17:37:19 CST [ETCDBackupModule] Enable backup etcd service
17:37:20 CST success: [kubekey]
17:37:20 CST [InstallKubeBinariesModule] Synchronize kubernetes binaries
17:37:32 CST success: [kubekey]
17:37:32 CST [InstallKubeBinariesModule] Synchronize kubelet
17:37:32 CST success: [kubekey]
17:37:32 CST [InstallKubeBinariesModule] Generate kubelet service
17:37:32 CST success: [kubekey]
17:37:32 CST [InstallKubeBinariesModule] Enable kubelet service
17:37:33 CST success: [kubekey]
17:37:33 CST [InstallKubeBinariesModule] Generate kubelet env
17:37:33 CST success: [kubekey]
17:37:33 CST [InitKubernetesModule] Generate kubeadm config
17:37:34 CST success: [kubekey]
17:37:34 CST [InitKubernetesModule] Init cluster using kubeadm
17:37:51 CST stdout: [kubekey]
W1202 17:37:34.445440 18353 common.go:84] your configuration file uses a deprecated API spec: "kubeadm.k8s.io/v1beta2". Please use 'kubeadm config migrate --old-config old.yaml --new-config new.yaml', which will write the new, similar spec using a newer API version.
W1202 17:37:34.446807 18353 common.go:84] your configuration file uses a deprecated API spec: "kubeadm.k8s.io/v1beta2". Please use 'kubeadm config migrate --old-config old.yaml --new-config new.yaml', which will write the new, similar spec using a newer API version.
W1202 17:37:34.451446 18353 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]
[init] Using Kubernetes version: v1.25.3
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubekey kubekey.cluster.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local localhost] and IPs [10.233.0.1 192.168.5.30 127.0.0.1]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] External etcd mode: Skipping etcd/ca certificate authority generation
[certs] External etcd mode: Skipping etcd/server certificate generation
[certs] External etcd mode: Skipping etcd/peer certificate generation
[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation
[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 11.504382 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node kubekey as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node kubekey as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
[bootstrap-token] Using token: 90t423.8ehhtkv8domjs7no
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join lb.kubesphere.local:6443 --token 90t423.8ehhtkv8domjs7no \
    --discovery-token-ca-cert-hash sha256:58329bea84ba4ee8b87682da9fb5b1b8a8ce87ae8a5fdee702315a5fd6f52006 \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lb.kubesphere.local:6443 --token 90t423.8ehhtkv8domjs7no \
    --discovery-token-ca-cert-hash sha256:58329bea84ba4ee8b87682da9fb5b1b8a8ce87ae8a5fdee702315a5fd6f52006
17:37:51 CST success: [kubekey]
17:37:51 CST [InitKubernetesModule] Copy admin.conf to ~/.kube/config
17:37:51 CST success: [kubekey]
17:37:51 CST [InitKubernetesModule] Remove master taint
17:37:51 CST stdout: [kubekey]
error: taint "node-role.kubernetes.io/master:NoSchedule" not found
17:37:51 CST [WARN] Failed to exec command: sudo -E /bin/bash -c "/usr/local/bin/kubectl taint nodes kubekey node-role.kubernetes.io/master=:NoSchedule-"
error: taint "node-role.kubernetes.io/master:NoSchedule" not found: Process exited with status 1
17:37:51 CST stdout: [kubekey]
node/kubekey untainted
17:37:51 CST success: [kubekey]
17:37:51 CST [InitKubernetesModule] Add worker label
17:37:52 CST stdout: [kubekey]
node/kubekey labeled
17:37:52 CST success: [kubekey]
17:37:52 CST [ClusterDNSModule] Generate coredns service
17:37:52 CST success: [kubekey]
17:37:52 CST [ClusterDNSModule] Override coredns service
17:37:52 CST stdout: [kubekey]
service "kube-dns" deleted
17:37:54 CST stdout: [kubekey]
service/coredns created
Warning: resource clusterroles/system:coredns is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
clusterrole.rbac.authorization.k8s.io/system:coredns configured
17:37:54 CST success: [kubekey]
17:37:54 CST [ClusterDNSModule] Generate nodelocaldns
17:37:54 CST success: [kubekey]
17:37:54 CST [ClusterDNSModule] Deploy nodelocaldns
17:37:54 CST stdout: [kubekey]
serviceaccount/nodelocaldns created
daemonset.apps/nodelocaldns created
17:37:54 CST success: [kubekey]
17:37:54 CST [ClusterDNSModule] Generate nodelocaldns configmap
17:37:55 CST success: [kubekey]
17:37:55 CST [ClusterDNSModule] Apply nodelocaldns configmap
17:37:56 CST stdout: [kubekey]
configmap/nodelocaldns created
17:37:56 CST success: [kubekey]
17:37:56 CST [KubernetesStatusModule] Get kubernetes cluster status
17:37:56 CST stdout: [kubekey]
v1.25.3
17:37:56 CST stdout: [kubekey]
kubekey v1.25.3 [map[address:192.168.5.30 type:InternalIP] map[address:kubekey type:Hostname]]
17:37:57 CST stdout: [kubekey]
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key:
8fa0cf5c3cd6b435d507323cc3639c33f9e4931de745018fcd043c0210e2d1d8
17:37:57 CST stdout: [kubekey]
secret/kubeadm-certs patched
17:37:57 CST stdout: [kubekey]
secret/kubeadm-certs patched
17:37:58 CST stdout: [kubekey]
secret/kubeadm-certs patched
17:37:58 CST stdout: [kubekey]
uumdk0.eruq90yy8li1v12m
17:37:58 CST success: [kubekey]
17:37:58 CST [JoinNodesModule] Generate kubeadm config
17:37:58 CST skipped: [kubekey]
17:37:58 CST [JoinNodesModule] Join control-plane node
17:37:58 CST skipped: [kubekey]
17:37:58 CST [JoinNodesModule] Join worker node
17:37:58 CST skipped: [kubekey]
17:37:58 CST [JoinNodesModule] Copy admin.conf to ~/.kube/config
17:37:58 CST skipped: [kubekey]
17:37:58 CST [JoinNodesModule] Remove master taint
17:37:58 CST skipped: [kubekey]
17:37:58 CST [JoinNodesModule] Add worker label to master
17:37:58 CST skipped: [kubekey]
17:37:58 CST [JoinNodesModule] Synchronize kube config to worker
17:37:58 CST skipped: [kubekey]
17:37:58 CST [JoinNodesModule] Add worker label to worker
17:37:58 CST skipped: [kubekey]
17:37:58 CST [DeployNetworkPluginModule] Generate calico
17:37:58 CST success: [kubekey]
17:37:58 CST [DeployNetworkPluginModule] Deploy calico
17:37:59 CST stdout: [kubekey]
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
poddisruptionbudget.policy/calico-kube-controllers created
17:37:59 CST success: [kubekey]
17:37:59 CST [ConfigureKubernetesModule] Configure kubernetes
17:37:59 CST success: [kubekey]
17:37:59 CST [ChownModule] Chown user $HOME/.kube dir
17:38:00 CST success: [kubekey]
17:38:00 CST [AutoRenewCertsModule] Generate k8s certs renew script
17:38:00 CST success: [kubekey]
17:38:00 CST [AutoRenewCertsModule] Generate k8s certs renew service
17:38:00 CST success: [kubekey]
17:38:00 CST [AutoRenewCertsModule] Generate k8s certs renew timer
17:38:01 CST success: [kubekey]
17:38:01 CST [AutoRenewCertsModule] Enable k8s certs renew service
17:38:01 CST success: [kubekey]
17:38:01 CST [SaveKubeConfigModule] Save kube config as a configmap
17:38:01 CST success: [LocalHost]
17:38:01 CST [AddonsModule] Install addons
17:38:01 CST success: [LocalHost]
17:38:01 CST Pipeline[CreateClusterPipeline] execute successfully
Installation is complete.

Please check the result using the command:

        kubectl get pod -A
```

- Check the environment:

```
[root@kubekey ~]# kubectl get pod -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-75c594996d-wtrk9   1/1     Running   0          32s
kube-system   calico-node-48vks                          1/1     Running   0          32s
kube-system   coredns-67ddbf998c-k2fwf                   1/1     Running   0          32s
kube-system   coredns-67ddbf998c-kk6sp                   1/1     Running   0          32s
kube-system   kube-apiserver-kubekey                     1/1     Running   0          49s
kube-system   kube-controller-manager-kubekey            1/1     Running   0          46s
kube-system   kube-proxy-hjn6j                           1/1     Running   0          32s
kube-system   kube-scheduler-kubekey                     1/1     Running   0          46s
kube-system   nodelocaldns-zcrvr                         1/1     Running   0          32s
```
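A script can poll until every pod is ready, so you don't have to rerun `kubectl get pod -A` by hand. This is a sketch with hypothetical function names. It assumes the default column layout shown above (READY in column 3, STATUS in column 4) and treats `Completed` pods as done.

```bash
# Print namespace/name of pods that are not ready, reading `kubectl get pod -A`
# output (including its header line) on stdin.
unready_pods() {
  awk 'NR > 1 {
    split($3, r, "/")
    if ($4 == "Completed") next
    if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2
  }'
}

# Poll every 5 s until all pods are ready; give up after TIMEOUT seconds (default 300)
# or if kubectl itself fails.
wait_for_pods() {
  local timeout="${1:-300}" waited=0 pods
  while :; do
    pods=$(kubectl get pod -A) || return 1
    [ -z "$(printf '%s\n' "$pods" | unready_pods)" ] && return 0
    [ "$waited" -ge "$timeout" ] && return 1
    sleep 5
    waited=$((waited + 5))
  done
}
```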

View the containers:

```
[root@kubekey ~]# crictl ps
I1202 17:46:07.309812 28107 util_unix.go:103] "Using this endpoint is deprecated, please consider using full URL format" endpoint="/run/containerd/containerd.sock" URL="unix:///run/containerd/containerd.sock"
I1202 17:46:07.311257 28107 util_unix.go:103] "Using this endpoint is deprecated, please consider using full URL format" endpoint="/run/containerd/containerd.sock" URL="unix:///run/containerd/containerd.sock"
CONTAINER       IMAGE           CREATED         STATE     NAME                      ATTEMPT   POD ID
6bfd3d45bd034   ec95788d0f725   7 minutes ago   Running   calico-kube-controllers   0         0e3d60df6f9e7
146ef8a1fa933   5185b96f0becf   7 minutes ago   Running   coredns                   0         13edf51da4822
7a34634370e96   5185b96f0becf   7 minutes ago   Running   coredns                   0         85830b1a14ad7
1013cf001bd21   a3447b26d32c7   7 minutes ago   Running   calico-node               0         cbaf5547f890d
92db9c525c99a   beaaf00edd38a   8 minutes ago   Running   kube-proxy                0         30190f94c0c22
ec3526860d5f0   5340ba194ec91   8 minutes ago   Running   node-cache                0         3cca48e41ad39
7a4316c0488e3   6d23ec0e8b87e   8 minutes ago   Running   kube-scheduler            0         e8b22ab1ad2a4
258487c11704d   6039992312758   8 minutes ago   Running   kube-controller-manager   0         edfc8079077bb
5f20bcf73c4e4   0346dbd74bcb9   8 minutes ago   Running   kube-apiserver            0         2974695f93619
```
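The two `I1202 ... Using this endpoint is deprecated` lines mean that crictl is using a bare socket path instead of a `unix://` URL. One way to silence them is to write the endpoints as full URLs. This assumes that crictl reads its default config file `/etc/crictl.yaml` and that containerd listens on `/run/containerd/containerd.sock`, as shown above. Back up any existing file first; the helper name is hypothetical.

```bash
# Write a crictl config whose endpoints use the full unix:// URL form.
# The optional argument overrides the target path (default: /etc/crictl.yaml).
write_crictl_config() {
  local path="${1:-/etc/crictl.yaml}"
  cat > "$path" <<'EOF'
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
EOF
}
```

Usage: `cp /etc/crictl.yaml /etc/crictl.yaml.bak; write_crictl_config; crictl ps`.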

IV. Deploy the Kubernetes cluster

- Edit the config.yaml file:

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha1
kind: Cluster
metadata:
  name: test.com
spec:
  hosts:
  - {name: master, address: 192.168.5.240, internalAddress: 192.168.5.240, privateKeyPath: ~/.ssh/id_rsa}
  - {name: node1, address: 192.168.5.227, internalAddress: 192.168.5.227, privateKeyPath: ~/.ssh/id_rsa}
  - {name: node2, address: 192.168.5.126, internalAddress: 192.168.5.126, privateKeyPath: ~/.ssh/id_rsa}
  roleGroups:
    etcd:
    - master
    control-plane:
    - master
    worker:
    - node1
    - node2
  controlPlaneEndpoint:
    domain: lb.test.com
    address: ""
    port: 6443
  kubernetes:
    version: v1.25.3
    clusterName: test.com
    containerManager: containerd
    DNSDomain: test.com
```
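`./kk create config --with-kubernetes v1.25.3 -f config.yaml` generates a full template for the installed kk version. That template is also a good way to check the `apiVersion` and field names that version expects. If you only need to regenerate the `hosts:` list for a different set of machines, here is a small sketch. The helper is hypothetical, uses the same field layout as above, and assumes `internalAddress` equals `address`.

```bash
# Print the `hosts:` section of a KubeKey config from "name address" lines on stdin.
# The key path is kept literal (single quotes), so kk resolves "~" itself;
# an optional first argument overrides it.
render_kk_hosts() {
  local key='~/.ssh/id_rsa' name addr
  [ -n "${1:-}" ] && key="$1"
  echo "  hosts:"
  while read -r name addr; do
    [ -z "$name" ] && continue
    echo "  - {name: ${name}, address: ${addr}, internalAddress: ${addr}, privateKeyPath: ${key}}"
  done
}
```

Usage: `printf 'master 192.168.5.240\nnode1 192.168.5.227\nnode2 192.168.5.126\n' | render_kk_hosts`.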

- Edit /etc/hosts on the hosts to add name resolution:

```
192.168.5.240 master
192.168.5.227 node1
192.168.5.126 node2
```
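To make this step safe to repeat, you can append an entry only when the name is not already mapped. A minimal sketch (hypothetical helper; run it as root):

```bash
# Append "IP NAME" to a hosts file (default /etc/hosts) unless NAME is already
# mapped on a non-comment line.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0   # already present: leave the file unchanged
  fi
  echo "${ip} ${name}" >> "$file"
}
```

Usage for the three hosts: `for e in '192.168.5.240 master' '192.168.5.227 node1' '192.168.5.126 node2'; do add_host_entry $e; done` (the unquoted `$e` splits into IP and name).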

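- Set up passwordless SSH login to the hosts:

```shell
ssh-copy-id 192.168.5.240
```

Repeat this for all three hosts (192.168.5.240, 192.168.5.227, and 192.168.5.126).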
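The config file points every host at `~/.ssh/id_rsa`, so that key pair must exist on the machine that runs `kk`, and its public key must be installed on all three hosts. Here is a sketch of that loop, assuming root login over SSH. `SSH_USER` and `SSH_COPY_ID` are hypothetical override variables; setting `SSH_COPY_ID=echo` gives a dry run.

```bash
# Create ~/.ssh/id_rsa without a passphrase if it does not exist yet.
ensure_ssh_key() {
  [ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
}

# Copy the public key to each host given as an argument; return 1 if any copy fails.
distribute_ssh_key() {
  local user="${SSH_USER:-root}" host rc=0
  for host in "$@"; do
    ${SSH_COPY_ID:-ssh-copy-id} "${user}@${host}" || rc=1
  done
  return "$rc"
}
```

Usage: `ensure_ssh_key && distribute_ssh_key 192.168.5.240 192.168.5.227 192.168.5.126`.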

- Create the cluster:

```shell
./kk create cluster -f config.yaml
```

- Check the cluster:

```
[root@master ~]# kubectl get node
NAME     STATUS   ROLES           AGE     VERSION
master   Ready    control-plane   2d19h   v1.25.3
node1    Ready    worker          2d19h   v1.25.3
node2    Ready    worker          2d19h   v1.25.3
[root@master ~]#
[root@master ~]# kubectl get po -A
NAMESPACE     NAME                                     READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-57bcc88b-d29ds   1/1     Running   0          2d19h
kube-system   calico-node-4dt5p                        1/1     Running   0          2d19h
kube-system   calico-node-kgmr2                        1/1     Running   0          2d19h
kube-system   calico-node-pdh8x                        1/1     Running   0          2d19h
kube-system   coredns-6d69f479b-2dc2f                  1/1     Running   0          2d19h
kube-system   coredns-6d69f479b-m8qbn                  1/1     Running   0          2d19h
kube-system   kube-apiserver-master                    1/1     Running   0          2d19h
kube-system   kube-controller-manager-master           1/1     Running   0          2d19h
kube-system   kube-proxy-ccsdp                         1/1     Running   0          2d19h
kube-system   kube-proxy-tvzbc                         1/1     Running   0          2d19h
kube-system   kube-proxy-x5fx5                         1/1     Running   0          2d19h
kube-system   kube-scheduler-master                    1/1     Running   0          2d19h
kube-system   nodelocaldns-7p9jb                       1/1     Running   0          2d19h
kube-system   nodelocaldns-qzn8f                       1/1     Running   0          2d19h
kube-system   nodelocaldns-tnwt6                       1/1     Running   0          2d19h
[root@master ~]#
```
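For scripted checks, `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until every node is Ready. If you would rather parse the table shown above, here is a sketch (hypothetical helper) that lists nodes whose STATUS is anything other than `Ready`. Note that a cordoned node (`Ready,SchedulingDisabled`) is also reported.

```bash
# Print names of nodes whose STATUS column (2nd) is not exactly "Ready",
# reading `kubectl get node` output (including its header) on stdin.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get node | not_ready_nodes`.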

V. Deploy metrics-server (on the Kubernetes master node)

Edit metrics-server.yaml as follows:

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:aggregated-metrics-reader
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
- apiGroups: ["metrics.k8s.io"]
  resources: ["pods", "nodes"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
    port: 443
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      hostNetwork: true
      containers:
      - name: metrics-server
        image: eipwork/metrics-server:v0.3.7
        # command:
        # - /metrics-server
        # - --kubelet-insecure-tls
        # - --kubelet-preferred-address-types=InternalIP
        args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-insecure-tls=true
        # node address types are case-sensitive: ExternalDNS, not externalDNS
        - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        imagePullPolicy: Always
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
      nodeSelector:
        # kubernetes.io/os replaces the deprecated beta.kubernetes.io/os label
        kubernetes.io/os: linux
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: 4443
```


- Deploy it:

```
[root@master ~]# kubectl apply -f metrics-server.yaml
```
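`kubectl top` works only after the metrics-server APIService becomes available, which can take a minute after `kubectl apply`. Here is a sketch of a wait loop. It assumes the default `kubectl get apiservice` columns (NAME, SERVICE, AVAILABLE, AGE), and the function names are hypothetical.

```bash
# Succeed only if the AVAILABLE column (3rd) of the first data row is "True",
# reading `kubectl get apiservice <name>` output on stdin.
metrics_api_available() {
  awk 'NR == 2 { ok = ($3 == "True") } END { exit !ok }'
}

# Retry up to TRIES times (default 24, about 2 minutes at 5 s intervals).
wait_for_metrics_api() {
  local tries="${1:-24}"
  until kubectl get apiservice v1beta1.metrics.k8s.io | metrics_api_available; do
    tries=$((tries - 1))
    [ "$tries" -gt 0 ] || return 1
    sleep 5
  done
}
```

Usage: `wait_for_metrics_api && kubectl top node`.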

- Test it by checking resource usage:

```
[root@master ~]# kubectl top node
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master   121m         3%     2691Mi          18%
node1    84m          2%     1515Mi          10%
node2    125m         3%     1512Mi          10%
```
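The same output can drive a simple alert. Here is a sketch (hypothetical helper) that prints nodes whose MEMORY% column exceeds a threshold. With the numbers above, a threshold of 15 would report only `master`.

```bash
# Print nodes whose MEMORY% column (5th) in `kubectl top node` output exceeds LIMIT.
nodes_over_mem() {
  awk -v limit="$1" 'NR > 1 {
    pct = $5
    sub(/%$/, "", pct)          # "18%" -> "18"
    if (pct + 0 > limit + 0) print $1
  }'
}
```

Usage: `kubectl top node | nodes_over_mem 15`.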
