MPDIoU: A Loss for Efficient and Accurate Bounding Box

RegressionMPDIoU:一个有效和准确的边界框损失回归函数

摘要

边界框回归(Bounding box regression, BBR)广泛应用于目标检测和实例分割,是目标定位的重要步骤。然而,当预测框与边界框具有相同的纵横比,但宽度和高度值完全不同时,大多数现有的边界框回归损失函数都无法优化。为了解决上述问题,我们充分挖掘水平矩形的几何特征,提出了一种新的基于最小点距离的边界框相似性比较指标MPDIoU,该指标包含了现有损失函数中考虑的所有相关因素,即重叠或不重叠区域、中心点距离、宽度和高度偏差,同时简化了计算过程。在此基础上,我们提出了基于MPDIoU的边界框回归损失函数,称为LMPDIoU。实验结果表明,MPDIoU损失函数应用于最先进的实例分割(如YOLACT)和基于PASCAL VOC、MS COCO和IIIT5k训练的目标检测(如YOLOv7)模型,其性能优于现有的损失函数。

关键词:目标检测,实例分割,边界框回归,损失函数

1.介绍

目标检测和实例分割是计算机视觉中的两个重要问题,近年来引起了研究者的广泛关注。大多数最先进的目标检测器(如YOLO系列[1,2,3,4,5,6],Mask R-CNN [7],Dynamic R-CNN[8]和DETR[9])依赖于边界框回归(BBR)模块来确定目标的位置。在此基础上,设计良好的损失函数对BBR的成功至关重要。目前,大多数BBR的损失函数可分为两类:基于n范数的损失函数和基于IoU的损失函数。

然而,现有的边界框回归的损失函数在不同的预测结果下具有相同的值,这降低了边界框回归的收敛速度和精度。因此,考虑到现有边界框回归损失函数的优缺点,受水平矩形几何特征的启发,我们尝试设计一种新的基于最小点距离的边界框回归损失函数LMPDIoU

,并将MPDIoU作为边界框回归过程中比较预测边界框与真值边界框相似度的新标准。我们还提供了一个易于实现的解决方案来计算两个轴线对齐的矩形之间的MPDIoU,允许它被用作最先进的对象检测和实例分割算法的评估指标,我们在一些主流的对象检测、场景文本识别和实例分割数据集(如PASCAL VOC[10]、MS COCO[11]、IIIT5k[12]和MTHv2[13])上进行了测试,以验证我们提出的MPDIoU的性能。

本文的贡献可以概括为以下几点:

1.我们考虑了现有基于IoU的损失和n范数损失的优缺点,提出了一种基于最小点距离的IoU损失,称为LMPDIoU

,以解决现有损失的问题,并获得更快的收敛速度和更准确的回归结果。

2.在目标检测、字符级场景文本识别和实例分割任务上进行了大量的实验。出色的实验结果验证了所提出的MPDIoU损失函数的优越性。详细的消融研究显示了不同设置的损失函数和参数值的影响。

2.相关工作

2.1.目标检测和实例分割

在过去的几年里,来自不同国家和地区的研究人员提出了大量基于深度学习的目标检测和实例分割方法。综上所述,在许多具有代表性的目标检测和实例分割框架中,边界框回归已经被作为一个基本组成部分[14]。在目标检测的深度模型中,R-CNN系列[15]、[16]、[17]采用两个或三个边界框回归模块来获得更高的定位精度,而YOLO系列[2、3、6]和SSD系列[18、19、20]采用一个边界框回归模块来实现更快的推理。RepPoints[21]预测几个点来定义一个矩形框。FCOS[22]通过预测采样点到边界框的上、下、左、右的欧氏距离来定位目标。

对于实例分割,PolarMask[23]在n个方向上预测从采样点到物体边缘的n条射线的长度来分割一个实例。还有一些检测器,如RRPN[24]和R2CNN[25],通过旋转角度回归来检测任意方向的物体,用于遥感检测和场景文本检测。Mask R-CNN[7]在Faster R-CNN[15]上增加了一个额外的实例掩码分支,而最近最先进的YOLACT[26]在RetinaNet[27]上做了同样的事情。综上所述,边界框回归是用于目标检测和实例分割的最先进深度模型的关键组成部分。

2.2.场景文本识别

为了解决任意形状的场景文本检测和识别问题,ABCNet[28]及其改进版本ABCNet v2[29]使用BezierAlign将任意形状的文本转换为规则文本。这些方法通过纠错模块将检测和识别统一为端到端的可训练系统,取得了很大的进步。[30]提出了RoI Masking来提取任意形状文本识别的特征。与[30,31]类似,尝试使用更快的检测器进行场景文本检测。AE TextSpotter[32]利用识别结果通过语言模型指导检测。受[33]的启发,[34]提出了一种基于transformer的场景文本识别方法,该方法提供了实例级文本分割结果。

2.3.边界框回归的损失函数

一开始,在边界框回归中广泛使用的是n范数损失函数,它非常简单,但对各种尺度都很敏感。在YOLO v1[35]中,采用平方根w和h来缓解这种影响,而YOLO v3[2]使用2−wh。为了更好地计算真实边界框与预测边界框之间的差异,从Unitbox开始使用IoU loss[36]。为了保证训练的稳定性,Bounded-IoU loss[37]引入了IoU的上界。对于训练对象检测和实例分割的深度模型,基于IoU的度量被认为比ℓn范式更一致[38,37,39]。原始IoU表示预测边界框与真实边界框的相交面积和并集面积之比(如图1(a)所示),可表示为:

图1:现有边界盒回归指标的计算因子包括GIoU、DIoU、CIoU和EIoU

式中,Bgt

为真实边界框,Bprd

为预测边界框。我们可以看到,原来的IoU只计算两个边界框的并集面积,无法区分两个边界框不重叠的情况。如式1所示,如果|Bgt∩Bprd|=0

,则IoU(Bgt, Bprd)=0

。在这种情况下,IoU不能反映两个框是彼此靠近还是彼此很远。于是,提出了GIoU[39]来解决这一问题。GIoU可以表示为:

其中,C

为覆盖Bgt

和Bprd

的最小方框(如图1(a)中黑色虚线框所示),|C|

为方框C

的面积。由于在GIoU损失中引入了惩罚项,在不重叠的情况下,预测方框会向目标方框移动。GIoU损失已被应用于训练最先进的目标检测器,如YOLO v3和Faster R-CNN,并取得了比MSE损失和IoU损失更好的性能。但是,当预测边界框完全被真实边界框覆盖时,GIoU将失去有效性。为了解决这一问题,提出了DIoU[40],考虑了预测边界框与真实边界框之间的质心点距离。DIoU的公式可以表示为:

其中ρ2(Bgt, Bprd)

为预测边界框中心点与真实边界框中心点之间的欧氏距离(如图1(b)中红色虚线所示)。C2

表示最小的封闭矩形的对角线长度(如图1(b)中所示的黑色虚线)。我们可以看到,LDIoU

的目标直接最小化了预测边界框中心点与真实边界框中心点之间的距离。但是,当预测边界框的中心点与真实边界框的中心点重合时,会退化为原始IoU。为了解决这一问题,提出了同时考虑中心点距离和纵横比的CIoU。CIoU的公式可以写成如下:

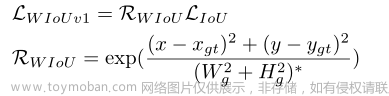

但是,从CIoU中定义的纵横比是相对值,而不是绝对值。针对这一问题,在DIoU的基础上提出了EIoU[41],其定义如下:

然而,如图2所示,当预测的边界框和真实边界框具有相同的宽高比,但宽度和高度值不同时,上述用于边界框回归的损失函数将失去有效性,这将限制收敛速度和精度。因此,考虑到LGIoU

[39]、LDIoU

[40]、LCIoU

[42]、LEIoU

[41]所具有的优点,我们尝试设计一种新的损失函数LMPDIoU

用于边界框回归,同时具有更高的边界框回归效率和精度。

然而,边界框回归的几何性质在现有的损失函数中并没有得到充分的利用。因此,我们提出了MPDIoU损失,通过最小化预测边界框和真实边界框之间的左上和右下点距离,以更好地训练目标检测、字符级场景文本识别和实例分割的深度模型。

图2:具有不同边界框回归结果的两种情况。绿框表示真实边界框,红框表示预测边界框。LGIoU

、LDIoU

、LCIoU

这两种情况的LMPDIoU

值完全相同,但它们的LMPDIoU

3.点距最小的并集交点

在分析了上述基于IoU的损失函数的优缺点后,我们开始思考如何提高边界框回归的精度和效率。一般来说,我们使用左上角和右下角点的坐标来定义一个唯一的矩形。受边界框几何特性的启发,我们设计了一种新的基于IoU的度量,称为MPDIoU,直接最小化预测边界框与真实边界框之间的左上和右下点距离。算法1总结了MPDIoU的计算。

综上所述,我们提出的MPDIoU简化了两个边界框之间的相似性比较,可以适应重叠或非重叠的边界框回归。因此,在2D/3D计算机视觉任务中使用的所有性能测量中,MPDIoU可以作为IoU的适当替代品。在本文中,我们只关注二维目标检测和实例分割,我们可以很容易地将MPDIoU作为度量和损失。扩展到非轴对齐的3D情况是留给未来的工作。

3.1 MPDIoU边界框回损失函数

在训练阶段,模型预测的每个边界框Bprd=[xprd, yprd, wprd,hprd]TBprd=[xprd, yprd, wprd,hprd]T

,通过最小化损失函数,迫使其逼近其真实边界框Bgt=[xgt, ygt, wgt,hgt]T

:

其中Bgt

为真实边界框的集合,Θ

为深度回归模型的参数。L

的典型形式是n-范数,如均方误差(MSE)损失和Smooth-l1

损失[43],在目标检测中被广泛采用[44];行人检测[45,46];场景文本识别[34,47];三维目标检测[48,49];姿态估计[50,51];以及实例分割[52,26]。然而,最近的研究表明,基于n-范数的损失函数与评价度量即IoU(interaction over union)不一致,而是提出了基于IoU的损失函数[53,37,39]。根据上一节MPDIoU的定义,我们定义基于MPDIoU的损失函数如下:

因此,现有的边界框回归损失函数的所有因子都可以由四个点坐标确定。换算公式如下:

其中|C|

表示覆盖Bgt

和Bprd

的最小封闭矩形面积,(xcgt, ycgt)

和(xcprd, ycprd)

分别表示真实边界框和预测边界框中心点的坐标。wgt

和hgt

表示真实边界框的宽度和高度,wprd

和hprd

表示预测边界框的宽度和高度。

由式(10)-式(12)可知,现有损失函数中考虑的所有因素,如不重叠面积、中心点距离、宽度和高度偏差等,均可由左上点和右下点的坐标确定,说明我们提出的LMPDIoU

不仅考虑周到,而且简化了计算过程。

根据定理3.1,如果预测边界框和真实边界框的宽高比相同,则在真实边界框内的预测边界框的LMPDIoU

值低于在真实边界框外的预测框。这一特性保证了边界框回归的准确性,使得预测的边界框具有较少的冗余性。

图3:我们提出的LMPDIoU

的参数

图4:具有相同长宽比但不同宽度和高度的预测边界框和真实边界框示例,其中k>1, k∈R

,其中绿色框为真实边界框,红色框为预测框

定理3.1.我们定义一个真实边界框为Bgt

,两个预测边界框为Bprd1

和Bprd2

。输入图像的宽度和高度分别为w和h。假设Bgt

, Bprd1

和Bprd2

的左上和右下坐标分别为(x1gt,y1gt,x2gt, y2gt)

,(x1prd1,y1prd1, x2prd1, y2prd1)

和(x1prd2,y1prd2, x2prd2, y2prd2)

,则Bgt

, Bprd1

和Bprd2

的宽度和高度可以表示为(wgt=y2gt-y1gt,wgt=x2gt-x1gt)

和(wprd1=y2prd1-y1prd1,wprd=y2prd1-y1prd1

和(wprd2=y2prd2-y1prd2,wprd=y2prd2-y1prd2)

。若wprd1=k*wgt,hprd1=k*hgt

,则wprd2=1k*wgt,hprd2=1k*wgt

,其中k>1

和k∈N*

。

Bgt

,Bprd1

和Bprd2

的中心点都是重叠的。则GIoU(Bgt, Bprd1)=GIoU(Bgt, Bprd2)

,DIoU(Bgt, Bprd1)=DIoU(Bgt, Bprd2)

,CIoU(Bgt, Bprd1)=CIoU(Bgt, Bprd2)

,EIoU(Bgt, Bprd1)=EIoU(Bgt, Bprd2)

,但MPDIoUBgt,Bprd1>MPDIoUBgt, Bprd2

。

考虑真实边界框,Bgt是一个面积大于零的矩形,即Agt>0

. Alg. 2(1)和Alg. 2(6) 中的条件分别保证了预测区域Aprd

和交集区域I

是非负值,即Aprd≥0

和I≥0

,∀Bprd∈R4

。因此联合区域μ>0

;对于任何预测边界框Bprd=x1prd,y1prd,x2prd,y2prd∈R4

。这确保了IoU中的分母对于任何预测值的输出都不会为零。此外,对于Bprd=x1prd,y1prd,x2prd,y2prd∈R4

的任意值,其并集面积总是大于交集面积,即μ≥I

。因此,LMPDIoU

总是有界的,即0≤LMPDIoU≤3,∀Bprd∈R4

。

当IoU=0

时LMPDIoU

的情况:对于MPDIoU

损失,我们有LMPDIoU=1-MPDIoU=1+d12d2+d22d2-IoU

。当Bgt

与Bprd

不重叠,即IoU=0

时,MPDIoU损失可简化为LMPDIoU=1-MPDIoU=1+d12d2+d22d2

。在这种情况下,通过最小化LMPDIoU

,我们实际上最小化了d12d2+d22d2

。这一项是0到1之间的归一化测度,即0≤d12d2+d22d2≤2

。

4.实验结果

我们通过将新的边界框回归损失LMPDIoU入最流行的2D目标检测器和实例分割模型(如YOLO v7[6]和YOLACT[26])来评估我们的边界框回归损失LMPDIoU。为此,我们用LMPDIoU

替换它们的默认回归损失,即我们替换了YOLACT[26]中的l1-smooth

和YOLO v7[6]中的LCIoU

。我们还将基准损失与LGIoU

进行了比较。

4.1 实验设置

实验环境可以概括为:内存为32GB,操作系统为windows 11, CPU为Intel i9-12900k,显卡为NVIDIA Geforce RTX 3090,内存为24GB。为了进行公平的比较,所有的实验都是用PyTorch实现的[54]。

4.2 数据集

我们训练了所有目标检测和实例分割基线,并报告了两个标准基准测试的所有结果,即PASCAL VOC[10]和Microsoft Common Objects in Context (MS COCO 2017)[11]挑战。他们的培训方案和评估的细节将在各自的章节中解释。

PASCAL VOC 2007&2012: PASCAL Visual Object Classes (VOC)[10]基准是用于分类、目标检测和语义分割的最广泛的数据集之一,它包含了大约9963张图像。训练数据集和测试数据集各占50%,其中来自20个预定义类别的目标用水平边界框进行标注。由于用于实例分割的图像规模较小,导致性能较弱,我们只使用MS COCO 2017进行实例分割结果训练。

MS COCO: MS COCO[11]是一个广泛使用的图像字幕、目标检测和实例分割的基准,它包含了来自80个类别的超过50万个带标注目标实例的训练、验证和测试集的20多万张图像。

IIIT5k: IIIT5k[12]是一种流行的带有字符级注释的场景文本识别基准,它包含了从互联网上收集的5000个裁剪过的单词图像。字符类别包括英文字母和数字。有2000张图像用于训练,3000张图像用于测试。

MTHv2: MTHv2[13]是一种流行的带有字符级标注的OCR基准。汉字种类包括简体字和繁体字。它包含了3000多幅中国历史文献图像和100多万汉字。4.3评价指标

在本文中,我们使用了与MS COCO 2018 Challenge[11]相同的性能指标来衡量我们的所有结果,包括针对特定IoU阈值的不同类别标签的平均平均精度(mAP),以确定真阳性和假阳性。我们实验中使用的目标检测的主要性能指标是精度和mAP@0.5:0.95。我们报告IoU阈值的mAP值为0.75,如表中AP75所示。对于实例分割,我们实验中使用的主要性能度量是AP和AR,它是在不同的IoU阈值上平均mAP和mAR,即IoU ={.5,.55,…, .95}

。

所有的目标检测和实例分割基线也使用MS COCO 2017和PASCAL VOC 2007&2012的测试集进行了评估。结果将在下一节中显示。

4.4 目标检测实验结果

训练策略。我们使用了由[6]发布的YOLO v7的原始Darknet实现。对基准结果(使用GIoU loss进行训练),我们在所有实验中选择DarkNet-608作为主干,并使用报告的默认参数和每个基准的迭代次数严格遵循其训练策略。为了使用GIoU, DIoU, CIoU, EIoU和MPDIoU损失来训练YOLO v7,我们只需将边界框回归IoU损失替换为2中解释的LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失。

图5:MS COCO 2017[11]和PASCAL VOC 2007[10]测试集的目标检测结果,使用(从左至右)LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失训练的YOLO v7[6]

表1:使用自身损失LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失训练的YOLO v7[6]的性能比较。结果报告在PASCAL VOC 2007&2012的测试集上

图6:使用LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失在PASCAL VOC 2007和2012[10]上训练YOLO v7[6]时的bbox损失和AP值。

按照原始代码的训练方法,我们在数据集的训练集和验证集上使用每个损失训练YOLOv7[6],最多可达150 epoch。我们将早停机制的patience设置为5,以减少训练时间,保存性能最好的模型。在PASCAL VOC 2007&2012的测试集上对每个损失使用最佳检查点的性能进行了评估。结果见表1。

4.5 字符级场景文本识别的实验结果

训练方法。我们在目标检测实验中使用了类似的训练方案。按照原始代码的训练协议,我们在数据集的训练集和验证集上使用每个损失训练YOLOv7[6],最多30个epoch。

使用IIIT5K[12]和MTHv2[55]的测试集对每次损失使用最佳检查点的性能进行了评估。结果见表2和表3。

图7:使用(从左至右) LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失训练的YOLOv7[6]对IIIT5K[12]测试集的字符级场景文本识别结果。

表2:使用自身损失LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失训练的YOLO v7[6]的性能比较。结果报告在IIIT5K测试集上。

表3:使用自身损失LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失训练的YOLO v7[6]的性能比较。在MTHv2测试集上报告了结果

从表2和表3的结果可以看出,与现有的LGIoU

, LDIoU

, LCIoU

, LEIoU

等回归损失相比,使用LMPDIoU

作为回归损失训练YOLO v7可以显著提高其性能。我们提出的LMPDIoU

在字符级场景文本识别方面表现出色。

4.6 实例分割的实验结果

训练方法。我们使用了最新的PyTorch实现的YOLACT[26],由加州大学发布。对于基准结果(使用LGIoU训练),我们在所有实验中选择ResNet-50作为两个YOLACT的骨干网络架构,并使用报告的默认参数和每个基准的迭代次数遵循其训练协议。为了使用GIoU, DIoU, CIoU, EIoU和MPDIoU损失来训练YOLACT,我们用2中解释的LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失替换了它们在最后边界框细化阶段的ℓ1-smooth。与YOLO v7实验类似,我们用我们提出的LMPDIoU

替换了边界框回归的原始损失函数。

如图8(c)所示,将LGIoU

, LDIoU

, LCIoU

, LEIoU

作为回归损失,可以略微提高YOLACT在MS COCO 2017上的性能。然而,与使用LMPDIoU

进行训练的情况相比,改进是明显的,在LMPDIoU

中,我们针对不同的IoU阈值可视化了不同的掩膜AP值,即0.5≤IoU≤0.95。

与上述实验类似,使用LMPDIoU

作为对现有损失函数的回归损失,可以提高检测精度。如表4所示,我们提出的LMPDIoU

在大多数指标上比现有的损失函数表现得更好。然而,不同损失之间的改进量比以前的实验要少。这可能是由几个因素造成的。首先,YOLACT[26]上的检测锚框比YOLO v7[6]更密集,导致LMPDIoU

优于LIoU的场景更少,例如不重叠的边界框。其次,现有的边界框回归的损失函数在过去的几年里得到了改进,这意味着精度的提高是非常有限的,但效率的提高还有很大的空间。

图8:在MS COCO 2017[11]上使用LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失以及不同IoU阈值下的掩模AP值对YOLACT[26]进行训练迭代时的bbox loss和box AP值

图9:MS COCO 2017[11]和PASCAL VOC 2007[10]测试集的实例分割结果,使用(从左至右) LGIoU

, LDIoU

, LCIoU

, LEIoU

和LMPDIoU

损失训练的YOLACT[26]。

我们还比较了不同回归损失函数下YOLACT训练期间边界框损失和AP值的变化趋势。如图8(a)、(b)所示,使用LMPDIoU

进行训练的效果优于大多数现有的损失函数LGIoU

、LDIoU

,准确率更高,收敛速度更快。虽然边界框loss和AP值波动较大,但我们提出的LMPDIoU

在训练结束时表现更好。为了更好地揭示不同损失函数在实例分割边界框回归中的性能,我们提供了一些可视化结果,如图5和图9所示。我们可以看到,与LGIoU

, LDIoU

, LCIoU

, LEIoU

相比,我们基于LMPDIoU

提供了更少冗余和更高精度的实例分割结果。

表4:YOLACT的实例分割结果[26]。我们使用LGIoU

, LDIoU

, LCIoU

, LEIoU

对模型进行再训练,并将结果报告在MS COCO 2017的测试集上[11]。记录训练期间的FPS和时间

5.结论

本文引入了一种基于最小点距的MPDIoU度量,用于比较任意两个边界框。我们证明了这个新指标具有现有基于IoU的指标所具有的所有吸引人的属性,同时简化了其计算。在2D/3D视觉任务的所有性能测量中,这将是一个更好的选择。

我们还提出了一个称为LMPDIoU

的损失函数用于边界框回归。我们使用常用的性能度量和我们提出的MPDIoU将其应用于最先进的目标检测和实例分割算法,从而提高了它们在流行的目标检测、场景文本识别和实例分割基准(如PASCAL VOC、MS COCO、MTHv2和IIIT5K)上的性能。由于度量的最优损失是度量本身,我们的MPDIoU损失可以用作所有需要2D边界框回归的应用程序的最优边界框回归损失。文章来源:https://www.toymoban.com/news/detail-695519.html

对于未来的工作,我们希望在一些基于目标检测和实例分割的下游任务上进行进一步的实验,包括场景文本识别、人物再识别等。通过以上实验,我们可以进一步验证我们提出的损失函数的泛化能力。文章来源地址https://www.toymoban.com/news/detail-695519.html

到了这里,关于MPDIoU: A Loss for Efficient and Accurate Bounding BoxRegression的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!