accelerate distributed techniques in practice: deploying ChatGLM-6B (Part 3)

Environment

torch==2.0.0+cu118

transformers==4.28.1

accelerate==0.18.0

GPU: Tesla T4, 15.3 GB

RAM: 11.8 GB

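Download the required files. The Hugging Face repositories store the weights with Git LFS, so run git lfs install before cloning them: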

git clone https://github.com/THUDM/ChatGLM-6B

cd ChatGLM-6B

git clone --depth=1 https://huggingface.co/THUDM/chatglm-6b THUDM/chatglm-6b

git clone --depth=1 https://huggingface.co/THUDM/chatglm-6b-int4 THUDM/chatglm-6b-int4

pip install -r requirements.txt

pip install gradio

pip install accelerate

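Normally, running the unquantized ChatGLM-6B (FP16) needs more than 13 GB of GPU memory. I have not measured how much RAM it needs, but the 11.8 GB above is certainly not enough; 16 GB should work.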
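Most of the 13 GB figure comes from the weights alone. The back-of-the-envelope calculation below is a minimal sketch that assumes roughly 6.2 billion parameters for ChatGLM-6B. It counts only the raw weights and ignores activations, the KV cache, and CUDA context overhead. Under that assumption, FP16 weights take about 11.5 GiB and int4 weights take under 3 GiB. The measured figures in the options below are higher, presumably because of runtime overhead and parts of the model that are not quantized.

```python
# Rough estimate of the memory needed just to hold the model weights.
# Assumption: ~6.2 billion parameters for ChatGLM-6B. Activations, the
# KV cache and CUDA context overhead are NOT included.

NUM_PARAMS = 6.2e9
GIB = 1024 ** 3

# Bytes per parameter for common storage formats
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,  # two 4-bit weights packed into one byte
}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the size of the raw weights in GiB for the given dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / GIB


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gib(NUM_PARAMS, dtype):.1f} GiB")
```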

Option 1: quantized model (int4)

import time

from transformers import AutoModel, AutoTokenizer

# Load the int4-quantized checkpoint cloned above and move it to the GPU
tokenizer = AutoTokenizer.from_pretrained("./THUDM/chatglm-6b-int4", trust_remote_code=True)
model = AutoModel.from_pretrained("./THUDM/chatglm-6b-int4", trust_remote_code=True).half().cuda()
model = model.eval()


def predict(input, history=None):
    # Helper for a single call (the loop below calls model.chat directly)
    print(f'predict started: {time.time()}')
    if history is None:
        history = []
    response, history = model.chat(tokenizer, input, history)
    return response, history


history = []  # conversation history, carried across turns
while True:
    text = input(">>User: ")
    # Pass the user's text (the original passed the built-in input function)
    response, history = model.chat(tokenizer, text, history)
    print(">>ChatGLM:", response)

GPU memory usage: 4.9 GB. RAM usage: 5.5 GB.
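The int4 checkpoint fits in far less GPU memory because each weight is stored in 4 bits instead of 16. The code below is a simplified illustration of symmetric, per-row int4 weight quantization, which is the general idea behind such checkpoints. It is not chatglm-6b-int4's actual kernel or storage layout, and the function names are hypothetical. Each row keeps one float scale, and every weight becomes an integer in [-7, 7] that is multiplied back by the scale at inference time.

```python
# Simplified illustration of symmetric, per-row int4 weight quantization.
# Hypothetical helper names; NOT the actual implementation used by
# chatglm-6b-int4, only the general idea.
from typing import List, Tuple

INT4_MAX = 2 ** (4 - 1) - 1  # 7: largest magnitude used for a signed 4-bit value


def quantize_row(row: List[float]) -> Tuple[List[int], float]:
    """Map one row of float weights to ints in [-7, 7] plus a single float scale."""
    max_abs = max(abs(w) for w in row)
    scale = max_abs / INT4_MAX if max_abs > 0 else 1.0
    # Round to the nearest level and clip to the representable range
    q = [max(-INT4_MAX, min(INT4_MAX, round(w / scale))) for w in row]
    return q, scale


def dequantize_row(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the 4-bit integers."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.12, -0.7, 0.35, 0.0]
    q, scale = quantize_row(weights)
    print(q, scale)
    print(dequantize_row(q, scale))
```

The rounding error per weight is at most half a scale step. This is why int4 models trade some accuracy for the roughly 4x reduction in weight memory.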

Option 2: a single GPU (with CPU offloading)

import time

from accelerate import infer_auto_device_map, init_empty_weights, load_checkpoint_and_dispatch
from transformers import AutoConfig, AutoModel, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("./THUDM/chatglm-6b", trust_remote_code=True)
config = AutoConfig.from_pretrained("./THUDM/chatglm-6b", trust_remote_code=True)

# Build an empty model skeleton: no memory is allocated for the weights yet
with init_empty_weights():
    model = AutoModel.from_config(config, trust_remote_code=True)

# Print the parameter names to see which modules can be assigned to devices
for name, _ in model.named_parameters():
    print(name)

# device_map = infer_auto_device_map(model, no_split_module_classes=["GLMBlock"])
# print(device_map)

# Manual placement: embeddings and layers 0-20 on GPU 0;
# layers 21-27, the final layer norm and lm_head on the CPU
device_map = {'transformer.word_embeddings': 0, 'transformer.layers.0': 0, 'transformer.layers.1': 0, 'transformer.layers.2': 0, 'transformer.layers.3': 0, 'transformer.layers.4': 0, 'transformer.layers.5': 0, 'transformer.layers.6': 0, 'transformer.layers.7': 0, 'transformer.layers.8': 0, 'transformer.layers.9': 0, 'transformer.layers.10': 0, 'transformer.layers.11': 0, 'transformer.layers.12': 0, 'transformer.layers.13': 0, 'transformer.layers.14': 0, 'transformer.layers.15': 0, 'transformer.layers.16': 0, 'transformer.layers.17': 0, 'transformer.layers.18': 0, 'transformer.layers.19': 0, 'transformer.layers.20': 0, 'transformer.layers.21': 'cpu', 'transformer.layers.22': 'cpu', 'transformer.layers.23': 'cpu', 'transformer.layers.24': 'cpu', 'transformer.layers.25': 'cpu', 'transformer.layers.26': 'cpu', 'transformer.layers.27': 'cpu', 'transformer.final_layernorm': 'cpu', 'lm_head': 'cpu'}

# Load the checkpoint into the skeleton following device_map. GLMBlock modules are
# never split across devices. offload_state_dict=True stages weights on disk to lower peak RAM
model = load_checkpoint_and_dispatch(model, "./THUDM/chatglm-6b", device_map=device_map, offload_folder="offload", offload_state_dict=True, no_split_module_classes=["GLMBlock"]).half()


def predict(input, history=None):
    print(f'predict started: {time.time()}')
    if history is None:
        history = []
    response, history = model.chat(tokenizer, input, history)
    return response, history


while True:
    history = None  # reset every turn, so each question is answered without earlier context
    text = input(">>User: ")
    response, history = model.chat(tokenizer, text, history)
    print(">>ChatGLM:", response)

GPU memory usage: 9.7 GB. RAM usage: 5.9 GB. After entering 你好 ("hello") in the first turn, GPU memory usage rose to 11.2 GB.
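The hand-written device_map in Option 2 follows a simple rule, so it can also be generated with a loop. The sketch below assumes 28 layers named transformer.layers.0 to transformer.layers.27, which matches the map above. The helper name build_device_map is hypothetical. With the default split point of 21, it reproduces the map above. If GPU memory runs out during generation (usage grows as the conversation proceeds), lowering gpu_layers is one way to move more layers to the CPU, at the cost of slower inference.

```python
# Generate the Option 2 device_map instead of typing every key by hand.
# Assumption: 28 GLMBlock layers named transformer.layers.0..27 (as in the map
# above); build_device_map is a hypothetical helper, not an accelerate API.


def build_device_map(num_layers: int = 28, gpu_layers: int = 21, gpu: int = 0) -> dict:
    """Embeddings and the first `gpu_layers` layers on `gpu`, the rest on the CPU."""
    device_map = {"transformer.word_embeddings": gpu}
    for i in range(num_layers):
        device_map[f"transformer.layers.{i}"] = gpu if i < gpu_layers else "cpu"
    # The final layer norm and lm_head follow the last layer's device
    tail = gpu if gpu_layers >= num_layers else "cpu"
    device_map["transformer.final_layernorm"] = tail
    device_map["lm_head"] = tail
    return device_map


if __name__ == "__main__":
    print(build_device_map())
```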

Option 3: accelerate with multiple GPUs

import os

# Must be set before CUDA is initialized. On Linux, environment variable names
# are case-sensitive, so the canonical upper-case name is required
os.environ["CUDA_VISIBLE_DEVICES"] = "0,1"

import time

from accelerate import infer_auto_device_map, init_empty_weights, load_checkpoint_and_dispatch
from transformers import AutoConfig, AutoModel, AutoTokenizer

# The original post used the Windows path ".\\chatglm-6b\\"; this points at the
# directory cloned in the download step instead
MODEL_PATH = "./THUDM/chatglm-6b"

tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH, trust_remote_code=True)
config = AutoConfig.from_pretrained(MODEL_PATH, trust_remote_code=True)

# Empty model skeleton; the weights are loaded by load_checkpoint_and_dispatch
with init_empty_weights():
    model = AutoModel.from_config(config, trust_remote_code=True)

for name, _ in model.named_parameters():
    print(name)

# device_map = infer_auto_device_map(model, no_split_module_classes=["GLMBlock"])
# print(device_map)
# A manual device_map dict (like the one in Option 2) can be passed instead of "balanced"

# "balanced" lets accelerate spread the model evenly across the visible GPUs
model = load_checkpoint_and_dispatch(model, MODEL_PATH, device_map="balanced", offload_folder="offload", offload_state_dict=True, no_split_module_classes=["GLMBlock"]).half()


def predict(input, history=None):
    print(f'predict started: {time.time()}')
    if history is None:
        history = []
    response, history = model.chat(tokenizer, input, history)
    return response, history


while True:
    history = None  # reset every turn, so each question is answered without earlier context
    text = input(">>User: ")
    response, history = model.chat(tokenizer, text, history)
    print(">>ChatGLM:", response)

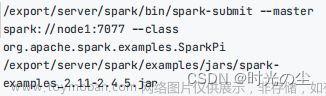

Note that here the device map is set to "balanced", and only the first two GPUs are used. The screenshot above shows the GPU memory usage.
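To get an intuition for "balanced", the toy function below spreads equally sized layers over several GPUs in contiguous chunks. With 28 layers and 2 GPUs, that gives 14 layers per GPU. This is only an illustration under a simplifying assumption: accelerate's real "balanced" strategy works from the measured sizes of all modules, including embeddings and lm_head, and from the available memory, so its actual split can differ. balanced_layer_map is a hypothetical name. Also note that after CUDA_VISIBLE_DEVICES="0,1" is set, the visible GPUs are numbered 0 and 1 inside the process.

```python
# Toy illustration of a "balanced" split: equally sized layers assigned to
# GPUs in contiguous chunks. NOT accelerate's implementation, which also
# accounts for real module sizes and available memory.


def balanced_layer_map(num_layers: int, num_gpus: int) -> dict:
    """Assign transformer.layers.i to GPUs so chunk sizes differ by at most one."""
    base, extra = divmod(num_layers, num_gpus)
    device_map, layer = {}, 0
    for gpu in range(num_gpus):
        # The first `extra` GPUs take one additional layer
        count = base + (1 if gpu < extra else 0)
        for _ in range(count):
            device_map[f"transformer.layers.{layer}"] = gpu
            layer += 1
    return device_map


if __name__ == "__main__":
    print(balanced_layer_map(28, 2))
```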

References

https://cloud.tencent.com/developer/article/2274903?areaSource=102001.17&traceId=dUu9a81soH3zQ5nQGczRV
