1. Scraping a single image

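# Scrape a single image
import os  # used to create the output folder

import requests  # third-party HTTP library: pip install requests

url = "https://scpic.chinaz.net/files/pic/pic9/202112/hpic4875.jpg"  # image address

response = requests.get(url, timeout=10)  # download the image
response.raise_for_status()  # stop on 4xx/5xx instead of saving an error page as a .jpg

os.makedirs("img", exist_ok=True)  # make sure the target folder exists
with open("img/test1.jpg", "wb") as f:  # "wb": write in binary mode
    f.write(response.content)  # write the raw image bytes

print("Image downloaded")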
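Writing to `img/test1.jpg` requires the `img` folder to exist, and the file name is chosen by hand. Below is a minimal stdlib-only sketch that creates the folder and reuses the last segment of the image URL as the file name; `local_path_for` and the `image.jpg` fallback are hypothetical, not part of the original script:

```python
import os
import posixpath
from urllib.parse import urlsplit


def local_path_for(url: str, folder: str = "img") -> str:
    """Hypothetical helper: map an image URL to a file path inside `folder`.

    The file name is the last segment of the URL path (query string and
    fragment are ignored). `folder` is created if it does not exist yet.
    """
    # urlsplit separates path from query/fragment; URL paths always use "/",
    # so posixpath.basename is the right splitter on every OS.
    filename = posixpath.basename(urlsplit(url).path) or "image.jpg"
    os.makedirs(folder, exist_ok=True)  # no error if the folder already exists
    return os.path.join(folder, filename)
```

For the URL above, `local_path_for(url)` returns `img/hpic4875.jpg` on Linux and macOS (with a backslash separator on Windows) and creates `img` if needed; the result can be passed straight to `open(..., "wb")`.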

2. Scraping images in batches

Article source: https://www.toymoban.com/news/detail-705062.html

# 爬取批量图片

import requests # 导入requests库

import os # 导入os库

from bs4 import BeautifulSoup # 从bs4库中导入BeautifulSoup

name_path = 'img2'

if not os.path.exists(name_path): # 判断文件夹是否存在

os.mkdir(name_path) # 创建文件夹

def getUrl():

url = "https://sc.chinaz.com/tupian/gudianmeinvtupian.html" # 图片地址

response = requests.get(url)

img_txt = BeautifulSoup(response.content, "html.parser") # 解析网页

find = img_txt.find("div", attrs={'class': 'tupian-list com-img-txt-list'}) # 查找图片

find_all = find.find_all("div", attrs={'class': 'item'}) # 查找所有图片

for i in find_all:

url = 'https:' + i.find('img').get('data-original') # 获取图片地址

name = i.find('a').text # 获取图片名字

# print(name, url)

try:

getImg(url, name) # 调用getImg方法

except: # 相当于java中的catch

print("下载失败");

continue # 如果下载失败,跳过

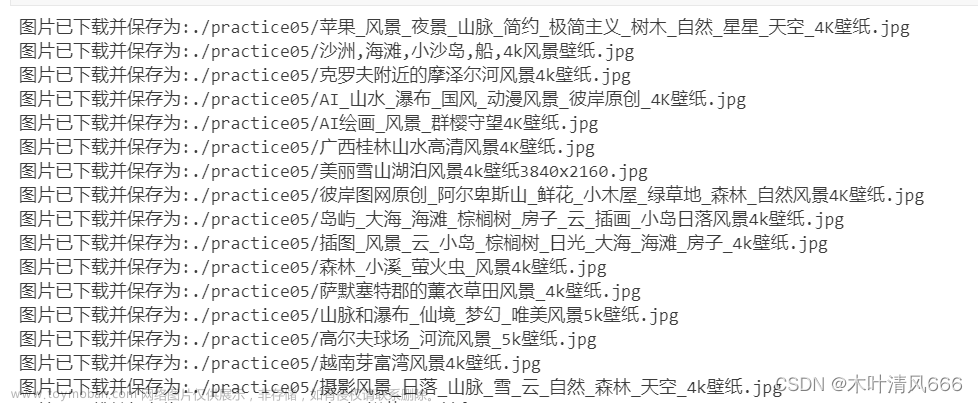

def getImg(ImageUrl, ImageName):

response = requests.get(ImageUrl).content # 获取图片

with open(f'{name_path}/{ImageName}.jpg', 'wb') as f: # 保存图片,wb表示写入二进制文件

f.write(response)

print(ImageName, "下载完成")

if __name__ == '__main__':

getUrl()
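Two details of the loop in `getUrl` are fragile: `'https:' + ...` only works because `data-original` holds a protocol-relative address (`//host/path`), and the image title goes straight into a file name, so a title containing `/` breaks `open()` everywhere and one containing `?` or `:` breaks it on Windows. Here is a stdlib-only sketch of two helpers for this; `resolve_image_url` and `safe_filename` are hypothetical names, and falling back to `src` is an assumption about pages that do not lazy-load:

```python
import re
from typing import Optional
from urllib.parse import urljoin

# Characters not allowed in Windows file names, plus ASCII control characters.
_INVALID_CHARS = re.compile(r'[\\/:*?"<>|\x00-\x1f]')


def resolve_image_url(page_url: str, attrs: dict) -> Optional[str]:
    """Return an absolute image URL built from an <img> tag's attributes.

    Prefers the lazy-loading attribute `data-original` and falls back to
    `src` (an assumption). urljoin resolves protocol-relative values such as
    "//host/a.jpg" against the page's scheme, and relative paths against
    the page URL. Returns None if neither attribute is present.
    """
    src = attrs.get("data-original") or attrs.get("src")
    if not src:
        return None
    return urljoin(page_url, src)


def safe_filename(name: str, default: str = "unnamed") -> str:
    """Replace characters that cannot appear in a file name, trim spaces."""
    cleaned = _INVALID_CHARS.sub("_", name).strip()
    return cleaned or default
```

Inside the loop, a BeautifulSoup tag's attribute dictionary (`i.find('img').attrs`) can be passed to `resolve_image_url` together with the listing-page URL, and the title wrapped in `safe_filename` before it reaches `getImg`.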

3. If a page has many images, you can scrape them page by page
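The paging code relies on the listing URLs following a fixed pattern, as hard-coded in its `getUrl`: page 1 is `gudianmeinvtupian.html` and every later page n is `gudianmeinvtupian_n.html`. A small sketch of that mapping as a standalone function; `page_url` and `BASE` are hypothetical names, and the pattern is only what this article observed on the site:

```python
BASE = "https://sc.chinaz.com/tupian/gudianmeinvtupian"


def page_url(page: int, base: str = BASE) -> str:
    """Build the listing-page URL for a 1-based page number.

    Assumes the site's scheme: page 1 has no suffix, later pages append
    "_<page>" before ".html".
    """
    if page < 1:
        raise ValueError("page numbers start at 1")
    if page == 1:
        return f"{base}.html"
    return f"{base}_{page}.html"
```

Taking the page number as an `int` keeps the page-1 special case a plain integer comparison, and `[page_url(n) for n in range(1, 4)]` lists the first three pages.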

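# Scrape images page by page
import os  # standard library: files and folders
import sys  # for sys.exit

import requests  # third-party HTTP library
from bs4 import BeautifulSoup  # HTML parser from the beautifulsoup4 package

name_path = 'img2'  # folder the images are saved to
os.makedirs(name_path, exist_ok=True)  # create the folder if it does not exist

Sum = 0  # number of images downloaded so far


def getUrl(num):
    if num == 1:  # page 1 has no "_<n>" suffix
        url = "https://sc.chinaz.com/tupian/gudianmeinvtupian.html"
    else:
        url = f"https://sc.chinaz.com/tupian/gudianmeinvtupian_{num}.html"  # page num
    response = requests.get(url, timeout=10)
    img_txt = BeautifulSoup(response.content, "html.parser")  # parse the page
    find = img_txt.find("div", attrs={'class': 'tupian-list com-img-txt-list'})  # image list container
    if find is None:  # page layout changed or the page does not exist
        print("Image list not found on", url)
        return
    find_all = find.find_all("div", attrs={'class': 'item'})  # one div per image
    for i in find_all:
        try:
            url = 'https:' + i.find('img').get('data-original')  # protocol-relative address
            name = i.find('a').text.strip()  # image title, used as the file name
            getImg(url, name)
        except Exception as e:  # like Java's catch: log the error, move on
            print("Download failed:", e)


def getImg(ImageUrl, ImageName):
    global Sum  # declared at the top so the module-level counter is updated
    response = requests.get(ImageUrl, timeout=10)  # download the image
    response.raise_for_status()
    with open(f'{name_path}/{ImageName}.jpg', 'wb') as f:  # "wb": write in binary mode
        f.write(response.content)
    print(ImageName, "downloaded")
    Sum += 1


if __name__ == '__main__':
    try:
        pages = int(input("Enter the number of pages to scrape [1-7]:\n"))
    except ValueError:
        pages = 0  # non-numeric input is rejected below
    if not 1 <= pages <= 7:
        print("Invalid input: enter a whole number from 1 to 7")
        sys.exit(1)
    for i in range(1, pages + 1):
        getUrl(i)  # pass the current page, not the total page count
        print(f"Page {i} done")
    print(f"Downloaded {Sum} images in total")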
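The paging script counts downloads in the module-level variable `Sum`, updated through `global`. As an alternative design, here is a sketch in which each page's scraper returns how many images it saved and a driver loop adds them up. `crawl`, the `scrape_page` callback and the one-second `delay` between pages are hypothetical choices, not part of the original script; `scrape_page` would be a variant of `getUrl` that returns its count:

```python
import time
from typing import Callable


def crawl(pages: int, scrape_page: Callable[[int], int], delay: float = 1.0) -> int:
    """Call scrape_page(n) for n = 1..pages and return the summed counts.

    `scrape_page` is a hypothetical callback that downloads one listing page
    and returns the number of images it saved. `delay` (an arbitrary default)
    pauses between pages to reduce load on the server.
    """
    total = 0
    for n in range(1, pages + 1):
        total += scrape_page(n)
        print(f"Page {n} done")
        if n < pages:
            time.sleep(delay)  # no pause after the last page
    return total
```

Returning counts keeps the total local to one call, so the functions can be reused or tested without resetting shared state.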

