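Preface:

While deploying KubeSphere, I found that the Kubernetes cluster version and the KubeSphere version were incompatible, so I wanted to roll back and redeploy. However, the namespace I needed, kubesphere-system, could not be removed with the usual delete command and stayed in the Terminating state.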

[root@centos1 ~]# kubectl get ns

NAME STATUS AGE

default Active 12h

kube-flannel Active 95m

kube-node-lease Active 12h

kube-public Active 12h

kube-system Active 12h

kubesphere-system Terminating 27m

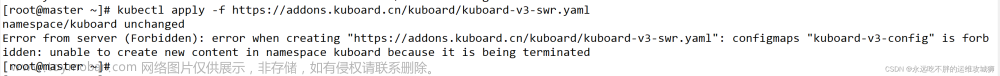

Because the namespace is stuck in deletion, the new deployment cannot proceed:

[root@centos1 ~]# kubectl apply -f kubesphere-installer.yaml

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created

Warning: Detected changes to resource kubesphere-system which is currently being deleted.

namespace/kubesphere-system unchanged

clusterrole.rbac.authorization.k8s.io/ks-installer configured

clusterrolebinding.rbac.authorization.k8s.io/ks-installer unchanged

Error from server (Forbidden): error when creating "kubesphere-installer.yaml": serviceaccounts "ks-installer" is forbidden: unable to create new content in namespace kubesphere-system because it is being terminated

Error from server (Forbidden): error when creating "kubesphere-installer.yaml": deployments.apps "ks-installer" is forbidden: unable to create new content in namespace kubesphere-system because it is being terminated

In practice, the delete command simply hangs until it is interrupted:

[root@centos1 ~]# kubectl delete ns kubesphere-system

namespace "kubesphere-system" deleted

^C

[root@centos1 ~]# kubectl delete ns kubesphere-system

namespace "kubesphere-system" deleted

^C

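The rest of this post describes the troubleshooting process and the final fix in some detail.

I.

Solution 1

I am a little embarrassed to mention this one, but it is the routine first step: ninety percent of errors can be fixed by restarting the service, and ninety-nine percent by restarting the server. Unfortunately, this stuck namespace fell into the remaining one percent.

There is not much to say about restarting the service or the server; neither worked.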

II.

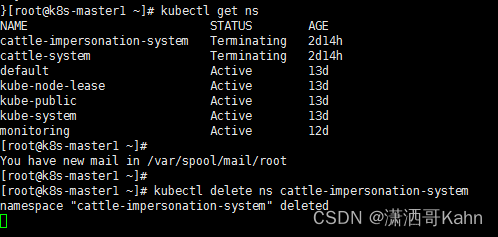

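Solution 2

Add the force-delete flags to the delete command:

kubectl delete ns kubesphere-system --force --grace-period=0

The result was disappointing: the namespace still was not deleted.

The command helpfully (and uselessly) prints a warning that the deletion is immediate and does not wait for the resource to finish terminating, but it made no difference: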

[root@centos1 ~]# kubectl delete ns kubesphere-system --force --grace-period=0

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.

namespace "kubesphere-system" force deleted
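Before moving on, it can help to ask the API server why the namespace is still Terminating. The following is a minimal sketch, assuming kubectl is on the PATH and already points at the affected cluster; the helper names ns_conditions and ns_finalizers are hypothetical and not part of kubectl.

```shell
# Sketch: report why a namespace is stuck in Terminating.
# ns_conditions and ns_finalizers are hypothetical helper names.

# Print each status condition as: TYPE <tab> STATUS <tab> MESSAGE
ns_conditions() {
    # $1: namespace name
    kubectl get namespace "$1" \
        -o jsonpath='{range .status.conditions[*]}{.type}{"\t"}{.status}{"\t"}{.message}{"\n"}{end}'
}

# Print the finalizers still registered on the namespace object itself.
ns_finalizers() {
    # $1: namespace name
    kubectl get namespace "$1" \
        -o jsonpath='{"spec: "}{.spec.finalizers}{"\n"}{"metadata: "}{.metadata.finalizers}{"\n"}'
}

# Example (not executed here):
#   ns_conditions kubesphere-system
#   ns_finalizers kubesphere-system
```

Conditions such as NamespaceDeletionDiscoveryFailure or NamespaceFinalizersRemaining, when reported with status True, usually point at what is blocking the deletion.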

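III.

Solution 3

Since the ordinary methods clearly cannot delete the namespace, two options remain: delete the record directly in etcd, or delete it through the kube-apiserver API.

Deleting directly from etcd carries some risk, so the API route is used here.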

1.

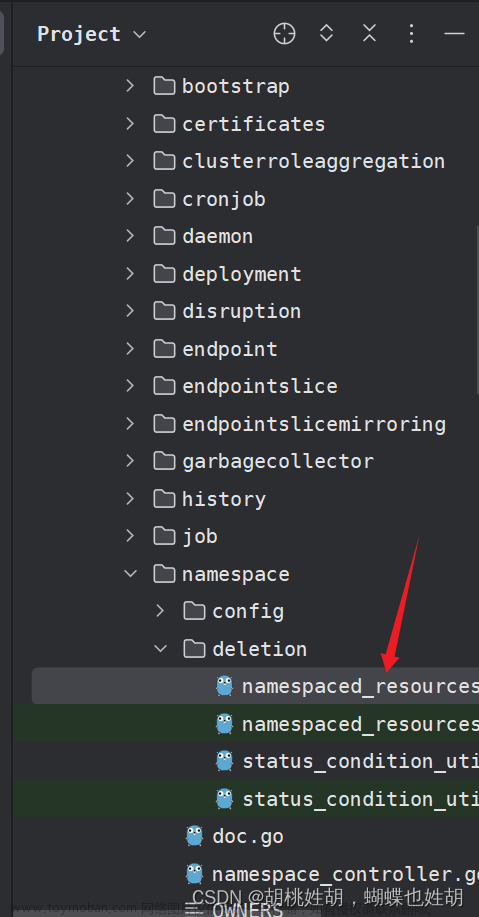

Export the namespace definition as a JSON file:

kubectl get ns kubesphere-system -o json > /tmp/kubesphere.json

The key parts of the file look like this:

"spec": {

"finalizers": [

"kubernetes"

]

},

"apiVersion": "v1",

"kind": "Namespace",

"metadata": {

"annotations": {

"kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"v1\",\"kind\":\"Namespace\",\"metadata\":{\"annotations\":{},\"name\":\"kubesphere-system\"}}\n"

},

"creationTimestamp": "2023-06-29T15:16:45Z",

"deletionGracePeriodSeconds": 0,

"deletionTimestamp": "2023-06-30T04:28:31Z",

"finalizers": [

"finalizers.kubesphere.io/namespaces"

],

After removing the finalizers:

"apiVersion": "v1",

"kind": "Namespace",

"metadata": {

"annotations": {

"kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"v1\",\"kind\":\"Namespace\",\"metadata\":{\"annotations\":{},\"name\":\"kubesphere-system\"}}\n"

},

"creationTimestamp": "2023-06-29T15:16:45Z",

"deletionGracePeriodSeconds": 0,

"spec": {

},

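About the finalizers field:

The finalizers field is part of Kubernetes' garbage-collection machinery. It is a deletion-interception mechanism that lets controllers implement asynchronous pre-delete callbacks. It exists in the metadata of every resource object and is declared as []string in the Kubernetes source; the slice holds the names of the interceptors that must run.

The first delete request on an object with finalizers sets a value for metadata.deletionTimestamp but does not actually delete the object. Once that value is set, entries in the finalizers list can only be removed.

Once metadata.deletionTimestamp is set, the controllers watching the object carry out the finalizers they are responsible for, polling for update requests on the object. When all finalizers have been executed, the resource is deleted.

The value of metadata.deletionGracePeriodSeconds controls the interval between polling updates.

Each controller is responsible for removing its own finalizer from the list.

Each finalizer is removed from the list as it completes; only when the list is empty is the owning resource actually deleted.

If the controller that owns a finalizer is no longer running, nothing removes that entry and the object stays in Terminating indefinitely. Removing the finalizers field is therefore enough. (In some cases only spec has finalizers; in others both spec and metadata do. Either way, remove all of them. Usually only spec contains finalizers.)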
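The manual edit described above can also be scripted. This is a minimal sketch, assuming python3 is installed on the machine; strip_finalizers is a hypothetical helper name. It mirrors the hand edit by dropping the finalizers key from both spec and metadata and writing the cleaned JSON to standard output.

```shell
# Sketch: remove every finalizers list from a saved namespace manifest.
# Assumes python3 is available; strip_finalizers is a hypothetical helper name.
strip_finalizers() {
    # $1: input JSON file; the cleaned JSON is written to stdout
    python3 -c '
import json, sys

with open(sys.argv[1]) as f:
    ns = json.load(f)

# Drop the finalizers key from spec and metadata if present.
ns.get("spec", {}).pop("finalizers", None)
ns.get("metadata", {}).pop("finalizers", None)

json.dump(ns, sys.stdout, indent=4)
print()
' "$1"
}

# Example (not executed here):
#   strip_finalizers /tmp/kubesphere.json > ~/kubesphere.json
```

Writing to a new file rather than editing in place keeps the original export around for comparison.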

2.

Place the JSON file in the root user's home directory, then start a proxy to the API server:

[root@centos1 ~]# kubectl proxy --port=8001

Starting to serve on 127.0.0.1:8001
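If keeping a dedicated terminal open for the proxy is inconvenient, the proxy could instead be run in the background of the current shell. This is only a sketch: start_proxy, stop_proxy and the PROXY_PID variable are hypothetical names, and it does not wait for the proxy to become ready.

```shell
# Sketch: run kubectl proxy in the background and stop it afterwards.
# start_proxy, stop_proxy and PROXY_PID are hypothetical names.
start_proxy() {
    # $1: local port (defaults to 8001, the port used in this post)
    kubectl proxy --port="${1:-8001}" >/dev/null 2>&1 &
    PROXY_PID=$!
}

stop_proxy() {
    # Kill the background proxy if one was started by start_proxy.
    if [ -n "${PROXY_PID:-}" ]; then
        kill "$PROXY_PID" 2>/dev/null || true
    fi
    PROXY_PID=
}

# Example (not executed here):
#   start_proxy 8001
#   sleep 1          # give the proxy a moment to start listening
#   ...call the API through http://127.0.0.1:8001...
#   stop_proxy
```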

3.

Open another shell window and call the API to finish the deletion:

curl -k -H "Content-Type: application/json" -X PUT --data-binary @kubesphere.json http://127.0.0.1:8001/api/v1/namespaces/kubesphere-system/finalize

Output like the following means the deletion succeeded:

{

"kind": "Namespace",

"apiVersion": "v1",

"metadata": {

"name": "kubesphere-system",

"uid": "7a1c9fed-dbe3-4d65-9f57-db93f7a358f7",

"resourceVersion": "18113",

"creationTimestamp": "2023-06-24T02:27:18Z",

"deletionTimestamp": "2023-06-24T02:28:29Z",

"labels": {

"kubernetes.io/metadata.name": "kubesphere-system"

},

"annotations": {

"kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"v1\",\"kind\":\"Namespace\",\"metadata\":{\"annotations\":{},\"name\":\"kubesphere-system\"}}\n"

},

"managedFields": [

{

"manager": "kubectl-client-side-apply",

"operation": "Update",

"apiVersion": "v1",

"time": "2023-06-24T02:27:18Z",

"fieldsType": "FieldsV1",

"fieldsV1": {"f:metadata":{"f:annotations":{".":{},"f:kubectl.kubernetes.io/last-applied-configuration":{}},"f:labels":{".":{},"f:kubernetes.io/metadata.name":{}}}}

},

{

"manager": "kube-controller-manager",

"operation": "Update",

"apiVersion": "v1",

"time": "2023-06-24T02:28:35Z",

"fieldsType": "FieldsV1",

"fieldsV1": {"f:status":{"f:conditions":{".":{},"k:{\"type\":\"NamespaceContentRemaining\"}":{".":{},"f:lastTransitionTime":{},"f:message":{},"f:reason":{},"f:status":{},"f:type":{}},"k:{\"type\":\"NamespaceDeletionContentFailure\"}":{".":{},"f:lastTransitionTime":{},"f:message":{},"f:reason":{},"f:status":{},"f:type":{}},"k:{\"type\":\"NamespaceDeletionDiscoveryFailure\"}":{".":{},"f:lastTransitionTime":{},"f:message":{},"f:reason":{},"f:status":{},"f:type":{}},"k:{\"type\":\"NamespaceDeletionGroupVersionParsingFailure\"}":{".":{},"f:lastTransitionTime":{},"f:message":{},"f:reason":{},"f:status":{},"f:type":{}},"k:{\"type\":\"NamespaceFinalizersRemaining\"}":{".":{},"f:lastTransitionTime":{},"f:message":{},"f:reason":{},"f:status":{},"f:type":{}}}}},

"subresource": "status"

}

]

},

"spec": {

},

"status": {

"phase": "Terminating",

"conditions": [

{

"type": "NamespaceDeletionDiscoveryFailure",

"status": "True",

"lastTransitionTime": "2023-06-24T02:28:34Z",

"reason": "DiscoveryFailed",

"message": "Discovery failed for some groups, 1 failing: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request"

},

{

"type": "NamespaceDeletionGroupVersionParsingFailure",

"status": "False",

"lastTransitionTime": "2023-06-24T02:28:35Z",

"reason": "ParsedGroupVersions",

"message": "All legacy kube types successfully parsed"

},

{

"type": "NamespaceDeletionContentFailure",

"status": "False",

"lastTransitionTime": "2023-06-24T02:28:35Z",

"reason": "ContentDeleted",

"message": "All content successfully deleted, may be waiting on finalization"

},

{

"type": "NamespaceContentRemaining",

"status": "False",

"lastTransitionTime": "2023-06-24T02:28:35Z",

"reason": "ContentRemoved",

"message": "All content successfully removed"

},

{

"type": "NamespaceFinalizersRemaining",

"status": "False",

"lastTransitionTime": "2023-06-24T02:28:35Z",

"reason": "ContentHasNoFinalizers",

"message": "All content-preserving finalizers finished"

}

]

}

}

Check that the Terminating namespace kubesphere-system is gone:

[root@centos1 ~]# kubectl get ns

NAME STATUS AGE

default Active 13h

kube-flannel Active 104m

kube-node-lease Active 13h

kube-public Active 13h

kube-system Active 13h
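As an alternative to the proxy plus curl combination, kubectl replace has a --raw option that sends a PUT to a raw API path using the credentials from the kubeconfig. The sketch below assumes python3 is available and that the manifest already has its finalizers removed; finalize_ns is a hypothetical helper name. It reads the namespace name from the manifest itself, so the request path and the request body always refer to the same name.

```shell
# Sketch: submit a cleaned manifest to the finalize subresource without a proxy.
# Assumes python3 and kubectl; finalize_ns is a hypothetical helper name.
finalize_ns() {
    # $1: JSON manifest with the finalizers already removed
    _ns="$(python3 -c 'import json,sys; print(json.load(open(sys.argv[1]))["metadata"]["name"])' "$1")" || return 1
    kubectl replace --raw "/api/v1/namespaces/${_ns}/finalize" -f "$1"
}

# Example (not executed here):
#   finalize_ns ~/kubesphere.json
#   kubectl get ns
```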

Note: this is what the API returns when the namespace name in the URL is mistyped (in my excitement I typed kubespheer-system instead of kubesphere-system):

[root@centos1 ~]# curl -k -H "Content-Type: application/json" -X PUT --data-binary @kubesphere.json http://127.0.0.1:8001/api/v1/namespaces/kubespheer-system/finalize

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "the name of the object (kubesphere-system) does not match the name on the URL (kubespheer-system)",

"reason": "BadRequest",

"code": 400

}

Pods that refuse to be deleted can sometimes be removed with the same approach; I will add the details when I run into one.

Source: https://www.toymoban.com/news/detail-705758.html
