本文包含内容:

线性回归、softmax回归、MNIST图像分类、多层感知机、模型选择、欠拟合、过拟合问题、权重衰减、丢弃法、正向传播、反向传播、计算图、数值稳定性模型初始化、Kaggle实战:房价预测。文章来源:https://www.toymoban.com/news/detail-715454.html

(来源:d2l-zh-pytorch)文章来源地址https://www.toymoban.com/news/detail-715454.html

线性回归

完整版:

import matplotlib

import random

import matplotlib.pyplot as plt

import torch

from d2l import torch as d2l

#构造小批量数据集

def synthetic_data(w,b,num):

#生成y=Xw + b +噪声 (w为因素权重)

x = torch.normal(0,1,(num,len(w))) #正态矩阵

y = torch.matmul(x,w) + b

y += torch.normal(0, 0.01, y.shape) #0,0.01的正态矩阵噪声

return x, y.reshape((-1,1)) #y转换为列

#绘制

true_w=torch.tensor([2,-3.4]) #设置w权重

true_b=4.2

features, labels = synthetic_data(true_w, true_b, 1000)

d2l.set_figsize()

d2l.plt.scatter(features[:, 1].detach().numpy(), labels.detach().numpy(), 1)

#d2l.plt.show()

#随机取出小批量数据

def data_iter(batch_size, features, labels):

num=len(features) #样本特征数量

indices=list(range(num)) #随机生成数据下标

random.shuffle(indices) #打乱下标

for i in range(0,num,batch_size): #步长为ba……

#取i~i+batch_size

batch_indices = torch.tensor(

indices[i: min(i + batch_size, num)])

yield features[batch_size],labels[batch_size] #返回数据

#输出随机样本

batch_size = 10 #样本大小为10

for x,y in data_iter(batch_size,features,labels):

print(x,'\n',y)

break

#定义初始化模型参数

w = torch.normal(0,0.01,size=(2,1),requires_grad=True) #可计算梯度的正态分布

b = torch.zeros(1,requires_grad=True) #偏差

#定义模型

def linreg(X, w, b): #线性回归模型

return torch.matmul(X, w) + b

#定义损失函数

def squared_loss(y_hat,y):#均方误差

return (y_hat-y.reshape(y_hat.shape)) ** 2 / 2

#定义优化算法

def sgd(params, lr, batch_size): #小批量随机梯度下降(params=参数w,b表,lr学习率)

#因为更新的时候不需要参与梯度计算

with torch.no_grad(): #所有计算得出的tensor的requires_grad都自动设置为False。即不会对w求导

for param in params:

param -= lr * param.grad / batch_size #求下均值

param.grad.zero_() #梯度设成0,下次计算梯度不会与上次相关

#训练过程

lr=0.03 #学习率

num_epochs = 3 #数据扫三遍

net = linreg #选择的模型

loss = squared_loss

for epoch in range(num_epochs):

for X, y in data_iter(batch_size, features, labels):

l=loss(net(X,w,b),y) #把预测的y和真实y做损失计算

l.sum().backward() #小批量损失对模型参数求梯度

sgd([w,b],lr,batch_size) #迭代更新模型参数

#扫完一遍数据之后评价一下进度

with torch.no_grad(): #把不需要计算梯度的放在这里

train_l = loss(net(features, w, b),labels)

print(f'epoch {epoch + 1}, loss {float(train_l.mean()):f}')

print(f'w的估计误差: {true_w - w.reshape(true_w.shape)}')

print(f'b的估计误差: {true_b - b}')简洁版:

import numpy as np

import torch

from torch.utils import data

from d2l import torch as d2l

# nn是神经⽹络的缩写

from torch import nn

#生成数据集

true_w = torch.tensor([2, -3.4])

true_b = 4.2

features, labels = d2l.synthetic_data(true_w, true_b, 1000)

#构造小批量数据集返回

def load_array(data_arrays, batch_size, is_train=True): #@save

"""构造⼀个PyTorch数据迭代器"""

dataset = data.TensorDataset(*data_arrays)

return data.DataLoader(dataset, batch_size, shuffle=is_train) #自动返回打乱的batch_size个数据

batch_size = 10

data_iter = load_array((features, labels), batch_size)

next(iter(data_iter)) #用iter构造Python迭代器

#定义模型,线性回归=单层神经网络

net = nn.Sequential(nn.Linear(2, 1))#输入维度=2,输出维度=1,sequential为容器=list

#初始化参数

net[0].weight.data.normal_(0, 0.01) #设置w为正态分布0,0.01

net[0].bias.data.fill_(0) #设置偏差b=0

# 定义损失函数

loss = nn.MSELoss() #均方误差

# 定义优化算法SGD

trainer = torch.optim.SGD(net.parameters(), lr=0.03)

#训练过程

num_epochs = 3

for epoch in range(num_epochs):

for X, y in data_iter:#遍历小批量数据

l = loss(net(X) ,y) #前向传播预测,计算损失

trainer.zero_grad() #优化器梯度清0

l.backward() #反向传播计算梯度

trainer.step() #用梯度来更新模型参数

l = loss(net(features), labels) #计算每个迭代周期损失

print(f'epoch {epoch + 1}, loss {l:f}')

w = net[0].weight.data

print('w的估计误差:', true_w - w.reshape(true_w.shape))

b = net[0].bias.data

print('b的估计误差:', true_b - b)Softmax回归

完整版

import torch

from IPython import display

from d2l import torch as d2l

import matplotlib as plt

batch_size = 256 #一个批量256张图片

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size) #读取mnist数据到训练集和测试集中

#初始化数据

num_inputs = 784 #将28*28图像展平成784长度的向量

num_outputs = 10 #分成10类,所以输出维度为10

#所以权重w为784*10的矩阵(0,0.01正态),b偏置为1*10行向量(先设0)

W = torch.normal(0, 0.01, size=(num_inputs, num_outputs), requires_grad=True)

b = torch.zeros(num_outputs, requires_grad=True)

#求和方法:0->列相加 1->行相加

# X.sum(0, keepdim=True), X.sum(1, keepdim=True)

#softmax过程

def softmax(X):

X_exp = torch.exp(X)

partition = X_exp.sum(1, keepdim=True)

return X_exp / partition # 这⾥应⽤了⼴播机制(可以把不同形状的数据进行拓展成同样的形状进行运算)

#实现运算

def net(X):

return softmax(torch.matmul(X.reshape((-1, W.shape[0])), W) + b) #先将原始图像展平

#将y作为索引取出y_hat的值 细节

# y = torch.tensor([0, 2])

# y_hat = torch.tensor([[0.1, 0.3, 0.6], [0.3, 0.2, 0.5]])

#y_hat[[0, 1], y]

#y为索引,在y_hat的第一个样本取出第0个元素,第二个样本取出第2个元素

#实现交叉熵损失函数

def cross_entropy(y_hat, y):

return - torch.log(y_hat[range(len(y_hat)), y])

#计算分类的准确度(预测类别和真实y比较)

def accuracy(y_hat, y): #@save

"""计算预测正确的数量"""

if len(y_hat.shape) > 1 and y_hat.shape[1] > 1: #有第二就是有预测数据

y_hat = y_hat.argmax(axis=1) #每行最大概率的索引为预测数据

cmp = y_hat.type(y.dtype) == y #==判断对(1),错(0)

return float(cmp.type(y.dtype).sum())

#迭代评价模型精度

def evaluate_accuracy(net, data_iter): #@save

"""计算在指定数据集上模型的精度"""

if isinstance(net, torch.nn.Module): #isinstance判断net是不是nn模式

net.eval() # 将模型设置为评估模式

metric = Accumulator(2) #放入迭代器 正确预测数0、预测总数1

with torch.no_grad():

for X, y in data_iter:

metric.add(accuracy(net(X), y), y.numel()) #0加入预测正确的样本数,1加入总样本数

return metric[0] / metric[1] #0为正确预测数,1为总数

#迭代器定义

class Accumulator: #@save

"""在n个变量上累加"""

def __init__(self, n):

self.data = [0.0] * n

def add(self, *args):

self.data = [a + float(b) for a, b in zip(self.data, args)]

def reset(self):

self.data = [0.0] * len(self.data)

def __getitem__(self, idx):

return self.data[idx]

#训练

def train_epoch_ch3(net, train_iter, loss, updater): #@save

"""训练模型⼀个迭代周期(定义⻅第3章)"""

# 将模型设置为训练模式

if isinstance(net, torch.nn.Module):

net.train()

# 训练损失总和、训练准确度总和、样本数

metric = Accumulator(3)

for X, y in train_iter: #扫一遍数据

# 计算梯度并更新参数

y_hat = net(X)

l = loss(y_hat, y)

if isinstance(updater, torch.optim.Optimizer): #如果是pytorch的优化器就要梯度置0

# 使⽤PyTorch内置的优化器和损失函数

updater.zero_grad()

l.mean().backward()

updater.step()

else: #如果是自己手写优化器就不用置0梯度

# 使⽤定制的优化器和损失函数

l.sum().backward()

updater(X.shape[0])

metric.add(float(l.sum()), accuracy(y_hat, y), y.numel())

# 返回训练损失和训练精度

return metric[0] / metric[2], metric[1] / metric[2] #损失的样本数准确率、正确样本准确率

#绘制数据类

class Animator: #@save

"""在动画中绘制数据"""

def __init__(self, xlabel=None, ylabel=None, legend=None, xlim=None,

ylim=None, xscale='linear', yscale='linear',

fmts=('-', 'm--', 'g-.', 'r:'), nrows=1, ncols=1,

figsize=(3.5, 2.5)):

# 增量地绘制多条线

if legend is None:

legend = []

d2l.use_svg_display()

self.fig, self.axes = d2l.plt.subplots(nrows, ncols, figsize=figsize)

if nrows * ncols == 1:

self.axes = [self.axes, ]

# 使⽤lambda函数捕获参数

self.config_axes = lambda: d2l.set_axes(

self.axes[0], xlabel, ylabel, xlim, ylim, xscale, yscale, legend)

self.X, self.Y, self.fmts = None, None, fmts

def add(self, x, y):

# 向图表中添加多个数据点

if not hasattr(y, "__len__"):

y = [y]

n = len(y)

if not hasattr(x, "__len__"):

x = [x] * n

if not self.X:

self.X = [[] for _ in range(n)]

if not self.Y:

self.Y = [[] for _ in range(n)]

for i, (a, b) in enumerate(zip(x, y)):

if a is not None and b is not None:

self.X[i].append(a)

self.Y[i].append(b)

self.axes[0].cla()

for x, y, fmt in zip(self.X, self.Y, self.fmts):

self.axes[0].plot(x, y, fmt)

self.config_axes()

plt.draw()

plt.pause(0.001)

display.display(self.fig)

display.clear_output(wait=True)

d2l.plt.show()

#训练函数

def train_ch3(net, train_iter, test_iter, loss, num_epochs, updater): #@save

"""训练模型(定义⻅第3章)"""

animator = Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0.3, 0.9],

legend=['train loss', 'train acc', 'test acc'])

for epoch in range(num_epochs):

train_metrics = train_epoch_ch3(net, train_iter, loss, updater)

test_acc = evaluate_accuracy(net, test_iter)

animator.add(epoch + 1, train_metrics + (test_acc,))

train_loss, train_acc = train_metrics

assert train_loss < 0.5, train_loss

assert train_acc <= 1 and train_acc > 0.7, train_acc

assert test_acc <= 1 and test_acc > 0.7, test_acc

lr = 0.1#学习率

#更新梯度

def updater(batch_size):

return d2l.sgd([W, b], lr, batch_size)

num_epochs = 10

train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, updater)

简洁版:

import matplotlib.pyplot as plt

import torch

from torch import nn

from d2l import torch as d2l

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

# PyTorch不会隐式地调整输⼊的形状。因此,

# 我们在线性层前定义了展平层(flatten),来调整⽹络输⼊的形状

net = nn.Sequential(nn.Flatten(), nn.Linear(784, 10))

#用于搭建神经网络的模块被按照被传入构造器的顺序添加到nn.Sequential()容器中

#Flatten展平成2d(一行)

#初始化参数

def init_weights(m):

if type(m) == nn.Linear:

nn.init.normal_(m.weight, std=0.01)

net.apply(init_weights)

#交叉熵损失函数

loss = nn.CrossEntropyLoss(reduction='none')

#SGD优化

trainer = torch.optim.SGD(net.parameters(), lr=0.1)

#训练

num_epochs = 10

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

d2l.plt.show()

Fashion-MNIST图像分类

import matplotlib

import matplotlib.pyplot as plt

import torch

import torchvision

from torch.utils import data

from torchvision import transforms

from d2l import torch as d2l #d2l的torch包

trans = transforms.ToTensor() #将储存图片数据的变换成浮点数格式

#下载数据集

mnist_train = torchvision.datasets.FashionMNIST(

root="../data",train=True, transform=trans, download=True)

mnist_test = torchvision.datasets.FashionMNIST(

root="../data", train=False, transform=trans, download=True)

#第一类第一张图片:mnist_train[0][0].shape

#返回所有类别标签

def get_fashion_mnist_labels(labels): #@save

"""返回Fashion-MNIST数据集的⽂本标签"""

text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',

'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']

return [text_labels[int(i)] for i in labels]

#可视化样本(图片信息、几行图片、一行几列图片)

def show_images(imgs, num_rows, num_cols, titles=None, scale=1.5): #@save

"""绘制图像列表"""

figsize = (num_cols * scale, num_rows * scale)

_, axes = d2l.plt.subplots(num_rows, num_cols, figsize=figsize)

axes = axes.flatten()

for i, (ax, img) in enumerate(zip(axes, imgs)):

if torch.is_tensor(img):

# 图⽚张量

ax.imshow(img.numpy())

else:

# PIL图⽚

ax.imshow(img)

ax.axes.get_xaxis().set_visible(False)

ax.axes.get_yaxis().set_visible(False)

if titles:

ax.set_title(titles[i])

return axes

#样本图片及其标签样式

#取出一个批量(18张)图片

X, y = next(iter(data.DataLoader(mnist_train, batch_size=18)))

#显示图片

show_images(X.reshape(18, 28, 28), 2, 9, titles=get_fashion_mnist_labels(y));

#plt.show()

#小批量读取数据

batch_size = 256

# 选择4个进程来读取数据(可以根据电脑情况自定)

def get_dataloader_workers(): #@save

"""使⽤4个进程来读取数据"""

return 4

train_iter = data.DataLoader(mnist_train, batch_size, shuffle=True, #shuffle(是否要打乱顺序)

num_workers=get_dataloader_workers()) #需要的进程数

def load_data_fashion_mnist(batch_size, resize=None): #@save

"""下载Fashion-MNIST数据集,然后将其加载到内存中"""

trans = [transforms.ToTensor()]

if resize:

trans.insert(0, transforms.Resize(resize))

trans = transforms.Compose(trans)

mnist_train = torchvision.datasets.FashionMNIST(

root="../data", train=True, transform=trans, download=True)

mnist_test = torchvision.datasets.FashionMNIST(

root="../data", train=False, transform=trans, download=True)

return (data.DataLoader(mnist_train, batch_size, shuffle=True,

num_workers=get_dataloader_workers()),

data.DataLoader(mnist_test, batch_size, shuffle=False,

num_workers=get_dataloader_workers()))多层感知机

完整版

import torch

from torch import nn

from d2l import torch as d2l

import matplotlib

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

#初始化定义

num_inputs, num_outputs, num_hiddens = 784, 10, 256 #输入图片信息28*28,输出10类,隐藏层单层大小256(自定)

W1 = nn.Parameter(torch.randn( #行位输入层大小,列为隐藏层大小

num_inputs, num_hiddens, requires_grad=True) * 0.01)

b1 = nn.Parameter(torch.zeros(num_hiddens, requires_grad=True)) #大小=隐藏层大小

W2 = nn.Parameter(torch.randn( #行位隐藏层大小,列为输出层大小

num_hiddens, num_outputs, requires_grad=True) * 0.01)

b2 = nn.Parameter(torch.zeros(num_outputs, requires_grad=True))#大小=输出层大小

#参数模型

params = [W1, b1, W2, b2]

#ReLU激活函数=max(0,x)

def relu(X):

a = torch.zeros_like(X)

return torch.max(X, a)

#实现模型(单层隐藏层分类)

def net(X):

X = X.reshape((-1, num_inputs)) #图像转换成一行长度为num的向量

H = relu(X @ W1 + b1) # 这⾥“@”代表矩阵乘法

return (H @ W2 + b2) #输出层

#损失函数

loss = nn.CrossEntropyLoss(reduction='none')

num_epochs, lr = 10, 0.1 #迭代次数、学习率

updater = torch.optim.SGD(params, lr=lr) #小批量梯度下降(SGD)更新模型参数

#用net训练训练集,并用测试集计算损失loss

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, updater)

#预测test集

d2l.predict_ch3(net, test_iter)

d2l.plt.show()简洁版

import torch

from torch import nn

from d2l import torch as d2l

#要添加两个全连接层

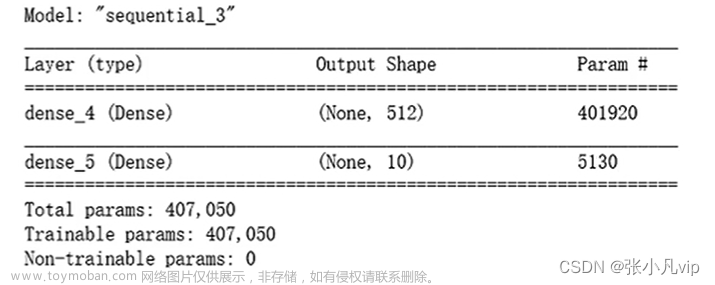

net = nn.Sequential(nn.Flatten(), #源数据展平

nn.Linear(784, 256), #展平后大小784,隐藏层大小256

nn.ReLU(), #使用ReLU激活函数

nn.Linear(256, 10)) #输出层大小10

def init_weights(m):

if type(m) == nn.Linear:

nn.init.normal_(m.weight, std=0.01)

net.apply(init_weights)

#训练

batch_size, lr, num_epochs = 256, 0.1, 10

loss = nn.CrossEntropyLoss(reduction='none')

trainer = torch.optim.SGD(net.parameters(), lr=lr)

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

d2l.plt.show()

到了这里,关于深度学习入门实战1——基础实战的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!